自从defcon出过新版musl libc的pwn之后,感觉之后可能会有一波musl lib pwn,果然强网杯的时候就看到了。可以预见,之后可能就会有musl libc master pwn,这两天趁着空闲的时候大概看了一下musl libc的源码,简单的做了一下笔记:

!

Malloc

用户传入size之后,如果大于MMAP_THRESHOLD (#define MMAP_THRESHOLD 131052),会直接走mmap,这一部分暂时先略过。

size处理

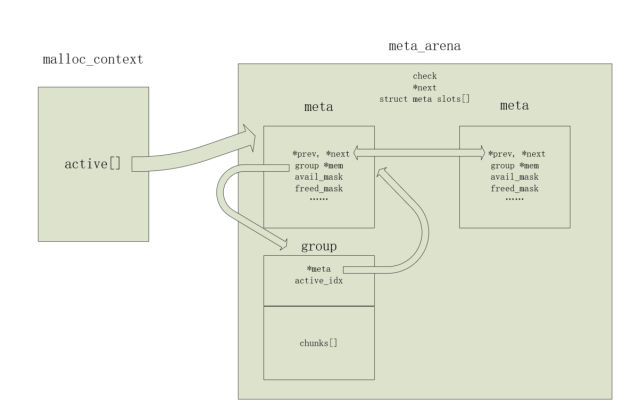

和ptmalloc的main_arena一样,musl也有一个管理堆的结构,称为malloc_context:

struct malloc_context {

uint64_t secret;

#ifndef PAGESIZE

size_t pagesize;

#endif

int init_done;

unsigned mmap_counter;

struct meta *free_meta_head;

struct meta *avail_meta;

size_t avail_meta_count, avail_meta_area_count, meta_alloc_shift;

struct meta_area *meta_area_head, *meta_area_tail;

unsigned char *avail_meta_areas;

struct meta *active[48];

size_t usage_by_class[48];

uint8_t unmap_seq[32], bounces[32];

uint8_t seq;

uintptr_t brk;

};

其中struct meta *active[48]和bins类似,musl把chunk大小分为48类,用size_to_class进行计算:

const uint16_t size_classes[] = {

1, 2, 3, 4, 5, 6, 7, 8,

9, 10, 12, 15,

18, 20, 25, 31,

36, 42, 50, 63,

72, 84, 102, 127,

146, 170, 204, 255,

292, 340, 409, 511,

584, 682, 818, 1023,

1169, 1364, 1637, 2047,

2340, 2730, 3276, 4095,

4680, 5460, 6552, 8191,

};

#define IB 4

static inline int a_ctz_32(uint32_t x)

{

#ifdef a_clz_32

return 31-a_clz_32(x&-x);

#else

static const char debruijn32[32] = {

0, 1, 23, 2, 29, 24, 19, 3, 30, 27, 25, 11, 20, 8, 4, 13,

31, 22, 28, 18, 26, 10, 7, 12, 21, 17, 9, 6, 16, 5, 15, 14

};

return debruijn32[(x&-x)*0x076be629 >> 27];

#endif

}

static inline int a_clz_32(uint32_t x)

{

x >>= 1;

x |= x >> 1;

x |= x >> 2;

x |= x >> 4;

x |= x >> 8;

x |= x >> 16;

x++;

return 31-a_ctz_32(x);

}

static inline int size_to_class(size_t n)

{

n = (n+IB-1)>>4;

if (n<10) return n;

n++;

int i = (28-a_clz_32(n))*4 + 8;

if (n>size_classes[i+1]) i+=2;

if (n>size_classes[i]) i++;

return i;

}

这代码看上去挺复杂的,但是简单的跑一下就能得到一张对应表:

0x0 ~ 0xc ->0

0xd ~ 0x1c ->1

0x1d ~ 0x2c ->2

0x2d ~ 0x3c ->3

0x3d ~ 0x4c ->4

0x4d ~ 0x5c ->5

0x5d ~ 0x6c ->6

0x6d ~ 0x7c ->7

0x7d ~ 0x8c ->8

0x8d ~ 0x9c ->9

0x9d ~ 0xbc ->10

0xbd ~ 0xec ->11

0xed ~ 0x11c ->12

0x11d ~ 0x13c ->13

0x13d ~ 0x18c ->14

0x18d ~ 0x1ec ->15

0x1ed ~ 0x23c ->16

0x23d ~ 0x29c ->17

0x29d ~ 0x31c ->18

0x31d ~ 0x3ec ->19

0x3ed ~ 0x47c ->20

0x47d ~ 0x53c ->21

0x53d ~ 0x65c ->22

0x65d ~ 0x7ec ->23

0x7ed ~ 0x91c ->24

0x91d ~ 0xa9c ->25

0xa9d ~ 0xcbc ->26

0xcbd ~ 0xfec ->27

0xfed ~ 0x123c ->28

0x123d ~ 0x153c ->29

0x153d ~ 0x198c ->30

0x198d ~ 0x1fec ->31

0x1fed ~ 0x247c ->32

0x247d ~ 0x2a9c ->33

0x2a9d ~ 0x331c ->34

0x331d ~ 0x3fec ->35

0x3fed ~ 0x490c ->36

0x490d ~ 0x553c ->37

0x553d ~ 0x664c ->38

0x664d ~ 0x7fec ->39

0x7fed ~ 0x923c ->40

0x923d ~ 0xaa9c ->41

0xaa9d ~ 0xccbc ->42

0xccbd ~ 0xffec ->43

0xffed ~ 0x1247c ->44

0x1247d ~ 0x1553c ->45

0x1553d ~ 0x1997c ->46

而在找到用户传入的size对应的active之后,会从对应的索引中取出meta结构:

struct meta {

struct meta *prev, *next; // meta是一个双向链表

struct group *mem;

volatile int avail_mask, freed_mask;

uintptr_t last_idx:5;

uintptr_t freeable:1;

uintptr_t sizeclass:6;

uintptr_t maplen:8*sizeof(uintptr_t)-12;

};

当然,如果还没有申请过对应大小的meta时,取出来的meta会是0。在meta为0时,会尝试以一种比较粗略的方式进行索引(这段逻辑有点迷,感觉是找一个附近的meta进行后续分配):

// use coarse size classes initially when there are not yet

// any groups of desired size. this allows counts of 2 or 3

// to be allocated at first rather than having to start with

// 7 or 5, the min counts for even size classes.

//如果取出来meta的是空的,且索引在[4,32)之间,且不为6,且为偶数索引,

if (!g && sc>=4 && sc<32 && sc!=6 && !(sc&1) && !ctx.usage_by_class[sc]) {

size_t usage = ctx.usage_by_class[sc|1];

// if a new group may be allocated, count it toward

// usage in deciding if we can use coarse class.

if (!ctx.active[sc|1] || (!ctx.active[sc|1]->avail_mask

&& !ctx.active[sc|1]->freed_mask))

usage += 3;

if (usage <= 12)

sc |= 1;

g = ctx.active[sc];

}

查找内存

在找到meta之后,会在meta的可用内存中进行查找:

for (;;) {

mask = g ? g->avail_mask : 0;

// 这个运算挺巧妙的,可以获取最低一位为1的值,比如3的二进制是011,最低位是1,4的二进制是100,最低一位就是4,简而言之就是获取了首个能用的内存对应的索引

first = mask&-mask;

if (!first) break;

//下面这个就是把这个内存取出来,只不过分为有锁的和无锁的

if (RDLOCK_IS_EXCLUSIVE || !MT)

g->avail_mask = mask-first;

else if (a_cas(&g->avail_mask, mask, mask-first)!=mask)

continue;

//测了一下这个函数好像是log[2,first],相当于把first转换成正常一点的索引

idx = a_ctz_32(first);

goto success;

}

当然,也存在找不到可用的内存的情况,这时候会进入alloc_slot函数中的try_avail继续查找:

static int alloc_slot(int sc, size_t req)

{

uint32_t first = try_avail(&ctx.active[sc]);

if (first) return a_ctz_32(first);

struct meta *g = alloc_group(sc, req);

if (!g) return -1;

g->avail_mask--;

queue(&ctx.active[sc], g);

return 0;

}

在try_avail中,会确认当前的avail_mask为0,假如当前的meta也没有free的chunk(freed_mask为0),那么就会从meta的链表中取出来,这个取出来的操作就是最传统的unsafe unlink,这里脱链可能是为了把这种没有空闲内存的meta拿走,减少搜索的时间。

uint32_t mask = m->avail_mask;

if (!mask) {

if (!m) return 0;

if (!m->freed_mask) {

dequeue(pm, m);

m = *pm;

// 脱链之后pm已经指向next了

if (!m) return 0;

} else {

//但是为啥这里要往后搜索一个。。。。

m = m->next;

*pm = m;

}

mask = m->freed_mask;

// skip fully-free group unless it's the only one

// or it's a permanently non-freeable group

//前面这个条件确实没看懂,看注释可能是跳过完全被free的组?尽量去选择没有被完全利用的组吗

//可能是完全被free的组应该还给操作系统了吧

if (mask == (2u<<m->last_idx)-1 && m->freeable) {

m = m->next;

*pm = m;

mask = m->freed_mask;

}

之后会进行一个尝试激活meta中的内存的操作:

// activate more slots in a not-fully-active group

// if needed, but only as a last resort. prefer using

// any other group with free slots. this avoids

// touching & dirtying as-yet-unused pages.

// ((2u<<m->mem->active_idx)-1))相当于建立了一个mask

// 比如idx是3,那建立出来的就是0b1111

if (!(mask & ((2u<<m->mem->active_idx)-1))) {

if (m->next != m) {

// 不是很懂这里怎么还要取一个next

m = m->next;

*pm = m;

} else {

// 这里应该是一个拓展内存的操作?

int cnt = m->mem->active_idx + 2;

int size = size_classes[m->sizeclass]*UNIT;

int span = UNIT + size*cnt;

// activate up to next 4k boundary

while ((span^(span+size-1)) < 4096) {

cnt++;

span += size;

}

if (cnt > m->last_idx+1)

cnt = m->last_idx+1;

m->mem->active_idx = cnt-1;

}

mask = activate_group(m);

assert(mask);

decay_bounces(m->sizeclass);

static inline uint32_t activate_group(struct meta *m)

{

assert(!m->avail_mask);

uint32_t mask, act = (2u<<m->mem->active_idx)-1;

do mask = m->freed_mask;

while (a_cas(&m->freed_mask, mask, mask&~act)!=mask);

// 扩充avail_mask?

return m->avail_mask = mask & act;

}

最后会返回获取到的free_mask或者avail中的第一个chunk:

first = mask&-mask;

m->avail_mask = mask-first;

申请内存

如果现有meta中找不到合适的chunk,那么就会进入alloc_group函数中进行申请,其中会调用alloc_meta来申请和进行meta的初始化:

//struct meta *alloc_meta(void) :

struct meta *m;

unsigned char *p;

//初始化ctx,就是获取一下secret

if (!ctx.init_done) {

#ifndef PAGESIZE

ctx.pagesize = get_page_size();

#endif

ctx.secret = get_random_secret();

ctx.init_done = 1;

}

size_t pagesize = PGSZ;

if (pagesize < 4096) pagesize = 4096;

//尝试取一个空闲的meta出来

if ((m = dequeue_head(&ctx.free_meta_head))) return m;

而如果此时avail_meta_count为0,就会开始新申请一片meta:(不为0的话直接就取avail_meta一个返回)

if (!ctx.avail_meta_count) {

int need_unprotect = 1;

// 这里可能是为了取brk的地址?

if (!ctx.avail_meta_area_count && ctx.brk!=-1) {

uintptr_t new = ctx.brk + pagesize;

int need_guard = 0;

// 获取一片内存

if (!ctx.brk) {

need_guard = 1;

ctx.brk = brk(0);

// some ancient kernels returned _ebss

// instead of next page as initial brk.

ctx.brk += -ctx.brk & (pagesize-1);

new = ctx.brk + 2*pagesize;

}

if (brk(new) != new) {

ctx.brk = -1;

} else {

//使用mmap申请一片内存

if (need_guard) mmap((void *)ctx.brk, pagesize,

PROT_NONE, MAP_ANON|MAP_PRIVATE|MAP_FIXED, -1, 0);

ctx.brk = new;

ctx.avail_meta_areas = (void *)(new - pagesize);

ctx.avail_meta_area_count = pagesize>>12;

need_unprotect = 0;

}

}

//不取brk的地址

if (!ctx.avail_meta_area_count) {

size_t n = 2UL << ctx.meta_alloc_shift;

p = mmap(0, n*pagesize, PROT_NONE,

MAP_PRIVATE|MAP_ANON, -1, 0);

if (p==MAP_FAILED) return 0;

ctx.avail_meta_areas = p + pagesize;

ctx.avail_meta_area_count = (n-1)*(pagesize>>12);

ctx.meta_alloc_shift++;

}

p = ctx.avail_meta_areas;

if ((uintptr_t)p & (pagesize-1)) need_unprotect = 0;

if (need_unprotect)

if (mprotect(p, pagesize, PROT_READ|PROT_WRITE)

&& errno != ENOSYS)

return 0;

// 更新ctx中的地址信息

ctx.avail_meta_area_count--;

ctx.avail_meta_areas = p + 4096;

if (ctx.meta_area_tail) {

ctx.meta_area_tail->next = (void *)p;

} else {

ctx.meta_area_head = (void *)p;

}

ctx.meta_area_tail = (void *)p;

//设置meta中的check

ctx.meta_area_tail->check = ctx.secret;

ctx.avail_meta_count = ctx.meta_area_tail->nslots

= (4096-sizeof(struct meta_area))/sizeof *m;

ctx.avail_meta = ctx.meta_area_tail->slots;

}

在完成申请之后,会做一些size的调整?

struct meta *m = alloc_meta();

if (!m) return 0;

size_t usage = ctx.usage_by_class[sc];

size_t pagesize = PGSZ;

int active_idx;

if (sc < 9) {

while (i<2 && 4*small_cnt_tab[sc][i] > usage)

i++;

cnt = small_cnt_tab[sc][i];

} else {

// lookup max number of slots fitting in power-of-two size

// from a table, along with number of factors of two we

// can divide out without a remainder or reaching 1.

cnt = med_cnt_tab[sc&3];

// reduce cnt to avoid excessive eagar allocation.

while (!(cnt&1) && 4*cnt > usage)

cnt >>= 1;

// data structures don't support groups whose slot offsets

// in units don't fit in 16 bits.

while (size*cnt >= 65536*UNIT)

cnt >>= 1;

}

// If we selected a count of 1 above but it's not sufficient to use

// mmap, increase to 2. Then it might be; if not it will nest.

if (cnt==1 && size*cnt+UNIT <= pagesize/2) cnt = 2;

如果size不够就会重新分配,之后再进行一些成员的初始化:

if (size*cnt+UNIT > pagesize/2) {

// check/update bounce counter to start/increase retention

// of freed maps, and inhibit use of low-count, odd-size

// small mappings and single-slot groups if activated.

int nosmall = is_bouncing(sc);

account_bounce(sc);

step_seq();

// since the following count reduction opportunities have

// an absolute memory usage cost, don't overdo them. count

// coarse usage as part of usage.

if (!(sc&1) && sc<32) usage += ctx.usage_by_class[sc+1];

// try to drop to a lower count if the one found above

// increases usage by more than 25%. these reduced counts

// roughly fill an integral number of pages, just not a

// power of two, limiting amount of unusable space.

if (4*cnt > usage && !nosmall) {

if (0);

else if ((sc&3)==1 && size*cnt>8*pagesize) cnt = 2;

else if ((sc&3)==2 && size*cnt>4*pagesize) cnt = 3;

else if ((sc&3)==0 && size*cnt>8*pagesize) cnt = 3;

else if ((sc&3)==0 && size*cnt>2*pagesize) cnt = 5;

}

size_t needed = size*cnt + UNIT;

needed += -needed & (pagesize-1);

// produce an individually-mmapped allocation if usage is low,

// bounce counter hasn't triggered, and either it saves memory

// or it avoids eagar slot allocation without wasting too much.

if (!nosmall && cnt<=7) {

req += IB + UNIT;

req += -req & (pagesize-1);

if (req<size+UNIT || (req>=4*pagesize && 2*cnt>usage)) {

cnt = 1;

needed = req;

}

}

p = mmap(0, needed, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANON, -1, 0);

if (p==MAP_FAILED) {

free_meta(m);

return 0;

}

m->maplen = needed>>12;

ctx.mmap_counter++;

active_idx = (4096-UNIT)/size-1;

if (active_idx > cnt-1) active_idx = cnt-1;

if (active_idx < 0) active_idx = 0;

} else {

int j = size_to_class(UNIT+cnt*size-IB);

int idx = alloc_slot(j, UNIT+cnt*size-IB);

if (idx < 0) {

free_meta(m);

return 0;

}

struct meta *g = ctx.active[j];

p = enframe(g, idx, UNIT*size_classes[j]-IB, ctx.mmap_counter);

m->maplen = 0;

p[-3] = (p[-3]&31) | (6<<5);

for (int i=0; i<=cnt; i++)

p[UNIT+i*size-4] = 0;

active_idx = cnt-1;

}

ctx.usage_by_class[sc] += cnt;

m->avail_mask = (2u<<active_idx)-1;

m->freed_mask = (2u<<(cnt-1))-1 - m->avail_mask;

m->mem = (void *)p;

m->mem->meta = m;

m->mem->active_idx = active_idx;

m->last_idx = cnt-1;

m->freeable = 1;

m->sizeclass = sc;

return m;

申请成功之后,就会直接把这个meta的第一个chunk对应的index取出来:

g->avail_mask--;

queue(&ctx.active[sc], g);

返回用户使用的内存

经过前面的操作,我们也只是拿到了meta和对应的idx,最后还要通过enframe函数返回一个给用户使用的区块:

static inline size_t get_stride(const struct meta *g)

{

if (!g->last_idx && g->maplen) {

return g->maplen*4096UL - UNIT;

} else {

return UNIT*size_classes[g->sizeclass];

}

}

static inline void set_size(unsigned char *p, unsigned char *end, size_t n)

{

int reserved = end-p-n;

if (reserved) end[-reserved] = 0;

if (reserved >= 5) {

*(uint32_t *)(end-4) = reserved;

end[-5] = 0;

reserved = 5;

}

p[-3] = (p[-3]&31) + (reserved<<5);

// 低7位是idx, 高5位是reserved

}

static inline void *enframe(struct meta *g, int idx, size_t n, int ctr)

{

size_t stride = get_stride(g); // 获取一个内存区域的大小

size_t slack = (stride-IB-n)/UNIT;

unsigned char *p = g->mem->storage + stride*idx; // 计算对应idx的内存区域

// IB 是4 ,用作chunk的标识

unsigned char *end = p+stride-IB;

// cycle offset within slot to increase interval to address

// reuse, facilitate trapping double-free.

int off = (p[-3] ? *(uint16_t *)(p-2) + 1 : ctr) & 255;

assert(!p[-4]);

if (off > slack) {

size_t m = slack;

m |= m>>1; m |= m>>2; m |= m>>4;

off &= m;

if (off > slack) off -= slack+1;

assert(off <= slack);

}

if (off) {

// store offset in unused header at offset zero

// if enframing at non-zero offset.

*(uint16_t *)(p-2) = off;

p[-3] = 7<<5;

p += UNIT*off;

// for nonzero offset there is no permanent check

// byte, so make one.

p[-4] = 0;

}

*(uint16_t *)(p-2) = (size_t)(p-g->mem->storage)/UNIT; // 相对于缓冲区基地址的偏移?

// 当前chunk对应的索引

p[-3] = idx;

set_size(p, end, n);

return p;

}

这个地方非常迷的就是他直接拿负数做索引还不给任何注释,只能大概归纳一下chunk的这几位的结构大概是:

struck chunk{

uint8 zero;

uint8 idx; // 低7位是idx, 高5位是reserved

uint16 offset;

char[] usermem; // <--用户拿到的内存

}

free

有malloc当然也有free:P

指针转换

这一步主要是根据用户传进来的指针获取对应的meta:

static inline int get_slot_index(const unsigned char *p)

{

return p[-3] & 31;

}

static inline struct meta *get_meta(const unsigned char *p)

{

// 检查地址对齐

assert(!((uintptr_t)p & 15));

// 获取偏移

int offset = *(const uint16_t *)(p - 2);

// 获取chunk的idx

int index = get_slot_index(p);

//p[-4]不为0就从*(uint32_t *)(p - 8)获取偏移

if (p[-4]) {

assert(!offset);

offset = *(uint32_t *)(p - 8);

assert(offset > 0xffff);

}

//通过offset获取基地址

/*struct meta_area {

uint64_t check;

struct meta_area *next;

int nslots;

struct meta slots[];

};

这个时候的group结构是

struct group {

struct meta *meta;

unsigned char active_idx:5;

char pad[UNIT - sizeof(struct meta *) - 1];

unsigned char storage[];

};

*/

const struct group *base = (const void *)(p - UNIT*offset - UNIT);

const struct meta *meta = base->meta;

// 校验group的地址

assert(meta->mem == base);

// index小于等于last index

assert(index <= meta->last_idx);

// 当前chunk不是avail的状态

assert(!(meta->avail_mask & (1u<<index)));

// 当前chunk不是free的状态

assert(!(meta->freed_mask & (1u<<index)));

const struct meta_area *area = (void *)((uintptr_t)meta & -4096);

// 检查arena

assert(area->check == ctx.secret);

// 检查size

if (meta->sizeclass < 48) {

assert(offset >= size_classes[meta->sizeclass]*index);

assert(offset < size_classes[meta->sizeclass]*(index+1));

} else {

assert(meta->sizeclass == 63);

}

if (meta->maplen) {

assert(offset <= meta->maplen*4096UL/UNIT - 1);

}

return (struct meta *)meta;

}

释放内存

在完成一系列check之后就要对chunk进行free了:

struct meta *g = get_meta(p);

int idx = get_slot_index(p);

size_t stride = get_stride(g);

//这里还是获取这个chunk的起始地址和末尾

unsigned char *start = g->mem->storage + stride*idx;

unsigned char *end = start + stride - IB;

get_nominal_size(p, end);// 根据reserved来算真实大小

uint32_t self = 1u<<idx, all = (2u<<g->last_idx)-1;

((unsigned char *)p)[-3] = 255;

// invalidate offset to group header, and cycle offset of

// used region within slot if current offset is zero.

*(uint16_t *)((char *)p-2) = 0;

// release any whole pages contained in the slot to be freed

// unless it's a single-slot group that will be unmapped.

if (((uintptr_t)(start-1) ^ (uintptr_t)end) >= 2*PGSZ && g->last_idx) {

unsigned char *base = start + (-(uintptr_t)start & (PGSZ-1));

size_t len = (end-base) & -PGSZ;

//这个系统调用知识盲区了,似乎就是释放这片区域的

if (len) madvise(base, len, MADV_FREE);

}

// atomic free without locking if this is neither first or last slot

//这里应该是把freed_mask对应的位 置位

// 但是这里并不会马上把freed转换为avail

for (;;) {

uint32_t freed = g->freed_maskd;

uint32_t avail = g->avail_mask;

uint32_t mask = freed | avail;

assert(!(mask&self));

if (!freed || mask+self==all) break;

if (!MT)

g->freed_mask = freed+self;

else if (a_cas(&g->freed_mask, freed, freed+self)!=freed)

continue;

return;

}

最后会调用nontrivial_free:

static struct mapinfo nontrivial_free(struct meta *g, int i)

{

uint32_t self = 1u<<i;

int sc = g->sizeclass;

uint32_t mask = g->freed_mask | g->avail_mask;

//这个意思应该是所有的内存都是free的状态了?

if (mask+self == (2u<<g->last_idx)-1 && okay_to_free(g)) {

// any multi-slot group is necessarily on an active list

// here, but single-slot groups might or might not be.

if (g->next) {

assert(sc < 48);

int activate_new = (ctx.active[sc]==g);

dequeue(&ctx.active[sc], g);

if (activate_new && ctx.active[sc])

activate_group(ctx.active[sc]);

}

return free_group(g);

} else if (!mask) {

//如果mask是0那就给他重新插入到active的序列中

assert(sc < 48);

// might still be active if there were no allocations

// after last available slot was taken.

if (ctx.active[sc] != g) {

queue(&ctx.active[sc], g);

}

}

// 带锁的一个or操作?

a_or(&g->freed_mask, self);

return (struct mapinfo){ 0 };

}

总结

整体看下来感觉最核心的点可能就是free的时候那一堆check了,如果能够伪造meta那应该会构造很多奇奇怪怪的效果,比如进入nontrivial_free进行一个unsafe unlink?不过这次分析感觉也比较粗略,大概画了几个图:

对于整体的数据结构大概是这个样子的:

malloc和free的流程图感觉有点麻烦,等之后有空的时候再整理一下吧 orz