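This article examines the challenges boards face in responding to artificial intelligence (AI) risk. Discussions have traditionally centered on technical detail rather than business outcomes, which makes it hard for directors to grasp the potential impact. Compliance frameworks provide guidance but do not capture the full extent of real-world risk. By translating AI incidents into business impact categories such as financial, operational, and compliance, and by using quantification tools (such as Kovrr's module), boards can assess risk more clearly and make strategic decisions.

2025-12-16 15:13:26 Author: securityboulevard.com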

TL;DR

- Boards struggle to engage with AI risk when discussions center on technical mechanics rather than the business outcomes that determine performance and long-term strategy.

- AI introduces exposure across financial, operational and regulatory areas, requiring boards to interpret it using the same structures applied to other enterprise risks.

- Compliance frameworks outline minimum expectations, but they do not show where real vulnerabilities develop or how quickly exposure grows as AI adoption accelerates.

- Translating AI incidents into business impact categories gives directors a practical way to evaluate consequences and prioritize focus areas.

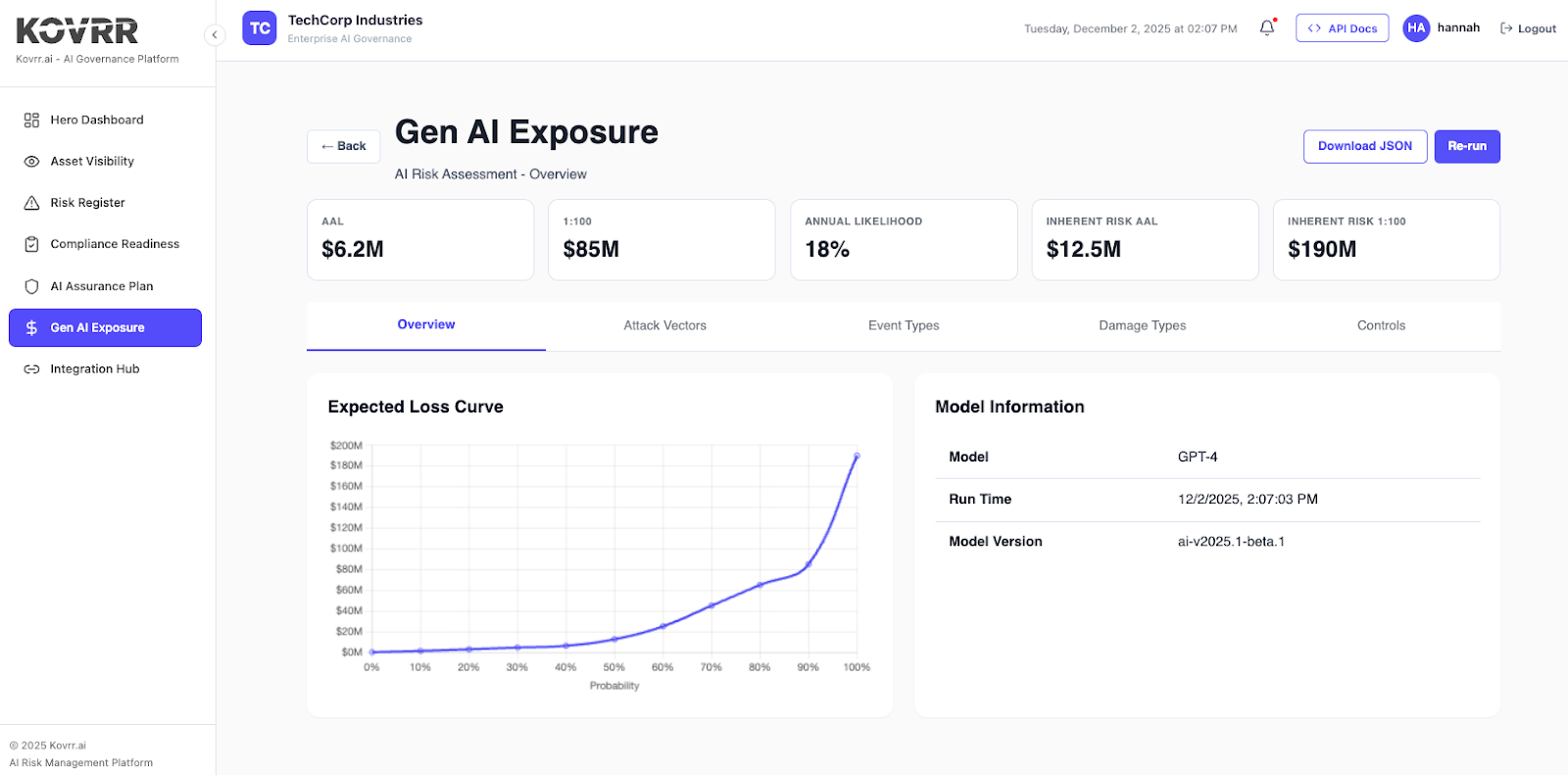

- AI Risk Quantification modules, like the one from Kovrr, bring measurable insight into how scenarios could affect operations or loss patterns, helping boards evaluate thresholds, investments, and overall governance maturity.

- Security and risk managers (SRMs) who frame AI risks in outcome-focused terms create the conditions for stronger oversight, aligning leadership around the safeguards needed for scalable AI adoption.

Upgrading the Language Used to Discuss AI Exposure

AI is altering business operations and workflows at a pace that few leaders have experienced before. GenAI deployments are rising across every department, expanding their influence and maximizing business productivity and efficiency. However, the moment the conversation shifts from AI’s advantages to its inherent risk, the dynamic changes. Enthusiasm in the boardroom becomes hesitation, and the dialogue moves away from strategy optimization and slips into technical territory, making it challenging for key executives to participate in the necessary way.

Most board members can comprehend that AI will drive growth. What they fail to grasp concretely is how the technology introduces a massive amount of exposure. This predicament is typically a result of how information is presented. Security and risk managers (SRMs) often describe AI incidents in the vocabulary of adversarial inputs, model drift, and architecture choices, which matter deeply but rarely answer the questions that directors tackle during their meetings.

High-level stakeholders, in reality, are concerned with issues such as revenue protection, operational continuity, and competitive differentiation, creating a gap that requires more than translating acronyms. It demands a shift in how AI risk is communicated. Terminology needs to be anchored to the decisions boards are responsible for making if governance is to become strategic rather than procedural. Only then are GRC leaders and SRMs going to be able to ensure AI governance receives the attention needed to build resilience.

Why AI Risk Feels Different

Traditional discussions about technology risk revolve around the triad of confidentiality, integrity, and availability. Boards know these categories well, and over the past few decades, they have learned that cybersecurity failures directly affect the business along these lines. GenAI challenges this familiar structure because its risks are not confined to any one of these three domains. AI implications spill across ethical, reputational, operational, and compliance terrain in ways that defy the old boundaries.

For example, a biased model can damage customer trust, while a subtle change in decision quality can quietly but profoundly distort outcomes at scale. An unexpected failure can even compromise architectural safety. The result of AI implementation is thus a new risk landscape that feels both sprawling and unconventional. Boards encounter questions they have not been conditioned to assess, and they see harm that often does not resemble the incidents they know from cyber or operational risk.

Essentially, board members sense the unpredictability but lack the vocabulary to interpret or question it, which makes them hesitate in the discussions that matter. Although they see the stakes rising and regulations emerging, they become overwhelmed by the details and consequently relegate core business decisions to the technical team. The challenge for security leaders is to give these stakeholders a path forward, one that feels grounded and aligned with other risk domains already understood.

Turning Technical Signals Into Strategic Insight

When all is considered, boards do not need a technical explanation regarding AI exposure. What they need is context that helps them understand what a risk means for their business, and this transformation begins when SRMs start focusing on outcomes rather than internal mechanisms. While technical descriptions speak in terms of how a model can be manipulated, board discussions should speak in terms of the consequences of said manipulation.

Technical detail likewise emphasizes the action the adversary takes, whereas strategic framing emphasizes the implications those actions have for revenue, operations, and compliance. The distinction between the two shapes everything that follows. When security leaders speak in technical language, directors need to mentally translate each point through several layers before reaching the part that affects them.

When the conversation begins with the business consequence, though, the relevance is immediate. The most effective approach involves replacing those mechanics that mean so much to the internal teams with the strategic information boards need to operate. These details open a path for meaningful conversations that encourage directors to think through the implications and make more informed decisions. Instead of overwhelming them with details, GRC leaders can help board members make the right calls.

Reframing AI Incidents as Business Questions

Every potential weakness stemming from GenAI and other AI systems can be expressed through a statement that the board is already equipped to weigh in on. Examples include:

- This is what it would mean for our competitive position if an attacker were to influence the integrity of an AI system embedded in our core service.

- These internal processes would slow or stop if a model-based decision pipeline suddenly began producing inconsistent outcomes.

- This is how we would justify our practices to regulators or customers in the event that an AI-powered product exposed personal data during inference.

- The enterprise’s financial exposure compounds over time if we rely on an AI system that evolves faster than our ability to monitor it.

Each of these framings points directly to revenue, operations, compliance, or trust without requiring an explanation of attack vectors, techniques, or model design, ensuring directors readily understand what’s at stake.

The Compliance Comfort Illusion

Unfortunately, many leaders will instinctively anchor their initial understanding of AI oversight in compliance. Standards such as the NIST AI RMF and ISO 42001 give them structure, and AI regulations like the EU AI Act offer clear boundaries. While these instruments undoubtedly provide the necessary guidance, they do not capture the full scope of real-world risk. They do not tell board members where the exposure lies. Instead, they define obligations and potential actions. They establish minimums, as opposed to the thresholds needed to avoid insolvency.

Compliance is also the point where boards misinterpret AI readiness. They assume that demonstrating alignment with a standard or regulation ensures security, when, in reality, AI misuse can easily bypass safeguards that comply with every documented requirement. It’s critical, therefore, that boards understand this distinction and recognize that these requirements mark only the starting point, while resilience ultimately demands a broader view. Once this idea is established, the board begins to see AI risk as an ongoing, iterative discipline.

Why Compliance Doesn’t Equal Resilience

A model may pass every documented audit and still make decisions that introduce bias. Similarly, a team may follow every mandated control and still end up deploying an AI product that creates reputational fallout within hours. It’s imperative that boards see that compliance can only provide them with an assurance that a process exists, not assurance that the organization can withstand an AI-driven event. This distinction is crucial when boards decide how to allocate resources and how to assess exposure.

Moreover, when they understand the limits of compliance, they become more willing to support investments that strengthen technical detection and monitoring. One way to make this understanding stick is to use comparative framing, such as explaining that a safeguard may meet a regulatory requirement yet still not protect the enterprise against drift. Statements like this respect the board’s intelligence while also revealing the underlying risk created when compliance and risk management are conflated.

Building a Shared Language

AI governance and risk become easier for directors to engage with when they are discussed in a commonly understood set of terms. Establishing a shared language ensures that conversations don’t drift onto parallel tracks of mechanisms and consequences. More importantly, it helps directors interpret what they’re hearing and embed it into their strategies. This vernacular alignment creates a baseline that the entire organization can use to evaluate AI decisions and understand exposure.

Translating AI Risk Into Business Impact Categories

Board members already rely on risk categories such as financial exposure and operational disruption to guide their discussions. Therefore, mapping AI risks into these same domains gives these stakeholders a familiar structure for strategic planning and, simultaneously, prevents the conversations from drifting into abstraction. When AI exposure is expressed through modeled financial ranges and operational loss, directors gain a clearer view of where the organization is most vulnerable.

Moreover, they can begin tying oversight to outcomes rather than abstruse metrics, strengthening both executive-level accountability and focus. Even those high-level stakeholders who feel distant from technical models can comprehend and internalize exposure trends when SRMs translate these KPIs into the categories used in every other boardroom discussion. The key is to frame AI risk as part of the enterprise risk ecosystem rather than a standalone technical problem.
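As a rough illustration of the mapping described above, the sketch below groups technical AI risk signals under the business impact categories a board already uses. The signal names, the category labels, and the `IMPACT_MAP` table are all hypothetical and chosen only for the example; a real mapping would come from the organization's own risk taxonomy.

```python
# Hypothetical mapping from technical AI risk signals to board-level
# impact categories. Names and assignments are illustrative assumptions.
IMPACT_MAP = {
    "model_drift": ["operational", "financial"],
    "adversarial_input": ["operational", "reputational"],
    "data_exposure_at_inference": ["regulatory", "reputational"],
    "biased_output": ["regulatory", "reputational"],
}


def summarize_by_category(signals):
    """Group technical signals under the business categories they affect.

    Signals missing from IMPACT_MAP are reported under "unclassified"
    so that nothing silently disappears from the board summary.
    """
    summary = {}
    for signal in signals:
        for category in IMPACT_MAP.get(signal, ["unclassified"]):
            summary.setdefault(category, []).append(signal)
    return summary


if __name__ == "__main__":
    report = summarize_by_category(["model_drift", "data_exposure_at_inference"])
    for category, drivers in sorted(report.items()):
        print(f"{category}: {', '.join(drivers)}")
```

The point of the structure is that the board-facing view is keyed by consequence (operational, financial, regulatory, reputational), while the technical signals appear only as supporting detail.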

Introducing AI Risk Appetite

Boards routinely debate the enterprise’s risk appetite across credit, liquidity, market volatility, and other domains. Now, however, the exposure that AI usage generates demands an equivalent position in these discussions. Once AI risk has been translated into these more common terms and made measurable alongside other risk management categories, directors can more easily define loss thresholds that signal acceptable, elevated, or unacceptable risk levels.

This is why quantification becomes essential, since subjective scoring cannot support appetite-setting with the same level of confidence or precision. When leaders see modeled annual exposure next to desired thresholds, decisions around investment and oversight become more straightforward. Additionally, leadership can determine whether safeguards and investments match the pace of AI adoption. Ultimately, factoring in quantified AI considerations when establishing AI risk appetite helps to ensure resilience.
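To make the idea of appetite thresholds concrete, here is a minimal sketch that compares a modeled annual exposure figure against two board-defined limits and returns one of the three levels mentioned above. The function name, parameter names, and dollar figures are assumptions made for illustration, not values from any specific tool.

```python
def classify_exposure(modeled_annual_loss, acceptable_max, elevated_max):
    """Classify modeled annual loss against board-defined appetite thresholds.

    acceptable_max: upper bound of the "acceptable" band (hypothetical input).
    elevated_max:   upper bound of the "elevated" band; anything above it
                    is treated as "unacceptable".
    """
    if modeled_annual_loss < 0:
        raise ValueError("modeled_annual_loss must be non-negative")
    if acceptable_max > elevated_max:
        raise ValueError("acceptable_max must not exceed elevated_max")

    if modeled_annual_loss <= acceptable_max:
        return "acceptable"
    if modeled_annual_loss <= elevated_max:
        return "elevated"
    return "unacceptable"


if __name__ == "__main__":
    # Illustrative figures only: thresholds of $1M and $3M.
    for loss in (400_000, 1_500_000, 5_000_000):
        level = classify_exposure(loss, acceptable_max=1_000_000, elevated_max=3_000_000)
        print(f"${loss:,}: {level}")
```

Placing the modeled figure and the thresholds side by side in this way is what lets the appetite discussion rest on numbers rather than on subjective scores.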

The Leadership Shift

Strong AI governance depends as much on mindset as it does on process. Boards pay close attention to leaders who can explain complex issues through a business lens while still preserving the nuance that technical accuracy demands. SRMs and GRC executives who approach AI risk as a strategic discipline rather than a narrow technical field help directors participate more effectively in oversight. This shift invites the board into the discussion instead of asking them to interpret unfamiliar metrics.

Interpreter, Not Technician

Boards do not expect SRMs to explain the technicalities behind adversarial prompts or data drift, nor do they want that. What they need is an interpretation of the business consequences connected to these events. The role of AI governance leaders thus evolves from technical translator to strategic interpreter, someone who can articulate what exposure means for business performance. This shift elevates the conversation and showcases security leadership as a partner in the enterprise’s long-term planning.

Enabling Innovation Safely

Board members want AI discussions that balance ambition with responsibility, and positioning governance as an enabler of sustainable innovation helps directors see the value of structured oversight. When visibility and exposure modeling are presented as tools that accelerate safe adoption, the board becomes more confident in approving AI initiatives and investments. Strong governance is not a constraint on processes. On the contrary, it is a necessary condition that allows innovation to scale without introducing uncontrolled risk.

Putting AI Governance Into Practice

Bringing AI governance into operational reality requires evidence that oversight can be executed in a structured and repeatable way. That involves connecting risk signals to measurable outcomes and demonstrating how governance activities improve resilience over time. When directors can see how exposure is quantified and where safeguards influence loss patterns, AI risk becomes a strategic discipline they can confidently steer.

How to Turn AI Risk Into Measurable, Actionable Insight

Confidence is bolstered in boardrooms when AI risk is expressed in structured, concrete insights rather than broader warnings, which is why measurable, objective evaluation is indispensable. Visibility into where AI assets exist, how safeguards perform, and where exposure concentrates helps these directors see the landscape clearly. Quantifying the financial and operational impact of potential scenarios adds the layer of evidence that turns theoretical conversations into strategic decisions.
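One common way to quantify the financial impact of a scenario is a simple Monte Carlo simulation. The sketch below is a generic illustration and not a description of how any particular vendor's module works: it assumes a single hypothetical AI incident scenario with an estimated annual probability and a low / most likely / high loss estimate, and it draws severity from a triangular distribution. All parameter values are invented for the example.

```python
import random


def simulate_annual_losses(annual_probability, low, mode, high,
                           trials=10_000, seed=42):
    """Simulate one year of loss per trial for a single scenario.

    Each trial: the incident occurs with `annual_probability`; if it does,
    the loss is drawn from a triangular distribution over [low, high]
    peaking at `mode`. Otherwise the loss for that year is 0.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    losses = []
    for _ in range(trials):
        if rng.random() < annual_probability:
            losses.append(rng.triangular(low, high, mode))
        else:
            losses.append(0.0)
    return losses


def summarize(losses):
    """Return the mean, an approximate 95th percentile, and the maximum."""
    ordered = sorted(losses)
    p95_index = int(0.95 * (len(ordered) - 1))
    return {
        "mean": sum(ordered) / len(ordered),
        "p95": ordered[p95_index],
        "max": ordered[-1],
    }


if __name__ == "__main__":
    # Hypothetical scenario: 20% annual likelihood, $100k to $2M loss range.
    losses = simulate_annual_losses(0.2, 100_000, 500_000, 2_000_000)
    for name, value in summarize(losses).items():
        print(f"{name}: ${value:,.0f}")
```

Reporting a range (mean alongside a high percentile) rather than a single score gives directors a sense of both the expected and the severe-but-plausible outcome.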

When exposure ranges and modeled losses highlight the value of specific AI governance controls, the board is equipped to prioritize investments with greater assurance. They can distinguish between the issues that require immediate funding and those that sit within acceptable thresholds or defined risk appetite levels. Metrics tied to maturity progress and exposure reduction give directors a grounded way to evaluate whether governance is advancing at the pace necessary to maintain a competitive edge.
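The prioritization described here can be sketched as a ranking of candidate controls by how much modeled loss each one removes per unit of cost. This is a deliberately simplified illustration: the control names, costs, and loss-reduction figures are hypothetical, and a real assessment would also weigh factors such as implementation time and regulatory deadlines.

```python
def rank_controls(controls):
    """Sort controls by modeled annual loss reduction per unit of annual cost.

    Each control is a dict with hypothetical keys:
      "name", "annual_cost", "modeled_loss_reduction".
    The highest reduction-to-cost ratio comes first.
    """
    for control in controls:
        if control["annual_cost"] <= 0:
            raise ValueError(f"annual_cost must be positive: {control['name']}")
    return sorted(
        controls,
        key=lambda c: c["modeled_loss_reduction"] / c["annual_cost"],
        reverse=True,
    )


if __name__ == "__main__":
    # Illustrative figures only.
    candidates = [
        {"name": "output monitoring", "annual_cost": 200_000, "modeled_loss_reduction": 900_000},
        {"name": "AI asset inventory", "annual_cost": 50_000, "modeled_loss_reduction": 400_000},
        {"name": "red-team exercises", "annual_cost": 300_000, "modeled_loss_reduction": 600_000},
    ]
    for control in rank_controls(candidates):
        ratio = control["modeled_loss_reduction"] / control["annual_cost"]
        print(f"{control['name']}: {ratio:.1f}x reduction per dollar")
```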

The Path Forward for Board-Level AI Governance

AI is advancing quickly, and with that acceleration comes a growing responsibility for directors to understand how these systems shape performance, trust, and long-term strategy. Boards need visibility into where AI is embedded and what kinds of outcomes could materialize if safeguards fall behind. When SRMs express these issues through business impact and modeled exposure, directors gain a foundation that supports informed oversight rather than reactive decision-making.

The next stage of board-level governance depends on transforming AI from a technical topic into a strategic discipline supported by measurable insight. Directors who can interpret exposure ranges, assess maturity progress, and engage confidently with scenario outcomes will be better positioned to guide investment and maintain accountability across the enterprise. By framing AI risk in practical terms and anchoring decisions in evidence, SRMs enable the board to move from hesitation to true leadership.

Kovrr built its AI Governance Suite to give leaders the insight they need to bring AI risk into the boardroom with confidence. If you want to see how measurable visibility and quantification can strengthen oversight, schedule a demo today.
