Building FuzzForge: Why We're Rethinking Security Automation

TL;DR: Security tools exist but nobody orchestrates them together. FuzzForge chains heterogeneous tools (SAST, fuzzing, dynamic analysis) into intelligent, auditable workflows powered by specialized AI agents.

Security testing is hard. Scaling it is harder. Making it accessible without dumbing it down? That’s the challenge we set out to solve.

After two years building FuzzForge, iterating on feedback from security teams and solving real-world challenges, we’ve learned one thing: the future of security automation isn’t about building the perfect SAST engine or the most autonomous AI pentester. It’s about orchestrating heterogeneous security tools into intelligent, auditable workflows that learn and adapt.

We’re not the 20th vendor claiming autonomous hacking. We’re building transparent, deterministic, composable security automation.

In this series of articles, we’ll walk you through our engineering journey, from problem to solution. This first article explains why we’re building FuzzForge and what our approach is. Follow-up articles will dive into the how: workflow orchestration with Temporal, sandboxing untrusted code, integrating AI without black boxes, and scaling infrastructure.

One clarification upfront: “FuzzForge” suggests fuzzing-only, but the ‘Forge’ is key. We orchestrate security workflows across SAST, fuzzing, dynamic analysis, and custom modules. Complete security pipelines, not just fuzzers.

Why: The Security Accessibility Problem

Vulnerability research takes years to master. It combines deep software internals knowledge, attack pattern expertise, and the creativity to chain isolated issues into exploitable vulnerabilities. Development teams need security validation, but it’s not their core skill. Security experts don’t scale. They’re expensive, scarce, and time-constrained.

The industry faces a fundamental problem: modern teams deploy multiple times per day, yet traditional pentesting happens quarterly due to cost and availability. This leaves applications running untested in production, with attackers often discovering vulnerabilities first.

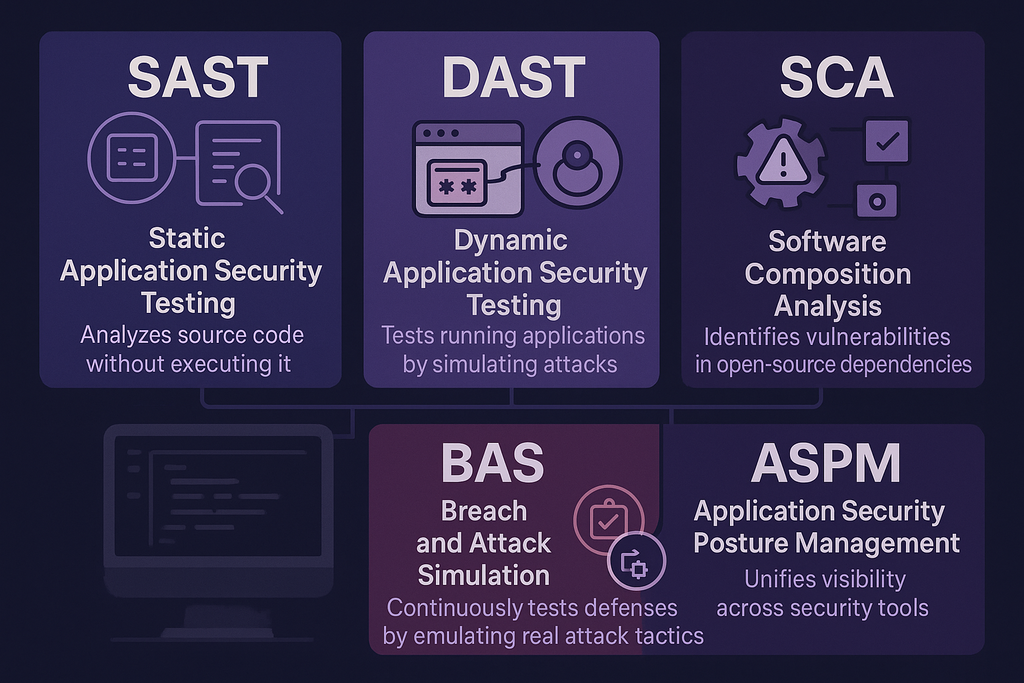

The market has fractured into specialized approaches, each optimizing for different tradeoffs. Before diving in, some key acronyms:

- SAST (Static Application Security Testing): Analyzes source code without executing it

- DAST (Dynamic Application Security Testing): Tests running applications by simulating attacks

- SCA (Software Composition Analysis): Identifies vulnerabilities in open-source dependencies

- BAS (Breach and Attack Simulation): Continuously tests defenses by emulating real attack tactics

- ASPM (Application Security Posture Management): Unifies visibility across security tools

Four philosophical approaches have emerged:

1. Specialized Tools: Fast & Focused

SAST Tools (Semgrep, CodeQL, SonarQube) prioritize speed and developer experience. Semgrep raised $100M in 2025, validating the “fast feedback, fewer false positives” approach. CodeQL goes deeper with semantic analysis but requires specialized expertise. Fuzzing Platforms like OSS-Fuzz have discovered 13,000+ vulnerabilities since 2016 with zero false positives: crashes are proof. These tools excel at what they do but miss cross-technique workflows and struggle with contextual prioritization. Setup complexity and long campaign times limit adoption.

2. Integrated Platforms: Convenience & Vendor Lock-in

DevSecOps platforms (Checkmarx, Veracode, Snyk) consolidate multiple techniques into unified dashboards. Snyk pioneered “developer-first security,” raising ~$1.3B and reaching $8.5B peak valuation through aggressive acquisitions (DeepCode, Fugue, Enso, Helios, Probely, Invariant Labs). These platforms offer one-stop shopping but create vendor lock-in. Gartner notes they’re “jack-of-all-trades” where individual tools may not be best-of-breed. ASPM solutions aggregate findings into prioritized views but don’t orchestrate workflows.

3. AI-First Solutions: Autonomous Promise, Black-Box Reality

AI-Powered Pentesting has exploded in 2024-2025:

- Open-source frameworks like CAI (ranked #1 in Hack The Box CTF, 3600× faster than humans) and PentestGPT emphasize transparency and human-in-the-loop workflows

- XBOW ($95M from Sequoia, founded by Semmle/GitHub Copilot creators) achieved 85% on novel security challenges in 28 minutes (matching a 20-year veteran who took 40 hours) and became the first AI to reach #1 on HackerOne’s global leaderboard

- Horizon3.ai ($178M raised, $750M+ valuation) pioneered continuous autonomous pentesting with NodeZero—150,000+ autonomous pentests across 3,000+ organizations

- Tenzai (record $75M seed) promises fully autonomous “AI hackers” targeting the $8B pentesting market

The appeal is clear: AI doesn’t sleep, scales infinitely, and learns every protocol. The concern is equally clear: black-box AI decisions, explainability gaps, and “AI slop” polluting bug bounty programs. Every major vendor positions AI as “Copilot, not Autopilot.” Augmentative rather than fully autonomous.

BAS Platforms (market projected $2.4B by 2029) continuously test defenses by emulating MITRE ATT&CK tactics. Key players: Pentera ($250M raised, $1B unicorn), Picus Security ($80M, 4,000+ threats library), Cymulate ($141M), and AttackIQ ($79M, MITRE’s founding partner). BAS validates whether controls detect threats but runs scripted attacks rather than discovering new vulnerabilities.

PTaaS (Penetration Testing as a Service, market projected $301M by 2029) combines automated scanning with human ethical hackers. Unlike traditional pentesting (3-4 week setup, annual cadence, PDF reports), PTaaS launches in 24-72 hours with real-time dashboards. Key players: Cobalt, Synack (FedRAMP authorized), HackerOne (2M+ ethical hackers), and NetSPI.

4. The Gold Standard: Depth Over Scale

Manual Consultants remain the gold standard for deep assessments but don’t scale. Big Four firms dominate enterprise security audits at $2,000-4,000/man-day, delivering quality but not frequency.

Cyber Reasoning Systems (CRS) represent the holy grail: fully autonomous systems that find vulnerabilities, generate exploits, and patch binaries without human intervention. The concept emerged from DARPA’s Cyber Grand Challenge (2016). ForAllSecure’s Mayhem won, later securing a $45M DoD contract before Bugcrowd acquired it in 2025. The successor AIxCC (2025) showed progress: 86% of synthetic vulnerabilities identified, 18 real zero-days discovered. But here’s the reality: CRS remains largely research and POC, not productized. No commercial product delivers true end-to-end autonomous vulnerability research at scale. This is exactly the space FuzzForge aims to bridge, not by promising magic, but by coordinating proven techniques into practical workflows.

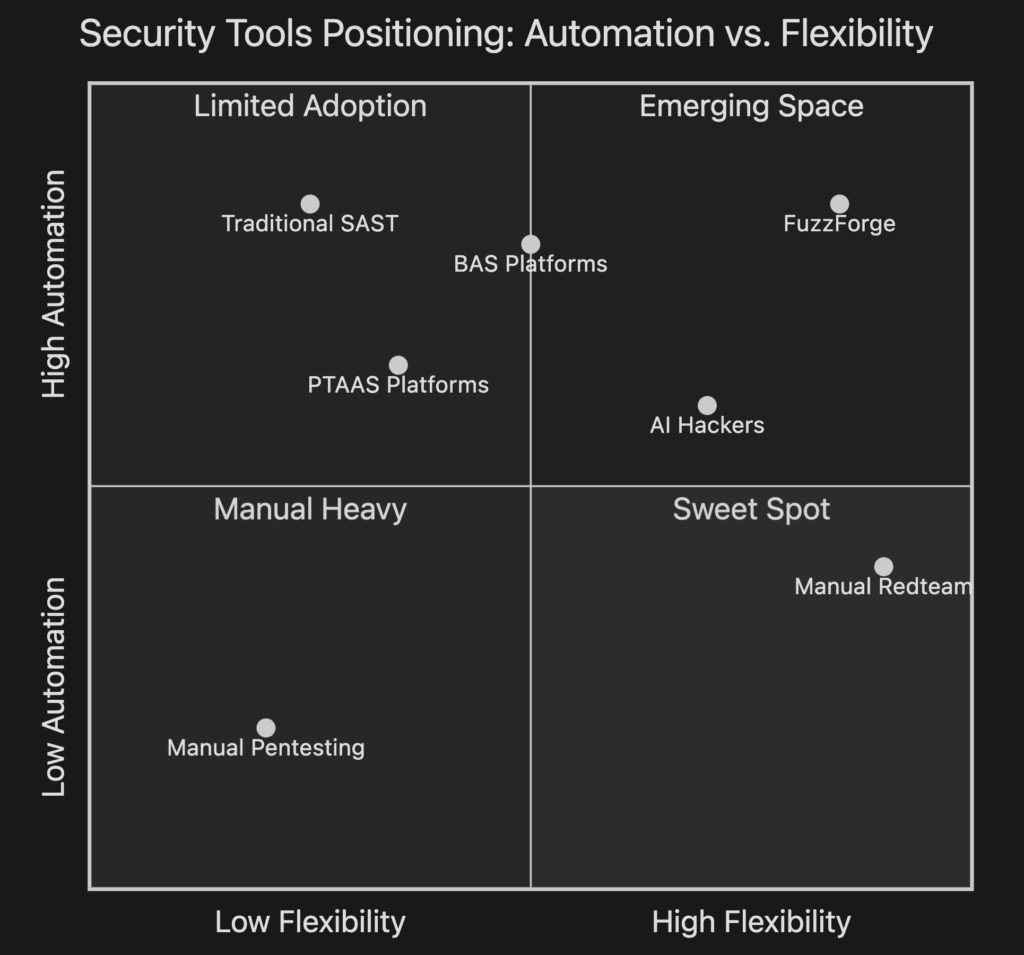

Every approach makes tradeoffs:

- Automation vs. Flexibility: Fast, consistent scanning vs. adapting to unique environments

- Scale vs. Quality: Testing 1,000 apps quickly vs. deep analysis of critical systems

- Speed vs. Accuracy: Instant feedback with noise vs. slow, precise results

- Transparency vs. Magic: Auditable results vs. black-box AI that “just works”

Nobody has solved this. Neither have we. But we’ve chosen different tradeoffs based on what security teams actually need.

So how do we address these tensions? The core problem remains: tools exist, orchestration doesn’t. That’s what FuzzForge addresses.

What: The FuzzForge Approach

The future of security automation isn’t building the best SAST engine or most autonomous AI pentester. It’s coordinating heterogeneous tools into intelligent, auditable workflows that adapt to each organization’s needs.

We don’t believe in magic. We believe in composable, auditable, scalable workflows.

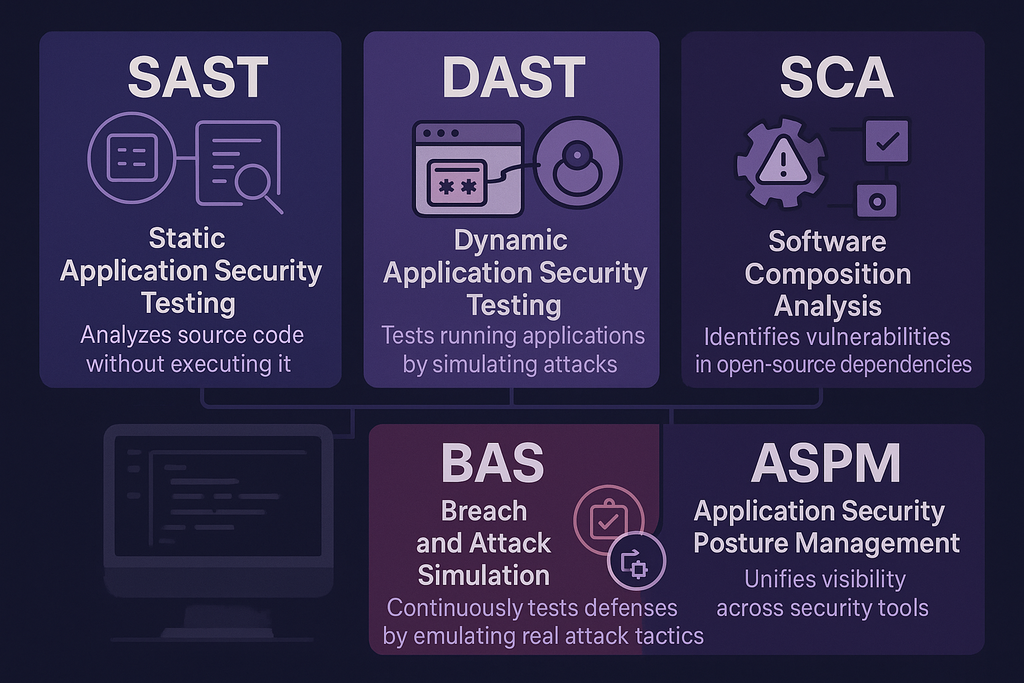

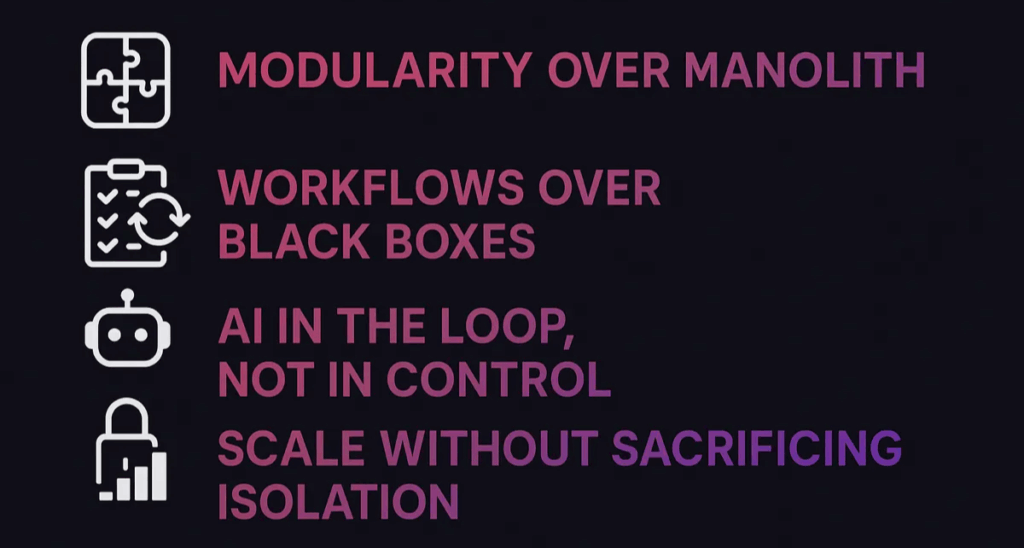

1. Modularity Over Monolith

We don’t reinvent Semgrep or AFL. We chain them together.

Each module is an isolated container with standardized inputs/outputs. Users integrate their preferred SAST scanners (Semgrep, CodeQL), fuzzers (AFL, libFuzzer), dynamic analyzers, and custom tools. The intelligence is in the orchestration: “If Semgrep finds a buffer overflow in user_input.c, launch a 48-hour AFL campaign on that code path.”
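
To make the orchestration rule above concrete, here is a minimal Python sketch of a finding-triggered follow-up. It is an illustration under stated assumptions, not FuzzForge’s actual API: the `Finding` and `Action` types, their field names, and the `fuzz_on_buffer_overflow` rule are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Finding:
    """Normalized output of one module (field names are hypothetical)."""
    tool: str
    rule: str
    file: str


@dataclass(frozen=True)
class Action:
    """A follow-up step the orchestrator should schedule (hypothetical)."""
    module: str
    target: str
    duration_hours: int


# A rule inspects one finding and optionally returns a follow-up action.
Rule = Callable[[Finding], Optional[Action]]


def fuzz_on_buffer_overflow(finding: Finding) -> Optional[Action]:
    """If Semgrep reports a buffer overflow, fuzz that file for 48 hours."""
    if finding.tool == "semgrep" and finding.rule == "buffer-overflow":
        return Action(module="afl", target=finding.file, duration_hours=48)
    return None


def plan_follow_ups(findings: list[Finding], rules: list[Rule]) -> list[Action]:
    """Apply every rule to every finding and collect the resulting actions."""
    actions = []
    for finding in findings:
        for rule in rules:
            action = rule(finding)
            if action is not None:
                actions.append(action)
    return actions


findings = [
    Finding(tool="semgrep", rule="buffer-overflow", file="user_input.c"),
    Finding(tool="semgrep", rule="hardcoded-secret", file="config.c"),
]
planned = plan_follow_ups(findings, [fuzz_on_buffer_overflow])
print(planned)
```

Keeping rules as plain functions over a normalized finding means a new tool only needs an adapter that emits `Finding` objects; the rules themselves stay tool-agnostic.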

Example workflow: Upload a firmware image → Binwalk extracts filesystem → Ghidra/angr decompiles binaries → Semgrep scans extracted code → AFL fuzzes network services for 72h → QEMU emulation validates crashes → unified vulnerability report with CVE mapping and PoCs. Same orchestration applies to APKs, iOS apps, source repos, or CI/CD pipelines.
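
The chain above can be sketched as a sequence of stages that all accept and return the same artifact type. This Python sketch simplifies the example into a strictly linear pipeline and uses placeholder stages; no real tool is invoked, and `Artifact`, `make_stage`, and `run_pipeline` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Artifact:
    """What flows between stages: a target path, its kind, and a history."""
    path: str
    kind: str
    history: list[str] = field(default_factory=list)


# Every stage has the same signature, which is what makes stages chainable.
Stage = Callable[[Artifact], Artifact]


def make_stage(name: str, out_kind: str) -> Stage:
    """Build a placeholder stage; a real one would run a tool in a container."""
    def stage(artifact: Artifact) -> Artifact:
        return Artifact(
            path=f"{artifact.path}.{name}",
            kind=out_kind,
            history=artifact.history + [f"{name}: {artifact.kind} -> {out_kind}"],
        )
    return stage


def run_pipeline(artifact: Artifact, stages: list[Stage]) -> Artifact:
    """Feed the output of each stage into the next one."""
    for stage in stages:
        artifact = stage(artifact)
    return artifact


firmware_workflow = [
    make_stage("extract", "filesystem"),
    make_stage("decompile", "pseudo-source"),
    make_stage("sast", "findings"),
    make_stage("fuzz", "crashes"),
    make_stage("emulate", "validated-crashes"),
    make_stage("report", "report"),
]

result = run_pipeline(Artifact(path="router.bin", kind="firmware"), firmware_workflow)
for line in result.history:
    print(line)
```

Swapping the first stage for an APK unpacker or a repository checkout would leave the rest of the chain untouched, which is the point of a shared input/output contract.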

FuzzForge supports three usage modes:

- CI/CD integration: Automatic analysis on every commit, PR checks, branch protection

- Target-based analysis: Upload specific artifacts (firmware images, APKs, IPAs, binaries, PCAP files) for deep security assessment. No source code or repo required

- Project-based analysis: Point FuzzForge at a source repository for comprehensive audit without CI/CD integration. Ideal for one-time assessments or external code reviews

Tradeoff: Orchestration complexity vs. flexibility. We avoid NIH syndrome and vendor lock-in but require more setup than single-tool solutions.

2. Workflows Over Black Boxes

Every finding includes full lineage: which tool, what configuration, which test case, when, with what evidence. Temporal-based orchestration ensures reproducibility. Same workflow + same code = identical results.
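
As a rough illustration of what such a lineage record could contain, the Python sketch below stores the five elements listed above and derives a fingerprint from them. The `Lineage` type, its field names, and the decision to exclude the timestamp from the fingerprint are assumptions made for this example, not a description of FuzzForge’s storage format.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Lineage:
    """Which tool, what configuration, which test case, when, what evidence."""
    tool: str
    tool_version: str
    config: dict
    test_case: str
    evidence_sha256: str
    found_at: str  # ISO 8601 timestamp


def fingerprint(lineage: Lineage) -> str:
    """Hash everything except the timestamp (an assumption of this sketch),
    so two runs with the same inputs can be compared for identical results."""
    record = asdict(lineage)
    record.pop("found_at")
    # Sorted keys and fixed separators give a canonical serialization.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


evidence = hashlib.sha256(b"crash input bytes").hexdigest()
run_a = Lineage("afl", "example-1.0", {"timeout_s": 60, "dict": "http"},
                "id:000042", evidence, "2025-01-01T00:00:00Z")
run_b = Lineage("afl", "example-1.0", {"dict": "http", "timeout_s": 60},
                "id:000042", evidence, "2025-06-01T12:00:00Z")

print(fingerprint(run_a) == fingerprint(run_b))
```

Two runs that differ only in when they happened, or in the key order of their configuration, produce the same fingerprint; any change to the tool, configuration, test case, or evidence produces a different one.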

Contrast this with black-box AI tools where “AI found 15 vulnerabilities” tells you nothing about confidence, false positive likelihood, or how to verify.

Tradeoff: Verbosity vs. transparency. Our logs are detailed, sometimes too detailed. In security, post-incident analysis requires complete information.

3. AI in the Loop, Not in Control (But Sometimes Fully Automated)

We use AI strategically, through specialized agents powered by RAG (Retrieval-Augmented Generation). Grounding decisions in domain-specific knowledge (CVEs, vulnerability patterns, codebase context) significantly reduces hallucinations, and we add further validation layers for critical security decisions. We’ll detail our agentic architecture in a future article.

Two execution modes depending on context and risk:

- Agent-augmented workflows: AI analyzes, triages, and suggests. Humans approve critical actions

- Fully automated pipelines: Agents run 24/7 within defined guardrails for routine security validation
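
One way to picture the two modes is as a gate that every agent-proposed action passes through. The Python sketch below is a hypothetical illustration: the `Mode`, `Decision`, and `ProposedAction` types and the `ALLOWED_UNATTENDED` allowlist are invented for this example, and the real guardrails may look quite different.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    AGENT_AUGMENTED = "agent-augmented"
    FULLY_AUTOMATED = "fully-automated"


class Decision(Enum):
    RUN = "run"
    NEEDS_APPROVAL = "needs-approval"
    REJECT = "reject"


@dataclass(frozen=True)
class ProposedAction:
    name: str
    critical: bool


# Hypothetical guardrail: the only actions an unattended pipeline may run.
ALLOWED_UNATTENDED = frozenset({"run_sast", "triage_crash", "start_fuzzing"})


def gate(action: ProposedAction, mode: Mode) -> Decision:
    """Decide whether a proposed action runs, waits for a human, or is refused."""
    if mode is Mode.FULLY_AUTOMATED:
        # No human is available, so anything outside the guardrails is refused.
        if action.name in ALLOWED_UNATTENDED and not action.critical:
            return Decision.RUN
        return Decision.REJECT
    # Agent-augmented: humans approve critical actions, the rest proceeds.
    return Decision.NEEDS_APPROVAL if action.critical else Decision.RUN


print(gate(ProposedAction("run_sast", critical=False), Mode.FULLY_AUTOMATED))
print(gate(ProposedAction("run_exploit", critical=True), Mode.AGENT_AUGMENTED))
```

The design choice in this sketch is that the unattended mode never queues work for approval: it either stays inside its allowlist or refuses, so a 24/7 pipeline cannot stall on a missing human.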

Deployment flexibility: Cloud APIs (OpenAI, Anthropic), self-hosted (Ollama, vLLM), or fully on-premise with fine-tuned SLMs or even TRMs for air-gapped environments.

The difference from black-box AI tools: full explainability. Every agent decision shows the model used, retrieved context, reasoning chain, and confidence scores. You can replay any workflow to audit exactly what happened.
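
A decision record of this kind could be as simple as one JSON line per agent decision. The Python sketch below assumes a hypothetical `AgentDecision` type carrying the four elements named above plus a verdict; the model name, context strings, and the 0.8 review threshold are placeholders, not real values.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AgentDecision:
    """One auditable agent decision (field names are hypothetical)."""
    model: str
    retrieved_context: list[str]
    reasoning: list[str]
    confidence: float
    verdict: str


def to_log_line(decision: AgentDecision) -> str:
    """Serialize a decision as one JSON line for an append-only audit log."""
    return json.dumps(asdict(decision), sort_keys=True)


def from_log_line(line: str) -> AgentDecision:
    """Rebuild a decision from the audit log, e.g. when replaying a workflow."""
    return AgentDecision(**json.loads(line))


def needs_review(decision: AgentDecision, threshold: float = 0.8) -> bool:
    """Flag low-confidence decisions for a human (threshold is an assumption)."""
    return decision.confidence < threshold


decision = AgentDecision(
    model="local-slm-placeholder",
    retrieved_context=["pattern: unchecked memcpy length", "file: user_input.c"],
    reasoning=["length comes from the network", "no bounds check before copy"],
    confidence=0.62,
    verdict="likely exploitable",
)
restored = from_log_line(to_log_line(decision))
print(restored == decision, needs_review(restored))
```

Because the record round-trips losslessly, an auditor reads exactly what the agent saw and concluded, rather than a summary produced after the fact.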

Tradeoff: Less “fully autonomous” than some promise, more autonomous than pure advisory tools. We’re in the middle: automated where safe, supervised where critical.

4. Scale Without Compromising Isolation

We run untrusted code: malicious samples, vulnerable apps, proof-of-concept exploits. Each runs in isolated containers. A crashing fuzzer doesn’t affect other workflows. Malware in dynamic analysis can’t escape to infrastructure.

From 1 scan to 1,000 parallel fuzzing campaigns. From quick SAST (minutes) to long fuzzing (weeks). Resource isolation prevents interference.
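
At the scheduling level, "a crashing fuzzer doesn’t affect other workflows" can be illustrated with a few lines of Python. This sketch only shows failure isolation and bounded parallelism between tasks; it does not model container sandboxing, and `run_campaign` is a placeholder that simulates a crash instead of launching anything.

```python
import asyncio


async def run_campaign(name: str, limit: asyncio.Semaphore) -> str:
    """Placeholder for launching one isolated campaign and awaiting its result."""
    async with limit:  # cap how many campaigns run at once
        await asyncio.sleep(0)  # stands in for the real container run
        if name == "fuzz-crashy":
            raise RuntimeError(f"{name}: fuzzer crashed")
        return f"{name}: completed"


async def run_all(names: list[str], max_parallel: int) -> dict[str, str]:
    """Run all campaigns; one failure must not cancel or fail the others."""
    limit = asyncio.Semaphore(max_parallel)
    results = await asyncio.gather(
        *(run_campaign(name, limit) for name in names),
        return_exceptions=True,  # collect failures instead of propagating them
    )
    return {
        name: f"failed ({result})" if isinstance(result, Exception) else result
        for name, result in zip(names, results)
    }


outcomes = asyncio.run(
    run_all(["sast-quick", "fuzz-crashy", "fuzz-long"], max_parallel=2)
)
for name, outcome in outcomes.items():
    print(name, "->", outcome)
```

The crashing campaign is reported as failed while the other two complete normally, and the semaphore keeps the number of concurrent campaigns within the configured limit.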

Tradeoff: Infrastructure overhead vs. security guarantees. Containers cost more than shared execution, but cross-contamination is unacceptable.

In short: FuzzForge orchestrates multi-technique security workflows with full transparency, modularity, and AI assistance.

FuzzForge is:

- A workflow orchestration platform for multi-technique security testing

- SAST + fuzzing + dynamic analysis + custom modules, intelligently chained

- Agentic AI: specialized agents powered by RAG, with both human-in-the-loop and fully automated modes

- API-first: full programmatic control for security workflows

- Multi-target: IoT firmware, Android APKs, iOS apps, Rust/Go binaries, web apps

- Deployment-flexible: cloud, self-hosted, or fully on-premise with local LLMs and SLMs

- Model-agnostic: OpenAI APIs, Anthropic, Ollama, vLLM, custom fine-tuned SLMs

FuzzForge is not:

- A full replacement for security experts (we augment and scale their work, not replace them)

- A magic black box (we show our work, every step)

- Cloud-only (we support air-gapped on-premise for compliance-sensitive orgs)

- Vendor lock-in (bring your own tools, bring your own AI models)

- Another AI hype product (we’re building practical, auditable automation)

Where We Fit: The Orchestration Gap

Specialization is advancing, but integration is lagging.

Organizations deploy SonarQube for code quality + Snyk for dependencies + Semgrep for SAST + Pentera for BAS + CAI for offensive testing. Yet they lack unified orchestration beyond ASPM dashboards that aggregate findings into a single view. ASPM solves “too many dashboards” but doesn’t orchestrate workflows.

What’s missing:

- Cross-tool workflows: “If SAST finds X, trigger fuzzing Y, validate with dynamic test Z”

- Intelligent prioritization based on target’s context: “Buffer overflow in network-facing parser → critical. Same in unused debug code → low”

- Context propagation: Semgrep findings inform fuzzing campaigns, crashes inform dynamic analysis

- Adaptive testing: Workflows that adjust based on results
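
The prioritization example in the list above can be written down as a small function. This is a deliberately naive Python sketch: the `Context` fields and the `medium` default are assumptions for illustration, and real prioritization would weigh far more signals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    """Facts about where a finding lives (field names are hypothetical)."""
    network_facing: bool
    reachable: bool  # False for dead or unused code such as debug helpers


def prioritize(vuln_class: str, ctx: Context) -> str:
    """Map the same vulnerability class to different severities by context."""
    if not ctx.reachable:
        return "low"
    if vuln_class == "buffer-overflow" and ctx.network_facing:
        return "critical"
    return "medium"  # default chosen for this sketch


parser = Context(network_facing=True, reachable=True)
debug_code = Context(network_facing=False, reachable=False)

print(prioritize("buffer-overflow", parser))
print(prioritize("buffer-overflow", debug_code))
```

The same buffer overflow is rated critical in the network-facing parser and low in the unused debug code, which is the kind of context a findings dashboard alone does not supply.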

The gap is clear: tools exist, orchestration doesn’t. FuzzForge fills that gap.

Workflow-first, not tool-first: Most platforms build around one technique (SAST, AI pentesting) then expand. We started with orchestration and integrate whatever makes sense. An IoT workflow might combine Binwalk (firmware extraction), Ghidra (decompilation), Semgrep (code analysis), AFL (fuzzing), Frida (runtime), and custom protocol analyzers. No single-tool platform covers this.

Bring-your-own-tools AND bring-your-own-AI: Want CodeQL instead of Semgrep? Both in different workflows? Custom SAST for proprietary languages? All supported. Same for AI: use OpenAI APIs, self-host Ollama, or run fine-tuned SLMs on-premise. Contrast with Checkmarx/Veracode (closed ecosystems), Tenzai (cloud-only, unclear integration), or Snyk (optimized for their stack).

On-premise & air-gapped deployment: FuzzForge runs entirely on-premise with support for both local LLMs (Ollama, vLLM, fine-tuned SLMs) and external APIs. For air-gapped environments, organizations can deploy with local LLMs only, ensuring no code leaves their infrastructure.

Auditable AI: When agents run autonomously, you see the full reasoning chain, not just “AI found vulnerabilities.” You can replay any workflow to debug or audit decisions.

Multi-technique integration: Security isn’t SAST or fuzzing. It’s SAST and fuzzing and dynamic analysis. We integrate all three. Example: SAST finds buffer overflow → dynamic confirms it’s reachable → fuzzing generates 10K payloads → runtime monitoring confirms execution. This mirrors how human researchers work.

Flexible execution modes: Quick SAST on commits (minutes), deep fuzzing campaigns (days/weeks), one-shot firmware analysis, or autonomous AI agents running 24/7. Upload a binary, point to a repo, or integrate into CI/CD. Same powerful workflows, different entry points.

We’re not claiming superiority everywhere. SAST tools have faster cold starts and simpler setup. DevSecOps platforms excel at compliance reporting. AI pentesting tools offer more autonomous creative exploitation. BAS platforms focus on control validation with executive-friendly reporting. Manual consultants bring business context and social engineering skills we can’t replicate.

We optimize for: reproducibility, auditability, flexibility, multi-technique integration, scale.

Who is FuzzForge for? Anyone who wants to find vulnerabilities efficiently:

- Security researchers: Upload a target, let FuzzForge handle the toolchain. Focus on finding bugs, not configuring tools

- Small teams: Punch above your weight with automated workflows that run overnight while you sleep

- Mid-to-large security teams: Orchestrate multiple tools, maintain audit trails, integrate custom modules, scale assessments

Not for: compliance-checkbox-only needs (use DevSecOps platforms) or teams expecting fully autonomous pentesting with zero configuration or oversight.

Security automation isn’t about finding the perfect tool. It’s about orchestrating the right tools for each situation.

This article covered why (the security accessibility problem) and what (our workflow orchestration approach). Next we’ll detail the how:

- Workflow Orchestration: Temporal for durable, observable security workflows

- Sandboxing & Isolation: Executing untrusted code safely at scale

- Agentic AI Architecture: Specialized agents with RAG-grounded reasoning

- Module Architecture: Standardizing security tool integration

- Infrastructure & Scale: Running 1,000 parallel fuzzing campaigns

We started development two years ago alongside the DARPA challenge (without being able to participate, since we’re French and not US-based). We are working toward six demos by February 2025 (IoT, Android, iOS, Rust/Go, open-source), building with a team of ten dedicated engineers. We’re not claiming victory. We’re sharing our journey, reasoning, and tradeoffs.

The security automation landscape is evolving rapidly. We believe there’s space for an approach that prioritizes transparency, flexibility, and orchestration over black-box magic.

Feedback welcome. Interested in collaboration, or contributing modules? Reach out.

Founded in 2021 and headquartered in Paris, FuzzingLabs is a cybersecurity startup specializing in vulnerability research, fuzzing, and blockchain security. We combine cutting-edge research with hands-on expertise to secure some of the most critical components in the blockchain ecosystem.

