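Summary: The author expresses his love for Claude Code and describes building an open-source project and his whole AI ecosystem around the platform. He notes that, twice, features he developed independently were adopted and refined by the Claude Code team shortly afterward, which makes him proud and excited. 2025-12-7 · Author: danielmiessler.com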

I'm not looking for a job on the Claude Code team, but if I were, this would be the blog post I'd use

December 7, 2025

I'm not the type who brags, but I have to brag about this.

I guess it's not really bragging. It's more like validation.

Anyway.

I'm basically in love with Claude Code for multiple reasons, and have been for months. You know that by now. I've built a whole open source project around it and have constructed my entire AI ecosystem on top of it.

And now this thing has happened twice:

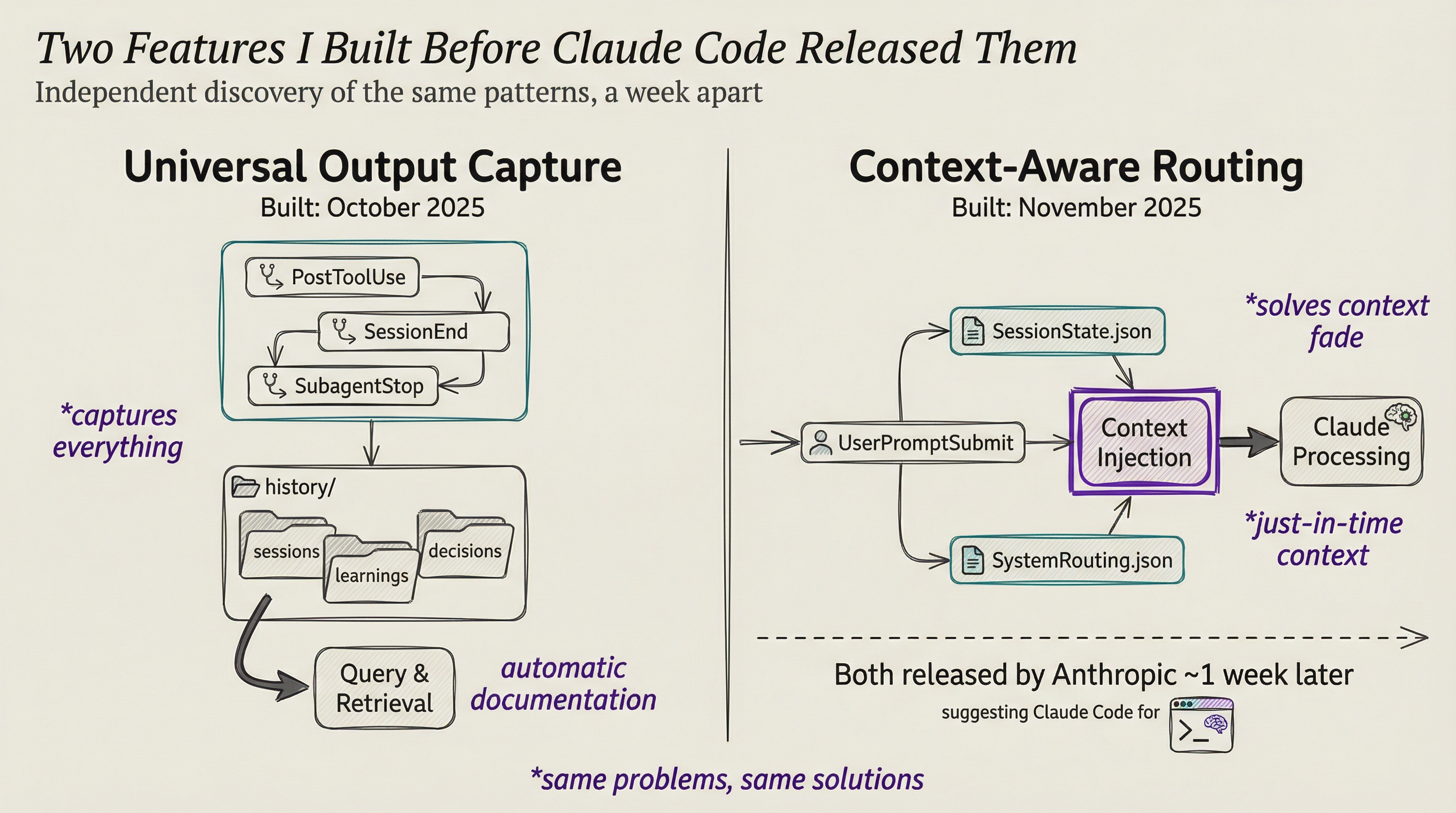

Twice now, I've built a complete, full-featured system into Kai (my personal AI infrastructure), and then, less than a week later, Anthropic released the same functionality in Claude Code itself.

The Two Features

Feature 1: Universal File-based Context (UFC)

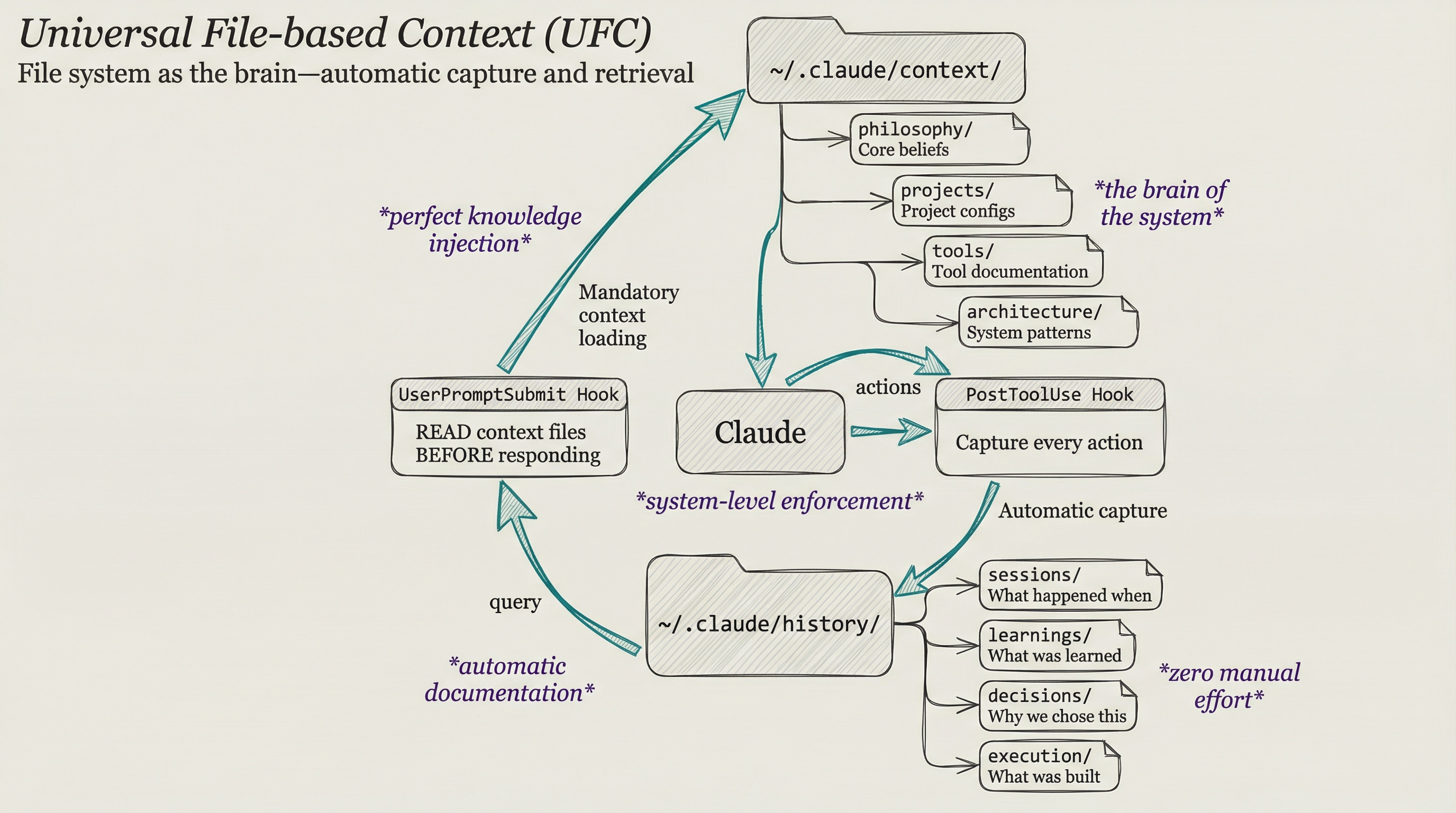

Before Claude Code existed, I was already building what I called the Universal File-based Context system. It was basically just a way of using the file system to manage AI context and history.

The Problem

Every AI conversation was ephemeral. Context disappeared between sessions. I'd have the same conversations over and over because the AI had no memory of what we'd built together.

What I Built

Universal file system-based context. It had three parts:

- ~/.claude/context/ - The brain: system prompt, user preferences, and active project state

- ~/.claude/prompts/ - Task-specific prompts that load based on what I'm doing

- ~/.claude/history/ - Automatic capture of sessions, learnings, research, and decisions

Hooks would automatically inject the right context at session start and capture the good stuff at session end.
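The two hook jobs can be sketched as a pair of small functions: one gathers ~/.claude/context/ at session start, and one writes a summary into ~/.claude/history/ at session end. This is a minimal sketch, assuming Claude Code's SessionStart hook adds a hook's standard output to the session context (as its hooks documentation describes). The function names, the one-Markdown-file-per-topic layout, and the timestamped file naming are hypothetical, not how Kai actually does it.

```python
"""Minimal sketch of UFC-style session hooks (hypothetical; not Kai's actual code)."""
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional

CLAUDE_DIR = Path.home() / ".claude"


def build_session_context(context_dir: Path) -> str:
    """Concatenate every Markdown file in context_dir, in filename order, each under its own heading."""
    sections = []
    for path in sorted(context_dir.glob("*.md")):
        body = path.read_text(encoding="utf-8").strip()
        sections.append(f"## {path.stem}\n{body}")
    return "\n\n".join(sections)


def capture_session(history_dir: Path, summary: str, now: Optional[datetime] = None) -> Path:
    """Save a session summary as a timestamped Markdown file and return its path."""
    now = now or datetime.now(timezone.utc)
    history_dir.mkdir(parents=True, exist_ok=True)
    out = history_dir / f"{now:%Y-%m-%dT%H%M%S}-session.md"
    out.write_text(summary, encoding="utf-8")
    return out


if __name__ == "__main__":
    # Run as a session-start hook: whatever is printed to stdout is offered to the model as context.
    print(build_session_context(CLAUDE_DIR / "context"))
```

Keeping each concern in its own file means editing a preference or a project note is just editing a file; nothing has to be re-pasted into a chat.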

What Anthropic Later Released

Anthropic released Skills: packaged bundles of instructions, scripts, and resources that extend Claude's capabilities. Here's the architecture they built:

- Progressive disclosure - Claude sees only skill names and descriptions upfront, then loads full context when relevant (exactly like my task-specific prompts)

- File-based structure - Skills are markdown files with optional scripts, organized in folders (exactly like my ~/.claude/prompts/ setup)

- Dynamic loading - Claude autonomously decides which skills to load based on the task (exactly what my hooks were doing)

- CLAUDE.md files - Project-specific context that auto-loads (exactly like my context/ directory)
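Progressive disclosure is easy to picture in code. The sketch below assumes the Agent Skills layout of one folder per skill, each containing a SKILL.md whose frontmatter carries name and description fields. The frontmatter parser is deliberately naive (plain key: value lines rather than full YAML), and split_frontmatter, skill_index, render_index, and load_skill are hypothetical names. Only the rendered index would sit in context upfront; a skill's full body is read only when it is needed.

```python
"""Sketch of progressive disclosure over a skills folder (simplified; not Anthropic's loader)."""
from pathlib import Path
from typing import Dict, Tuple


def split_frontmatter(text: str) -> Tuple[Dict[str, str], str]:
    """Split '---'-delimited frontmatter of simple 'key: value' lines from the body.

    Deliberately naive: real SKILL.md frontmatter is YAML.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}, text
    meta: Dict[str, str] = {}
    for i, line in enumerate(lines[1:], start=1):
        if line.strip() == "---":
            return meta, "\n".join(lines[i + 1:]).strip()
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    return {}, text  # no closing delimiter: treat everything as body


def skill_index(skills_dir: Path) -> Dict[str, Tuple[str, Path]]:
    """Cheap upfront pass: map each skill name to (description, path of its SKILL.md)."""
    index: Dict[str, Tuple[str, Path]] = {}
    for skill_file in sorted(skills_dir.glob("*/SKILL.md")):
        meta, _ = split_frontmatter(skill_file.read_text(encoding="utf-8"))
        name = meta.get("name", skill_file.parent.name)  # fall back to the folder name
        index[name] = (meta.get("description", ""), skill_file)
    return index


def render_index(index: Dict[str, Tuple[str, Path]]) -> str:
    """The only part that goes into context at startup: one line per skill."""
    return "\n".join(f"- {name}: {desc}" for name, (desc, _) in sorted(index.items()))


def load_skill(index: Dict[str, Tuple[str, Path]], name: str) -> str:
    """Expensive step, done on demand: read the full instructions for one skill."""
    _, path = index[name]
    _, body = split_frontmatter(path.read_text(encoding="utf-8"))
    return body
```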

I spent all this time building something I thought would be useful. Then, a week later, it turned out they'd been working on the same thing and had released a better, more native version of it. It made me so happy.

Feature 2: Dynamic Skill Loading

Then, just a few days ago, Anthropic published a blog post called Advanced Tool Use.

I had just built something similar and taken it out of production because I thought it was overkill.

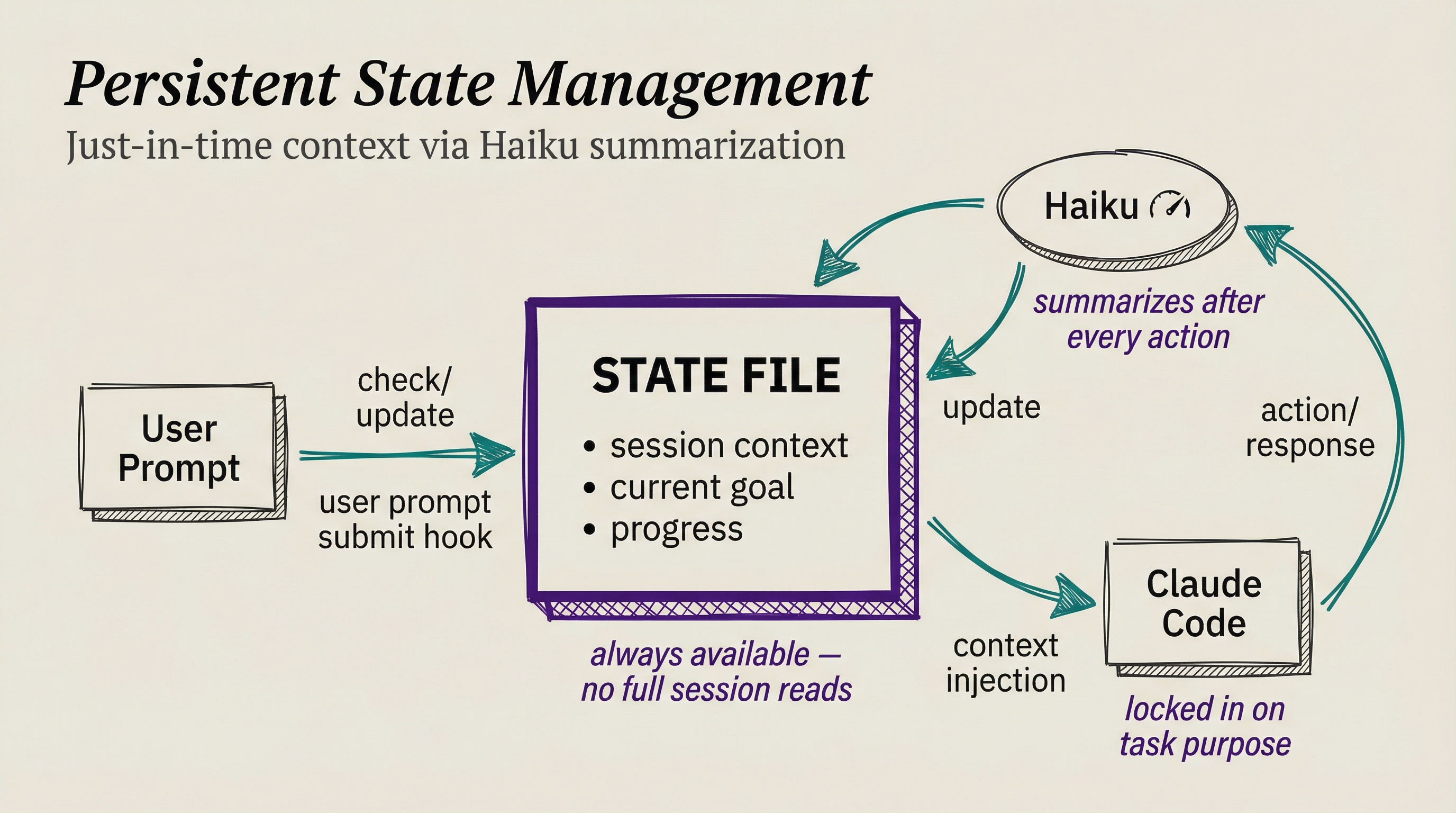

What I Built

I built a system that maintained separate context in a state directory. It was checked on every prompt submission (Claude Code's UserPromptSubmit hook), so I could say something like "push this" and it would know what "this" meant based on what I was doing. Basically, I could use shorthand to route anywhere within the skill system.

I built this mainly because, later in a Claude Code session (especially after multiple compactions), even Claude Code can start to lose the plot. So I built this augmented system to keep it on the plot at all times.
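Here is a minimal sketch of that kind of state-aware routing, written as a prompt-submit hook. It assumes Claude Code's UserPromptSubmit hook receives a JSON payload on stdin that includes the user's prompt, and that whatever the hook prints is added to the context. The state file path, its keys, and the list of shorthand words are hypothetical stand-ins for whatever Kai actually tracked.

```python
"""Sketch of a prompt-submit hook that resolves shorthand from a state file (hypothetical)."""
import json
import re
import sys
from pathlib import Path

# Hypothetical location and schema for the "what am I doing right now" state.
STATE_FILE = Path.home() / ".claude" / "state" / "current.json"

# Words that only make sense relative to the current activity.
SHORTHAND = re.compile(r"\b(this|that|it)\b", re.IGNORECASE)


def resolve_shorthand(prompt: str, state: dict) -> str:
    """Return extra context to inject, or "" if there is no state or no shorthand in the prompt."""
    if not state or not SHORTHAND.search(prompt):
        return ""
    lines = ["Current working state (use it to resolve words like 'this'):"]
    for key in ("project", "active_file", "last_skill"):
        if key in state:
            lines.append(f"- {key}: {state[key]}")
    return "\n".join(lines)


def main() -> None:
    # The hook payload arrives as JSON on stdin; tolerate an empty or interactive stdin.
    raw = "" if sys.stdin.isatty() else sys.stdin.read()
    event = json.loads(raw) if raw.strip() else {}
    state = json.loads(STATE_FILE.read_text()) if STATE_FILE.exists() else {}
    print(resolve_shorthand(event.get("prompt", ""), state))


if __name__ == "__main__":
    main()
```

Injecting the state only when the prompt contains shorthand keeps the extra tokens out of ordinary, self-explanatory prompts.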

I ended up using it for only a couple of days before swapping it out, because I thought it was excessive scaffolding that a better model or better native scaffolding would eventually make unnecessary.

What Anthropic Published

Guess what? They published this post, and I ran my upgrade skill against it. My upgrade skill said, "Hey, you should implement it like this."

So here's my implementation of their blog post: it loads extremely abridged versions of the skills at startup, which saves a whole bunch of context. Then, if it needs more information, it dynamically routes to the skill it needs. Basically, it's an on-demand version. You can see the diagram above.
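The abridged-index-plus-routing pattern can be sketched in a few functions. This is an illustration under stated assumptions, not Kai's code and not Anthropic's Tool Search Tool: naive keyword overlap stands in for real ranking, and Skill, abridged_index, route, and context_for are hypothetical names.

```python
"""Sketch of on-demand skill routing: only abridged summaries sit in context at startup,
and a full skill is pulled in when a request matches it. Keyword overlap is a naive
stand-in for real ranking; none of these names come from Kai or from Anthropic."""
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Skill:
    name: str
    description: str
    full_text: str  # the expensive instructions we want to keep out of context


def words(text: str) -> set:
    """Lowercase alphanumeric tokens; underscores and punctuation act as separators."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def abridged_index(skills: List[Skill], max_words: int = 8) -> str:
    """Startup view: one short line per skill instead of every full skill."""
    lines = []
    for skill in skills:
        short = " ".join(skill.description.split()[:max_words])
        lines.append(f"{skill.name}: {short}")
    return "\n".join(lines)


def route(request: str, skills: List[Skill]) -> Optional[Skill]:
    """Pick the skill whose name and description share the most words with the request."""
    request_words = words(request)
    best, best_score = None, 0
    for skill in skills:
        score = len(request_words & words(skill.name + " " + skill.description))
        if score > best_score:  # ties keep the earlier skill
            best, best_score = skill, score
    return best


def context_for(request: str, skills: List[Skill]) -> str:
    """Load the full text of the matched skill only when it is actually needed."""
    skill = route(request, skills)
    return skill.full_text if skill else ""
```

The design trade-off is the one the post describes: a few tokens per skill at startup, plus one full skill per request, instead of every full skill all the time.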

"Instead of loading all tool definitions upfront, the Tool Search Tool discovers tools on-demand. Claude only sees the tools it actually needs for the current task."

And the results?

"This represents an 85% reduction in token usage while maintaining access to your full tool library."

What This All Means

So basically, in both cases I had an idea that I thought would be super useful for Claude Code, and I implemented it. Then a couple of days later (or, in the case of UFC, about a week later), it turned out the Claude Code team had been building the same thing the whole time, and they released a better version.

On one hand, you're like, "Oh, they made it better." On the other hand, I'm very happy, because it validates that I'm thinking about this whole context game correctly.

I've been talking about this since 2023: the scaffolding around a system is going to be incredibly important. I wrote a whole post about the 4 components of a good AI system, in which I argued that context is critical.

I feel like Anthropic gets this more than anyone, especially the Claude Code team.

All this is really just to say that I feel proud that I seem to be thinking along the same lines as the Claude Code team, and in my own limited way I might even be a step ahead.

Sorry for the self-congratulations, but I just feel really excited about this.

See you in the next one.
