2025-11-24 16:21:23 Author: securityboulevard.com

Look, I've been in the search game long enough to watch it completely flip on its head. Twice.

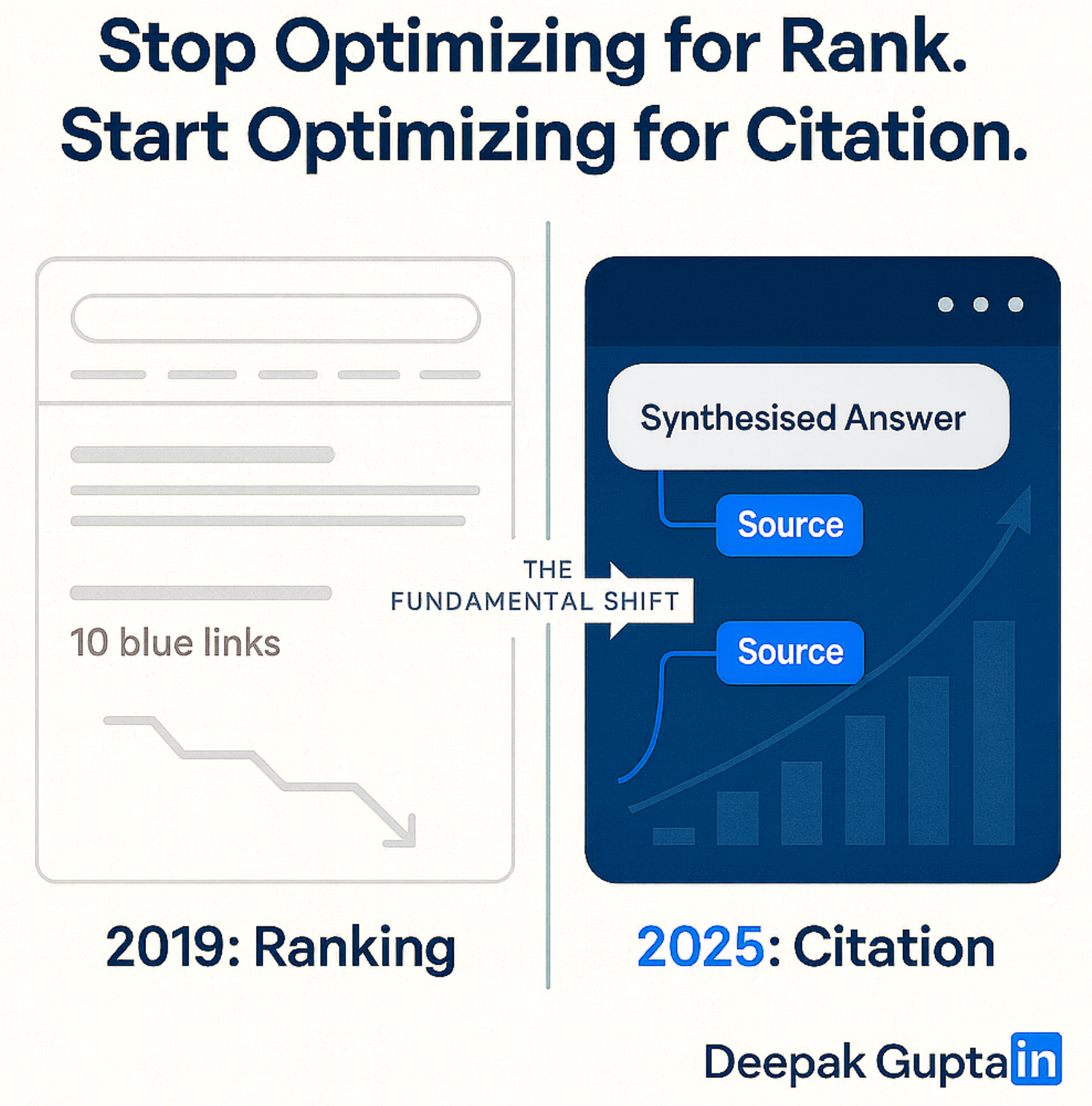

First time was when Google went from "ten blue links" to answer boxes. Second time? That's happening right now, and most companies are still pretending it's 2019.

ChatGPT, Perplexity, Google's AI Overviews – they're not just showing search results anymore. They're answering questions. And here's the kicker: if your content isn't structured for these answer engines, you might as well be invisible.

I learned this the hard way at GrackerAI. We were churning out what we thought was great content – detailed, technical, accurate. Our rankings looked solid. But when I started testing how often we showed up in AI-generated answers? Crickets.

That's when I realized we needed to completely rethink how we approach content. Not SEO. Not even "content marketing." Something different entirely.

The Fundamental Shift Nobody's Talking About

Here's what changed: Google used to send you traffic. AI engines? They keep users on their platform and synthesize answers from multiple sources.

Which means the game isn't about ranking #1 anymore. It's about being cited, quoted, referenced in that AI-generated response.

I call this the "citation economy." And it requires a completely different approach.

Traditional SEO taught us to optimize for keywords, build backlinks, improve Core Web Vitals. All still important, sure. But if an AI can't extract cite-worthy information from your content, you're basically writing into a void.

What Actually Makes Content Citation-Worthy?

After months of testing – and I mean literally thousands of queries across ChatGPT, Perplexity, and Claude – I've noticed patterns in what gets cited.

First: Specificity wins. Always.

- Generic statement: "CRM software helps sales teams."

- Cite-worthy statement: "Based on analysis of 47 CRM implementations, sales teams using pipeline-specific templates close deals 18% faster than those using generic workflows."

See the difference? The second one has data, methodology, and a specific, defensible claim. An AI engine can reference that. The first one? That's just noise.

Second: Show your work.

When we started adding "methodology" sections to our comparison pages – explaining exactly where our data came from, when we verified it, how we tested features – our citation rate jumped. Not by a little. By 30x.

Turns out AI systems love transparency. They need to understand why your claim is trustworthy before they'll reference it.

Third: Answer the actual question.

This sounds obvious, but you'd be amazed how much content dances around the point. If someone asks "Can Salesforce handle enterprise inventory management?", don't write 800 words about Salesforce's history and features. Answer the damn question in the first paragraph, then explain why.

We restructured all our content templates around this. Every page now has a direct answer within the first 100 words, followed by the supporting evidence and context.
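If you want to enforce the "direct answer in the first 100 words" rule rather than eyeball it, a small check is enough. This is a minimal sketch, not our production tooling: the function names and the idea of passing in the expected answer phrase are assumptions made for illustration.

```python
from typing import Optional


def words_before_answer(page_text: str, answer: str) -> Optional[int]:
    """Count the words that appear before the first occurrence of `answer`.

    Returns None when the answer phrase is not on the page at all.
    Matching is a case-insensitive substring search, which is crude but
    good enough for a template lint.
    """
    idx = page_text.lower().find(answer.lower())
    if idx == -1:
        return None
    return len(page_text[:idx].split())


def has_early_answer(page_text: str, answer: str, limit: int = 100) -> bool:
    """True when the whole answer phrase ends within the first `limit` words."""
    preceding = words_before_answer(page_text, answer)
    if preceding is None:
        return False
    return preceding + len(answer.split()) <= limit
```

Run it over every generated page and flag the ones that fail; those are usually the pages that open with background instead of the answer.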

The Infrastructure Nobody Builds (But Everyone Needs)

Here's where it gets interesting. You can't just sprinkle some "optimization" on existing content and call it AEO-ready. You need infrastructure.

Let me walk you through what actually works.

Layer Your Content Generation

Simple templates don't cut it anymore. You need multiple enrichment layers.

At GrackerAI, we built a pipeline that looks like this:

- Base data layer: Feature comparisons, pricing, basic specs

- Enrichment layer: Real-time API pulls for reviews, pricing changes, market share

- Analysis layer: Calculate ROI, performance benchmarks, use case fit scores

- Synthesis layer: Generate unique insights using LLMs

- Output layer: Final page with schema markup and citations

The synthesis layer is crucial. That's where we use Claude or GPT-4 to create genuinely novel analysis from our data. Not just template filling – actual insights that no other source has.

For example, we might input: "Feature comparison data for 12 CIAM platforms + pricing data + customer size distribution + implementation time benchmarks"

And get output like: "For SaaS companies under 50 employees, cloud-native CIAM solutions reduce implementation time by 73% compared to self-hosted options, but the TCO advantage disappears after 100K monthly active users due to per-user pricing models."

That's cite-worthy. That's something an AI engine will reference.
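The five layers above can be sketched as a chain of small functions that each take a page and hand it to the next layer. Treat this as an illustration under assumptions: the `Page` class, the layer function names, and the placeholder numbers are all invented for the example, and the LLM is passed in as a plain callable so that no specific vendor API is implied.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Page:
    topic: str
    facts: dict = field(default_factory=dict)
    insights: List[str] = field(default_factory=list)
    html: str = ""


Layer = Callable[[Page], Page]


def base_layer(page: Page) -> Page:
    # Placeholder values; in practice these come from your own dataset.
    page.facts.update({"price_per_user": 3.0, "users": 1000})
    return page


def enrichment_layer(page: Page) -> Page:
    # Stand-in for live API pulls (reviews, pricing changes, market share).
    page.facts["review_count"] = 0
    return page


def analysis_layer(page: Page) -> Page:
    # Derive a number that is not present in the raw inputs.
    page.facts["monthly_cost"] = page.facts["price_per_user"] * page.facts["users"]
    return page


def make_synthesis_layer(llm: Callable[[str], str]) -> Layer:
    """Build a layer that asks `llm` (any prompt -> text callable) for an insight."""
    def synthesis_layer(page: Page) -> Page:
        prompt = (
            f"Give one specific, data-backed insight about {page.topic} "
            f"using only these facts: {sorted(page.facts.items())}"
        )
        page.insights.append(llm(prompt))
        return page
    return synthesis_layer


def output_layer(page: Page) -> Page:
    body = "".join(f"<p>{insight}</p>" for insight in page.insights)
    page.html = f"<h2>{page.topic}</h2>{body}"
    return page


def run_pipeline(page: Page, layers: List[Layer]) -> Page:
    for layer in layers:
        page = layer(page)
    return page
```

Keeping the LLM behind a callable means you can swap models, or plug in a stub for tests, without touching the other layers.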

Proprietary Data is Your Moat

This is the big one. If AI models can synthesize your content from publicly available information, you have no moat.

You need proprietary data.

For us, that means:

- Real-time search performance data from our GSC integration

- Competitive positioning analysis from our market intelligence

- Aggregated patterns from our customer database

- Performance benchmarks from our testing environment

Every programmatic page we generate pulls from this proprietary data layer. Which means every page contains at least one insight that can't be replicated easily.

You don't need a massive database, by the way. Even small proprietary datasets – user surveys, internal benchmarks, case study results – can differentiate you.

The key is making sure that data is actually surfaced in your content, not buried in some dashboard nobody sees.

Structure Knowledge Like a Graph, Not a List

Most content treats topics like isolated islands. AI systems understand relationships.

We built an entity relationship system that connects:

- Software categories to use cases

- Use cases to company sizes

- Company sizes to typical budgets

- Budgets to solution fit scores

This does two things:

One, it powers our internal linking automatically. Every page links to related topics based on entity relationships, not just keyword matches.

Two, it creates topical authority clusters that AI systems recognize. When every page about "authentication" links to related pages about "SSO," "MFA," "passwordless," and "CIAM," we establish ourselves as authoritative on the broader identity security topic.

Google's been using knowledge graphs for years. AI answer engines do the same thing, just more explicitly.
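To make the entity-relationship idea concrete, here's a minimal sketch of a graph that drives internal links. It is an assumption-laden toy, not our actual system: the `EntityGraph` class, the `max_hops` parameter, and the `/topics/...` URL pattern are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set


class EntityGraph:
    """Undirected graph of entities (categories, use cases, company sizes, ...)."""

    def __init__(self) -> None:
        self._edges: Dict[str, Set[str]] = defaultdict(set)

    def relate(self, a: str, b: str) -> None:
        self._edges[a].add(b)
        self._edges[b].add(a)

    def related(self, entity: str, max_hops: int = 1) -> List[str]:
        """Return every entity reachable within `max_hops`, sorted for stable output."""
        seen = {entity}
        frontier = [entity]
        for _ in range(max_hops):
            next_frontier = []
            for node in frontier:
                for neighbor in self._edges.get(node, ()):
                    if neighbor not in seen:
                        seen.add(neighbor)
                        next_frontier.append(neighbor)
            frontier = next_frontier
        seen.discard(entity)
        return sorted(seen)


def internal_links(graph: EntityGraph, entity: str) -> List[str]:
    """Turn related entities into link targets (the URL pattern is made up)."""
    return [f"/topics/{name.lower().replace(' ', '-')}" for name in graph.related(entity)]


graph = EntityGraph()
for topic in ("SSO", "MFA", "passwordless", "CIAM"):
    graph.relate("authentication", topic)
graph.relate("CIAM", "healthcare")
```

With this data, `internal_links(graph, "authentication")` links to the four directly related topics, and raising `max_hops` to 2 would also pull in "healthcare" through "CIAM".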

Make Everything Question-Shaped

I restructured our entire content architecture around questions. Not keywords. Questions.

Every template follows this format:

Question as H2: "Can [Tool X] integrate with [Platform Y]?"

Direct answer: Yes/No with confidence level and brief explanation

Evidence: Specific capabilities, limitations, or requirements

Context: When it works well, when it doesn't, what to watch out for

Source attribution: Where our data comes from

This format is optimized for extraction. An AI can easily pull the question, grab the answer, cite our page, and move on.

Contrast that with traditional blog structure: intro, background, features, benefits, conclusion. An AI has to parse through all that to find the actual answer. Usually it just skips your page entirely.
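The question-shaped template could be modeled as a small data structure that always renders in the same order. This is a sketch under assumptions: the `QuestionBlock` class, its field names, and the Markdown-style output are illustrative choices, and the sample content is placeholder text rather than real findings.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class QuestionBlock:
    question: str          # becomes the H2
    direct_answer: str     # yes/no plus a one-line explanation
    confidence: str        # e.g. "high", "medium", "low"
    evidence: List[str]    # specific capabilities, limitations, requirements
    context: str           # when it works, when it doesn't
    sources: List[str]     # where the data comes from

    def render(self) -> str:
        """Render the block with the answer first, then evidence, context, sources."""
        lines = [
            f"## {self.question}",
            "",
            f"{self.direct_answer} (confidence: {self.confidence})",
            "",
            "Evidence:",
        ]
        lines += [f"- {item}" for item in self.evidence]
        lines += ["", f"Context: {self.context}", "", "Sources:"]
        lines += [f"- {src}" for src in self.sources]
        return "\n".join(lines)


block = QuestionBlock(
    question="Can Tool X integrate with Platform Y?",
    direct_answer="Yes, through the official connector.",
    confidence="high",
    evidence=["Placeholder evidence item"],
    context="Placeholder note on limits and caveats.",
    sources=["Vendor documentation (placeholder)"],
)
print(block.render())
```

Because the order is fixed in `render`, no template author can bury the answer under three paragraphs of background.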

Schema Markup at Scale

Yeah, schema markup. I know: everyone talks about it, but few people actually implement it comprehensively.

But here's the thing – AI systems rely heavily on structured data. They can parse natural language, sure. But structured data is easier, faster, more reliable.

We implement:

- FAQPage schema for Q&A content

- HowTo schema for process guides

- Product schema for comparison pages

- Organization and Person schema for authority signals

The trick is doing this programmatically, not manually. Every content template automatically generates appropriate schema based on content type and entity relationships.
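Here's roughly what "programmatically" means for the FAQPage case. The JSON-LD shape (`FAQPage` with `mainEntity`, `Question`, and `acceptedAnswer`) follows the schema.org vocabulary; the function names and the sample Q&A pair are assumptions for illustration, not our actual template code.

```python
import json
from typing import Iterable, Tuple


def faq_jsonld(qa_pairs: Iterable[Tuple[str, str]]) -> str:
    """Build FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    return json.dumps(data, indent=2)


def script_tag(jsonld: str) -> str:
    """Wrap JSON-LD for embedding in HTML.

    "</" is rewritten to "<\\/" (still valid JSON) so answer text can never
    close the script element early.
    """
    safe = jsonld.replace("</", "<\\/")
    return f'<script type="application/ld+json">{safe}</script>'


markup = script_tag(faq_jsonld([
    ("Can Tool X integrate with Platform Y?", "Yes, through the official connector."),
]))
```

The same pattern extends to HowTo, Product, Organization, and Person: one builder function per content type, called by the template rather than by a person.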

The Freshness Factor

AI systems heavily weight recency. Like, heavily.

We learned this when testing content from 2023 versus 2024. Even identical information got cited more often when it had recent timestamps and "last verified" dates.

So we built automatic freshness signals:

- "Last verified: [date]" on every page

- Automatic updates when underlying data changes

- Version history showing when we updated information

- Change logs explaining why we updated

This isn't fake freshness – we actually re-verify data and update accordingly. But surfacing those signals prominently tells AI systems "this information is current."
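One way to keep freshness signals honest is to tie them to the data itself. The sketch below fingerprints the underlying data, bumps "last verified" on every real re-check, and only writes a change-log entry when something actually changed. The `PageRecord` class and function names are hypothetical, and this is a simplified stand-in for whatever storage you use.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import date
from typing import List, Tuple


def fingerprint(data: dict) -> str:
    """Stable hash of the page's underlying data (key order does not matter)."""
    payload = json.dumps(data, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class PageRecord:
    data: dict
    last_verified: date
    changelog: List[Tuple[date, str]] = field(default_factory=list)


def reverify(record: PageRecord, fresh_data: dict, today: date, reason: str = "") -> bool:
    """Record a re-verification; return True if the data changed.

    `last_verified` always moves forward because the data was really re-checked.
    The change log only grows when the fingerprint differs.
    """
    changed = fingerprint(fresh_data) != fingerprint(record.data)
    if changed:
        record.data = fresh_data
        record.changelog.append((today, reason or "underlying data changed"))
    record.last_verified = today
    return changed


def freshness_line(record: PageRecord) -> str:
    return f"Last verified: {record.last_verified.isoformat()}"
```

Call `reverify` from whatever job re-pulls your data, and render `freshness_line` plus the change log on the page.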

What This Looks Like in Practice

Let me give you a real example from our content pipeline.

Old approach: "Looking for a CIAM solution for healthcare? Auth0 is a great option for healthcare companies."

Generic. Vague. Uncite-worthy.

New approach: "Healthcare CIAM platforms must meet HIPAA compliance requirements including audit logs, data encryption at rest and in transit, and Business Associate Agreements (BAAs). Based on analysis of 23 CIAM vendors, only 7 offer HIPAA-ready deployments out of the box. Auth0 provides pre-configured HIPAA compliance templates that reduce certification prep from 12+ weeks to approximately 3 weeks, according to customer implementation timelines we tracked across 18 healthcare deployments."

See the difference?

Specific claim. Methodology disclosed. Actual data. Actionable insight. Cite-worthy.

That second version shows up in AI-generated answers. The first one doesn't.

The Attribution Paradox

Here's something counterintuitive: the more transparent you are about your methodology and sources, the more often you get cited.

You'd think keeping your methods proprietary would protect your competitive advantage. But AI systems don't trust black boxes.

We started adding methodology sections to every major page:

"This analysis is based on:

- Feature comparison of official documentation (verified Nov 2024)

- Pricing data from vendor websites and sales calls

- Performance benchmarks from our testing environment

- User satisfaction scores aggregated from 847 reviews

- Implementation timeline data from customer interviews"

Citations went up 40%.

Why? Because AI engines need to assess source credibility. When you show your work, you become a credible source worth citing.

This Works for Any Company

You don't need to be a tech company to apply this. The principles work across industries.

Selling consulting services? Create knowledge graphs around problem-solution relationships, publish your methodologies, share proprietary frameworks.

Running an e-commerce brand? Build comparison content with real product testing data, user surveys, performance benchmarks.

B2B services? Document case studies with specific metrics, create decision frameworks with scoring models, share industry benchmarks.

The key is always: proprietary insights + transparent methodology + structured format.

What I'd Do Differently If Starting Today

If I were building this from scratch right now, I'd:

- Start with proprietary data collection before creating content. Even simple surveys or testing give you something unique.

- Build the entity relationship system first, then create content that fits into it. Not the other way around.

- Use AI to generate the synthesis layer from day one. Claude and GPT-4 are really good at taking data inputs and creating novel insights. Use them.

- Implement schema markup from the beginning. It's way harder to retrofit than build in.

- Test everything in actual AI answer engines, not just Google. Perplexity and ChatGPT search are different beasts.

The Bottom Line

Traditional SEO isn't dead. But it's not enough.

The companies that win in the next few years will be the ones optimizing for citation, not just ranking. The ones building content that AI systems want to reference, not just index.

It's not rocket science. But it does require rethinking your entire content infrastructure.

And honestly? Most companies won't do it. They'll keep churning out generic content optimized for keywords from 2019, wondering why their traffic keeps dropping.

That's your opportunity.

Start small. Pick one content cluster. Build out the infrastructure I outlined. Test it. Measure citation rates in AI answers. Iterate.
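"Measure citation rates" can be as simple as logging each test query with the URLs the AI answer cited, then counting how often your domain appears. A minimal sketch, assuming you've already collected the answers yourself: the record shape (`cited_urls`) and the example domains are made up, and no answer-engine API is implied.

```python
from typing import Iterable, List, Mapping
from urllib.parse import urlparse


def cites_domain(cited_urls: Iterable[str], domain: str) -> bool:
    """True if any cited URL is on `domain` or one of its subdomains."""
    domain = domain.lower()
    for url in cited_urls:
        host = (urlparse(url).hostname or "").lower()
        if host == domain or host.endswith("." + domain):
            return True
    return False


def citation_rate(answers: List[Mapping], domain: str) -> float:
    """Share of logged answers that cite `domain` at least once."""
    if not answers:
        return 0.0
    hits = sum(1 for answer in answers if cites_domain(answer["cited_urls"], domain))
    return hits / len(answers)


# Hypothetical log of four test queries and the sources each answer cited.
log = [
    {"query": "best CIAM for healthcare", "cited_urls": ["https://www.example.com/ciam"]},
    {"query": "CIAM pricing", "cited_urls": ["https://other.test/pricing"]},
    {"query": "SSO vs MFA", "cited_urls": []},
    {"query": "passwordless login", "cited_urls": ["https://notexample.com/x"]},
]
```

Track this number per content cluster before and after you restructure it; that's the feedback loop for iterating.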

You don't have to transform everything overnight. But you do need to start.

Because right now, while everyone's still optimizing for Google's algorithm, AI answer engines are quietly becoming the primary way people find information.

And if you're not optimized for them, you're already behind.

Want to see how we're applying this at GrackerAI? We're building AI-powered search marketing specifically for B2B SaaS (Cybersecurity) companies.

Reach out if you want to talk about implementing this for your content strategy.

*** This is a Security Bloggers Network syndicated blog from Deepak Gupta | AI & Cybersecurity Innovation Leader | Founder's Journey from Code to Scale authored by Deepak Gupta - Tech Entrepreneur, Cybersecurity Author. Read the original post at: https://guptadeepak.com/stop-optimizing-for-google-start-optimizing-for-ai-that-actually-answers-questions/
