Summary: Many organizations are lagging in key AI security policy areas such as usage governance, risk oversight, data protection, and staff training. Without effective security strategies and governance, AI-related data breaches have surged. A lack of employee AI security training is also fueling “shadow AI” and leaks of sensitive company data. These new AI cyber risks have pushed AI governance onto the boardroom agenda and increased demand for cybersecurity professionals with AI-specific skills.

2025-10-31 13:00:00 Author: www.tenable.com

Many organizations are playing catch-up in key AI security policy areas, such as usage governance, risk oversight, data protection, and staff training. In this Cybersecurity Snapshot special edition, we round up recent guidance on preparing for, managing and governing AI cyber risks.

Key takeaways

- Most organizations' AI adoption is dangerously outpacing their security strategies and governance, leading to a surge in costly, AI-related breaches.

- A massive gap in employee AI security training is fueling "shadow AI" and causing staff to leak sensitive company data.

- This new AI cyber risk has finally made AI governance a boardroom-level priority, while also driving up demand and salaries for cybersecurity professionals who possess AI-specific skills.

In case you missed it, here’s fresh guidance from recent months on how organizations can manage, govern, and prep for the new wave of AI cyber risks.

1 - Tenable report: The “act now, secure later” AI problem

Most organizations have taken a cavalier attitude towards their use of artificial intelligence (AI) and cloud, a bit along the lines of: “Don’t worry, be happy.”

In other words: Use AI and cloud now, deal with security later. Of course, this leaves them poorly positioned to manage their cyber risk.

This is the dangerous scenario that emerges from the new Tenable report “The State of Cloud and AI Security 2025,” published in September.

“Most organizations already operate in hybrid and multi-cloud environments, and over half are using AI for business-critical workloads,” reads the global study, commissioned by Tenable and developed in collaboration with the Cloud Security Alliance.

“While infrastructure and innovation have evolved rapidly, security strategy has not kept pace,” it adds.

Based on a survey of 1,025 IT and security professionals, the report found 82% of organizations have hybrid – on-prem and cloud – environments and 63% use two or more cloud providers.

Meanwhile, organizations are jumping into the AI pond headfirst: 55% are using AI and 34% are testing it. The kicker? About a third of those using AI have suffered an AI-related breach.
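To put those percentages in rough absolute terms, the back-of-the-envelope sketch below applies them to the 1,025 survey respondents. It assumes that “about a third” means exactly one-third and that each percentage applies to the full respondent pool. Both are simplifications for illustration, not figures from the report.

```python
# Rough sizing of the survey figures cited above.
# Assumptions (not from the report): "about a third" is treated as exactly 1/3,
# and every percentage is applied to all 1,025 respondents.
from fractions import Fraction

RESPONDENTS = 1025
USING_AI = Fraction(55, 100)
TESTING_AI = Fraction(34, 100)
BREACHED_AMONG_USERS = Fraction(1, 3)  # assumed value for "about a third"


def estimate(share: Fraction, base: int = RESPONDENTS) -> int:
    """Return the share of the base, rounded to a whole respondent."""
    return round(share * base)


using = estimate(USING_AI)
testing = estimate(TESTING_AI)
# Share of ALL respondents that both use AI and reported an AI-related breach.
breached_share_of_all = USING_AI * BREACHED_AMONG_USERS
breached = estimate(breached_share_of_all)

print(f"Using AI: ~{using} respondents")
print(f"Testing AI: ~{testing} respondents")
print(f"AI-related breach: ~{breached} respondents "
      f"(~{float(breached_share_of_all):.0%} of everyone surveyed)")
```

Under these assumptions, roughly one in five or six of all surveyed organizations would have experienced an AI-related breach, which is why the "a third of those using AI" figure matters even to organizations still in the testing phase.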

“The report confirms what we’re seeing every day in the field. AI workloads are reshaping cloud environments, introducing new risks that traditional tools weren’t built to handle,” Liat Hayun, VP of Product and Research at Tenable, said in a statement.

Key obstacles to effectively securing AI systems and cloud environments include:

- Rudimentary identity and access management protection methods

- Unfocused and misguided AI security efforts

- A skills gap

- Reactive security strategies

- Insufficient budgets and leadership support

The fix? Shift from a reactive to a proactive approach. To stay ahead of evolving threats:

- Adopt integrated visibility and controls, and consistent policies across on-prem, cloud and AI workloads.

- Enhance identity governance for all human and non-human identities.

- Make sure executives understand what it takes to secure your AI and cloud infrastructure.

To get more details, check out:

- The announcement “Tenable Research Shows Organizations Struggling to Keep Pace with Cloud Security Challenges”

- The blog “New Tenable Report: How Complexity and Weak AI Security Put Cloud Environments at Risk”

- The full report “The State of Cloud and AI Security 2025”

For more information about cloud security and AI security, check out these Tenable resources:

- “Introducing Tenable AI Exposure: Stop Guessing, Start Securing Your AI Attack Surface” (blog)

- “AI Is Your New Attack Surface” (on-demand webinar)

- “Complete Cloud Lifecycle Visibility” (solution overview)

- “Breaking Down Silos: Why You Need an Ecosystem View of Cloud Risk” (blog)

- “Tenable Named a Major Player in IDC MarketScape: Worldwide CNAPP 2025 Vendor Assessment” (analyst research)

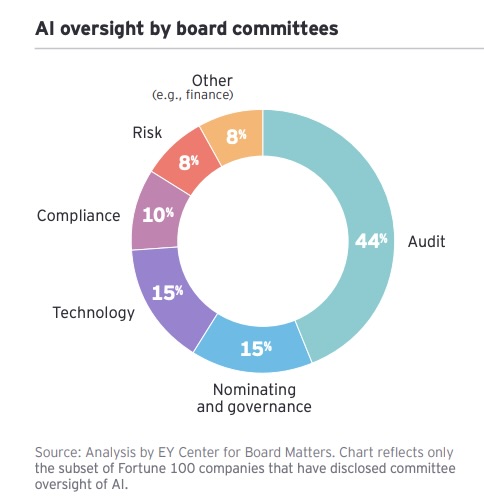

2 - AI risks hit the boardroom

AI risk isn't just an IT problem anymore. It's a C-suite and boardroom concern as well.

The sign? Fortune 100 boards of directors have boosted the number and the substance of their AI and cybersecurity oversight disclosures.

That’s the headline from an EY analysis of proxy statements and 10-K filings submitted to the U.S. Securities and Exchange Commission (SEC) by 80 of the Fortune 100 companies in recent years.

“Companies are putting the spotlight on their technology governance, signaling an increasing emphasis on cyber and AI oversight to stakeholders,” reads the EY report “Cyber and AI oversight disclosures: what companies shared in 2025,” published in October.

What’s driving this trend? Cyber threats are getting smarter by the minute, while the use of generative AI, both by security teams and by attackers, is growing exponentially.

Key findings on AI oversight include:

- 48% of the analyzed companies now mention AI risk in their board's enterprise risk oversight, a threefold increase from 2024.

- 44% list AI expertise as a director qualification, up from 26% last year, signaling the growth of AI proficiency on boards.

- 40% have formally assigned AI oversight to a board committee.

- 36% now list AI as a standalone risk factor, more than double last year's 14%.
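For readers who want to sanity-check the year-over-year comparisons in that list, here is a minimal sketch of the arithmetic. The 2024 value for AI risk in board oversight is not stated above; the code infers it from “a threefold increase,” which is an assumption rather than a number taken from the EY report.

```python
# Year-over-year change in the EY disclosure figures cited above.
# The 2024 value for "AI risk in board oversight" is not given in the text;
# it is inferred from "a threefold increase" and is an assumption.

def change(current: float, previous: float) -> tuple[float, float]:
    """Return (percentage-point change, growth multiple)."""
    return current - previous, current / previous


ai_expertise_points, ai_expertise_multiple = change(44, 26)
risk_factor_points, risk_factor_multiple = change(36, 14)
implied_2024_oversight = 48 / 3  # assumption: "threefold" taken literally

print(f"AI expertise as director qualification: "
      f"+{ai_expertise_points} points, {ai_expertise_multiple:.2f}x")
print(f"AI as standalone risk factor: "
      f"+{risk_factor_points} points, {risk_factor_multiple:.2f}x")
print(f"Implied 2024 share citing AI risk in board oversight: "
      f"~{implied_2024_oversight:.0f}%")
```

The distinction between percentage points and growth multiples is worth keeping in mind: a jump from 14% to 36% is 22 points but more than 2.5 times the earlier share.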

“Board oversight of these areas is critical to identifying and mitigating risks that may pose a significant threat to the company,” reads the report.

For more information about AI governance in the boardroom and the C-suite:

- “Governance of AI: A critical imperative for today’s boards” (Deloitte)

- “AI Governance In The Boardroom: Strategies For Effective Oversight And Implementation” (Forbes)

- “The Hidden C-Suite Risk Of AI Failures” (Harvard Law School)

- “How Board-Level AI Governance Is Changing” (Forbes)

- “How AI Impacts Board Readiness for Oversight of Cybersecurity and AI Risks” (National Association of Corporate Directors)

3 - CSA releases new security framework for AI systems

Now that C-level executives and the board are paying attention, organizations need an AI game plan. A new Cloud Security Alliance AI playbook could be a useful starting point.

The CSA’s “Artificial Intelligence Controls Matrix,” published in July, is described as a vendor-agnostic framework for developing, deploying, and running AI systems securely and responsibly.

“The AI Controls Matrix bridges the gap between lofty ethical guidelines and real-world implementation. It enables all stakeholders in the AI value chain to align on their roles and responsibilities and measurably reduce risk,” Jim Reavis, CSA CEO and co-founder, said in a statement.

The matrix maps to standards such as ISO 42001 and the National Institute of Standards and Technology’s “Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile” (NIST AI 600-1).

It features 243 AI security controls across 18 domains, including:

- Audit and assurance

- Application and interface security

- Cryptography, encryption and key management

- Data security and privacy

- Governance, risk management and compliance

- Identity and access management

- Threat and vulnerability management

- Model security

For example, the “application and interface security” domain includes controls for secure development, testing, input and output validation, and API security. Meanwhile, the “threat and vulnerability management” domain covers penetration testing, remediation, prioritization, reporting and metrics, and threat analysis and modeling.
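One way a team might track its progress against a controls matrix like this is a simple per-domain tally. The sketch below is illustrative only: the `Control` class, the control IDs, and the implementation statuses are hypothetical placeholders, not entries from the CSA matrix. Only the domain names come from the list above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Control:
    control_id: str    # hypothetical identifier, not a real matrix control ID
    domain: str        # domain name taken from the list above
    implemented: bool  # hypothetical status, for illustration only


# Placeholder inventory; a real one would be built from the published matrix.
controls = [
    Control("EX-01", "Identity and access management", True),
    Control("EX-02", "Identity and access management", False),
    Control("EX-03", "Model security", True),
    Control("EX-04", "Data security and privacy", False),
]


def coverage_by_domain(items: list[Control]) -> dict[str, float]:
    """Return the fraction of implemented controls for each domain."""
    totals: dict[str, int] = defaultdict(int)
    done: dict[str, int] = defaultdict(int)
    for control in items:
        totals[control.domain] += 1
        if control.implemented:
            done[control.domain] += 1
    return {domain: done[domain] / totals[domain] for domain in totals}


coverage = coverage_by_domain(controls)
for domain, fraction in sorted(coverage.items()):
    print(f"{domain}: {fraction:.0%} implemented")
```

A tally like this only measures whether controls are marked as implemented. It says nothing about how well they work, so it would complement, not replace, the audit and assurance activities the matrix describes.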

For more information about AI data security, check out these Tenable resources:

- “Harden Your Cloud Security Posture by Protecting Your Cloud Data and AI Resources” (blog)

- “Tenable Cloud AI Risk Report 2025” (report)

- “How AI Can Boost Your Cybersecurity Program” (blog)

- “Tenable Cloud AI Risk Report 2025: Helping You Build More Secure AI Models in the Cloud” (on-demand webinar)

- “Know Your Exposure: Is Your Cloud Data Secure in the Age of AI?” (on-demand webinar)

4 - IBM: How the AI gold rush is costing you

Once you’ve adopted an AI security playbook, use it.

As IBM’s “Cost of a Data Breach Report 2025” found, companies are paying a pretty penny when they roll out AI systems without the proper usage governance and security controls.

“This year's results show that organizations are bypassing security and governance for AI in favor of do-it-now AI adoption. Ungoverned systems are more likely to be breached—and more costly when they are,” reads an IBM statement.

Check the stats:

- A whopping 63% of surveyed organizations lack AI governance policies.

- 13% suffered an AI-related security incident that resulted in a breach. Among them, 97% lacked proper AI access controls.

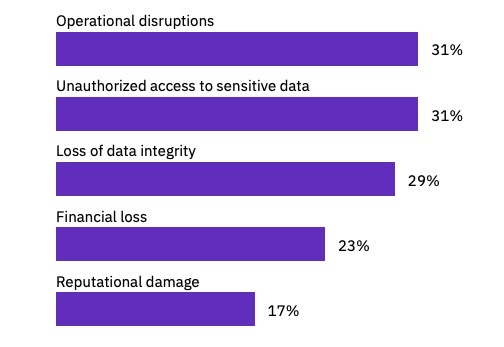

- 60% of those incidents led to compromised data and 31% led to operational disruption.

- Attackers weaponized AI in 16% of all data breaches.

The report, released in July, also calls out shadow AI – the unapproved use of AI by employees. This practice caused a breach at 20% of organizations.

And companies with high shadow AI rates experienced higher data breach costs and more compromised personal information and intellectual property.

In short: Cyber attackers are exploiting the lack of basic AI access controls and AI governance.

Impacts of security incidents on authorized AI

(From organizations that reported a security incident involving an AI model or application; more than one response permitted. Source: IBM’s “Cost of a Data Breach Report 2025,” July 2025)

The report is based on analysis of data breaches at 600 organizations. Almost 3,500 security and C-level executives were interviewed.
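As a rough illustration of scale, the sketch below applies the IBM percentages cited earlier in this section to the 600 organizations in the study. It assumes every percentage is measured against that same base of 600, and that the 97% applies to the 13% that had an AI-related breach. The report's actual sub-sample sizes may differ, so treat the counts as estimates.

```python
# Back-of-the-envelope counts from the IBM percentages cited in this section.
# Assumption (not from the report): all percentages use the 600 studied
# organizations as their base.
STUDIED_ORGS = 600

ai_breach_rate = 0.13         # suffered an AI-related breach
no_access_controls = 0.97     # share of those lacking proper AI access controls
shadow_ai_breach_rate = 0.20  # suffered a breach caused by shadow AI
no_governance_policy = 0.63   # lack AI governance policies

ai_breached_orgs = round(STUDIED_ORGS * ai_breach_rate)
breached_without_controls = round(ai_breached_orgs * no_access_controls)
# Share of ALL studied organizations that were breached and lacked controls.
joint_share = ai_breach_rate * no_access_controls
shadow_ai_orgs = round(STUDIED_ORGS * shadow_ai_breach_rate)
ungoverned_orgs = round(STUDIED_ORGS * no_governance_policy)

print(f"AI-related breach: ~{ai_breached_orgs} organizations")
print(f"...of which lacked AI access controls: ~{breached_without_controls} "
      f"(~{joint_share:.1%} of all studied organizations)")
print(f"Breach caused by shadow AI: ~{shadow_ai_orgs} organizations")
print(f"No AI governance policies: ~{ungoverned_orgs} organizations")
```

Seen this way, nearly every organization that had an AI-related breach was also missing basic AI access controls, which supports the report's point that ungoverned systems are the ones getting hit.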

To get more details, check out:

- The report’s announcement

- The report’s home page

- The companion IBM blog “2025 Cost of a Data Breach Report: Navigating the AI rush without sidelining security”

For more information about shadow AI, check out these Tenable resources:

- “Tackling Shadow AI in Cloud Workloads” (blog)

- “Introducing Tenable AI Exposure: Stop Guessing, Start Securing Your AI Attack Surface” (blog)

- “Mitigating AI-Related Security Risks: Insights and Strategies with Tenable AI Aware” (on-demand webinar)

- “Security for AI: A Practical Guide to Enforcing Your AI Acceptable Use Policy” (blog)

- “Know Your Exposure: Is Your Cloud Data Secure in the Age of AI?” (on-demand webinar)

- “Cybersecurity Awareness Month Is for Security Leaders, Too” (blog)

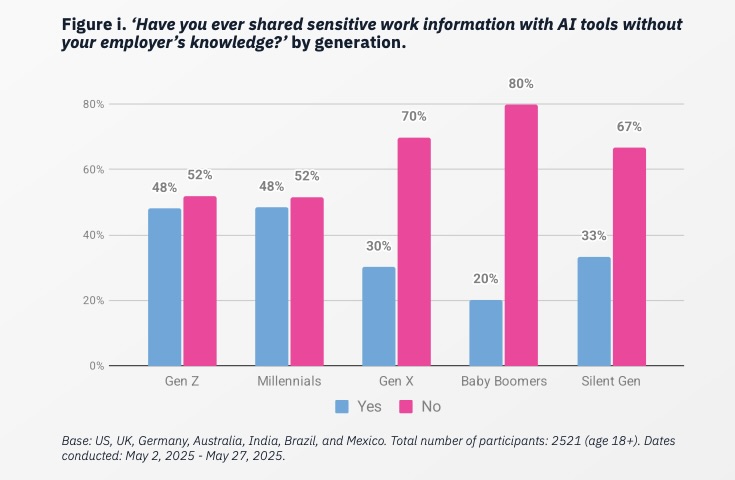

5 - Lacking AI training and oversight, employees input company data into AI tools

Lack of governance isn't just a high-level policy failure. It's happening at every desk.

Just how bad is the AI security situation at the user level? Check out these stats:

- AI use is widespread: 65% of people are using AI tools, up from 44% a year ago.

- Most people aren’t trained for it: A staggering 58% of employees have received zero training on AI security or privacy risks.

- Business secrets are spilling: 43% of employees admit to feeding company info into AI tools without their employers' authorization. We're talking internal documents (50%), financial data (42%) and even client data (44%).

Those numbers come from the report “Oh, Behave! The Annual Cybersecurity Attitudes and Behaviors Report 2025-2026,” which the National Cybersecurity Alliance (NCA) and CybSafe published in October, based on a survey of 7,000-plus respondents from Australia, Brazil, Germany, India, Mexico, the U.K., and the U.S.

“The rapid rise in AI usage is the double-edged sword to end all double-edged swords: while it boosts productivity, it also opens up new and urgent security risks, particularly as employees share sensitive data without proper oversight,” reads the report.

And it’s not like people are clueless. They worry about AI super-charging scams and cyber crime (63%), fake info (67%), security system bypassing (67%) and identity impersonation (65%). Yet, respondents’ faith in companies adopting AI responsibly and securely is only 45%.

In fact, the report states that shadow AI is “here to stay” and “becoming the new norm,” due to insufficient AI security awareness training.

“Without urgent action to close this gap, millions are at risk of falling victim to AI-enabled scams, impersonation, and data breaches,” Lisa Plaggemier, Executive Director of the NCA, said in a statement.

To learn more about AI security awareness training:

- “Cybersecurity leaders scramble to educate employees on generative AI threats” (Fortune)

- “Employers Train Employees to Close the AI Skills Gap” (Society for Human Resource Management)

- “Are Employees Receiving Regular Data Protection Training? Are They AI Literate?” (JD Supra)

- “Fit for AI - How to train your staff on how to use AI” (Robert Half)

- “Superagency in the workplace: Empowering people to unlock AI’s full potential” (McKinsey)

6 - Report: Cyber pros with AI security skills are in high demand

All of these AI challenges have a silver lining for cybersecurity professionals with AI security skills.

That’s the word from Robert Half’s “2026 Salary Guide,” published in October. If you know how to use AI for things like managing vulnerabilities, automating security, or hunting for threats, you're going to be “highly sought.”

“Many employers look for candidates who can work with AI programs or models, such as neural networks and natural language processing, for predicting and mitigating cyber risks,” Robert Half wrote in an article about the guide titled “What to Know About Hiring and Salary Trends in Cybersecurity.”

Cyber hiring managers are also eager for candidates with AI-related certifications, like Microsoft’s AI-900 and Google Cloud’s Machine Learning Engineer.

Of course, other skills still shine:

- Cloud security

- Data security

- DevSecOps

- Ethical hacking

- Risk management

To get more details, check out:

- Robert Half’s full “2026 Salary Guide”

- The report's section on technology salaries, where you can find the cybersecurity salary ranges for various roles

- The announcement “Robert Half Releases 2026 Salary Guide Highlighting Key Compensation Trends Amid a Complex Job Market”

Juan Perez

Senior Content Marketing Manager

Juan has been writing about IT since the mid-1990s, first as a reporter and editor, and now as a content marketer. He spent the bulk of his journalism career at International Data Group’s IDG News Service, a tech news wire service where he held various positions over the years, including Senior Editor and News Editor. His content marketing journey began at Qualys, with stops at Moogsoft and JFrog. As a content marketer, he's helped plan, write and edit the whole gamut of content assets, including blog posts, case studies, e-books, product briefs and white papers, while supporting a wide variety of teams, including product marketing, demand generation, corporate communications, and events.
