DevOpsDays Philadelphia 2025 covered AI security, secrets management, runtime security, and resilience, emphasizing the importance of dynamic security control loops. 2025-10-06 14:00:01 Author: securityboulevard.com

Philadelphia might make you think of cheesesteaks, but did you know it is the mural capital of the world? You can walk for blocks and see entire neighborhoods stitched together by paint, story, and community. That visual language is a fitting metaphor for what modern security demands. We are no longer guarding a single wall; we are curating a system of interconnected surfaces and signals. It made "The City of Brotherly Love" a perfect backdrop for around 150 developers, DevOps professionals, and other IT practitioners to get together and talk about how we build and secure that varied landscape we call our web applications at DevOpsDays Philadelphia 2025.

Over two days in a single track, we listened to sessions that covered AI in DevOps and governance, secrets and non-human identities, runtime security and observability, resilience over perfection, GitOps at scale, and alerting hygiene. In addition to the planned content, DevOpsDays features Open Spaces, where participants hold group discussions that follow a simple model. These conversations are some of the best chances to ask questions of our peers and share our experiences working in tech.

Here are just a few highlights from this year's DevOpsDays Philadelphia.

Intent As Attack Surface

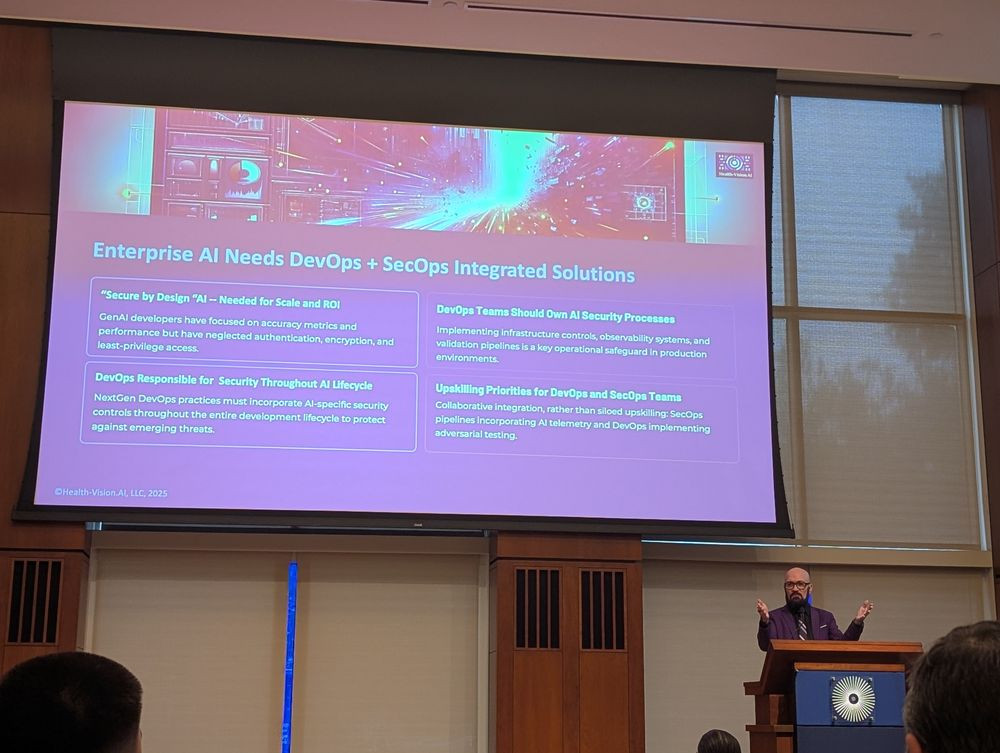

In the session "Cybersecurity Meets AI: Roadmap for Next Gen Skills," led by Brian M. Green, Chief AI Ethics Officer & Strategist at Health-Vision.AI, the focus was squarely on AI security and the operational risks associated with agentic automation.

When an AI can plan, act, and adapt, the prompt layer stops being a suggestion and becomes code, which means it inherits all the responsibilities of code: version control, policy enforcement, auditability, and rollback. He laid out the threat surface in human terms: identity and access management for machines and models, prompt inputs that can be adversarial, a reasoning layer that can hallucinate, and an execution surface that can touch real systems.

That framing matters because it recasts LLM vulnerabilities as governance problems, not just model problems. If the AI is a non-human identity in your environment, then privileged access misuse and credential exposure look like familiar IAM issues, not magic. LLMOps, in that view, is simply DevSecOps for a new kind of actor.

The takeaways were pragmatic. Treat prompts as code artifacts. Make policy enforcement explicit. Keep humans in the loop when the risk warrants it. Use AI red teaming and adversarial inputs to test assumptions. Build policy as code for AI pipelines the same way we did for CI, and assume shadow AI will appear until you surface it with security observability. None of that is a silver bullet; it is discipline. The discipline is what turns AI security from panic into a practice.
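As a rough illustration of "policy as code" for an agent, here is a minimal Python sketch. It is not from the talk: the `Action` type, the `POLICY` table, the agent name `deploy-bot`, and the tool names are all hypothetical. The idea it shows is that the rules live in a versionable artifact, unknown agents are denied by default, and riskier tools are routed to a human.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    agent: str   # the non-human identity requesting the action
    tool: str    # the capability it wants to use
    target: str  # what the tool would touch


# Hypothetical policy table. In practice this would live in version
# control next to the prompts it governs, and be reviewed like code.
POLICY = {
    "deploy-bot": {
        "allowed_tools": {"read_logs", "open_pull_request"},
        "needs_human_approval": {"open_pull_request"},
    },
}


def evaluate(action: Action, policy: dict = POLICY) -> str:
    """Return 'allow', 'review', or 'deny' for a proposed agent action."""
    rules = policy.get(action.agent)
    if rules is None:
        return "deny"  # unknown agents (possible shadow AI) are denied by default
    if action.tool not in rules["allowed_tools"]:
        return "deny"
    if action.tool in rules["needs_human_approval"]:
        return "review"  # keep a human in the loop for riskier tools
    return "allow"
```

Calling `evaluate(Action("deploy-bot", "read_logs", "payments-service"))` under this table returns `"allow"`, while the same request from an agent that is not listed returns `"deny"`.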

Secrets Are Still The Keys To The Kingdom

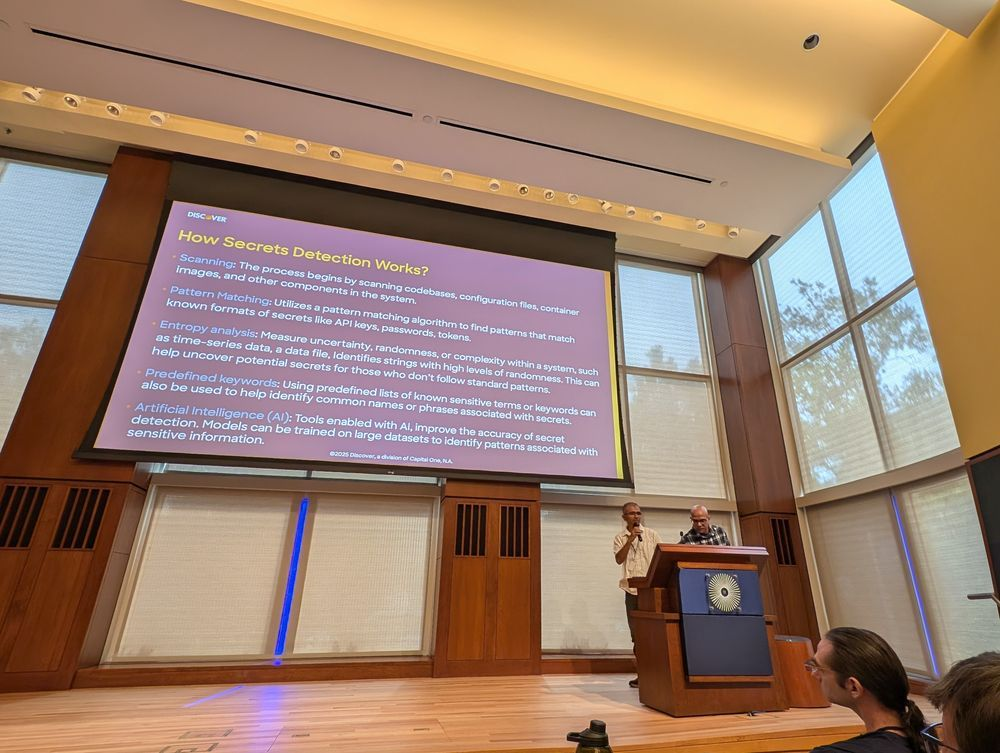

In "Secure Coding: Automate secret management and security scanning," from Ehfaj Khan, Expert Application Engineer, and Ankur Bansal, Expert Application Engineer, both from Discover Financial Services, the conversation focused on basics that remain anything but basic.

Secrets sprawl continues to be a leading operational risk, and it is not limited to source code. Delivery pipelines, logs, containers, and productivity tools all collect credentials for non-human identities in plain text. The presenters walked through how secrets detection works, covering pattern matching, entropy analysis, and the use of machine learning to cut false positives. They also showed the consumption flow most organizations struggle to operationalize: mapping a machine identity to a vault object, ensuring the right application identity can fetch it, and rotating or revoking when something leaks.
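To make the first two detection techniques concrete, here is a minimal Python sketch that combines pattern matching with a Shannon entropy check. It is not the presenters' tooling. The two rules and the 3.5 bits-per-character threshold are assumptions chosen for illustration; production scanners use much larger, tuned rule sets.

```python
import math
import re
from collections import Counter

# Hypothetical rules for illustration only.
PATTERNS = {
    # Shape of an AWS access key ID: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A loose rule: a secret-looking name assigned a quoted value of 8+ characters.
    "generic_assignment": re.compile(
        r"\b(?:api_key|secret|token|password)\b\s*[:=]\s*['\"]([^'\"]{8,})['\"]",
        re.IGNORECASE,
    ),
}

ENTROPY_THRESHOLD = 3.5  # assumed cut-off in bits per character, not a standard value


def shannon_entropy(text: str) -> float:
    """Return the Shannon entropy of text in bits per character."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def scan_line(line: str) -> list[tuple[str, str]]:
    """Return (rule_name, candidate) pairs for secret-like strings in a line."""
    findings = []
    for name, pattern in PATTERNS.items():
        for match in pattern.finditer(line):
            # Use the captured value when the rule has a group, else the whole match.
            candidate = match.group(1) if match.groups() else match.group(0)
            # Only the loose rule is filtered by entropy, to drop obvious placeholders.
            if name == "generic_assignment" and shannon_entropy(candidate) < ENTROPY_THRESHOLD:
                continue
            findings.append((name, candidate))
    return findings
```

In this sketch the entropy check only filters the loose rule, since a tightly shaped pattern such as the access key ID is already a strong signal on its own. Running `scan_line` over the added lines of a pull request diff is one way such a check could give feedback before merge.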

The duo framed secrets security as a continuous practice, not a one-time cleanup. Detection in pull requests reduces blast radius. Scanning registries and images reduces time to discovery. You can hear the control loop taking shape. It becomes a feedback system: find, route, fix, and then verify that the fix held. In a world where agentic automation can deploy changes at high speed, this loop protects trust boundaries that human reviewers can no longer police by hand.

Run The Code You Actually Have, Not The Code You Imagine

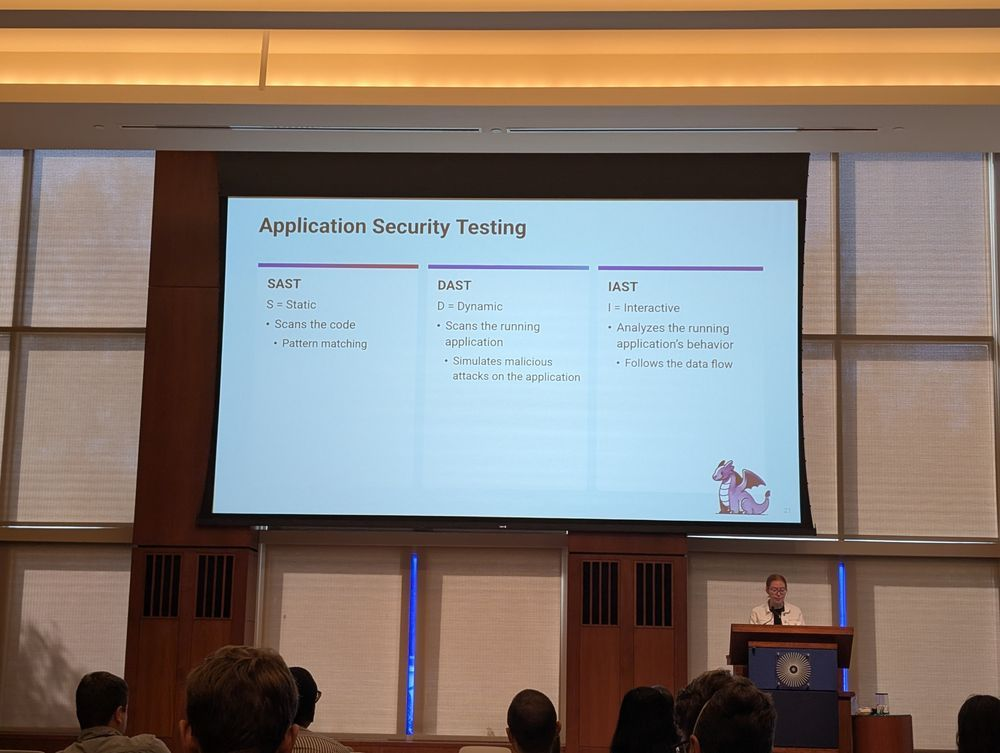

In "Follow The Trace: How Traditional AppSec Tools Have Failed Us," Kennedy Toomey, Technical Security Advocate at Datadog, took aim at a painful reality. We ask development teams to stop everything for static findings that may never execute, then we wonder why triage stalls. Runtime tracing and IAST change that dynamic. By observing live execution paths, you get context: which inputs flowed where, which services were touched, and which calls left the trust boundary. An SSRF payload that never reaches a risky endpoint is not the same risk as one that does. When you trace, you prioritize with evidence, not guesses.

Kennedy discussed how runtime context lowers friction between security and engineering, since teams can discuss an observed path rather than debate a hypothetical. This converts security observability into a shared language. It also aligns with the AI governance point, because when agents start to write or wire code, the intent gets messy. Observability at runtime becomes the last honest witness. Instrument the system so it tells you where it is unsafe, then decide what to fix first.
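The prioritization idea can be sketched in a few lines of Python. This is an assumption-laden toy, not any vendor's API: the `Finding` type, the location strings, and the set of executed locations (which would come from whatever tracing tool is in use) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule: str      # e.g. "ssrf"
    location: str  # code location the static tool flagged
    severity: int  # higher is worse


def prioritize(findings: list[Finding], executed_locations: set[str]) -> list[Finding]:
    """Order findings so code paths observed at runtime come first, then by severity."""
    return sorted(
        findings,
        # False sorts before True, so locations seen in traces lead the list.
        key=lambda f: (f.location not in executed_locations, -f.severity),
    )
```

With this ordering, a severity 9 SSRF finding on a path that traces show executing is triaged ahead of a severity 10 finding in code that was never observed running. The unobserved finding is deprioritized rather than dropped, since an absence of traces is not proof that a path is unreachable.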

Resilience Over Perfection

In his session "Ablative Resilience," Damion Waltermeyer, DevSecOps Consultant at Craftedsecure and organizer of Philly DevOps, said, "We cannot promise invincibility, but our systems must survive contact with the enemy." The underlying philosophy of ablative technology is that you can discard any misbehaving or infected systems, treating infrastructure as expendable.

If a service behaves oddly, kill it and redeploy from a known good state. Destroy keys at the first sign of trouble. Keep backups and warm standbys so your mean time to clean is shorter than the attacker’s mean time to pivot. The metaphor comes from aerospace: materials that burn away on reentry, protecting what must endure by sacrificing what can be rebuilt.

This is not a license for chaos, but a strategy that assumes incidents will happen, and that your speed of rotation is a core control. If a token is compromised, rotation beats argument. If a runtime path looks wrong, replacement beats debate. The goal is not zero incidents, but the rapid containment that preserves customer trust and business continuity.

A Focus On Intent, Execution, And Containment

Throughout all the talks, a few recurring themes emerged. Security is shifting from static control points to dynamic control loops. AI governance defines intent boundaries for agentic automation, while secrets management provides the keys and ledger that constrain what those agents can reach. Model the AI as a non-human identity, then apply least privilege, and verify trust boundaries. The difference is speed, so your loop must keep pace, or you drift into shadow AI.

Execution: Evidence Over Assumptions

Static analysis provides early feedback; however, without runtime context, it becomes noise. Let runtime tracing confirm or refute findings so teams prioritize with evidence. Show real call chains and touched services to move the conversation from blame to fix, which is how DevSecOps builds trust across disciplines.

Recovery speed is credibility. Rotate keys in minutes, rebuild from known good images, and cut over to warm standbys without a war room. Ablative resilience treats infrastructure as expendable, discards the suspect piece, restores the system, and limits the attacker’s window of opportunity.

One Loop To Start

Verify intent before execution with policy as code for agents and pipelines, observe runtime to confirm or contradict intent, rotate secrets and rebuild on deviation, then feed lessons back into prompts, policies, and playbooks.
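One pass of that loop might look like the following Python sketch. Everything here is a placeholder: `is_allowed` stands in for a policy check, `observe` for a runtime tracing source, and `contain` for whatever rotates secrets and rebuilds. None of these names come from the talks.

```python
from typing import Callable, Iterable


def control_loop_step(
    planned: set[str],
    is_allowed: Callable[[str], bool],
    observe: Callable[[], Iterable[str]],
    contain: Callable[[set[str]], None],
    lessons: list[str],
) -> bool:
    """Run one verify/observe/contain pass; return True if nothing deviated."""
    # 1. Verify intent before execution.
    denied = {action for action in planned if not is_allowed(action)}
    if denied:
        lessons.append(f"blocked before execution: {sorted(denied)}")
        return False

    # 2. Observe what actually happened at runtime.
    observed = set(observe())

    # 3. On deviation from the plan, contain (rotate secrets, rebuild).
    deviation = observed - planned
    if deviation:
        contain(deviation)
        # 4. Feed the lesson back for prompts, policies, and playbooks.
        lessons.append(f"contained unplanned actions: {sorted(deviation)}")
        return False
    return True
```

The sketch keeps each stage as an injected callable so that the policy engine, the tracing source, and the rotation tooling can be swapped independently.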

Policies are versioned like code, detections map to non-human identities and their reach, observability shows cross-service paths without archaeology, and the platform can discard and restore on demand. Align the nouns: machine identities, privileges, sources of truth, and timestamps.

A Culture Of Guardrails, Not Slogans

Write down how you trust, then automate it. Prompts as code create auditable artifacts. Secrets detection in pull requests creates fast feedback. Runtime tracing turns fear into evidence. Ablative resilience makes posture measurable. The novelty is speed and scale; the practices are familiar, and that is good news.

Closing The Loop On Risk

Philadelphia’s murals work because thousands of small choices add up to something coherent. Security in an AI-shaped DevOps world needs the same patience, a system that is always verifying, always learning, always ready to repaint the section that cracked. Your author was able to give a talk that inadvertently echoed some of the other speakers and drew some of the same conclusions when it comes to non-human identities and secrets security.

A few bits of wisdom really stuck with me as I left the event. If you want a place to begin, pick one agent or one critical prompt and put it under governance with policy as code. Connect your secrets detections to owners and non-human identities so that fixes find the right hands. Turn on one runtime trace that can confirm or deny a high-severity path. Run one discard and restore drill until it feels boring. This is how control loops get built: small, real, and repeatable.

*** This is a Security Bloggers Network syndicated blog from GitGuardian Blog - Take Control of Your Secrets Security authored by Dwayne McDaniel. Read the original post at: https://blog.gitguardian.com/devops-days-philly-2025/
