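Summary: This article examines the shift in network security from traditional IP-based access control lists (ACLs) to identity-based microsegmentation and the zero-trust model. Traditional approaches break down in cloud and containerized environments, whereas the newer approach binds policy to identity and intent to achieve dynamic, resilient security, and it emphasizes mTLS, service identities and cloud-platform tooling as the means of implementation.

2025-10-3 08:43:46 Author: securityboulevard.com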

The evolution from perimeter ACLs to identity-aware microsegmentation is the most critical shift in network security. Here’s how to build rules that power true zero-trust.

For decades, we spoke firewall in five fields — source internet protocol (IP), destination IP, protocol, source port and destination port. It worked when servers were pets and perimeters were real. Today, workloads are ephemeral, autoscaled, containerized and scattered across clouds. IPs churn; east-west traffic dominates; the thing we actually protect today is data, not subnets.

Who is this for? Security leaders, network and platform engineers and compliance owners in environments with legacy segmentation or static allowlists — common in healthcare, operational technology (OT)/industrial control systems (ICS) and government — who need to cut east-west risk and satisfy auditors without ripping and replacing. If that’s you, here’s how to translate brittle IP rules into resilient, identity-aware policies.

Identity-based microsegmentation flips the unit of trust from ‘where’ to ‘who’. Instead of blessing 10.0.5.22, we bless payment-service in production calling user-db on port 5432 — no matter where those land. This is not a feature. It’s a philosophy.

Rick Howard — Board President & Cofounder of the Cybersecurity Canon, former CSO of Palo Alto Networks, CMU CISO-Executive Program faculty and author of ‘Cybersecurity First Principles’ (Wiley, 2023) — puts it plainly:

“I believe that the absolute cybersecurity first principle, regardless of how large your organization is and regardless of which vertical your organization falls into, is to reduce the probability of a material cyber event in the next business cycle. If that’s true, one strategy that you should consider is zero-trust; reduce access to everything in your digital environment based on need to know. If you don’t need access to do your job, then you don’t need access.”

Why IP-Centric Rules Keep Failing

At enterprise scale, while IPs are still critical for routing, telemetry and incident triage, they are an unstable proxy for trust. They widen the blast radius, lengthen dwell time and accrue compliance debt — the opposite of zero-trust.

- Brittle: Access control lists (ACLs) break during a re-IP process.

- Over-Permissive: To stop the breakage, teams open /16s and ‘any-any’ rules, which become lateral movement lanes.

- Blind: An address says nothing about the workload, user or intent.

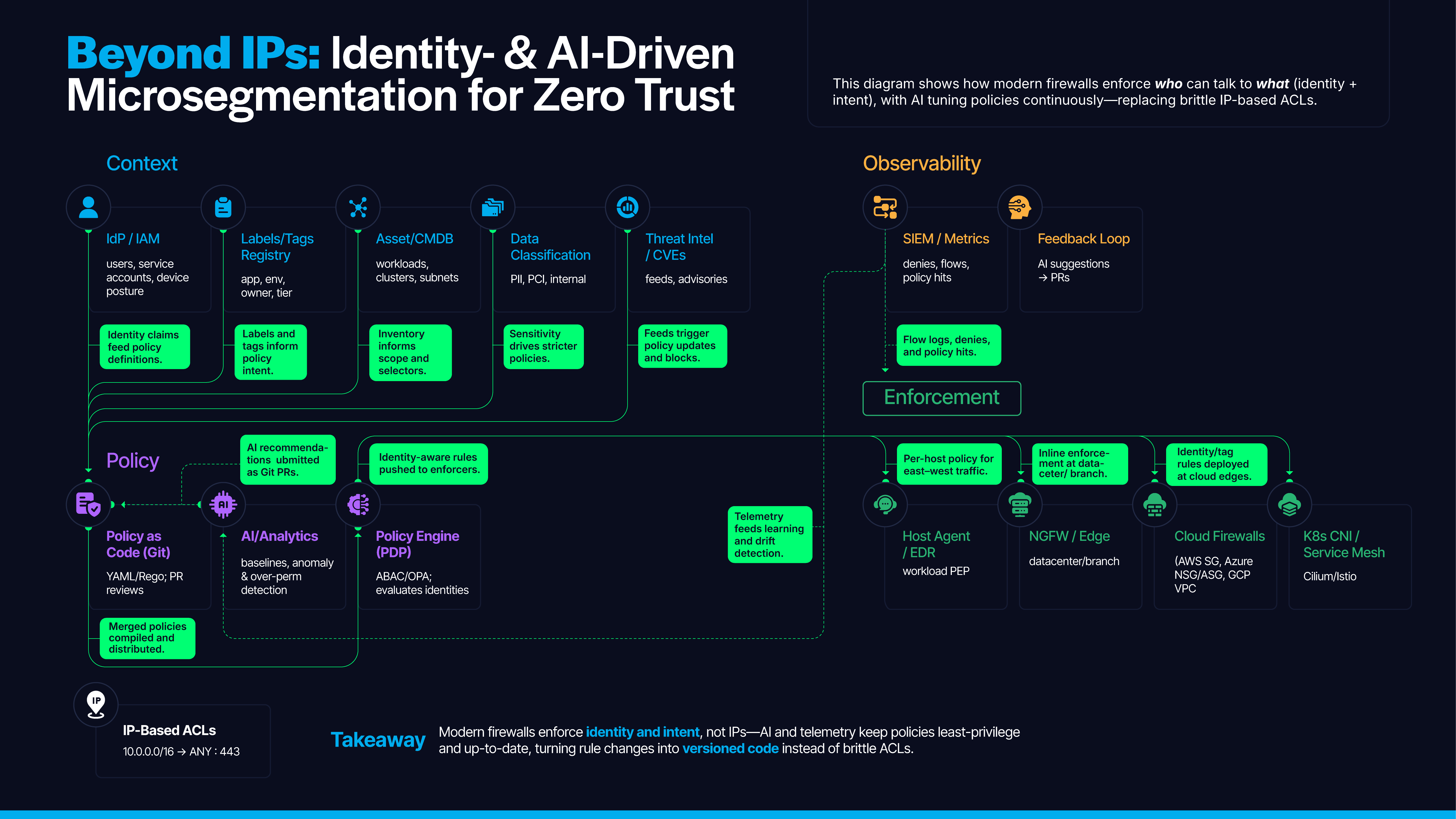

Figure 1: Beyond IPs illustrates the evolution of microsegmentation from traditional network-based controls (IP addresses) to a modern zero-trust model

The New Grammar of Policy: Identity + Intent

Bind access to attested identities and explicit intent such that rules follow workloads across clouds, survive re-IP events, enforce least privilege from Layer 3 (L3) to Layer 7 (L7) and emit audit-ready evidence by default.

Think of a modern rule as:

source.identity → destination.identity: action on port/protocol with optional L7 constraints

Examples of identity attributes are as follows:

- Workload: app=payment-service, tier=frontend, env=prod

- User/robot: principal=spiffe://corp/services/payment, [email protected]

- Device/site: zone=cctv, office=sjc-2
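The rule grammar above can be sketched in a few lines of Python. This is a minimal illustration rather than any vendor's policy engine: the `Rule` class and the `matches` and `evaluate` helpers are hypothetical names, selectors are plain label dictionaries, and anything that no allow rule matches is denied.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    source: dict        # labels the caller must carry
    destination: dict   # labels the callee must carry
    port: int
    protocol: str = "TCP"
    action: str = "allow"


def matches(selector: dict, labels: dict) -> bool:
    """A selector matches when every key/value pair in it appears in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def evaluate(rules, src_labels, dst_labels, port, protocol="TCP") -> str:
    """Return the action of the first matching rule, or 'deny' by default."""
    for rule in rules:
        if (matches(rule.source, src_labels)
                and matches(rule.destination, dst_labels)
                and rule.port == port
                and rule.protocol == protocol):
            return rule.action
    return "deny"


# Hypothetical rule: payment-service in prod may call user-db in prod on 5432.
RULES = [
    Rule(source={"app": "payment-service", "env": "prod"},
         destination={"app": "user-db", "env": "prod"},
         port=5432),
]

payment = {"app": "payment-service", "env": "prod", "tier": "backend"}
checkout = {"app": "checkout", "env": "prod"}
user_db = {"app": "user-db", "env": "prod"}

print(evaluate(RULES, payment, user_db, 5432))   # allow
print(evaluate(RULES, checkout, user_db, 5432))  # deny
```

No IP address appears anywhere in the decision, which is the point: the workload can land on any node or subnet and the outcome is unchanged.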

Core Building Blocks (Zero-Trust Baseline)

Zero-trust lands when identity is universal, encryption proves it at every hop and policy executes where the workload lives.

“The supporting key and essential tactic for zero-trust is identity and access management (IAM). You can’t do zero-trust without knowing exactly who wants access, what device is trying to connect and which piece of software is trying to act in the environment. That’s identity. But don’t forget the access part either. What access permissions do each of those entities have? Identity and access go together like peas and carrots, chocolate and peanut butter and salt and pepper. You can do them separately, but why would you want to?” — Rick Howard

In practice: Use mutual Transport Layer Security (mTLS) to authenticate peers, stable service identities (labels/tags/secure production identity framework for everyone [SPIFFE]) to target policy and in-cluster/cloud enforcers ensuring that rules follow services, survive re-IP events and emit audit-ready evidence.

- mTLS Everywhere (Workload/Workload and Service-to-User): Proves who’s talking.

- Consistent Identity: Kubernetes (K8s) labels, cloud tags, service accounts (SAs), SPIFFE IDs.

- Policy Engines Close to Workloads: K8s container network interface (CNI) plugins (Cilium/Calico), service mesh (Istio), cloud firewalls (Amazon Web Services Security Groups [AWS SG], Azure Network Security Group [NSG]/Application Security Group [ASG], Google Cloud Platform Virtual Private Cloud [GCP VPC] firewall rules).

- Policy as Code: Versioned, reviewed, tested and deployed via continuous integration/continuous deployment (CI/CD).

- Rich Telemetry: Logs show which identity was allowed/denied, not just an IP.

Hands-On: Identity Rules in the Cloud

Turn zero-trust into deployable controls across AWS/Azure/GCP by binding access to service identities rather than classless inter-domain routing (CIDR) blocks, so that policies survive re-IP, autoscaling and audits.

CISO Note (Why CIDRs are Brittle and Getting Worse)

CIDR rules describe ‘where’, not ‘who’ — and in the cloud, that ‘where’ churns constantly. Autoscaling, containers and elastic network interfaces (ENIs) re-IP workloads; new accounts/VPCs bring overlapping RFC1918 ranges; network address translation (NAT)/proxies collapse many apps behind one egress IP; managed services rotate addresses; dual-stack (IPv6) expands the surface; and ‘one-off’ /32 exceptions snowball into exception creep. The result is wider CIDRs to ‘keep things working’, a bigger blast radius and endless drift.

Why SG-to-SG (Identity) Fixes This

You express who→who on what — membership in a security group is the identity. Instances join or leave, and the rule doesn’t change, ensuring that policies survive re-IP, autoscaling and rolling upgrades — shrinking lateral movement without hand-editing CIDRs. (Use within a VPC and supported peering scenarios.)
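The contrast between a CIDR rule and a membership rule can be shown with a small simulation. This is a sketch under stated assumptions: the addresses, the group name `sg-batch-dev` and the helper functions are hypothetical, and real security groups are evaluated by the cloud provider, not by code like this.

```python
import ipaddress

# Legacy rule: bless one address. Identity rule: bless membership in a group.
CIDR_ALLOW = [ipaddress.ip_network("10.0.5.22/32")]
GROUP_ALLOW = {"sg-payment-prod"}

# The "fix" teams often reach for after a re-IP breaks the /32 rule.
WIDENED = [ipaddress.ip_network("10.0.0.0/16")]


def cidr_allows(src_ip, allowed=CIDR_ALLOW):
    """True when the source address falls inside any allowed network."""
    addr = ipaddress.ip_address(src_ip)
    return any(addr in net for net in allowed)


def group_allows(src_groups, allowed=GROUP_ALLOW):
    """True when the source belongs to at least one allowed group."""
    return bool(allowed & set(src_groups))


# The same payment workload before and after being rescheduled.
before = {"ip": "10.0.5.22", "groups": ["sg-payment-prod"]}
after = {"ip": "10.0.9.140", "groups": ["sg-payment-prod"]}
# An unrelated workload that happens to live in the same /16.
stranger = {"ip": "10.0.200.7", "groups": ["sg-batch-dev"]}

print(cidr_allows(before["ip"]), cidr_allows(after["ip"]))          # True False
print(group_allows(before["groups"]), group_allows(after["groups"]))  # True True
print(cidr_allows(stranger["ip"], WIDENED))                         # True
print(group_allows(stranger["groups"]))                             # False
```

The /32 rule breaks on re-IP, the widened /16 admits the unrelated workload, and the membership rule does neither.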

AWS: Security Groups by Identity of Other Workloads (SG-to-SG)

Instead of CIDRs, reference the other service’s SG.

Terraform:

    # DB instances belong to this SG.
    # The tags help identify the application and environment.
    resource "aws_security_group" "db" {
      name   = "sg-user-db-prod"
      vpc_id = var.vpc_id
      tags   = { app = "user-database", env = "prod" }
    }

    # Payment service SG: attach it to ECS tasks, EC2 instances or Auto Scaling group nodes.
    # Tags make it easy to target or filter resources in policies and reporting.
    resource "aws_security_group" "pay" {
      name   = "sg-payment-prod"
      vpc_id = var.vpc_id
      tags   = { app = "payment-service", env = "prod" }
    }

    # Identity-based allow: only "pay" may reach "db" on TCP 5432.
    # The source is the payment SG itself rather than a CIDR, so access follows
    # group membership (least privilege based on identity).
    resource "aws_security_group_rule" "pay_to_db" {
      type                     = "ingress"
      security_group_id        = aws_security_group.db.id
      from_port                = 5432
      to_port                  = 5432
      protocol                 = "tcp"
      source_security_group_id = aws_security_group.pay.id
      # AWS limits rule descriptions to a restricted ASCII character set,
      # so "to" is used here instead of an arrow.
      description              = "prod payment-service to prod user-db (5432)"
    }

Why it’s Identity-Based: Membership in sg-payment-prod is the identity. New IPs automatically inherit access; random app subnets do not.

Add egress allowlists for SaaS: Restrict HR traffic to the vendor’s fully qualified domain name (FQDN) using AWS Network Firewall or a proxy; log all activities to a security information and event management (SIEM) system.

Figure 2: Identity, Not IP: The New Firewall Rulebook contrasts the traditional method of filtering traffic based on IP addresses and ports with a modern approach that uses identity context (user, device, application) to make smarter, more dynamic access decisions.

Azure: NSG + ASG

Most teams still write NSG rules against IPs/subnets, which crumble under Azure Virtual Machine Scale Sets (VMSS)/Azure Kubernetes Service (AKS) churn, peered Azure virtual networks (VNets) and overlapping RFC1918 ranges, breeding brittle, over-permissive exceptions. ASGs help close the gap by giving NSGs identity-based targets: Group network interface cards (NICs) by role (web/API/db) and author ASG→ASG rules that follow workloads automatically, reduce IP sprawl and enforce least-privilege east-west traffic.

Group NICs into named ASGs and write rules between ASGs — no IP math required.

    # Create ASGs
    az network asg create -g prod-net -n asg-payment-prod
    az network asg create -g prod-net -n asg-userdb-prod

    # NSG rule: payment → userdb 5432
    az network nsg rule create \
      -g prod-net -n r-payment-to-db --nsg-name nsg-prod \
      --priority 200 --direction Inbound --access Allow --protocol Tcp \
      --destination-port-ranges 5432 \
      --source-asgs asg-payment-prod \
      --destination-asgs asg-userdb-prod \
      --description "prod payment-service → prod user-db"

Attach NICs to ASGs in VMSS/AKS node pools and let the rule follow the identity.

GCP: VPC Firewall With Service Account Identity

You can scope rules to source/target SAs (workload identity), not IPs.

    # Replace each [email protected] placeholder with the relevant service-account email.
    gcloud compute firewall-rules create pay-to-db-allow \
      --network prod-vpc \
      --action allow --rules tcp:5432 \
      --source-service-accounts [email protected] \
      --target-service-accounts [email protected] \
      --direction INGRESS \
      --description "prod payment → prod user-db (SA→SA)"

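Result: Only virtual machine (VM) instances running as those SAs can communicate on 5432; instance IPs don’t matter. VPC firewall rules match the service account attached to the instance, so pods on GKE nodes are seen as their node’s SA and need a network policy or mesh for pod-level identity.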

Hands-On: Identity Rules in Kubernetes

K8s breaks IP-centric policy: pods reschedule, services hide IPs and clusters churn, so network ACLs drift and become over-permissive. Identity-based rules (labels, namespaces, SAs + CNI/mesh enforcement) close the gap by binding access to who the workload is. This makes policies follow pods across nodes, survive re-IP and enforce least-privilege east-west traffic.

CiliumNetworkPolicy (L3/L4 + optional L7)

    apiVersion: cilium.io/v2
    kind: CiliumNetworkPolicy
    metadata:
      name: allow-payment-to-db
    spec:
      endpointSelector:
        matchLabels:
          app: user-database
          env: prod
      ingress:
        - fromEndpoints:
            - matchLabels:
                app: payment-service
                env: prod
          toPorts:
            - ports:
                - port: "5432"
                  protocol: TCP

Add L7 HTTP constraints (for services speaking HTTP):

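    # Ingress rule fragment: place it under spec.ingress of a CiliumNetworkPolicy.
    - fromEndpoints:
        - matchLabels:
            app: checkout
            env: prod
      toPorts:
        - ports:
            - port: "8080"
              protocol: TCP
          # "rules" is a sibling of "ports" within the same toPorts entry.
          rules:
            http:
              - method: "POST"
                path: "^/api/v1/orders$"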

Istio AuthorizationPolicy (mTLS + Workload Principal)

    apiVersion: security.istio.io/v1
    kind: AuthorizationPolicy
    metadata:
      name: pay-to-db
      namespace: prod
    spec:
      selector:
        matchLabels:
          app: user-database
      action: ALLOW
      rules:
        - from:
            - source:
                # Istio principals are written as <trust-domain>/ns/<namespace>/sa/<service-account>,
                # without the spiffe:// scheme.
                principals: ["corp/ns/prod/sa/payment-sa"]
          to:
            - operation:
                ports: ["5432"]

Tip: Enable mesh-wide STRICT mTLS to ensure the principal is cryptographically verified.
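Istio issues workload identities as SPIFFE IDs of the form spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>, while the `principals` field of an AuthorizationPolicy is written without the `spiffe://` scheme. The sketch below splits such an ID into its parts and derives the principal string. The function names are hypothetical, and the parser assumes the Kubernetes-style `ns/.../sa/...` path layout; other SPIFFE deployments may use different paths.

```python
from urllib.parse import urlparse


def parse_spiffe_id(spiffe_id: str) -> dict:
    """Split a Kubernetes-style SPIFFE ID into trust domain, namespace and SA."""
    parsed = urlparse(spiffe_id)
    if parsed.scheme != "spiffe" or not parsed.netloc:
        raise ValueError(f"not a SPIFFE ID: {spiffe_id!r}")
    parts = parsed.path.strip("/").split("/")
    # Assumed layout: ns/<namespace>/sa/<service-account>
    if len(parts) != 4 or parts[0] != "ns" or parts[2] != "sa":
        raise ValueError(f"unexpected SPIFFE path: {parsed.path!r}")
    return {
        "trust_domain": parsed.netloc,
        "namespace": parts[1],
        "service_account": parts[3],
    }


def istio_principal(spiffe_id: str) -> str:
    """Render the value expected in an AuthorizationPolicy principals list."""
    ident = parse_spiffe_id(spiffe_id)
    return (f"{ident['trust_domain']}/ns/{ident['namespace']}"
            f"/sa/{ident['service_account']}")


print(parse_spiffe_id("spiffe://corp/ns/prod/sa/payment-sa"))
print(istio_principal("spiffe://corp/ns/prod/sa/payment-sa"))
# corp/ns/prod/sa/payment-sa
```

A check like this is useful in troubleshooting: comparing the parsed identity from a peer certificate against the principal in the policy quickly shows whether a denial is a namespace, service-account or trust-domain mismatch.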

DevOps: Treat Policies as Code (and Test Them)

Manual policy changes introduce drift and break apps. Automation via your version control system (VCS)/CI platform of choice (e.g., GitHub Actions, GitLab CI, Bitbucket Pipelines, Azure DevOps or Jenkins) runs linting, Open Policy Agent (OPA) tests, dry-run applies, approvals and signed deploys, closing the gap between intent and enforcement.

GitHub Actions (example)

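    # Assumes kubeconform, conftest, kubectl and hubble are installed on the runner
    # and that cluster credentials are already configured.
    name: policy-ci
    on: [pull_request]
    jobs:
      test-and-apply:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - name: Lint Cilium/Istio policies
            run: kubeconform -strict -summary -ignore-missing-schemas ./policies
          - name: Policy unit tests (OPA)
            # --all-namespaces evaluates Rego packages other than the default "main".
            run: conftest test ./policies --policy ./rego --all-namespaces
          - name: Dry-run apply
            run: kubectl apply --server-side --dry-run=server -f ./policies
          - name: Canary verify (identity)
            # --since takes a duration; --to-label filters on destination labels.
            run: |
              hubble observe --since 30s --protocol http \
                --to-label "app=user-database,env=prod" \
                | grep -Eq "POST .*/api/v1/orders" && echo OK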

Rego snippet (Guardrail): Block policies that do not start with default-deny.

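    package policy.guardrails

    import rego.v1

    # specs_default_deny is not a CiliumNetworkPolicy field; it is kept as written
    # and stands in for however your pipeline flags a default-deny baseline.
    deny contains msg if {
        input.kind == "CiliumNetworkPolicy"
        not input.specs_default_deny
        msg := "CNP must implement default-deny baseline in namespace"
    }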
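The same guardrail idea can be sketched in Python against standard Kubernetes NetworkPolicy objects. Note the substitution: this uses the upstream NetworkPolicy API, not the Cilium CRD from the Rego snippet, because its default-deny form is well defined (an empty `podSelector`, `Ingress` in `policyTypes` and no ingress rules). The function names and sample manifests are hypothetical.

```python
def is_default_deny_ingress(policy: dict) -> bool:
    """True for a NetworkPolicy that selects every pod and allows no ingress."""
    if policy.get("kind") != "NetworkPolicy":
        return False
    spec = policy.get("spec", {})
    selects_all_pods = spec.get("podSelector") == {}
    # Kubernetes treats policyTypes as ["Ingress"] when the field is omitted.
    governs_ingress = "Ingress" in spec.get("policyTypes", ["Ingress"])
    has_no_allow_rules = not spec.get("ingress")
    return selects_all_pods and governs_ingress and has_no_allow_rules


def namespaces_missing_baseline(policies: list) -> list:
    """List namespaces that have policies but no default-deny baseline."""
    seen, covered = set(), set()
    for policy in policies:
        namespace = policy.get("metadata", {}).get("namespace", "default")
        seen.add(namespace)
        if is_default_deny_ingress(policy):
            covered.add(namespace)
    return sorted(seen - covered)


POLICIES = [
    {"kind": "NetworkPolicy",
     "metadata": {"name": "default-deny", "namespace": "prod"},
     "spec": {"podSelector": {}, "policyTypes": ["Ingress"]}},
    {"kind": "NetworkPolicy",
     "metadata": {"name": "allow-pay-to-db", "namespace": "prod"},
     "spec": {"podSelector": {"matchLabels": {"app": "user-database"}},
              "ingress": [{"from": [{"podSelector": {
                  "matchLabels": {"app": "payment-service"}}}]}]}},
    {"kind": "NetworkPolicy",
     "metadata": {"name": "allow-web", "namespace": "staging"},
     "spec": {"podSelector": {"matchLabels": {"app": "web"}},
              "ingress": [{}]}},
]

print(namespaces_missing_baseline(POLICIES))  # ['staging']
```

Run in CI, a non-empty result would fail the build, which mirrors the intent of the `deny` rule above.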

Migration Playbook (90-Day Path)

Use this three-phase, 90-day plan to migrate from brittle IP ACLs to identity-based policies: discover and label, simulate and stage, then enforce.

Days 0–30: Discover and Label

- Turn on flow logs/Hubble; map ‘who talks to whom’.

- Fix your label/tag taxonomy: app, tier, env, owner.

- Pilot mTLS in non-production environments.
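Mapping ‘who talks to whom’ amounts to joining flow records against an inventory of labeled workloads. The sketch below assumes a simplified, hypothetical flow record (`src_ip`, `dst_ip`, `dst_port`) and a hand-built IP-to-identity inventory; real VPC flow logs and Hubble output carry more fields and need their own parsing.

```python
from collections import Counter

# Hypothetical inventory: current IP -> workload identity (app@env).
INVENTORY = {
    "10.0.5.22": "payment-service@prod",
    "10.0.9.140": "payment-service@prod",
    "10.0.7.10": "user-db@prod",
}

# Hypothetical, already-parsed flow records.
FLOWS = [
    {"src_ip": "10.0.5.22", "dst_ip": "10.0.7.10", "dst_port": 5432},
    {"src_ip": "10.0.9.140", "dst_ip": "10.0.7.10", "dst_port": 5432},
    {"src_ip": "10.0.33.8", "dst_ip": "10.0.7.10", "dst_port": 5432},
]


def talk_map(flows, inventory):
    """Count flows per (source identity, destination identity, port) edge."""
    edges = Counter()
    for flow in flows:
        src = inventory.get(flow["src_ip"], "unlabeled")
        dst = inventory.get(flow["dst_ip"], "unlabeled")
        edges[(src, dst, flow["dst_port"])] += 1
    return edges


for (src, dst, port), count in talk_map(FLOWS, INVENTORY).most_common():
    print(f"{src} -> {dst}:{port}  flows={count}")
```

Two different source IPs collapse into one `payment-service@prod` edge, which becomes a candidate identity rule, while the `unlabeled` edge highlights a workload that still needs tagging before policies can be written for it.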

Days 31–60: Simulate and Stage

- Write identity policies for top services; run them in monitor/alert mode.

- Convert cloud firewall rules from CIDRs to SG → SG, ASG → ASG or SA → SA references where possible.

- Kill obvious any-any rules and add owner/expiry tags.

Days 61–90: Enforce and Shrink Blast Radius

- Enforce policies service by service, with rollbacks.

- Segment special zones (closed-circuit television [CCTV]/network video recorder [NVR], printers) separately from corporate networks.

- Build dashboards showing identity-denies, stale rules and temporary rules nearing expiration.

Troubleshooting Shifts From Route to Identity

Yesterday’s firewall playbook (‘Is the route up? ARP/NAT/ACL line 37’) worked when IPs were stable. In cloud/K8s, IPs churn and overlays hide sources, so effective debugging starts with identity (labels, SAs, mTLS principals) and asks, ‘Which identity did the policy evaluate, and why?’

Questions you will answer now are as follows:

- Does the source have the right labels/SA/SG?

- Does the destination selector match what’s actually running?

- Is the mTLS principal what the policy expects?

- Which L7 path/method was denied?
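The first three questions can be turned into a small ‘explain’ helper that reports why a rule did not match. This is a sketch with a hypothetical rule and workload shape (`from_labels`, `to_labels`, `principal`); real enforcers expose this information through their own tooling.

```python
def explain(rule: dict, source: dict, destination: dict) -> list:
    """Return the reasons a rule does not match; an empty list means it matches."""
    reasons = []
    for key, want in rule["from_labels"].items():
        have = source["labels"].get(key)
        if have != want:
            reasons.append(f"source label {key}={have!r}, policy expects {want!r}")
    for key, want in rule["to_labels"].items():
        have = destination["labels"].get(key)
        if have != want:
            reasons.append(f"destination label {key}={have!r}, policy expects {want!r}")
    expected = rule.get("principal")
    if expected and source.get("principal") != expected:
        reasons.append(
            f"mTLS principal {source.get('principal')!r}, policy expects {expected!r}")
    return reasons


RULE = {
    "from_labels": {"app": "payment-service", "env": "prod"},
    "to_labels": {"app": "user-database", "env": "prod"},
    "principal": "corp/ns/prod/sa/payment-sa",
}
# A payment pod deployed with a staging label and the default service account.
SOURCE = {"labels": {"app": "payment-service", "env": "staging"},
          "principal": "corp/ns/prod/sa/default"}
DESTINATION = {"labels": {"app": "user-database", "env": "prod"}}

for reason in explain(RULE, SOURCE, DESTINATION):
    print(reason)
```

Here the output names two mismatches (the `env` label and the principal), which points the fix at the workload's deployment manifest rather than at routing.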

Useful Commands

- Cilium: hubble observe --from-label app=payment-service

- Istio: istioctl x authz check deploy/user-database -n prod

- AWS: VPC Flow Logs + SG rule “references” (sg-to-sg)

- Azure: NSG Flow Logs; az network watcher flow-log show

- GCP: VPC firewall logs, filter by serviceAccount

‘Firewall SME’ Corner: Translating Old to New

For seasoned firewall engineers, this is a practical bridge from IP-era habits to identity-first controls — start with hit-count hygiene (age out zero-hit rules), then collapse wide CIDRs into identity groups you can test and enforce.

- Hit-Count Cleanup: Remove zero-hit rules after 90 days; convert wide CIDRs to identities.

- Owner/Expiry Tags: Implement time-bound temporary access; auto-expire these permissions.

- Reachability Tests: Prove that apps still work (and unwanted paths don’t).

- Version Control: Export configurations nightly; review differences in pull requests (PRs).
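Hit-count cleanup and expiry tags lend themselves to a nightly report over an exported ruleset. The sketch below assumes a hypothetical export format in which each rule carries `hits`, a `created` date and an optional `expires` date; field names in a real firewall export will differ.

```python
from datetime import date


def review(rules: list, today: date, max_idle_days: int = 90) -> dict:
    """Flag zero-hit rules older than max_idle_days and rules past their expiry."""
    stale, expired = [], []
    for rule in rules:
        age_days = (today - rule["created"]).days
        if rule["hits"] == 0 and age_days >= max_idle_days:
            stale.append(rule["name"])
        expires = rule.get("expires")
        if expires is not None and expires < today:
            expired.append(rule["name"])
    return {"stale": stale, "expired": expired}


RULES = [
    {"name": "pay-to-db", "hits": 48210, "created": date(2025, 1, 10)},
    {"name": "legacy-any-any", "hits": 0, "created": date(2025, 2, 1)},
    {"name": "tmp-vendor-access", "hits": 12, "created": date(2025, 8, 1),
     "expires": date(2025, 9, 1), "owner": "netops"},
    {"name": "new-canary", "hits": 0, "created": date(2025, 9, 20)},
]

print(review(RULES, today=date(2025, 10, 3)))
# {'stale': ['legacy-any-any'], 'expired': ['tmp-vendor-access']}
```

The recently created zero-hit rule is left alone because it has not yet aged past the 90-day window, which avoids deleting rules that simply have not seen traffic yet.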

Metrics That Show Progress

Move from activity to outcomes: Your north star is the percentage of traffic governed by identity-based policies versus IP/CIDR rules. Tag rule types in logs, trend weekly and link gains to reduced lateral movement and improved audit readiness.

- Percentage of traffic governed by identity-based versus IP rules

- Count of any-any rules (showing a descending trend)

- Temporary rule expirations occurring on time

- Mean time to policy change (request → merge → enforce)

- Deny rate for lateral movement attempts (and time to resolution)

Though outcomes vary, identity-first rulesets have delivered up to ~50% reductions in both east-west exposure and the effective attack surface in real-world programs.1
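The north-star metric is a simple ratio once each logged flow is tagged with the type of rule that admitted it. The sketch below assumes hypothetical log records with a `rule_type` of `identity` or `cidr` and a byte count; the tagging itself has to come from your enforcement points.

```python
def identity_coverage(flows: list) -> float:
    """Percentage of bytes admitted by identity-based rules, to one decimal."""
    total = sum(flow["bytes"] for flow in flows)
    if total == 0:
        return 0.0
    by_identity = sum(flow["bytes"] for flow in flows
                      if flow["rule_type"] == "identity")
    return round(100.0 * by_identity / total, 1)


WEEK = [
    {"rule": "pay-to-db", "rule_type": "identity", "bytes": 600},
    {"rule": "checkout-to-orders", "rule_type": "identity", "bytes": 150},
    {"rule": "legacy-10.0.0.0/16", "rule_type": "cidr", "bytes": 250},
]

print(identity_coverage(WEEK))  # 75.0
```

Weighting by bytes is one choice; counting flows or distinct service pairs are equally reasonable denominators, as long as the same one is used week over week.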

Quick Add: Beyond Identity, Aim It

Identity-first policy hardens everything; round it out with a small, actor-aware layer that targets the crews you actually face.

- Pick 1–2 named actors and their tactics, techniques and procedures (TTPs). Map their techniques to your stack (kill chain → prevent/detect), then bind each control to identity (SAs, namespaces, device posture) so that it survives re-IP.

- Let AI do the grunt work. Use lightweight models to cluster logs by identity, auto-tag events to MITRE ATT&CK (Adversarial Tactics, Techniques, and Common Knowledge) and draft change PRs (SG → SG/ASG → ASG, expiries); humans review/merge.

- Measure it. Track percentage of traffic under identity policy, time from intelligence to enforced control and stages with at least one preventive control per actor.

Result: Identity gives you the fabric; actor-aware playbooks make that fabric purposeful — tight, fast and affordable.

Conclusion: The Firewall Isn’t Dead — It Just Grew Up

We still draw boundaries; we just draw them around identities instead of subnets. When rules follow app and user intent, you get least-privilege that survives autoscaling, clusters and cloud sprawl. Write policy in the language of who and what, enforce it close to the workload and ship it through the same pipelines as code.

Your next great firewall change won’t be a CIDR. It will be a PR that says:

allow: payment-service@prod → user-db@prod on 5432, POST /api/v1/orders only.

That’s zero-trust you can read, test and trust.
