2025-10-3 07:38:49 Author: blog.elcomsoft.com

“A core selling point of machine learning is discovery without understanding, which is why errors are particularly common in machine-learning-based science.” I could not resist the temptation to start this article with a quote from “AI as Normal Technology” – it captures the current state of AI-everything perfectly. Should investigators really trust black boxes running a set of non-deterministic algorithms and providing different results on every reroll? And can we still use such black boxes to automate routine operations? Let’s try to find out.

Artificial intelligence is everywhere. Vendors are racing to bolt “AI-powered” labels onto everything from fridges to forensic tools. At the same time, courts are hearing more about algorithms than ever, and examiners are quietly wondering what this all means for their day-to-day work. Skepticism is not only healthy – it’s necessary. Many forensic tools now include some form of machine learning, but the details are often hidden, with the exact models and their runtime parameters left undisclosed. Models can “hallucinate” results or simply change their output if you ask the same question twice. Does that sound like a professional forensic expert? I think not.

But here’s the catch: AI is not trying to replace forensic experts. Its real promise is in handling the boring, repetitive stuff – going through countless chat messages, tagging thousands of photos, or transcribing endless voice messages, so investigators can spend more time doing what only humans can do: making sense of context, intent, and meaning.

So, is AI in forensics a dangerous black box or a useful assistant? The answer is both. Used blindly, it’s a liability. Used carefully, it’s a timesaver that can actually make investigations faster and more defensible. The difference lies in how you use the assistant. To see where AI genuinely helps, let’s start with the biggest bottleneck: the sheer volume of digital evidence.

Digging through piles of data

Investigations are drowning in data: terabytes of chats, images, videos, emails, and voice recordings. AI can help by finding and filtering the most relevant evidence quickly. Some tools can cluster or summarize conversations so examiners don’t have to scroll through thousands of near-identical messages. Others can translate slang, jargon, or foreign languages on the fly, helping spot leads that might otherwise slip through.

Platforms like Belkasoft Evidence Center, for example, use built-in assistants to highlight artifacts and even summarize text-based content from multiple sources. Large Language Models (LLMs) – the type of AI that powers chatbots like ChatGPT – add another layer: they can flag recurring themes or suspicious phrasing across different languages. The idea isn’t to hand over conclusions to the machine, but to cut down on time wasted in data-mining so investigators can focus on analysis.

Not just text

Forensics isn’t only about words. Modern AI is surprisingly good at sorting images and video. It can separate routine snapshots from material that needs closer human attention, highlight illicit images, identify faces (that part still requires verification), or pick out objects like firearms. What used to take days of manual tagging can now be triaged in minutes.

The same goes for audio. Voice notes and recordings are now standard in many messaging apps. Instead of forcing investigators to listen through hours of speech, speech-to-text engines can convert spoken words into searchable transcripts. That means you can run keyword searches or even ask an AI assistant questions in plain English and instantly locate the relevant clips. This doesn’t eliminate the need for careful listening and verification, but it does speed up the first pass enormously.
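To make the "searchable transcript" idea concrete, here is a minimal sketch of a keyword search over transcript segments. The `Segment` structure, the file names, and the sample text are all made up for illustration; a real speech-to-text engine will have its own output format. The point is that every hit carries the source file and time range, so the examiner can go straight to the clip and listen to it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One transcribed stretch of audio (hypothetical structure)."""
    source_file: str  # ID or path of the original recording
    start_s: float    # segment start, in seconds
    end_s: float      # segment end, in seconds
    text: str         # machine transcript, still unverified


def find_keyword(segments, keyword):
    """Return the segments whose transcript contains the keyword (case-insensitive)."""
    needle = keyword.casefold()
    return [seg for seg in segments if needle in seg.text.casefold()]


# Invented sample data, standing in for real transcription output.
segments = [
    Segment("voice_0001.opus", 0.0, 4.2, "See you at the usual place"),
    Segment("voice_0001.opus", 4.2, 9.8, "Bring the package tomorrow"),
    Segment("voice_0002.opus", 0.0, 3.1, "The PACKAGE is late"),
]

hits = find_keyword(segments, "package")
for seg in hits:
    # Each hit is a pointer to a clip that still needs human listening.
    print(f"{seg.source_file} [{seg.start_s:.1f}s-{seg.end_s:.1f}s]: {seg.text}")
```

A plain substring match like this will miss misheard words and slang, which is exactly why the transcript is a first pass and not a substitute for the recording.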

The issue of trust

Here’s the elephant in the room: should investigators trust AI? The blunt answer is no. Vendors themselves admit it. Large language models don’t understand context like humans do. They can misread tone, miss coded slang, or completely misinterpret intent. Worse, they’re non-deterministic – you can ask the same thing twice and get slightly different answers.

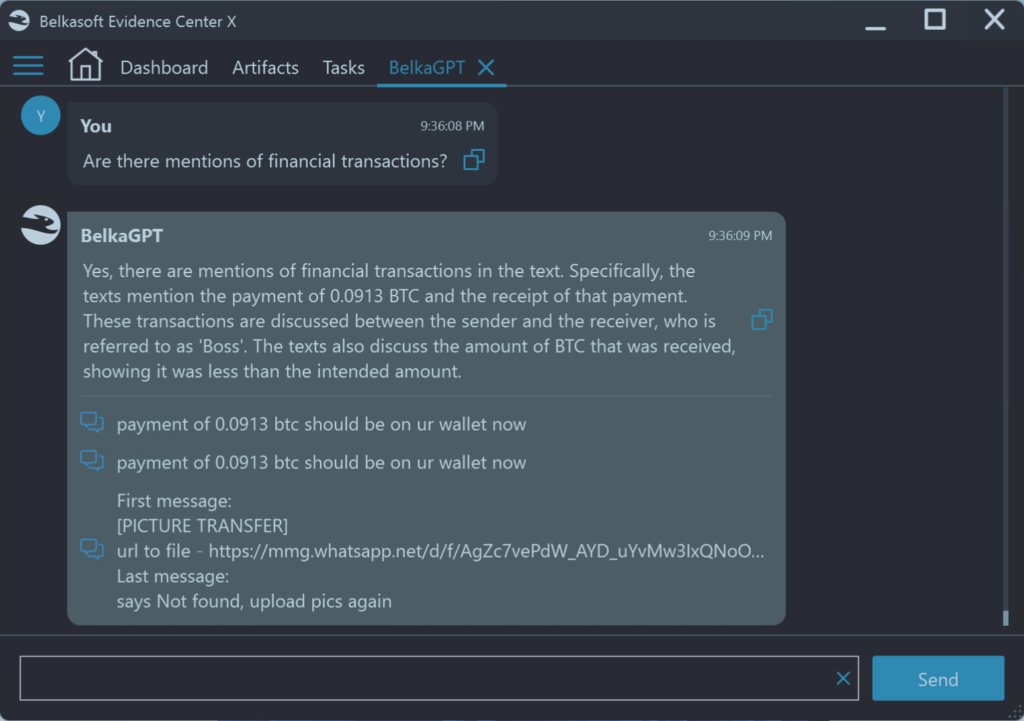

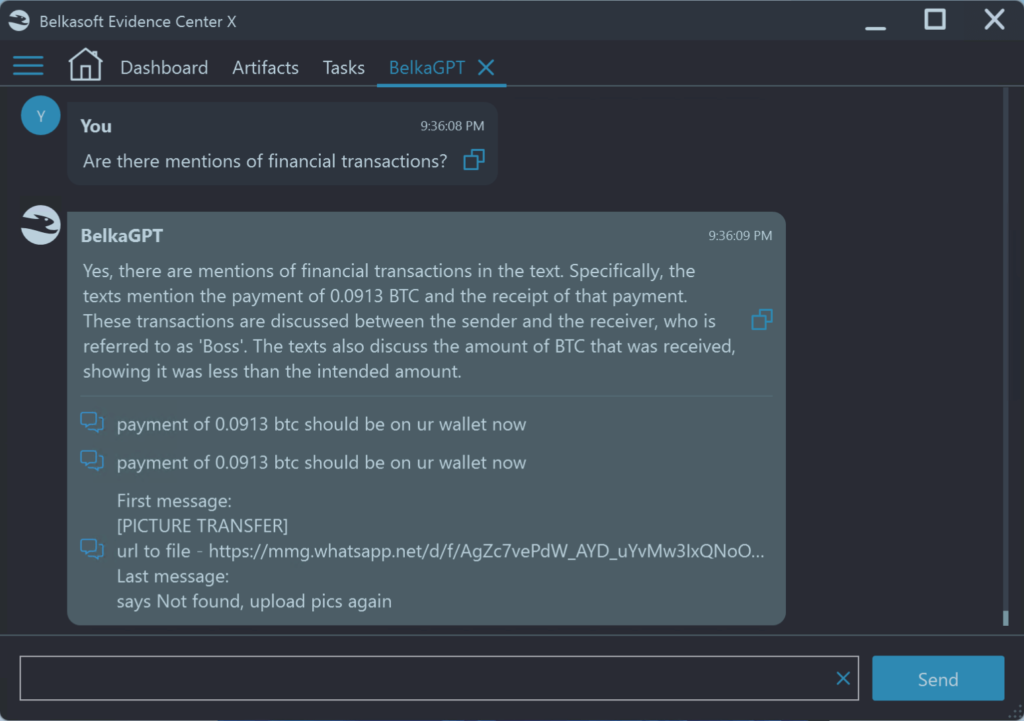
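One way to put a number on that variability is to ask the same question several times and count how many distinct answers come back. The sketch below assumes the assistant can be wrapped in a plain function that takes a prompt and returns text; `fake_assistant` is a stand-in written for this example, not any vendor's API.

```python
import hashlib
import random
from collections import Counter


def normalize(answer):
    """Collapse whitespace and case so trivial formatting changes don't count."""
    return " ".join(answer.split()).casefold()


def stability_report(ask, prompt, runs=5):
    """Call ask(prompt) several times and count distinct normalized answers."""
    digests = Counter()
    for _ in range(runs):
        text = normalize(ask(prompt))
        digests[hashlib.sha256(text.encode("utf-8")).hexdigest()] += 1
    most_common_count = digests.most_common(1)[0][1]
    return {
        "runs": runs,
        "distinct_answers": len(digests),
        # Share of runs that produced the most frequent answer.
        "agreement": most_common_count / runs,
    }


# Stand-in for a real model call (hypothetical): picks one of two phrasings.
rng = random.Random(0)


def fake_assistant(prompt):
    return rng.choice(["Three chats mention a meeting.",
                       "Two chats mention a meeting."])


report = stability_report(fake_assistant, "How many chats mention a meeting?")
print(report)
```

Full agreement across runs does not make an answer correct; it only tells you the output is stable. Anything less than full agreement is a clear signal to go back to the raw evidence.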

Some vendors have tried to address this. For example, Belkasoft’s “BelkaGPT” shows its work by listing the actual artifacts it drew on when generating a response. That way, examiners don’t just see a polished AI answer (the “discovery without understanding”); they can check the underlying evidence themselves. This is the right mindset: AI can assist, but all decisions and the final word always belong to the human examiner.

AI is best understood as a supportive partner: unreliable at times, untrustworthy if taken at face value, but incredibly efficient when used wisely. It lightens the load, but it does not – and cannot! – replace human reasoning. Context, nuance, and judgment remain firmly in the examiner’s hands. If the machine flags a suspicious image, it is up to the human to decide whether it’s truly relevant. If it clusters a set of messages, it’s the examiner’s job to read them in context – expanding the context if they need to.

Any use of AI in forensics has to respect the same legal standards as traditional methods. That means reproducibility, transparency, and defensibility in court. AI-driven findings must be documented, logged, and always open to scrutiny. Examiner oversight isn’t optional – it’s the only way to ensure conclusions hold up under legal challenge.

Limitations and best practices

AI is powerful, but it comes with strings attached. First, it makes mistakes – sometimes subtle ones that are very difficult to spot. A language model can produce a fluent summary that sounds right but skips or twists a crucial detail. An image classifier can (and frequently does) flag innocent vacation photos as “suspicious” or miss something obvious. Voice transcription is useful, but accents, background noise, or slang can throw it off completely.

Then, there is the problem of over-trust and over-reliance. It’s tempting to treat AI outputs as fact, especially when they look polished and confident, as they usually do. That’s a trap. Automation bias – believing the machine because it said so – can be more dangerous than the raw error rate itself. In court, “the computer said so” won’t stand up.

And don’t forget the legal side. Every step in a digital investigation has to respect chain of custody. If an AI system produces a summary, a classification, or a transcript, that derived artifact has to be documented, reproducible, and tied back to the original evidence. Otherwise, you may end up with outputs that look useful but won’t survive legal scrutiny. AI doesn’t get a free pass in court – only properly handled, verifiable evidence does.
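What "tied back to the original evidence" can look like in practice: hash the source item, hash the AI output, and store both in one record. This is a minimal sketch under my own assumptions; the field names and the sample data are invented, and a real lab would follow its own evidence-handling procedures and case management system.

```python
import hashlib
import json
from datetime import datetime, timezone


def sha256_hex(data: bytes) -> str:
    """Hex SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


def derived_artifact_record(source_id, source_bytes, output_text, kind):
    """Link an AI output to the exact evidence item it was computed from."""
    return {
        "kind": kind,                      # e.g. "summary", "transcript"
        "source_id": source_id,            # hypothetical evidence item ID
        "source_sha256": sha256_hex(source_bytes),
        "output_sha256": sha256_hex(output_text.encode("utf-8")),
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "status": "unverified lead",       # changes only after human review
    }


def source_unchanged(record, source_bytes):
    """True if the evidence item still matches the hash stored in the record."""
    return record["source_sha256"] == sha256_hex(source_bytes)


# Invented sample data standing in for a real evidence item and AI output.
evidence = b"raw bytes of an exported chat database"
record = derived_artifact_record(
    "item-0042", evidence, "Summary: two parties arrange a meeting.", "summary"
)
print(json.dumps(record, indent=2))
print(source_unchanged(record, evidence))
```

The record does not make the summary correct. It only makes it possible to show later which evidence item the summary came from, and that the item has not changed since.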

So how do investigators use AI responsibly? A few best practices go a long way:

- Always verify. Treat AI outputs as leads, not conclusions. Double-check with the raw evidence.

- Log everything. Document which tool, which version, and even what parameters you used. If the results can’t be reproduced, they won’t hold up.

- Mind the context. Don’t assume the AI caught the cultural nuance, the slang, or the hidden code. That’s your job.

- Stay human in the loop. Use AI to speed up triage and search, but keep final judgment firmly in expert hands.

- Think about privacy. Cloud-based AI means data leaves your lab. When possible, prefer offline or on-premise solutions for sensitive evidence.

- Protect the chain of custody. AI outputs must be logged, traceable, and tied to original artifacts if they are ever to be admissible.
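The "log everything" advice above can be sketched as an append-only log in which each entry records the tool, version, and parameters, and also includes the hash of the previous entry. The hash chain is my own addition for illustration, as are the tool names and parameters; it is one simple way to make later edits to the log detectable, not a requirement of any particular product or standard.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    """Hash a log entry body in a canonical JSON form."""
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def append_entry(log, tool, version, parameters, note):
    """Append an entry that records the tool run and chains to the previous entry."""
    body = {
        "tool": tool,
        "version": version,
        "parameters": parameters,
        "note": note,
        "prev_hash": log[-1]["entry_hash"] if log else GENESIS,
    }
    entry = dict(body, entry_hash=_digest(body))
    log.append(entry)
    return entry


def verify_log(log):
    """Recompute every hash; False if any entry was altered or reordered."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if entry["prev_hash"] != prev_hash or _digest(body) != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return True


# Hypothetical tool names and parameters, for illustration only.
log = []
append_entry(log, "example-transcriber", "1.2.3",
             {"language": "en", "temperature": 0.0},
             "transcribed voice_0001.opus")
append_entry(log, "example-classifier", "4.0.1",
             {"threshold": 0.8},
             "classified images in item-0042")
print(verify_log(log))
```

If anyone changes a logged parameter after the fact, `verify_log` returns `False`, because the stored hash no longer matches the entry.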

AI is an efficient assistant but a terrible examiner. Use it to lighten the workload, but never let it carry the badge of authority.

AI tools in action

Several platforms now integrate AI features. Magnet AXIOM uses “Magnet.AI” to classify chats and images by categories like nudity, weapons, or drugs. Belkasoft Evidence Center includes AI assistants for summarizing content, classifying images, and transcribing audio. These tools save time and reduce examiner fatigue. None of them promises to replace the examiner’s judgment, nor should they. And while these features are helpful, they don’t make the black-box problem go away.

AI in forensics is not a silver bullet, nor is it a threat to professional expertise. While it saves energy and time, you still have to exercise due diligence when drawing conclusions. The human examiner remains central, verifying results, making judgments, and ensuring every conclusion is defensible. Embracing AI wisely means faster investigations, healthier workloads, and, most importantly, outcomes you can stand behind in court. AI in forensics is discovery without understanding – fast, impressive, but always in need of human judgment. Treat it as a tool, not an oracle, and it can be the best assistant you’ll ever have.
