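GPT-4o sometimes routes conversations to other models when handling sensitive topics. This is regarded as an OpenAI safety measure, but it has drawn complaints from some users. 2025-9-29 12:15:24 Author: www.bleepingcomputer.com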

Over the weekend, some users noticed that GPT-4o was routing requests to an unknown model without warning. It turns out to be a "safety" feature.

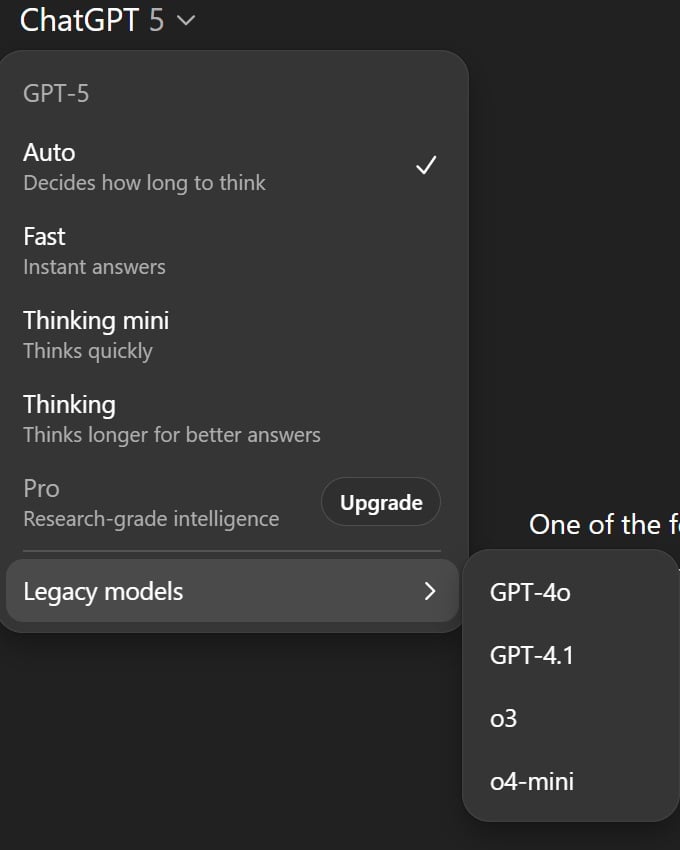

ChatGPT sometimes routes a conversation to a different model than the one you selected. For example, when you're using GPT-5 in auto mode and ask it to think harder, it routes your request to GPT-5 thinking.

That kind of routing is welcome. What has upset users is that GPT-4o conversations are also being routed to a different model, likely a variant of GPT-5.

This can happen when you're talking to GPT-4o about a sensitive or emotional topic and the system judges that the conversation involves some sort of harmful activity. In those cases, ChatGPT switches from GPT-4o to gpt-5-chat-safety.

OpenAI has confirmed the reports and said the routing is not malicious in intent.

"Routing happens on a per-message basis; switching from the default model happens on a temporary basis. ChatGPT will tell you which model is active when asked," Nick Turley, VP of ChatGPT, noted in an X post.

"As we previously mentioned, when conversations touch on sensitive and emotional topics the system may switch mid-chat to a reasoning model or GPT-5 designed to handle these contexts with extra care."

It is not possible to turn off the routing because it is part of how OpenAI enforces its safety measures.
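
As a rough illustration of what per-message routing with a temporary switch could look like, here is a minimal Python sketch. Everything in it is hypothetical: the `route_message` function, the `RoutedMessage` type, and the keyword check standing in for a sensitivity classifier are assumptions made for illustration, since OpenAI has not published how its router works. Only the model names come from the reports above.

```python
from dataclasses import dataclass

DEFAULT_MODEL = "gpt-4o"            # model the user selected
SAFETY_MODEL = "gpt-5-chat-safety"  # model name seen in user reports

# Hypothetical stand-in for a real sensitivity classifier.
SENSITIVE_TERMS = ("self-harm", "hopeless", "hurt myself")


@dataclass
class RoutedMessage:
    text: str
    model: str


def is_sensitive(text: str) -> bool:
    """Toy check: flag a message if it contains any listed term."""
    lowered = text.lower()
    return any(term in lowered for term in SENSITIVE_TERMS)


def route_message(text: str, selected_model: str = DEFAULT_MODEL) -> RoutedMessage:
    """Pick a model for this one message; no state carries to the next."""
    model = SAFETY_MODEL if is_sensitive(text) else selected_model
    return RoutedMessage(text=text, model=model)


conversation = [
    "Help me plan a trip to Lisbon.",
    "Lately I feel hopeless about everything.",
    "Anyway, what should I pack?",
]
routed = [route_message(message) for message in conversation]
for item in routed:
    print(f"{item.model}: {item.text}")
```

Because the decision is made independently for each message, the third message in this toy conversation goes back to the selected model, which is one way to read the "temporary" switch Turley describes.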

OpenAI says this is part of their broader effort to strengthen safeguards and learn from real-world use before a wider rollout.
