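This article examines the complexity and key components of the deployment phase of the Detection Development Life Cycle, including API consumer design, credential management, rate limiting, and error handling, and presents manual, release-based, and automatically triggered deployment methods as well as a parallel multitenant deployment strategy. 2025-09-23 08:00:00 Author: blog.nviso.eu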

The deployment phase is one of the most challenging steps in the Detection Development Life Cycle due to its implementation complexity. Factors that must be considered when designing a deployment pipeline are secret management, request timeouts, API response codes, and API request limits. Each of these elements must be carefully addressed during the implementation of the deployment pipeline.

In this part, we will explore the principles and practices of deploying rules to target platforms. We will also go through some of the challenges encountered when designing and implementing a deployment pipeline, along with suggestions on how to overcome them, so that our Continuous Deployment pipeline operates smoothly. We provide examples from different deployment pipelines that you can refer to when deciding on your own design.

Deployment Components

To be able to understand more about the CD pipeline design, we’ll go through the key components we will be using.

API Consumer

Most modern SIEM or XDR platforms provide an API that can be used to manage the detection rules. To utilize this API, detection engineers must develop an API consumer. An API consumer is software designed to interact with the API by sending requests and receiving responses, enabling integration and utilization of the functionalities provided by the API provider. The API consumer should be able to use the API for listing, creating, updating, or deleting the detection rules in the target platform.

Key considerations when developing the API consumer include:

- Authentication and Authorization – Typically the API will require API keys, OAuth or another authentication mechanism to ensure secure access. It is essential to handle credential material with care when storing and retrieving it.

- Rate Limiting – Often, the API provider may impose limits on the number of requests an API consumer can make within a specific timeframe to prevent abuse of the application. Rate limiting should be taken into account when developing the API consumer and a required sleep time should be enforced to ensure that we adhere to the limitations.

- Response and Error Handling – The API consumer must be able to manage responses and errors, such as HTTP 400 or 500 status codes or read timeouts, in a graceful manner. It is important to log verbose messages related to the status codes and error messages provided by the API, so that we are able to troubleshoot potential issues with ease when we deploy our detections.

In our example for Sentinel, we use the Microsoft documentation as a reference for the authentication mechanism [1], the expected API responses [2], and the API rate limits. Similar documentation is typically available for other products as well, such as Microsoft Defender for Endpoint [3] (even though that API is still in beta).

A sample screenshot from our API consumer is provided below. The consumer class implements methods for basic functionalities that we are going to use, like fetching all the platform rules, deploying a rule and deleting a rule.
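The rate-limiting and error-handling considerations listed above can be sketched as a small retry wrapper. This is a minimal illustration under stated assumptions, not the consumer used in this series: the `call_with_retry` function, the `Response` type and the retry policy (retry on HTTP 429 and 5xx, honor a numeric `Retry-After` header when present, otherwise back off exponentially) are all hypothetical. The `send` callable stands in for whatever HTTP client you use, and the built-in `TimeoutError` stands in for your HTTP library's timeout exception.

```python
import time
from typing import Callable, NamedTuple, Optional


class Response(NamedTuple):
    """Minimal stand-in for an HTTP response (hypothetical type)."""
    status: int
    headers: dict
    body: str


def call_with_retry(
    send: Callable[[], Response],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Response:
    """Call send(), retrying on throttling (429), server errors (5xx) and timeouts."""
    last_error: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        # Default wait: exponential backoff (1s, 2s, 4s, ... for base_delay=1).
        delay = base_delay * 2 ** (attempt - 1)
        try:
            response = send()
        except TimeoutError as exc:
            last_error = f"timeout: {exc}"
        else:
            if response.status < 400:
                return response
            if response.status != 429 and response.status < 500:
                # Other 4xx codes are client errors; retrying will not help.
                raise RuntimeError(f"HTTP {response.status}: {response.body}")
            last_error = f"HTTP {response.status}: {response.body}"
            retry_after = str(response.headers.get("Retry-After", ""))
            if retry_after.isdigit():
                # The server told us how long to wait; prefer that value.
                delay = float(retry_after)
        # Log verbosely so failed deployments are easy to troubleshoot.
        print(f"Attempt {attempt}/{max_attempts} failed ({last_error})")
        if attempt < max_attempts:
            sleep(delay)
    raise RuntimeError(f"Giving up after {max_attempts} attempts: {last_error}")
```

Passing `sleep` as a parameter keeps the wrapper easy to test without real waiting; in a pipeline the default `time.sleep` would be used.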

App Registration

In order to be able to access the Sentinel API we need to create an App registration in Azure. The App registration connects an application with Azure Active Directory (Azure AD) to enable it to authenticate and access Azure resources and services.

The App registration for Sentinel must be assigned the Microsoft Sentinel Contributor role [4], which allows it to perform actions under Microsoft.SecurityInsights/*, including the API endpoints that we need to manage the rules [2]. This step is necessary so that the API consumer can authenticate and use the API. The assignment can be done under the Log Analytics workspace settings -> Access Control (IAM) -> Role Assignments.

Credential Material Management

Credential Material to access the target platform should be stored securely and encrypted. In Azure DevOps, you have several options for accessing credential material. Credentials can be stored directly in Azure DevOps as secret variables. Another option would be to use Azure Key Vault as a secure service for storing secrets, which also integrates well with Azure DevOps, allowing pipelines to fetch secrets dynamically. However, if you need to handle secrets in your pipelines, always follow the best practices [5] as advised by Microsoft.

In addition to that, instead of using a Key Vault, Azure DevOps offers the option to use service connections [6] and managed identities [7]. A service connection provides secure access to external services from your pipelines, while managed identities allow Azure resources to authenticate against Azure services without storing credentials in code.
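As an illustration of handling credential material inside a deployment script, the sketch below reads the App registration credentials from environment variables. Azure Pipelines does not expose secret variables to scripts automatically; they have to be mapped explicitly through the step's `env:` block. The variable names (`SENTINEL_TENANT_ID`, `SENTINEL_CLIENT_ID`, `SENTINEL_CLIENT_SECRET`) and the `SentinelCredentials` class are assumptions made for this example only.

```python
import os
from dataclasses import dataclass, field
from typing import Mapping


@dataclass(frozen=True)
class SentinelCredentials:
    """Credential material for the App registration (hypothetical container)."""
    tenant_id: str
    client_id: str
    # repr=False keeps the secret out of logs if the object is ever printed.
    client_secret: str = field(repr=False)


# Hypothetical environment variable names, mapped in the pipeline step's env: block.
ENV_NAMES = {
    "tenant_id": "SENTINEL_TENANT_ID",
    "client_id": "SENTINEL_CLIENT_ID",
    "client_secret": "SENTINEL_CLIENT_SECRET",
}


def load_credentials(env: Mapping[str, str] = os.environ) -> SentinelCredentials:
    """Read credentials from the environment and fail early if any are missing."""
    missing = [var for var in ENV_NAMES.values() if not env.get(var)]
    if missing:
        # Report only the variable names, never the values.
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return SentinelCredentials(**{name: env[var] for name, var in ENV_NAMES.items()})
```

Failing before any API call is made gives a clear error in the pipeline log instead of an authentication failure halfway through a deployment.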

Pipeline Agents

Code that runs in Azure Pipelines requires at least one agent. In Part 3, we briefly went through the basic components of a pipeline, one of which was a Job. When a pipeline is executed, the system initiates one or more jobs. An agent is computing infrastructure with installed agent software that runs one job at a time [8]. Deploying more than one agent allows us to run Jobs in parallel.

This is particularly useful from the standpoint of an MSSP, as it allows us to speed up the deployment by deploying to multiple tenants in parallel. We go into more depth on that topic in the section “Multitenant Deployments” below.

Component Diagram

In Part 1, we introduced a high-level workflow diagram outlining the approach for this series. In Part 2 and Part 3, we explored the repository and build validations in more detail. Now that we have also examined the rest of the key components required to deploy our rules in Sentinel, we can revisit and redesign our original graph with the specific components involved.

In the next sections, we are going to see how we can leverage the above design to perform manual, automatic or multitenant deployments.

Manual Deployments

Manual deployments describe the process where detection engineers personally undertake the task of deploying content packs or rules. They do this by manually selecting the desired content pack or rule and specifying the environment to which they want to deploy. Manual deployments offer the most control for the detection engineering team and are more suitable for managing a small number of environments and content packs.

The following pipeline is designed to run rule deployments manually, without automatic triggers. We have included parameters such as content_pack, tenant, platform, and action, each with default values and selectable options. These parameters are used to form the matrix_parameters variable, which guides the deployment process. The pipeline consists of a single job, “ExecuteDeployment”, where the repository is checked out, Python dependencies are installed, and a deployment script is executed. This script uses the matrix_parameters to manage the deployment specifics, determining the content pack, tenant, platform, and action (deploy or delete) to be performed.

name: Manual Deployment

trigger: none

parameters:
  - name: content_pack
    type: string
    default: cloud_azure
    values:
      - cloud_azure
      - cloud_o365
      - os_windows
    displayName: Content Pack
  - name: tenant
    type: string
    default: QA
    values:
      - QA
      - QA-Testing
    displayName: Tenant
  - name: platform
    type: string
    displayName: Platform
    default: sentinel
    values:
      - sentinel
      - mde
  - name: action
    type: string
    displayName: Action
    default: deploy
    values:
      - deploy
      - delete

variables:
  - name: matrix_parameters
    value: '[{"content_pack": "${{ parameters.content_pack }}", "tenant": "${{ parameters.tenant }}","platform": "${{ parameters.platform }}", "action": "${{ parameters.action }}"}]'

jobs:
  - job: ExecuteDeployment
    displayName: 'Execute Deployment'
    steps:
      - checkout: self
      - script: |
          pip install -r pipelines/scripts/requirements.txt
        displayName: 'Python Dependencies Installation'
      - bash: |
          python pipelines/scripts/deployment_execute.py --deployment-parameters '$(matrix_parameters)'
        displayName: "Deployment ${{ parameters.content_pack }} for ${{ parameters.platform }} on ${{ parameters.tenant }}"

We can manually run the pipeline by selecting it and clicking on Run pipeline. We are then presented with a side panel that allows us to select the parameters that we defined above. Once we have selected the content pack, the tenant, the platform and the action we click on Run to execute the deployment.
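The pipeline hands the selected parameters to the deployment script as a JSON string through the --deployment-parameters argument. The deployment script itself is not shown in this part, so the following is only a sketch of how such a script might parse and validate that argument. The function name `parse_deployment_parameters` and the validation rules are assumptions; the allowed actions and platforms are taken from the pipeline parameters above.

```python
import argparse
import json

REQUIRED_KEYS = ("content_pack", "tenant", "platform", "action")
ALLOWED_ACTIONS = {"deploy", "delete"}      # values of the "action" pipeline parameter
ALLOWED_PLATFORMS = {"sentinel", "mde"}     # values of the "platform" pipeline parameter


def parse_deployment_parameters(argv: list) -> list:
    """Parse --deployment-parameters and return the validated list of entries."""
    parser = argparse.ArgumentParser(description="Deploy or delete content packs")
    parser.add_argument(
        "--deployment-parameters",
        required=True,
        help="JSON list of objects with content_pack, tenant, platform and action",
    )
    args = parser.parse_args(argv)

    entries = json.loads(args.deployment_parameters)
    if not isinstance(entries, list):
        raise ValueError("deployment parameters must be a JSON list")

    for entry in entries:
        missing = [key for key in REQUIRED_KEYS if key not in entry]
        if missing:
            raise ValueError(f"entry {entry} is missing keys: {', '.join(missing)}")
        if entry["action"] not in ALLOWED_ACTIONS:
            raise ValueError(f"unsupported action: {entry['action']}")
        if entry["platform"] not in ALLOWED_PLATFORMS:
            raise ValueError(f"unsupported platform: {entry['platform']}")
    return entries
```

In a script entry point this would be called as `parse_deployment_parameters(sys.argv[1:])`. Rejecting malformed input before contacting any tenant keeps a typo in a mapping from turning into a partial deployment.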

The deployment script is executed and the rules are deployed to the selected environment. An example screenshot is seen below:

Automatic Deployments triggered by Release

Release-based deployments refer to a structured approach where updates of the detection library or bug fixes are deployed together in designated releases. These deployments follow a planned schedule and are typically part of a broader release cycle. This method ensures that changes are bundled together, often aligning with project milestones or timelines. This workflow best fits teams that work in sprints and/or provide detection content as a service or as part of a security product.

In Part 2, we went over a few different branching strategies for our repository. Trunk-based and Gitflow in particular can utilize release branches for rule deployments. We can implement that logic directly in the pipeline, so that when a new branch prefixed with “release-” is created, the deployment pipeline is triggered and deploys rules to our mapped environments.

But first, to perform automatic deployments, we should create a mapping file that describes which content packs must be deployed to which environment. As an example, we are going to use the following JSON structure to define the deployment mapping. The JSON dictates that the cloud_azure and cloud_o365 packs are deployed to the QA tenant, and that cloud_azure is deployed to the QA-Testing tenant.

[
  {
    "content_pack": "cloud_azure",
    "tenant": "QA",
    "platform": "sentinel",
    "action": "deploy"
  },
  {
    "content_pack": "cloud_o365",
    "tenant": "QA",
    "platform": "sentinel",
    "action": "deploy"
  },
  {
    "content_pack": "cloud_azure",
    "tenant": "QA-Testing",
    "platform": "sentinel",
    "action": "deploy"
  }
]

We then create the pipeline to deploy the rules. The pipeline will trigger when a new branch with the “release-” prefix is created. For the sake of simplicity in this example we will map the deployment mapping above directly to the matrix_parameters value.

name: Automatic Release Branch Deployment

trigger:
  branches:
    include:
      - release-*

variables:
  - name: matrix_parameters
    value: '[{"content_pack": "cloud_azure", "tenant": "QA","platform": "sentinel", "action": "deploy"}, {"content_pack": "cloud_o365", "tenant": "QA","platform": "sentinel", "action": "deploy"}, {"content_pack": "cloud_azure", "tenant": "QA-Testing","platform": "sentinel", "action": "deploy"}]'

jobs:
  - job: ExecuteDeployment
    displayName: 'Execute Deployment'
    steps:
      - checkout: self
      - script: |
          pip install -r pipelines/scripts/requirements.txt
        displayName: 'Python Dependencies Installation'
      - bash: |
          python pipelines/scripts/deployment_execute.py --deployment-parameters '$(matrix_parameters)'
        displayName: "Automatic Release Branch Deployment"

Once we create the branch, the pipeline is triggered and the deployment runs.

Alternatively, it is also possible to implement release-based deployments using tags [9]. At the end of each sprint, or whenever it is deemed necessary, we can add a tag to the main branch commit and trigger the automatic deployment that way. To trigger the deployment automatically based on tags, simply change the trigger of the pipeline above to:

trigger:
  tags:
    include:
      - release-*

We then proceed and create a tag for the commit, prefixed with “release-”, like so:

This will trigger our modified pipeline and initiate a deployment.

Automatic Deployments triggered by Repository Updates

Release-based deployments offer a structured and automated approach to managing deployments. However, they come with the downside of delaying the deployment due to the scheduled nature of releases. In environments where immediate deployment of detection rules is required, an alternative approach can be followed. This method involves using a pipeline to continuously monitor the repository for updates following a pull request merge. When a change is detected, the pipeline automatically triggers the deployment of the modified content packs based on a mapping file. This method minimizes manual intervention and ensures that updates are rapidly available on the target platforms.

Before implementing the logic described above, we will first go through some Git commands that we will use in our script.

The following command is used to obtain the hash of the most recent commit on the main branch. The -1 option restricts the output to just the latest commit, ensuring that only one commit is displayed. The --pretty=format:%H part customizes the output to show only the full commit hash.

git log main -1 --pretty=format:%H

Once we identify the latest commit, we use the following command to display the commit message.

git show --pretty=format:%s 1a6d6e81fef265562630b18b0555de37db585e0d

Azure DevOps Repos automatically created this commit, denoted by the commit message “Merged PR <pr-number>”, when we merged our pull request. So, as a next step, we execute the following Git command to get the commit's parents. This helps us analyse merge commits, which can have multiple parent commits.

git show --pretty=format:%P 1a6d6e81fef265562630b18b0555de37db585e0d

Then, we fetch the list of commits between the parent commits by executing the following command. The range <df05a7…>..<b5e3b0…> excludes everything reachable from the first parent and keeps what is reachable from the second, which gives us the commits that the pull request brought into the main branch. The --pretty=format:%H option limits the output to the commit hashes.

git log main --pretty=format:%H df05a775c64201fe325cd8ba240e235dd87d2e6..b5e3b0a8c8d8578eae84eb60e74cb964b677f1ef

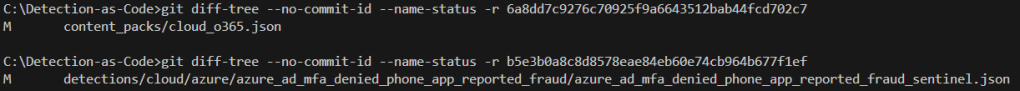

For each commit, we then execute git diff-tree, which examines the differences introduced by the commit. The --no-commit-id flag omits the commit ID from the output, focusing solely on the file changes. The --name-status option provides a summary of the changes, showing us the status of each file (e.g., added, modified, deleted) along with the file names.

git diff-tree --no-commit-id --name-status -r 6a8dd7c9276c70925f9a6643512bab44fcd702c7
git diff-tree --no-commit-id --name-status -r b5e3b0a8c8d8578eae84eb60e74cb964b677f1ef

Depending on the Git client version you are using, you might need to verify that the output is as displayed in the screenshots.

The output of the commands above assumes a no-fast-forward merge. The Git commands might need to be modified if another merge type is used.
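The --name-status output is line-oriented: each line holds a status letter, a tab, and a file path. When rename or copy detection is enabled, the status carries a similarity score (for example R100) and the line holds two paths. A minimal sketch of parsing that output is shown below; the `FileChange` type and the `parse_name_status` function are hypothetical names chosen for illustration, and the sketch assumes paths that Git does not need to quote.

```python
from typing import List, NamedTuple


class FileChange(NamedTuple):
    status: str  # single letter: A (added), M (modified), D (deleted), R, C, ...
    path: str    # path after the change (the new path for renames and copies)


def parse_name_status(output: str) -> List[FileChange]:
    """Turn the text printed by `git diff-tree --name-status` into FileChange tuples."""
    changes = []
    for line in output.splitlines():
        if not line.strip():
            continue
        fields = line.split("\t")
        if len(fields) < 2:
            raise ValueError(f"Unexpected diff-tree line: {line!r}")
        # Rename/copy lines look like "R100<TAB>old<TAB>new":
        # keep only the status letter and the last path.
        changes.append(FileChange(status=fields[0][0], path=fields[-1]))
    return changes
```

Keeping only the first character of the status and the last path means a rename line does not break a two-value unpacking such as `status, filepath = line.split("\t")`.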

The next step is to put everything together into a script that we can execute from a pipeline. The script identifies content packs that were created or modified, as well as content packs that include modified detection files in the Git repository. It retrieves the latest commit of the main branch, checks if it’s a merge commit, and processes the changes between its parent commits to find modified detection and content pack files. It then matches these content packs with deployment parameters from the tenant deployment map that we provided in the release-based deployments section, creating a matrix of parameters for deployment. The deployment parameters are then stored in the matrix_parameters pipeline variable to be used by the deployment job of the pipeline.

import subprocess
import os
import json

base_paths = ["detections/*.json", "content_packs/*.json", "detections/*.yml"]


def run_command(command: list) -> str:
    """Executes a shell command and returns the output."""
    try:
        #print(f"[R] Running command: {' '.join(command)}")
        output = subprocess.check_output(command, text=True, encoding="utf-8", errors="replace").strip()
        return output
    except subprocess.CalledProcessError as e:
        print(f"##vso[task.logissue type=error] Error executing command: {' '.join(command)}")
        print(f"##vso[task.logissue type=error] Error message: {str(e)}")
        return ""
    except UnicodeDecodeError as e:
        print(f"##vso[task.logissue type=error] Unicode decode error: {e}")
        return ""


def get_last_commit() -> str:
    """Retrieve the most recent commit hash on the main branch."""
    return run_command(["git", "log", "main", "-1", "--pretty=format:%H"])


def get_commit_message(commit_hash: str) -> str:
    """Retrieve a commit message"""
    return run_command(["git", "show", "--pretty=format:%s", commit_hash])


def get_commit_parents(commit_hash: str) -> list:
    """Retrieve a commit parents"""
    return run_command(["git", "show", "--pretty=format:%P", commit_hash]).split(" ")


def get_commit_list(start_commit: str, end_commit: str) -> list:
    """Retrieve a commit list"""
    return run_command(["git", "log", "main", "--pretty=format:%H", f"{start_commit}..{end_commit}"]).splitlines()


def get_commit_modified_files(commit_hash: str) -> list:
    """Get a list of modified files in the commit, along with their status"""
    return run_command(["git", "diff-tree", "--no-commit-id", "--name-status", "-r", commit_hash, "--"] + base_paths).splitlines()


def get_content_packs_from_detection(detection_path: str) -> list:
    """Return the names of the content packs that list the given detection."""
    content_packs_found = []
    content_packs_dir = "content_packs"
    for file_name in os.listdir(content_packs_dir):
        file_path = os.path.join(content_packs_dir, file_name)
        if os.path.isfile(file_path) and file_name.endswith('.json'):
            try:
                with open(file_path, 'r', encoding='utf-8') as f:
                    data = json.load(f)
                detections = data.get("detections", [])
                if detection_path in detections:
                    # removesuffix (Python 3.9+) drops the exact ".json" suffix;
                    # rstrip(".json") strips trailing characters and would turn
                    # "os_windows.json" into "os_window".
                    content_pack_name = os.path.basename(file_path).removesuffix(".json")
                    content_packs_found.append(content_pack_name)
            except (json.JSONDecodeError, IOError) as e:
                # print is used so that Azure Pipelines picks up the logging command
                print(f"##vso[task.logissue type=error]Skipping {file_name}: {e}")
    return content_packs_found


def identify_content_packs():
    content_packs = []
    matrix_parameters = []

    input_commit_hash = get_last_commit()
    print(f"Last commit ID: {input_commit_hash}")
    commit_message = get_commit_message(input_commit_hash)
    print(f"Commit message: {commit_message}")

    if commit_message.startswith("Merged PR"):
        print("PR merge commit identified. Identifying changes...")
        commit_parents = get_commit_parents(input_commit_hash)
        if len(commit_parents) == 2:
            start_commit = commit_parents[0]
            end_commit = commit_parents[1]
            print(f"Start commit:{start_commit}..End commit:{end_commit}")
            commit_list = get_commit_list(start_commit, end_commit)
            print(f"Commit list:\n {', '.join(commit_list)}")
            for commit_hash in commit_list:
                print(f"Processing commit:{commit_hash}")
                commit_modified_files = get_commit_modified_files(commit_hash)
                for commit_modified_file in commit_modified_files:
                    status, filepath = commit_modified_file.split("\t")
                    if filepath.startswith("detections/") and status == "M":
                        detection_path = os.path.dirname(filepath).removeprefix("detections/")
                        print(f"Detection {filepath} modified. Identifying content_pack...")
                        content_packs_from_detection = get_content_packs_from_detection(detection_path)
                        print(f"{detection_path} included the following content packs: {', '.join(content_packs_from_detection)}")
                        content_packs = content_packs + content_packs_from_detection
                    if filepath.startswith("content_packs/") and (status in ["A", "M"]):
                        # Single quotes inside the f-string keep this valid before Python 3.12
                        print(f"Content_pack {filepath} {'created' if status == 'A' else 'modified'}.")
                        content_packs.append(os.path.basename(filepath.removesuffix(".json")))
        else:
            print(f"##vso[task.logissue type=error]Could not identify parents of {input_commit_hash}")

        content_packs = list(set(content_packs))
        print(f"Content packs identified for deployment: {', '.join(content_packs)}")

        print("Reading tenant deployment map...")
        tenant_deployment_map = []
        try:
            with open("pipelines/config/tenant_deployment_map.json", 'r', encoding='utf-8') as f:
                tenant_deployment_map = json.load(f)
        except (json.JSONDecodeError, IOError) as e:
            print(f"##vso[task.logissue type=error]{e}")

        for deployment_parameter in tenant_deployment_map:
            deployment_parameter_content_pack = deployment_parameter.get("content_pack")
            if deployment_parameter_content_pack:
                if deployment_parameter_content_pack in content_packs:
                    matrix_parameters.append(deployment_parameter)

        print("Creating matrix parameters variable.")
        matrix_parameters_str = json.dumps(matrix_parameters)
        print(matrix_parameters_str)
        print(f"##vso[task.setvariable variable=matrix_parameters;isOutput=true]{matrix_parameters_str}")
        return

    # Not a PR merge commit: publish an empty matrix so nothing is deployed
    print("##vso[task.setvariable variable=matrix_parameters;isOutput=true][]")
    print(matrix_parameters)


def main():
    identify_content_packs()


if __name__ == "__main__":
    main()

The pipeline is triggered by changes in the main branch, within the detections and content_packs directories. It consists of two jobs: IdentifyChanges and ExecuteDeployment. The IdentifyChanges job checks out the latest branch changes and runs a script that sets the deployment parameters. The ExecuteDeployment job executes the deployment using the defined matrix_parameters variable.

name: Automatic Deployment Triggered By Repo Changes

trigger:
  branches:
    include:
      - main
  paths:
    include:
      - "detections/*"
      - "content_packs/*"

jobs:
  - job: IdentifyChanges
    displayName: "Identify Changes"
    condition: eq(variables['Build.SourceBranchName'], 'main')
    steps:
      - checkout: self
        fetchDepth: 0
      - script: |
          git fetch origin main
          git checkout -b main origin/main
        displayName: "Fetch Branches Locally"
      - script: |
          python pipelines/scripts/identify_changes.py
        displayName: 'Run Identify Changes Script'
        name: setMatrixParameters

  - job: ExecuteDeployment
    displayName: 'Execute Deployment'
    dependsOn: IdentifyChanges
    condition: succeeded()
    variables:
      matrix_parameters: $[ dependencies.IdentifyChanges.outputs['setMatrixParameters.matrix_parameters'] ]
    steps:
      - checkout: self
      - script: |
          pip install -r pipelines/scripts/requirements.txt
        displayName: 'Python Dependencies Installation'
      - bash: |
          python pipelines/scripts/deployment_execute.py --deployment-parameters '$(matrix_parameters)'
        displayName: "Automatic Deployment"

To test the pipeline and created scripts we will create a new branch and modify one content pack and one detection rule in the repository. We then create the pull request.

We are going to merge the pull request using “no fast forward” merge.

Our pipeline is executed, the changes are identified, and our deployment parameters are passed on to the ExecuteDeployment job to initiate the deployment.

Multitenant Deployments

Multitenant deployments are particularly useful for Managed Security Service Providers (MSSPs), as they often oversee the detection content across multiple monitored environments. To be able to deliver detections more efficiently, we are going to implement parallelization by utilizing multiple agents simultaneously. This approach will allow us to deliver content to multiple environments faster.

We can implement the parallel deployments to multiple tenants using the matrix strategy [10] in the pipeline jobs.

strategy:
  matrix:
    { job_name: { matrix_parameters: parameter_json } }

The use of a matrix generates copies of a job, each with different input. For each occurrence of job_name in the matrix, a copy of the job is generated with the name job_name. For each occurrence of matrix_parameters, a new variable called matrix_parameters with the value parameter_json is available to the new job.

To pass the matrix parameters correctly to the pipeline according to the specifications of the matrix strategy, we are going to create the following JSON structure where the matrix parameters are passed as a string representation of JSON.

{
  "QA": {
    "matrix_parameters": "[{\"action\": \"deploy\", \"content_pack\": \"cloud_azure\", \"platform\": \"sentinel\", \"tenant\": \"QA\"}]"
  },
  "QA-Testing": {
    "matrix_parameters": "[{\"action\": \"deploy\", \"content_pack\": \"cloud_o365\", \"platform\": \"sentinel\", \"tenant\": \"QA-Testing\"}]"
  }
}
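A structure of this shape can also be derived from a deployment mapping list instead of being written by hand. The sketch below groups mapping entries by tenant and serializes each group into the string form that the matrix expects. The function name `build_deployment_matrix` is hypothetical, and using one matrix job per tenant is an assumption that mirrors the example above.

```python
import json
from collections import defaultdict
from typing import Dict, List


def build_deployment_matrix(deployment_map: List[dict]) -> Dict[str, Dict[str, str]]:
    """Group deployment entries by tenant; each tenant becomes one matrix job."""
    by_tenant = defaultdict(list)
    for entry in deployment_map:
        by_tenant[entry["tenant"]].append(entry)
    return {
        # The matrix variable value must be a string, so the entry list is
        # serialized to JSON a second time inside the outer structure.
        tenant: {"matrix_parameters": json.dumps(entries, sort_keys=True)}
        for tenant, entries in by_tenant.items()
    }


deployment_map = [
    {"content_pack": "cloud_azure", "tenant": "QA", "platform": "sentinel", "action": "deploy"},
    {"content_pack": "cloud_o365", "tenant": "QA", "platform": "sentinel", "action": "deploy"},
    {"content_pack": "cloud_azure", "tenant": "QA-Testing", "platform": "sentinel", "action": "deploy"},
]
matrix = build_deployment_matrix(deployment_map)
print(json.dumps(matrix, indent=2))
```

In a pipeline step, the result would be published with the same logging command used elsewhere in this part, for example `##vso[task.setvariable variable=DeploymentMatrix;isOutput=true]` followed by `json.dumps(matrix)`.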

We then create the pipeline that consists of two jobs: SetDeploymentMatrix and ExecuteDeployment. The first job, SetDeploymentMatrix, uses a Python script to create a deployment configuration dictionary containing matrix parameters for two environments, “QA” and “QA-Testing”. These parameters are serialized into JSON strings and set as an output variable named DeploymentMatrix. The second job, ExecuteDeployment, depends on the successful completion of the first job. It uses the DeploymentMatrix output to dynamically create a strategy matrix for deployment. The job checks out the repository, installs necessary Python dependencies, and executes a deployment script using the matrix parameters specific to each environment.

trigger: none

jobs:
  - job: SetDeploymentMatrix
    displayName: "Set DeploymentMatrix Output Variable"
    steps:
      - script: |
          python -c "import json; deployment_config = {'QA': {'matrix_parameters': json.dumps([{'action': 'deploy', 'content_pack': 'cloud_azure', 'tenant': 'QA'}])}, 'QA-Testing': {'matrix_parameters': json.dumps([{'action': 'deploy', 'content_pack': 'cloud_o365', 'tenant': 'QA-Testing'}])}}; print(f'##vso[task.setvariable variable=DeploymentMatrix;isOutput=true]{json.dumps(deployment_config)}')"
        displayName: "Set DeploymentMatrix"
        name: createDeployMatrix

  - job: ExecuteDeployment
    displayName: 'Execute Deployment'
    dependsOn: SetDeploymentMatrix
    condition: succeeded()
    strategy:
      matrix: $[ dependencies.SetDeploymentMatrix.outputs['createDeployMatrix.DeploymentMatrix'] ]
    steps:
      - checkout: self
      - script: |
          pip install -r pipelines/scripts/requirements.txt
        displayName: 'Python Dependencies Installation'
      - bash: |
          python pipelines/scripts/deployment_execute.py --deployment-parameters '$(matrix_parameters)'
        displayName: "Deployment for $(System.JobName)"

We execute the pipeline and can now see that the deployments for the QA and QA-Testing environments run in parallel.

The multitenant deployment can be combined with the manual or automated methods that we demonstrated above if we need to leverage the benefits of both.

Wrapping Up

It’s clear that the deployment step presents significant challenges due to its inherent complexity. Successfully designing and implementing a deployment pipeline requires attention to several factors, including secret management, request timeouts, API response codes, and API request limits. These elements must be addressed to ensure a robust deployment process. Throughout this part, we have explored the principles and practices of deploying detection rules to target platforms, highlighting common challenges and presenting ways to overcome them. By using techniques such as manual, release-based, and automatic deployments, as well as leveraging multitenant deployments, we can optimize the deployment process.

The next blog post in the series will be about monitoring the trigger rate and health of our deployed detections.

References

[1] https://learn.microsoft.com/en-us/entra/identity-platform/v2-oauth2-auth-code-flow

[2] https://learn.microsoft.com/en-us/rest/api/securityinsights/alert-rules?view=rest-securityinsights-2025-06-01

[3] https://learn.microsoft.com/en-us/graph/api/security-detectionrule-post-detectionrules

[4] https://learn.microsoft.com/en-us/azure/role-based-access-control/built-in-roles/security#microsoft-sentinel-contributor

[5] https://learn.microsoft.com/en-us/azure/devops/pipelines/security/secrets

[6] https://learn.microsoft.com/en-us/azure/devops/pipelines/library/service-endpoints

[7] https://learn.microsoft.com/en-us/entra/identity/managed-identities-azure-resources/overview

[8] https://learn.microsoft.com/en-us/azure/devops/pipelines/agents/agents

[9] https://learn.microsoft.com/en-us/azure/devops/repos/git/git-tags

[10] https://learn.microsoft.com/en-us/azure/devops/pipelines/yaml-schema/jobs-job-strategy

About the Author

Stamatis Chatzimangou

Stamatis is a member of the Threat Detection Engineering team at NVISO’s CSIRT & SOC and is mainly involved in Use Case research and development.
