2025-9-19 11:27:20 Author: payatu.com

Introduction: What is Credential Dumping?

Credential dumping refers to the systematic extraction of usernames, passwords, and other sensitive information from operating systems by malicious actors. This technique poses a significant threat, particularly within Linux environments, where successful credential extraction can lead to unauthorised access to user accounts, services, and network resources. Understanding the mechanisms of credential storage in Linux, the vulnerabilities that may expose these credentials, and the strategies for safeguarding them are essential for enhancing system security.

Objective of this Blog

This blog aims to elucidate the mechanisms by which Linux systems manage user credentials, the prevalent techniques employed by malicious actors to compromise these credentials, and the strategies for mitigating such security threats.

Linux Credentials 101

In a comprehensive document authored by David Howells, the intricacies of credential management within the Linux operating system are meticulously detailed. This document elucidates the foundational role of credentials in enforcing security protocols and delineates how various system objects, including files and processes, adhere to established security frameworks.

Objects and Credentials in Linux Security

Linux operating systems utilise a robust framework for managing security contexts through the concept of “credentials” associated with various entities, such as processes and files. These credentials are integral to defining the system’s security posture and are composed of several key components that collectively determine the capabilities and permissions of tasks within the environment.

Components of Linux Credentials:

- User ID (UID): The User ID is the numeric identifier of the user on whose behalf a process runs. It is crucial for establishing ownership and access rights to system resources.

- Group ID (GID): The Group ID complements the UID by associating a process with one or more groups. This association allows for the implementation of group-based permissions, facilitating collaborative access to shared resources.

- Security Labels: Security labels are part of a broader security framework that includes Mandatory Access Control (MAC) systems, such as SELinux or AppArmor. These labels provide an additional layer of security by enforcing policies that restrict actions based on predefined rules, independent of traditional user/group permissions.

- Capabilities: Capabilities are a set of distinct privileges that can be independently assigned to processes, allowing for fine-grained control over what actions a process can perform. Unlike traditional UNIX permissions, which rely on user and group IDs, capabilities enable specific operations (e.g., binding to network ports, modifying system settings) without granting full root access.
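
As a rough illustration of the components listed above, the following Python sketch reads a process's identifiers and effective capability mask from the text of /proc/<PID>/status. The helper names and the short capability table are assumptions made for this example; the bit numbers follow the kernel's capability header, and the table is deliberately incomplete.

```python
from pathlib import Path

# Small, non-exhaustive subset of capability bit numbers (linux/capability.h).
CAP_NAMES = {
    2: "CAP_DAC_READ_SEARCH",
    7: "CAP_SETUID",
    10: "CAP_NET_BIND_SERVICE",
    19: "CAP_SYS_PTRACE",
    21: "CAP_SYS_ADMIN",
}


def parse_status(text):
    """Extract UIDs, GIDs, supplementary groups and CapEff from status text."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key] = value.strip()
    return {
        # Order in the kernel output: real, effective, saved, filesystem.
        "uids": [int(v) for v in fields["Uid"].split()],
        "gids": [int(v) for v in fields["Gid"].split()],
        "groups": [int(v) for v in fields.get("Groups", "").split()],
        "cap_eff": int(fields.get("CapEff", "0"), 16),
    }


def capability_names(mask):
    """Name the bits of a capability mask that appear in CAP_NAMES."""
    return [name for bit, name in sorted(CAP_NAMES.items()) if mask & (1 << bit)]


def read_own_credentials():
    """Linux only: parse the calling process's own status file."""
    return parse_status(Path("/proc/self/status").read_text())
```

On a Linux host, `capability_names(read_own_credentials()["cap_eff"])` lists which of the tabled capabilities the current process holds. The MAC security label is exposed separately, for example through /proc/self/attr/current on systems where SELinux or AppArmor is enabled.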

Subject and Access Controls

Linux employs two primary access control models: Discretionary Access Control (DAC) and Mandatory Access Control (MAC).

Discretionary Access Control (DAC) is implemented through user identifiers (UIDs) and group identifiers (GIDs). Under this model, resource owners have the authority to set permissions on their resources, allowing them to dictate who can access or modify their files.

Mandatory Access Control (MAC), on the other hand, enforces access policies that are centrally managed and cannot be altered by individual users. This is achieved through systems such as Security-Enhanced Linux (SELinux), AppArmor, and Smack. These MAC systems utilise labels and rules to provide fine-grained control over access permissions.

Role of Labels and Rules

In MAC systems, security labels are assigned to both subjects and objects. These labels serve as identifiers that dictate the level of access each subject has to various objects within the system. For instance:

SELinux employs a label-based approach where security contexts are assigned to files and processes. This allows for detailed policy definitions that specify what actions subjects can perform on objects based on their security labels.

AppArmor, in contrast, utilises a path-based model where profiles define the allowed interactions for specific applications with the filesystem. This approach simplifies the management of security policies while still providing robust protection.

Subjective vs. Objective Qualifications

The distinction between subjective and objective qualifications is crucial in understanding how credentials are applied within these access control frameworks:

Subjective credentials refer to those associated with tasks or processes (subjects). These credentials govern what actions a subject can undertake during its execution.

Objective credentials, however, pertain to the attributes of objects such as files. When accessing these objects, the system relies on objective criteria, namely, the established permissions defined by DAC or enforced by MAC policies.

Task Credential Management: Each task holds pointers to its cred structures in task_struct. A published cred structure is not modified in place: changing a task’s credentials follows a copy-modify-replace sequence, and RCU (Read-Copy-Update) lets other tasks keep reading the old copy safely while it is being replaced.

Altering and Managing Credentials: A task may alter only its own credentials, never those of another task. The helper functions prepare_creds() and commit_creds() handle these changes: the first returns a modifiable copy and the second installs it. Reading another task’s credentials requires holding the RCU read lock.

Open File Credentials: When a file is opened, the task’s credentials at that moment are attached to the file structure, ensuring consistent access permissions.

Overriding VFS Credentials: Under certain scenarios (like core dumps), it’s possible to override the default credential handling in the Virtual File System (VFS) to specify which credentials to apply to actions.

This system of credentials offers Linux a good level of security control, guaranteeing that permissions correspond to user-based and policy-based requirements across the system.

If you want to get more details, you can read the full document here.

Types of Credentials

Credentials in Linux systems come in various forms, each serving a unique purpose:

User Credentials: Authentication relies on usernames and passwords, with the passwords normally stored as hashes. These credentials cover system administrators, application users, and other regular system users.

Service Credentials: Services such as databases, web servers, and APIs use these to authenticate requests between applications.

SSH Keys: An SSH key is a private-public key pair that enables secure remote login without a password, which makes it suitable for automated programs that need strong authentication.

Environment Variables: Named values held in a process’s environment that often carry data such as API tokens, database credentials, or other sensitive information.

Each credential type carries its own security considerations, because any of them may be accidentally leaked in files, scripts, or logs.

Key Credential Files and Storage Methods

Shadow (/etc/shadow)

- Purpose and Security

The /etc/shadow file is a critical component of Linux security architecture, designed to securely store hashed passwords and related metadata. This file is accessible exclusively by the root user or processes running with superuser privileges, effectively minimising the risk of unauthorised access. By restricting access to this file, Linux systems enhance their defences against potential intrusions and malicious activities aimed at compromising user credentials.

- Format and Fields

The structure of the /etc/shadow file is composed of lines representing individual user accounts, each containing several fields separated by colons. The key fields include:

- Username: The user’s login name.

- Hashed Password: The hashed (not encrypted) representation of the user’s password, usually prefixed with an identifier for the hashing scheme.

- Last Change: The date of the last password modification, stored as the number of days since 1 January 1970.

- Minimum Age: The minimum number of days required before the password can be changed again.

- Maximum Age: The maximum number of days before a password must be changed.

- Warning Period: The number of days before password expiration during which the user is warned.

- Inactive Period: The number of days after password expiration before the account is disabled.

- Expiration Date: The date when the account will no longer be accessible, also stored as days since 1 January 1970.

- Reserved Field: Currently unused but reserved for future use.

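This format supports system-wide password ageing policies: it records when each password was last changed and enforces minimum and maximum intervals between changes. Password strength requirements are enforced elsewhere, typically by PAM modules.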
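
To make the nine-field layout concrete, here is a minimal Python sketch that splits one shadow-style line into named fields and converts the two day-count dates into calendar dates. The `ShadowEntry` type and helper names are assumptions for illustration, and the sample line in the usage note is fabricated rather than taken from a real system.

```python
from datetime import date, timedelta
from typing import NamedTuple, Optional

EPOCH = date(1970, 1, 1)


class ShadowEntry(NamedTuple):
    username: str
    password_hash: str
    last_change: Optional[date]
    min_age: Optional[int]
    max_age: Optional[int]
    warn_period: Optional[int]
    inactive_period: Optional[int]
    expiration: Optional[date]
    reserved: str


def _days(field):
    """Empty fields mean 'not set'; otherwise the value is a day count."""
    return int(field) if field else None


def _date(field):
    """Convert a days-since-epoch field to a calendar date."""
    days = _days(field)
    return None if days is None else EPOCH + timedelta(days=days)


def parse_shadow_line(line):
    """Split one colon-separated shadow line into its nine fields."""
    parts = line.rstrip("\n").split(":")
    if len(parts) != 9:
        raise ValueError(f"expected 9 colon-separated fields, got {len(parts)}")
    name, pw, last, mn, mx, warn, inactive, expire, reserved = parts
    return ShadowEntry(name, pw, _date(last), _days(mn), _days(mx),
                       _days(warn), _days(inactive), _date(expire), reserved)
```

For example, `parse_shadow_line("alice:$6$examplesalt$examplehash:19000:0:99999:7:::")` yields an entry whose `last_change` is 8 January 2022 and whose inactive period and expiration date are unset.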

Common Vulnerabilities

Despite its security advantages, the /etc/shadow file is not impervious to threats. Attackers may exploit privilege escalation vulnerabilities or other weaknesses to gain access to this file. If successful, they can extract hashed passwords and attempt to crack them offline using various methods, including brute force attacks or pre-computed hash tables (rainbow tables).

To mitigate these risks, it is essential to utilise strong, salted password-hashing schemes such as bcrypt or SHA-512 crypt. Per-user salts make pre-computed tables impractical, and a high work factor slows every guess, making it far more expensive for attackers to recover passwords from their hashes. Regularly updating hashing algorithms and enforcing stringent password policies are critical strategies in safeguarding against unauthorised access.
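
One way to audit which hashing scheme an account uses is to look at the identifier at the start of the hash field. The sketch below is an assumption-laden helper rather than a complete tool: the prefix table covers only common crypt(3)-style identifiers, and the returned labels are invented for this example.

```python
# Common crypt(3)-style scheme identifiers; this table is not exhaustive.
SCHEMES = {
    "1": "md5crypt (weak)",
    "2a": "bcrypt",
    "2b": "bcrypt",
    "2y": "bcrypt",
    "5": "sha256crypt",
    "6": "sha512crypt",
    "y": "yescrypt",
}


def classify_hash(field):
    """Describe the password field of a shadow entry by its prefix."""
    if field == "":
        return "empty (no password set)"
    if field[0] in "!*":
        return "locked or no usable password"
    if field.startswith("$"):
        # "$6$salt$hash".split("$") -> ["", "6", "salt", "hash"]
        scheme_id = field.split("$")[1]
        return SCHEMES.get(scheme_id, "unknown scheme")
    return "legacy or unrecognised format (treat as weak)"
```

Accounts reported as md5crypt or legacy are reasonable candidates for a forced password change after the system default has been moved to a stronger scheme.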

In summary, while the /etc/shadow file plays a vital role in enhancing Linux system security through controlled access and structured data storage, it remains a target for attackers. Therefore, continuous vigilance and proactive security measures are necessary to protect sensitive user credentials effectively.

System Credential Cache with PAM (Pluggable Authentication Modules)

Overview of PAM

Programs that grant users access to a system use authentication to verify each user’s identity (that is, to establish that a user is who they say they are).

Historically, each program had its own way of authenticating users. In Linux, many programs are configured to use a centralised authentication mechanism called Pluggable Authentication Modules (PAM).

PAM uses a pluggable, modular architecture, which affords the system administrator a great deal of flexibility in setting authentication policies for the system. PAM is a useful system for developers and administrators for several reasons:

- PAM provides a common authentication scheme that can be used with a wide variety of applications.

- PAM provides significant flexibility and control over authentication for both system administrators and application developers.

- PAM provides a single, fully documented library that allows developers to write programs without creating their own authentication schemes.

Credential Caching Mechanism

The Pluggable Authentication Module (PAM) is a critical component in Linux systems, responsible for managing user authentication across various services. One of its functionalities includes the caching of credentials in memory during an active user session. This caching mechanism significantly enhances user experience by allowing access to resources without the need for repeated reauthentication, which is particularly useful in environments where network connectivity may be intermittent.

Benefits of Credential Caching

- Improved User Experience: Cached credentials enable users to authenticate seamlessly, even when the primary authentication source, such as an LDAP server, is unavailable. This is especially beneficial for mobile devices and laptops that frequently switch networks.

- Reduced Latency: By utilising cached credentials, the system can minimise delays associated with remote authentication requests, thereby improving overall performance.

- Resource Efficiency: The caching mechanism reduces the load on authentication servers by limiting the number of requests made for user verification.

Security Implications

Despite its advantages, credential caching poses significant security risks if not managed properly. If PAM’s memory cache is not configured to clear immediately after use, sensitive information such as usernames and passwords may remain accessible in system memory. This vulnerability can be exploited by attackers or unauthorised internal users with administrative rights who gain access to memory dumps or can inspect system memory.

Configuration Files

The Pluggable Authentication Modules (PAM) framework is configured through plain-text files, which lets administrators customise authentication for each service. Key configuration files include /etc/pam.d/common-auth and /etc/pam.d/common-session (the names used on Debian-based distributions), which control authentication methods and manage user sessions, respectively.

Important PAM Configuration Files

- /etc/pam.conf: This is the legacy single-file configuration for PAM. It contains service entries that route requests to specific PAM modules based on defined rules. If the /etc/pam.d/ directory exists, PAM uses it instead and ignores /etc/pam.conf entirely.

- /etc/pam.d/: This directory holds individual configuration files for each PAM-aware application or service. Each file is named after the service it configures, allowing for tailored authentication settings. For instance, the login service has its configuration file located at /etc/pam.d/login.

- Common Files:

  - common-auth: Controls the authentication methods utilised by various services.

  - common-session: Manages session-related tasks after a user has successfully authenticated.
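
Each rule in a file under /etc/pam.d/ has the shape "type control module arguments". As a hedged sketch, the Python helper below parses that shape so rules can be reviewed programmatically. The `PamRule` type and function name are assumptions for this example, the sample rules in the usage note are illustrative, and the parser ignores less common syntax such as bracketed module arguments.

```python
import re
from typing import List, NamedTuple, Optional


class PamRule(NamedTuple):
    type: str          # auth, account, password or session
    control: str       # e.g. required, sufficient, or a [value=action] list
    module: str        # e.g. pam_unix.so
    args: List[str]


# The control field is either a single word or a bracketed list with spaces.
_RULE = re.compile(r"^(-?\w+)\s+(\[[^\]]*\]|\S+)\s+(\S+)\s*(.*)$")


def parse_pam_rule(line) -> Optional[PamRule]:
    """Parse one rule line; return None for comments, blanks and @include."""
    line = line.split("#", 1)[0].strip()
    if not line or line.startswith("@include"):
        return None
    match = _RULE.match(line)
    if match is None:
        raise ValueError(f"unrecognised PAM rule: {line!r}")
    type_, control, module, rest = match.groups()
    return PamRule(type_, control, module, rest.split())
```

Parsing `auth [success=1 default=ignore] pam_unix.so nullok` returns the module `pam_unix.so` with the argument `nullok`, an option worth flagging in a review because it permits accounts with empty passwords.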

Risks of Misconfiguration

Misconfigured PAM files can lead to severe security vulnerabilities, such as:

- Sensitive Data Caching: Improper settings may inadvertently enable caching of sensitive authentication data, increasing the risk of exposure.

- Unauthorised Access: Errors in configuration can allow unauthorised users to gain access to restricted services or resources.

Browser and Application-Specific Credential Storage

Common Applications

Many tools, such as the AWS Command Line Interface (CLI) and Git, can store user credentials in plaintext within user directories. For instance, AWS credentials are typically found in ~/.aws/credentials, while Git credentials may reside in ~/.git-credentials when the store credential helper is enabled. These files are not encrypted and are protected only by file permissions, posing a significant security risk.

In contrast, modern web browsers like Chrome and Firefox store credentials, including login data and session tokens, in their respective profile directories, such as ~/.config/google-chrome and ~/.mozilla/firefox. Although these credentials are encrypted, the encryption is generally tied to the local user account. Consequently, if an attacker gains local access to the user’s profile, they may successfully decrypt and retrieve these stored credentials.

Configuration and Storage Locations

Credential storage locations are typically found within the user’s home directory. If permissions are not configured correctly, these files can be easily accessed by unauthorised users. Implementing restrictive permissions is crucial to prevent unauthorised access to saved credentials, thereby enhancing the overall security posture.

Security Implications

The security implications of misconfigured permissions or inadequate encryption can be severe, as sensitive access details may be exposed. High-risk passwords should not be stored in plaintext or easily accessible formats. Instead, they should be secured using encrypted filesystems or specialised secret management tools designed for secure credential storage.

To mitigate risks associated with browser-stored credentials, organisations should prioritise robust detection strategies that focus on credential access. This includes monitoring for suspicious activities that may indicate credential harvesting attempts, particularly from browsers where many users store sensitive information.

Recommendations for Secure Credential Management

- Use Secure Storage Solutions: Employ encrypted filesystems or dedicated secret management tools to store high-risk passwords securely.

- Implement Restrictive Permissions: Ensure that file permissions are configured to limit access to credential storage locations.

- Monitor Access and Usage: Utilise endpoint detection and response (EDR) solutions to monitor for unauthorised access attempts to credential storage files.

- Educate Users: Provide guidance on the importance of secure password practices and the risks associated with storing credentials in easily accessible formats.
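
The permission recommendation above can be checked with a few lines of Python. This is a minimal sketch under stated assumptions: the candidate list contains only the two plaintext files named earlier in this section, and "too permissive" is taken to mean any access bit set for group or others.

```python
import os
import stat
from pathlib import Path

# Plaintext credential files mentioned in this section; extend as needed.
CANDIDATES = ["~/.aws/credentials", "~/.git-credentials"]


def mode_is_too_permissive(mode):
    """True if group or others have any read, write or execute bit."""
    return bool(stat.S_IMODE(mode) & (stat.S_IRWXG | stat.S_IRWXO))


def audit(paths=CANDIDATES):
    """Return existing credential files that are accessible beyond the owner."""
    findings = []
    for raw in paths:
        path = Path(raw).expanduser()
        if path.is_file() and mode_is_too_permissive(os.stat(path).st_mode):
            findings.append(str(path))
    return findings
```

A file created with mode 0644 is reported, while the same file after `chmod 600` is not. The check says nothing about the owner account itself being compromised, which is why the monitoring recommendation still applies.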

Name Service Switch (NSS)

Purpose of NSS

The Name Service Switch (NSS) is a critical component in Linux that facilitates the operating system’s ability to retrieve and switch between various information sources, such as user and group information, hostname mapping, and more. By leveraging NSS, system administrators can configure the system to source information according to specific environmental requirements. This may involve querying local file databases (e.g., /etc/passwd, /etc/shadow) or network databases (e.g., LDAP, NIS, Active Directory). The flexibility provided by NSS is particularly beneficial in large or networked environments where centralised user management and data retrieval are essential for operational efficiency.

Configuration Files

The configuration for NSS is primarily managed through the /etc/nsswitch.conf file, which defines the order and sources for data retrieval. This file enumerates various databases (e.g., passwd, shadow, group) alongside one or more sources from which to obtain that information. The sources can include:

- files: Local file databases

- ldap: Lightweight Directory Access Protocol

- nis: Network Information Service

- nisplus: NIS+

- dns: Domain Name System

- wins: Windows Internet Name Service
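
To see which sources a system consults for each database, the file can be parsed in a few lines. The Python sketch below is illustrative only: the function names are assumptions, and the set of "network" sources simply mirrors the list above rather than every NSS module that exists.

```python
import re

# Sources from the list above that involve a network lookup.
NETWORK_SOURCES = {"ldap", "nis", "nisplus", "dns", "wins"}


def parse_nsswitch(text):
    """Map each database name to its ordered list of sources."""
    config = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        database, sep, sources = line.partition(":")
        if not sep:
            continue
        # Drop action items such as [NOTFOUND=return]; keep only source names.
        sources = re.sub(r"\[[^\]]*\]", " ", sources)
        config[database.strip()] = sources.split()
    return config


def network_backed(config, databases=("passwd", "shadow", "group")):
    """Return identity databases that consult at least one network source."""
    return {db: [s for s in config.get(db, []) if s in NETWORK_SOURCES]
            for db in databases
            if any(s in NETWORK_SOURCES for s in config.get(db, []))}
```

Given a file containing `passwd: files ldap` and `shadow: files`, `network_backed` reports only `passwd`, which points the reviewer at the LDAP connection as the part that needs transport security.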

Potential Attack Surfaces

If external data sources aren’t secured, they may allow unauthorised users to retrieve sensitive information. Using TLS for LDAP and limiting the scope of external sources enhances security.

Encrypted Storage Locations (e.g., LUKS)

LUKS, or Linux Unified Key Setup, is a sophisticated on-disk format designed for encrypting volumes, primarily within Linux environments. This specification, developed by Clemens Fruhwirth in 2004, serves as a standard for disk encryption, providing robust security features suitable for various applications, including portable storage devices like USB drives.

Structure and Functionality

LUKS employs a unique architecture where metadata is stored at the beginning of the encrypted volume. This metadata includes essential parameters such as:

- Encryption Algorithm: Specifies the algorithm used for encryption (e.g., AES, Twofish).

- Key Length: Defines the size of the encryption key.

- Block Chaining Method: Indicates the method used to chain blocks of data during encryption.

This metadata structure allows users to avoid memorising complex parameters, facilitating ease of use when deploying LUKS on devices like USB memory sticks.

Master Key and Passphrase Management

A key feature of LUKS is its use of a master key, which encrypts the volume data and is itself stored encrypted under a key derived from the user’s passphrase. This design lets several passphrases unlock the same encrypted volume and lets users change a passphrase without re-encrypting the entire disk. Each passphrase occupies its own key slot, which holds a 256-bit salt used in the key derivation. When a user enters a passphrase, LUKS runs it through the key derivation function with each active slot’s salt in turn, uses the result to decrypt that slot’s copy of the master key, and checks the candidate against a digest stored in the header to confirm that the passphrase was correct.
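
The key-slot idea can be sketched in Python. This is a toy model and not the LUKS on-disk format: it wraps the master key with a simple XOR where LUKS uses a cipher and anti-forensic splitting, and the iteration count, dictionary layout, and function names are all assumptions chosen for readability.

```python
import hashlib
import hmac
import os

ITERATIONS = 10_000  # illustrative only; real volumes use a far higher cost


def derive(secret, salt):
    """PBKDF2-HMAC-SHA256, used here for slot keys and the header digest."""
    return hashlib.pbkdf2_hmac("sha256", secret, salt, ITERATIONS, dklen=32)


def xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))


def new_volume():
    """Create a random master key and a header that can verify it."""
    master_key = os.urandom(32)
    digest_salt = os.urandom(32)
    header = {"digest_salt": digest_salt,
              "digest": derive(master_key, digest_salt),
              "slots": []}
    return master_key, header


def add_key_slot(header, master_key, passphrase):
    """Store the master key wrapped under a passphrase-derived key."""
    salt = os.urandom(32)  # 256-bit per-slot salt
    wrapped = xor(master_key, derive(passphrase, salt))  # toy wrapping
    header["slots"].append({"salt": salt, "wrapped": wrapped})


def unlock(header, passphrase):
    """Try every slot; return the master key if any slot verifies."""
    for slot in header["slots"]:
        candidate = xor(slot["wrapped"], derive(passphrase, slot["salt"]))
        check = derive(candidate, header["digest_salt"])
        if hmac.compare_digest(check, header["digest"]):
            return candidate
    return None
```

Adding two slots with different passphrases lets either one recover the same master key, and removing a slot revokes that passphrase without touching the encrypted data, which is the property the paragraph above describes.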

Versions and Enhancements

LUKS has evolved over time, with two primary versions: LUKS1 and LUKS2. LUKS2 introduces several enhancements over its predecessor:

- Resilience to Header Corruption: LUKS2 features improved mechanisms for header integrity, including checksums and redundant metadata storage.

- Flexible Metadata Storage: The metadata in LUKS2 is formatted in JSON, allowing for future extensibility without altering binary structures.

- Advanced Key Derivation: By default, LUKS2 uses the Argon2 key derivation function, which provides enhanced security against brute-force attacks compared to PBKDF2 used in LUKS1.

Practical Applications

LUKS is particularly well-suited for encrypting entire block devices, making it an ideal choice for protecting sensitive data on mobile devices and removable storage media. Its ability to encrypt arbitrary data formats allows it to be utilised effectively for swap partitions and databases that require secure storage solutions.

Environment Variables

Usage of Environment Variables

Environment variables are essential components in modern software development, commonly utilised to store sensitive information such as API tokens, database credentials, and configuration secrets. They provide a flexible way to manage configuration settings across different environments (development, testing, production) without hardcoding sensitive data directly into the source code. However, the convenience of environment variables comes with significant risks, particularly when processes and users can access these variables without appropriate safeguards.

Risks and Best Practices

While environment variables offer a straightforward solution for managing configuration data, they inherently lack fine-grained access control. This deficiency can lead to potential exposure of sensitive information if not properly secured. For instance, if a malicious actor gains access to a system where environment variables are accessible, they could retrieve critical secrets that can compromise the entire application or infrastructure. Furthermore, environment variables can be inadvertently logged or exposed through error messages, increasing the risk of data leaks.
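
The lack of fine-grained control is easy to demonstrate: by default every child process inherits the parent’s full environment. The Python sketch below, which uses a made-up variable name `DEMO_API_TOKEN`, shows the inheritance and one way to launch a child with the secret removed.

```python
import os
import subprocess
import sys


def child_view(name, env=None):
    """Return the value a freshly spawned child process sees for `name`."""
    code = ("import os, sys; "
            "sys.stdout.write(os.environ.get(sys.argv[1], '<unset>'))")
    result = subprocess.run([sys.executable, "-c", code, name],
                            env=env, capture_output=True, text=True, check=True)
    return result.stdout


def env_without(*names):
    """Copy of the current environment with the given variables removed."""
    return {k: v for k, v in os.environ.items() if k not in names}
```

After `os.environ["DEMO_API_TOKEN"] = "s3cr3t"`, the call `child_view("DEMO_API_TOKEN")` returns the secret, whereas `child_view("DEMO_API_TOKEN", env_without("DEMO_API_TOKEN"))` returns `<unset>`. Scrubbing the environment this way limits accidental propagation but does not replace a proper secrets manager.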

Best Practices for Managing Sensitive Information

To mitigate the risks associated with environment variables, organisations should consider adopting purpose-built secrets management solutions. These solutions provide enhanced security features that are crucial for protecting sensitive data:

- Role-Based Access Control (RBAC): This feature allows organisations to define who can access specific secrets based on their roles within the organisation, ensuring that only authorised personnel have access to sensitive information.

- Audit Logs: Maintaining detailed logs of access and modifications to secrets helps organisations monitor and track any unauthorised attempts to access sensitive data.

- Automated Secret Rotation: Regularly rotating secrets reduces the risk of long-term exposure and ensures that even if a secret is compromised, its usability is limited.

SSSD and Quest Authentication Services: Cached Credential Mechanisms in Linux

In Linux systems that are integrated into enterprise identity infrastructures such as Active Directory, tools like SSSD and Quest Authentication Services (VAS/One Identity) are used to provide seamless authentication via centralised directories. One of their core capabilities is credential caching, which allows a user to authenticate using previously validated credentials even if the machine becomes disconnected from the domain controller. This enables persistent access for mobile or remote systems.

Usage and Functionality

When a user logs in successfully to a system that uses SSSD or Quest, the authentication service caches the user’s credentials locally in an encrypted or obfuscated format. This cache is then referenced in subsequent login attempts, especially when the system is offline.

- SSSD: Caches credentials under /var/lib/sss/ (the cache databases live in /var/lib/sss/db/) and uses /etc/sssd/sssd.conf for configuration. Key setting: cache_credentials = true.

- Quest/VAS: Stores similar data typically in /var/opt/quest/vas/ with configuration handled via vas.conf.

Both tools store:

- Encrypted user password hashes (or offline Kerberos tickets)

- Cached group membership and authorisation data

- Session configuration

Best Practices for Secure Credential Caching

While credential caching improves usability, it introduces potential risks if not properly secured. Best practices include:

- Enable disk encryption (e.g., LUKS) to protect the credential cache at rest.

- Use restrictive file permissions on /var/lib/sss and /var/opt/quest/vas.

- Set cache timeout policies using offline_credentials_expiration in SSSD.

- Disable caching on sensitive systems (e.g., jump boxes) via cache_credentials = false.

- Clear cache on user deletion or system deprovisioning to prevent stale access.

- Monitor for abnormal login patterns—especially repeated offline logins.
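
Two of the settings named above can be reviewed automatically, since sssd.conf uses an INI-style layout. The following Python sketch assumes the usual arrangement with `cache_credentials` in each `[domain/...]` section and `offline_credentials_expiration` in `[pam]`; the function name and the sample configuration in the usage note are made up for illustration.

```python
import configparser


def caching_report(conf_text):
    """Return ({domain: caching enabled?}, offline expiry in days)."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    # 0 is treated here as "no expiry configured".
    expiry_days = parser.getint("pam", "offline_credentials_expiration",
                                fallback=0)
    domains = {}
    for section in parser.sections():
        if section.startswith("domain/"):
            name = section[len("domain/"):]
            domains[name] = parser.getboolean(section, "cache_credentials",
                                              fallback=False)
    return domains, expiry_days
```

For a configuration with `cache_credentials = true` under `[domain/example.com]` and `offline_credentials_expiration = 3` under `[pam]`, the function returns `({"example.com": True}, 3)`. A domain with caching enabled and an expiry of 0 deserves a closer look on sensitive hosts.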

LDAP Authentication

Overview of LDAP

The Lightweight Directory Access Protocol (LDAP) is a robust, vendor-neutral application protocol designed for accessing and managing directory services over an Internet Protocol (IP) network. LDAP provides a structured and hierarchical framework for storing, retrieving, adding, updating, and deleting user, group, and resource information, making it essential for identity and access management within organisations. Its design facilitates efficient data retrieval and management through a tree-like structure known as the Directory Information Tree (DIT), which organises data intuitively for both users and applications.

Key Features of LDAP

- Hierarchical Structure: LDAP organises data in a tree-like format that enhances efficiency in data management and retrieval.

- Portability: Being platform-independent, LDAP ensures seamless integration across various environments.

- Security Measures: LDAP incorporates robust authentication mechanisms and secure data transmission protocols to protect sensitive information.

Credential Storage

LDAP serves as a secure repository for user identity information, allowing only authorised applications to access authentication details. This security is paramount; however, misconfigurations—such as the absence of encryption or inadequate access controls—can expose credential information, rendering the system vulnerable to security breaches. Implementing stringent access controls and encryption protocols is critical to maintaining the integrity of stored credentials.

Common Misconfigurations

- Lack of Encryption: Not utilising secure communication channels (e.g., LDAPS or StartTLS) can lead to interception of sensitive data, including bind passwords.

- Inadequate Access Controls: Failing to enforce strict access policies can result in unauthorised access to sensitive information.
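
A first-pass check for the encryption misconfiguration is to inspect the URI scheme that clients are configured with. The Python sketch below is a simplification: the function name and return labels are assumptions, and a plain `ldap://` URI may still be upgraded with StartTLS, which this check cannot see.

```python
from urllib.parse import urlsplit


def ldap_transport(uri):
    """Classify an LDAP URI by the transport its scheme implies."""
    scheme = urlsplit(uri).scheme.lower()
    if scheme == "ldaps":
        return "tls"
    if scheme == "ldapi":
        return "local-socket"
    if scheme == "ldap":
        return "cleartext-unless-starttls"
    raise ValueError(f"not an LDAP URI: {uri!r}")
```

Any server URI classified as `cleartext-unless-starttls` should be followed up by confirming that the client configuration actually requires StartTLS.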

LDAP Architecture

Understanding the architecture of LDAP is crucial for leveraging its capabilities effectively. The architecture consists of several models:

- Information Model: Defines the structure of entries and attributes within the directory.

- Naming Model: Assigns unique Distinguished Names (DNs) to entries, ensuring each entry is distinctly identifiable.

- Functional Model: Outlines permissible operations such as searching, modifying, and deleting entries.

- Security Model: Focuses on user authentication and access control mechanisms to safeguard valuable data.

LDAP Operations

LDAP supports a variety of operations that facilitate interaction with the directory service:

- Connect Operation: Establishes a network connection between the client and the LDAP server.

- Bind Operation: Authenticates the client to the server.

- Search Operation: Retrieves specific information from the directory.

- Modify Operation: Allows changes to existing directory entries.

- Add Operation: Facilitates the addition of new entries to the directory.

- Delete Operation: Enables removal of entries from the directory.

Understanding Kerberos: A Comprehensive Overview

Core Concepts of Kerberos

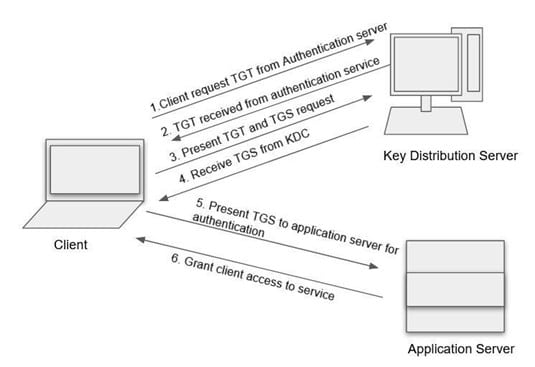

Kerberos is a sophisticated network authentication protocol that enables secure user access to multiple services without the need for repeated password entries. Developed at MIT, it employs a unique ticket-based system that enhances security by eliminating the transmission of passwords over the network.

Kerberos operates through several key entities:

- Key Distribution Centre (KDC): This central authority is responsible for authenticating users and services within the network. It comprises two main components: the Authentication Server (AS), which verifies user credentials, and the Ticket Granting Server (TGS), which issues service tickets.

- Client: The user or application seeking access to a service. The client must first authenticate with the KDC using its credentials.

- Service: The resource or application that the client wishes to access. Each service must be registered with the KDC to facilitate secure communication.

Ticket Granting Tickets (TGT) in Kerberos Authentication

In the realm of network security, Ticket Granting Tickets (TGT) play a pivotal role within the Kerberos authentication framework. Upon initial authentication, users are issued a TGT by the Key Distribution Centre (KDC), which serves as a credential to request access to various services without the need for repeated credential input. This mechanism not only enhances user convenience but also bolsters security by minimising the frequency with which sensitive credentials are transmitted over the network.

On Unix systems, TGTs are typically stored in a credential cache, by default a file such as /tmp/krb5cc_* that should be readable only by its owner, which allows quick access to services. Users can specify the location of their credential cache using the KRB5CCNAME environment variable. For an in-depth understanding, resources such as “Kerberos Tickets on Linux Red Teams” by Mandiant provide valuable insights into TGT management and usage in practical scenarios.
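
As a small sketch of how the cache location is resolved, the Python function below interprets KRB5CCNAME in its "TYPE:residual" form and falls back to the per-user file under /tmp. The list of cache types is a non-exhaustive assumption, and site configuration in krb5.conf can change the default, which this example does not read.

```python
# Cache types commonly seen in KRB5CCNAME; not an exhaustive list.
KNOWN_TYPES = {"FILE", "DIR", "KEYRING", "MEMORY", "KCM"}


def ccache_location(environ, uid):
    """Return (type, residual) for the credential cache a user would use."""
    name = environ.get("KRB5CCNAME") or f"FILE:/tmp/krb5cc_{uid}"
    ctype, sep, residual = name.partition(":")
    if not sep or ctype not in KNOWN_TYPES:
        # A bare path with no recognised type prefix is treated as a file.
        return "FILE", name
    return ctype, residual
```

Calling `ccache_location(os.environ, os.getuid())` on a host shows whether tickets live in a file that can be copied off the machine or in a kernel keyring, which matters when assessing how easily a ticket could be stolen.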

Mitigating Kerberoasting in Active Directory: Transitioning from RC4 to AES

As organisations increasingly prioritise cybersecurity, transitioning from the outdated RC4 encryption to the more robust AES (Advanced Encryption Standard) for Kerberos authentication has become essential. This shift not only enhances security but also mitigates the risk of kerberoasting attacks, where attackers request service tickets and crack the service account passwords offline, a task that weak encryption types make much cheaper.

Understanding the Vulnerability

Kerberoasting is a technique in which an attacker with any valid domain account requests service tickets for service accounts in Active Directory (AD) and then attempts to crack the ticket encryption offline to recover the account passwords. Tickets issued with weak encryption types, particularly RC4, are far cheaper to crack. By transitioning to AES, organisations can significantly reduce their exposure, although strong service account passwords remain necessary.

Steps to Transition from RC4 to AES

Assess Current Encryption Settings:

- Verify the current encryption types used across your Active Directory environment. This can be done by inspecting the msDS-SupportedEncryptionTypes attribute for user and service accounts.

Modify Active Directory Settings:

- Update the encryption settings for service accounts that currently use RC4. This involves setting the msDS-SupportedEncryptionTypes attribute to allow only AES encryption (value 24, which enables both AES128 and AES256).

- For accounts that require compatibility with older systems, consider setting this attribute to “28” (AES + RC4) temporarily while you transition.
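
The values 24 and 28 are bit masks, which the following Python sketch decodes. The bit assignments are the documented ones for msDS-SupportedEncryptionTypes; the function names are assumptions, and the table omits higher flag bits that are not encryption types.

```python
# Encryption-type bits of msDS-SupportedEncryptionTypes (low five bits only).
ENC_TYPE_BITS = {
    0x01: "DES-CBC-CRC",
    0x02: "DES-CBC-MD5",
    0x04: "RC4-HMAC",
    0x08: "AES128-CTS-HMAC-SHA1-96",
    0x10: "AES256-CTS-HMAC-SHA1-96",
}


def decode_enc_types(value):
    """List the encryption types enabled by an attribute value."""
    return [name for bit, name in ENC_TYPE_BITS.items() if value & bit]


def allows_rc4(value):
    """True if the RC4-HMAC bit is set."""
    return bool(value & 0x04)
```

`decode_enc_types(24)` returns the two AES types, while `decode_enc_types(28)` additionally includes RC4-HMAC, matching the temporary compatibility setting described above.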

Group Policy Configuration:

- Implement Group Policy Objects (GPOs) to enforce AES encryption across all domain members. Specifically, configure the “Network Security: Encryption types allowed for Kerberos” policy to allow only AES encryption.

Monitor Kerberos Ticket Requests:

- Centralise logging for Event ID 4769 across all Domain Controllers (DCs) to monitor ticket requests and identify any services that may still rely on RC4. This proactive monitoring will help catch any issues before fully disabling RC4 support.
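
Once the events are centralised, finding RC4 usage is a matter of filtering on the ticket encryption type, where 0x17 denotes RC4-HMAC. The Python sketch below assumes the events have already been exported and parsed into dictionaries; that input shape is hypothetical, although the field names mirror those shown in the event itself.

```python
RC4_HMAC = 0x17  # Kerberos encryption type 23


def rc4_ticket_requests(events):
    """Return service names from 4769 events whose ticket used RC4-HMAC."""
    services = []
    for event in events:
        if event.get("EventID") != 4769:
            continue
        # The field is normally rendered as a hex string such as "0x17".
        etype = int(str(event.get("TicketEncryptionType", "0")), 16)
        if etype == RC4_HMAC:
            services.append(event.get("ServiceName"))
    return services
```

Services that keep appearing in this list are the ones to fix or exempt before RC4 is disabled domain-wide.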

Registry Key Adjustments:

- On Domain Controllers, set the registry key DefaultDomainSupportedEncTypes to enforce AES as the default encryption type when msDS-SupportedEncryptionTypes is not explicitly set on an account.

Testing and Validation:

- After making these changes, conduct thorough testing to ensure that all services function correctly without relying on RC4. Pay special attention to any critical accounts that may have dependencies on legacy systems.

Gradual Rollout:

- If your environment includes older systems that do not support AES, consider a phased approach where you gradually disable RC4 support while ensuring compatibility with essential services.

Documentation and Training:

- Document the changes made and provide training for IT staff on managing encryption types in Active Directory moving forward.

Credentials in the /proc Filesystem

Overview of the /proc

The /proc filesystem serves as a vital virtual interface within Unix-like operating systems, providing comprehensive insights into the state of running processes. It functions as a dynamic representation of kernel data, offering real-time information on various aspects such as process environment variables, memory utilisation, open file descriptors, and command-line arguments. Each active process is represented by a unique directory within /proc, identified by its Process ID (PID), formatted as /proc/<PID>. This hierarchical structure enables system administrators and applications to access process-specific data efficiently, facilitating resource management and performance monitoring. Consequently, the /proc filesystem is an indispensable tool for system diagnostics and introspection, acting as a control centre for kernel interactions and process management.

Security Concerns

Despite its utility, the /proc filesystem presents significant security challenges. Sensitive information may be exposed to unauthorised users if proper permissions are not enforced. The detailed data contained within /proc—such as environment variables, memory maps, open files, and command-line arguments—can inadvertently reveal critical credentials like API keys, tokens, and passwords stored in environment variables. Notably, files located under /proc/<PID>/, such as environ and cmdline, can disclose these sensitive details. This vulnerability makes the /proc filesystem a potential target for attackers seeking to extract confidential information from running processes.

Common Techniques for Credential Dumping

Credential dumping is a significant cybersecurity threat where attackers extract authentication credentials from operating systems. This technique is essential for lateral movement within networks and can lead to severe breaches if not adequately mitigated. Below are key methods employed in credential dumping, particularly on Linux systems.

1. Cached Credential Discovery and Exploitation (SSSD & Quest)

Attacker Perspective: Discovery and Exploitation

From an attacker’s standpoint, cached credentials present an attractive post-exploitation target—especially in environments where network authentication is unavailable.

Discovery Techniques

- Identify systems using SSSD/Quest:

  - ps aux | grep sssd

  - ls /etc/sssd/sssd.conf

  - ls /opt/quest/vas/vas.conf

- Look for credential cache paths:

  - SSSD: /var/lib/sssd/db/ccache_*, /var/lib/sssd/db/cache_*.ldb

  - Quest: /var/opt/quest/vas/ (especially vasd or vas_auth data)

- Check configuration files:

  - SSSD: Look for cache_credentials = true or offline_credentials_expiration

  - Quest: Look for caching-related flags in vas.conf
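Defenders can run the same configuration check to find out which SSSD domains keep credentials on disk. The sketch below is illustrative only: it assumes sssd.conf is plain INI text that Python's configparser can read, and that an absent cache_credentials option means caching is off. The function name is hypothetical.

```python
import configparser


def domains_caching_credentials(conf_text: str) -> list[str]:
    """Return the SSSD domain names that have cache_credentials enabled."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(conf_text)
    caching = []
    for section in parser.sections():
        # Per-domain settings live in sections named [domain/<name>].
        if not section.startswith("domain/"):
            continue
        if parser.getboolean(section, "cache_credentials", fallback=False):
            caching.append(section[len("domain/"):])
    return caching
```

On a real host, the input would be the contents of /etc/sssd/sssd.conf, which normally requires root to read.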

Abuse Techniques

- Credential Extraction (Local Privilege Required):

  - The ldb cache files hold cached password hashes, which can be extracted and brute-forced offline. Reading the files requires root privileges and an ldb parsing tool.

  - Tools like ldbsearch, ldbedit, or custom scripts using python-ldb can help extract cached metadata.

- TGT Replay:

  - In some setups, SSSD or Quest may cache Kerberos tickets (TGTs). If accessible, these can be replayed to access other services, especially in poorly segmented environments.

- Offline Enumeration:

  - Parse cached databases to enumerate AD user names, domain info, and group memberships.

Persistence

Attackers can tamper with the cache to:

- Inject users with cached credentials for persistence

- Avoid detection by operating fully offline

2. Extracting Password Hashes from /etc/shadow

With elevated privileges, attackers can access the /etc/shadow file, which securely stores hashed passwords for all system users. By extracting these hashes, adversaries can utilise sophisticated cracking tools such as John the Ripper or Hashcat to perform offline attacks. These attacks allow them to guess passwords without alerting the system administrators, employing various techniques, including:

- Brute-force attacks: Systematically trying every possible password combination.

- Dictionary attacks: Using a pre-defined list of likely passwords.

- Rainbow tables: Precomputed tables for reversing cryptographic hash functions. These are largely ineffective against the salted hash formats that modern /etc/shadow files use.

These methods are particularly effective against weak or commonly used passwords, making it crucial for organisations to enforce strong password policies and regular audits of their user accounts.
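One practical audit is to check which hashing scheme each account uses, since legacy schemes are much cheaper to crack offline. The sketch below classifies a single /etc/shadow line by its hash prefix. The prefix table covers common crypt(3) identifiers but is not exhaustive, and the function and constant names are illustrative.

```python
# Common crypt(3) identifiers; the list is not exhaustive.
HASH_SCHEMES = {
    "1": "md5crypt",
    "2a": "bcrypt",
    "2b": "bcrypt",
    "2y": "bcrypt",
    "5": "sha256crypt",
    "6": "sha512crypt",
    "y": "yescrypt",
}

# Schemes generally considered too weak for new deployments.
WEAK_SCHEMES = {"md5crypt", "descrypt"}


def classify_shadow_entry(line: str) -> tuple[str, str]:
    """Return (username, scheme) for one well-formed /etc/shadow line."""
    fields = line.rstrip("\n").split(":")
    user, secret = fields[0], fields[1]
    if secret == "":
        return user, "empty"  # no password set
    if secret[0] in "!*":
        return user, "locked"  # password login disabled
    if secret.startswith("$"):
        ident = secret.split("$")[1]  # "$6$salt$hash" -> "6"
        return user, HASH_SCHEMES.get(ident, "unknown")
    return user, "descrypt"  # traditional DES hashes carry no "$" prefix
```

Accounts whose scheme falls in `WEAK_SCHEMES`, or that come back as "empty", are the first ones to review.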

3. Reading /proc for Sensitive Data

Attackers can exploit files within the /proc filesystem, such as /proc/<PID>/mem, /proc/<PID>/cmdline, and /proc/<PID>/environ, to read sensitive information, including process memory, command-line arguments, and environment variables. By using a debugger such as gdb, which attaches to a running process through the ptrace system call, they can dump the process's memory. This technique can expose sensitive data temporarily held in RAM, such as plaintext passwords.

One notable tool used in this context is MimiPenguin, which specifically targets clear-text credentials stored in memory. It operates by dumping a process’s memory and scanning for lines that may contain clear-text credentials. MimiPenguin estimates the likelihood that each word is a valid credential by checking it against hashes found in /etc/shadow and in memory, and by running regex searches. When potential matches are identified, it prints them to standard output.

4. SSH Key and Browser Credential Extraction

The compromise of SSH keys and browser credentials represents a significant threat to system security. When attackers gain access to a user’s home directory, they can extract SSH keys stored in the ~/.ssh/ directory. These keys allow unauthorised access to other systems without the need for password authentication, facilitating lateral movement within networks. Attackers can utilise these stolen keys to impersonate users and perform actions that could lead to severe data breaches or system compromises.

Risks Associated with SSH Key Compromise

- Stolen Private Keys: Keys left in publicly accessible directories or shared via insecure channels can be easily exploited.

- Duplicate Keys: Multiple copies of the same key across different systems increase the attack surface.

- Unsecured Private Keys: Keys without adequate protection measures (e.g., passphrases) can be accessed by unauthorised users.
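The "unsecured private keys" risk can be partly checked by looking for private key files that other local users can read. The sketch below is a simplified audit under stated assumptions: it recognises keys only by their PEM-style header, it checks file permissions but not passphrase protection, and the function name is hypothetical.

```python
import stat
from pathlib import Path


def find_exposed_private_keys(ssh_dir: str) -> list[Path]:
    """Return private key files in ssh_dir that group or other users can access."""
    exposed = []
    for path in sorted(Path(ssh_dir).iterdir()):
        if not path.is_file():
            continue
        try:
            with path.open("rb") as handle:
                head = handle.read(64)
        except OSError:
            continue  # unreadable by the auditing user; skip it
        # Matches headers such as "-----BEGIN OPENSSH PRIVATE KEY-----".
        if b"PRIVATE KEY-----" not in head:
            continue
        mode = stat.S_IMODE(path.stat().st_mode)
        if mode & 0o077:  # any group or other permission bit is set
            exposed.append(path)
    return exposed
```

A call such as `find_exposed_private_keys("/home/alice/.ssh")` (the path is only an example) returns the key files whose mode is broader than 0600.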

5. Browser Credential Extraction

In addition to SSH keys, attackers often target browser directories such as ~/.mozilla for Firefox or ~/.config/google-chrome for Chrome. These directories may contain sensitive information, including credentials, cookies, and session tokens. By accessing these files without permission, attackers can hijack active sessions or extract login credentials for various web applications. This capability underscores the importance of securing browser data as part of a comprehensive defence against credential dumping.

6. Kerberos Cached Ticket Discovery and Exploitation

Kerberos tickets, especially Ticket Granting Tickets (TGTs), are often cached locally to support offline authentication or reduce authentication overhead. These cached tickets can be harvested and replayed by an attacker to impersonate a legitimate user without knowing their password.

Discovery Techniques

- Run klist to view active tickets.

- Inspect /tmp/krb5cc_* or /var/tmp/krb5cc_* for cached ticket files.

- Review /etc/krb5.conf for cache-related settings.

7. Environment Variable Credential Discovery ($ENV)

From an attacker’s perspective, environment variables can be a rich source of sensitive data, especially in environments where credentials are injected into processes for application or automation purposes.

Discovery Techniques

- Run printenv or env, or read /proc/<PID>/environ and replace the null bytes with newlines.
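The environ file is a sequence of NAME=value entries separated by null bytes, which is why the null bytes must be replaced before the content is readable. As a minimal sketch for auditing your own processes, the code below parses that format and reports only the variable names that look like secrets. The name pattern and the function names are assumptions to tune for each environment.

```python
import re

# Heuristic for secret-looking variable names; adjust to local conventions.
SECRET_NAME = re.compile(r"(PASS|SECRET|TOKEN|API_?KEY|CREDENTIAL)", re.IGNORECASE)


def parse_environ(raw: bytes) -> dict[str, str]:
    """Parse the null-separated NAME=value format of /proc/<PID>/environ."""
    env = {}
    for entry in raw.split(b"\0"):
        if not entry or b"=" not in entry:
            continue  # skip the trailing empty entry and malformed items
        name, _, value = entry.partition(b"=")
        env[name.decode(errors="replace")] = value.decode(errors="replace")
    return env


def suspicious_names(env: dict[str, str]) -> list[str]:
    """Return the variable names (never the values) that look like secrets."""
    return sorted(name for name in env if SECRET_NAME.search(name))
```

For the current process, the input can be obtained with `open("/proc/self/environ", "rb").read()`. Any hits suggest moving that secret into a file with restricted permissions or a dedicated secrets manager.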

Mitigation Strategies

To mitigate the risks associated with the credential dumping techniques described above, organisations should implement robust security measures:

- Key Management: Regularly audit and manage SSH keys to ensure that only necessary keys are in use. Remove orphaned or unused keys promptly.

- Access Controls: Implement strict access controls on directories containing sensitive information, ensuring that only authorised users have access.

- Encryption: Use strong encryption methods for storing sensitive data, including SSH keys and browser credentials.

- Monitoring and Alerts: Establish monitoring systems to detect unauthorised access attempts or anomalies in key usage patterns.

- Restrict root/local access to systems with credential caches.

- Use kernel lockdown mechanisms or memory hardening to prevent offline cache inspection.

- Rotate Kerberos secrets and invalidate cached credentials upon compromise.

- Monitor for unauthorised reads or access to credential cache paths using auditd or EDR solutions.

By adopting these strategies, organisations can significantly reduce their vulnerability to credential dumping attacks, whether they target SSH keys, browser data, cached credentials, or Kerberos tickets.
