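The article examines the “messy middle” problem that security operations centers (SOCs) face when handling alerts: large volumes of alerts are not investigated in time, and critical alerts get overlooked. AI is being introduced to break this bottleneck; Morpheus AI uses a hybrid model that offers transparent autonomy and flexible workflows, keeping investigations fast while reducing risk. 2025-09-18 20:16:04 Author: securityboulevard.com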

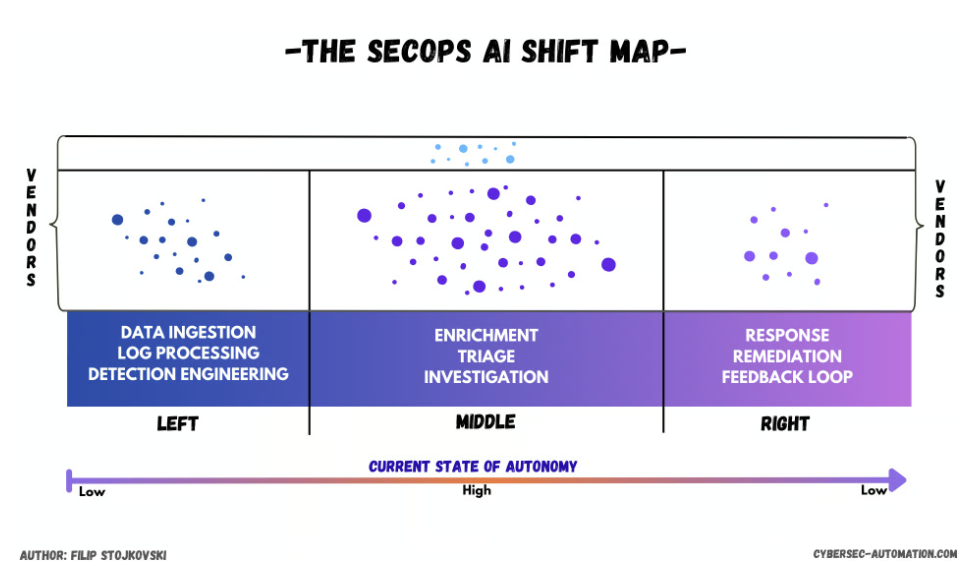

Forty percent of alerts are never investigated, and sixty-one percent of teams later admit they ignored alerts that proved critical. The SecOps AI shift map below shows how the action has moved to the middle of the SOC lifecycle (triage and investigation), where many AI SOC startups now promise quick wins against this bottleneck.

In his latest post, cybersecurity analyst Filip Stojkovski calls this stage the “messy middle” and argues that it is where AI could be transformative: evidence is inconsistent, context lives across many tools, and no scripted playbook can stretch to fit every case. It is where alert floods pile up, SOC analysts burn out, and backlogs grow.

What the Messy Middle Looks Like

“The middle of the SOC lifecycle isn’t just messy; it’s where the real detective work happens,” Stojkovski says, explaining why this stage has been so fragile for automation.

Alerts pull in data from cloud, endpoint, identity, and business systems, and each source “speaks” a different dialect. Analysts bounce between SIEM queries and logs, chasing fragments. The problem is not just gathering raw data but turning those fragments into a single, defensible story while the investigation shifts with every new clue.
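To make the “dialect” problem concrete, here is a minimal Python sketch of the normalization step that has to happen before a single story can emerge. It assumes three hypothetical sources (`edr`, `idp`, `cloud`) with invented field names, maps each into one shared `Event` shape, and orders one user's events into a timeline. It illustrates the general technique only; it is not how Morpheus or any particular SIEM does it.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class Event:
    source: str
    timestamp: datetime
    user: str
    host: str
    summary: str

# Hypothetical field names: each tool "speaks" its own dialect.
FIELD_MAPS = {
    "edr":   {"time": "event_time", "user": "user_name", "host": "device", "summary": "detection"},
    "idp":   {"time": "published", "user": "actor", "host": "client", "summary": "event_type"},
    "cloud": {"time": "ts", "user": "principal", "host": "src_ip", "summary": "action"},
}

def normalize(source: str, raw: dict) -> Event:
    """Translate one raw alert into the shared schema."""
    m = FIELD_MAPS[source]
    ts = raw[m["time"]]
    # Some sources send epoch seconds, others ISO 8601 strings.
    when = (datetime.fromtimestamp(ts, tz=timezone.utc)
            if isinstance(ts, (int, float)) else datetime.fromisoformat(ts))
    # Reduce "JDoe@example.com" and "jdoe" to the same identity key.
    user = raw[m["user"]].lower().split("@")[0]
    return Event(source, when, user, raw[m["host"]], raw[m["summary"]])

def build_stories(events):
    """Group events per user and order each group into a single timeline."""
    stories = defaultdict(list)
    for event in events:
        stories[event.user].append(event)
    for timeline in stories.values():
        timeline.sort(key=lambda e: e.timestamp)
    return dict(stories)

raw_alerts = [
    ("edr", {"event_time": 1758189900, "user_name": "jdoe",
             "device": "LAPTOP-42", "detection": "suspicious_powershell"}),
    ("idp", {"published": "2025-09-18T10:02:00+00:00", "actor": "JDoe@example.com",
             "client": "vpn-gw", "event_type": "mfa_push_accepted"}),
    ("cloud", {"ts": "2025-09-18T10:07:00+00:00", "principal": "jdoe",
               "src_ip": "203.0.113.7", "action": "CreateAccessKey"}),
]

stories = build_stories(normalize(s, r) for s, r in raw_alerts)
for e in stories["jdoe"]:
    print(e.timestamp.isoformat(), e.source, e.summary)
```

Keeping the per-source mappings in data rather than scattered through playbook logic means a renamed field is a one-line fix.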

Why Black-Box AI SOCs and Build-It-Yourself SOAR Fail

A “plug-and-play” AI SOC looks great on day one. It connects, clusters, and even investigates. The cost shows up later. You cannot see the logic, tune the steps, or add a single validation where it matters. If a platform suppresses a signal you care about, trust drops. Fast.

Owning every build-it-yourself SOAR workflow feels safe until API changes and schema shifts break the playbooks, leaving analysts to debug and re-stitch the hardest, most fragile part of the workflow. Hiring more engineers just to keep these workflows alive does not scale.
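One cheap defense against schema drift is a contract check that runs before a playbook step touches a payload, so a renamed or retyped field fails loudly instead of silently producing a wrong result. This minimal sketch assumes a hypothetical payload layout and a hypothetical `isolate_host_step`; it is not tied to any real SOAR product's API.

```python
class SchemaDriftError(Exception):
    """Raised when a payload no longer matches what a playbook step expects."""

# Hypothetical contract for one step: dotted field path -> expected type.
REQUIRED_FIELDS = {
    "alert.id": str,
    "alert.severity": int,
    "host.hostname": str,
}

def get_path(payload: dict, path: str):
    """Walk a dotted path such as 'host.hostname'; raise KeyError if absent."""
    node = payload
    for key in path.split("."):
        if not isinstance(node, dict) or key not in node:
            raise KeyError(path)
        node = node[key]
    return node

def check_contract(payload: dict, contract: dict) -> list[str]:
    """Return human-readable problems (an empty list if the payload conforms)."""
    problems = []
    for path, expected in contract.items():
        try:
            value = get_path(payload, path)
        except KeyError:
            problems.append(f"missing field: {path}")
            continue
        if not isinstance(value, expected):
            problems.append(
                f"{path}: expected {expected.__name__}, got {type(value).__name__}")
    return problems

def isolate_host_step(payload: dict) -> str:
    """Hypothetical playbook step that refuses to run on a drifted payload."""
    problems = check_contract(payload, REQUIRED_FIELDS)
    if problems:
        raise SchemaDriftError("; ".join(problems))
    return f"isolate {payload['host']['hostname']}"

# Yesterday's payload vs. today's, after a vendor renamed and retyped fields.
before = {"alert": {"id": "A-1", "severity": 7}, "host": {"hostname": "LAPTOP-42"}}
after = {"alert": {"id": "A-1", "severity": "high"}, "host": {"name": "LAPTOP-42"}}

print(isolate_host_step(before))
print(check_contract(after, REQUIRED_FIELDS))
```

The check does not repair anything; it turns a silent break into an explicit error an engineer can act on.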

The Hybrid Answer: Autonomy With Guardrails

You don’t have to choose between black box and brittle. Stojkovski’s post offers a brilliant gaming analogy for the hybrid AI SOC model. First comes a ‘city-builder’ setup phase, where your team lays down rules, integrations, and approvals. Second comes an ‘RPG’ run phase, where autonomous AI agents investigate within those rules, pivot, and propose fixes, but always “stay on the roads you created”. This approach uses AI for the heavy lifting in the middle and deterministic guardrails for the steps that must be precise. It delivers speed while keeping human approvals where risk is high.
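The two phases map naturally onto a policy table plus a gate. In this minimal sketch, the “city-builder” phase is a hypothetical `POLICY` dict the team writes once, and the “RPG” phase is a `gate` function that every agent proposal must pass through: unknown actions are rejected (off the roads), high-risk actions wait for a human, and routine ones run. The action names and the two-level risk scale are assumptions for illustration.

```python
from dataclasses import dataclass
from enum import Enum

class Risk(Enum):
    LOW = "low"
    HIGH = "high"

@dataclass(frozen=True)
class Proposal:
    action: str  # what the agent wants to do
    target: str  # what it wants to do it to

# Setup ("city-builder") phase: the team lays down the roads once.
# Hypothetical action names and risk levels.
POLICY: dict[str, Risk] = {
    "enrich_ip": Risk.LOW,
    "query_siem": Risk.LOW,
    "disable_user": Risk.HIGH,
    "isolate_host": Risk.HIGH,
}

def gate(proposal: Proposal, policy: dict[str, Risk] = POLICY) -> str:
    """Run ("RPG") phase: decide what happens to one agent proposal."""
    risk = policy.get(proposal.action)
    if risk is None:
        return "rejected"          # off the roads: not an approved action
    if risk is Risk.HIGH:
        return "pending_approval"  # a human must sign off first
    return "auto_execute"          # routine and pre-approved

proposals = [
    Proposal("enrich_ip", "203.0.113.7"),
    Proposal("isolate_host", "LAPTOP-42"),
    Proposal("delete_mailbox", "jdoe"),
]
for p in proposals:
    print(p.action, "->", gate(p))
```

The agent stays free to decide *which* approved actions to propose and in what order; the gate only constrains *whether* each one may run unattended.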

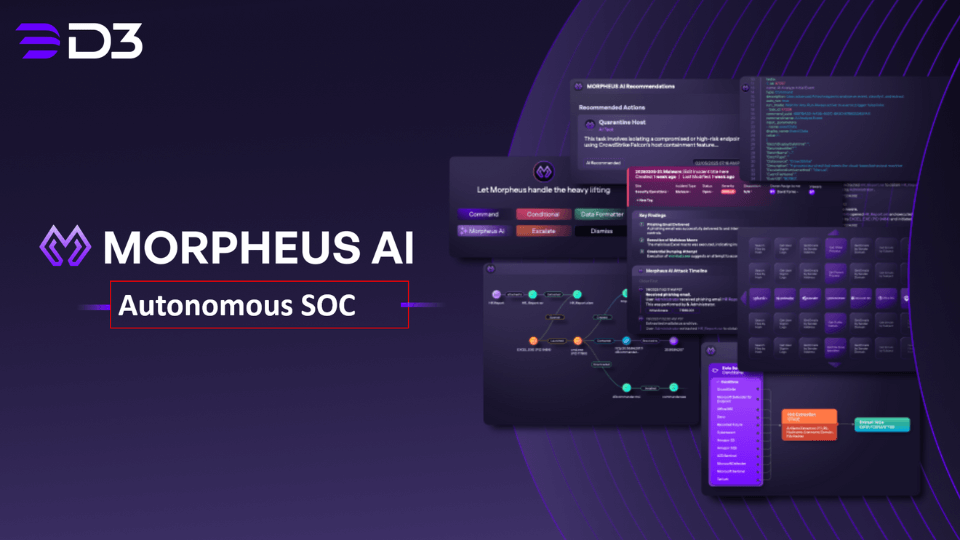

How Morpheus Closes the Gap

Morpheus AI immediately builds an adaptive investigation workflow from live data ingestion, mapping every field and correlation path. Each workflow is transparent: you see the YAML, every planned step, the unit and integration tests that validate it, and the GitHub pull request created before anything runs in production. Analysts can chat with the system to add steps (“send a Slack alert”), reorder tasks, or drill into timelines and attack graphs.
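Because a workflow defined as data can be checked mechanically, an edit such as adding a Slack step can be validated before anything merges. This sketch uses a hypothetical step format (not Morpheus's actual YAML schema) in which each step declares what it consumes and produces, and a `validate` function acts as a simple unit test that flags any step scheduled before its inputs exist.

```python
from dataclasses import dataclass, field

@dataclass
class Step:
    name: str
    needs: list[str] = field(default_factory=list)     # inputs the step consumes
    produces: list[str] = field(default_factory=list)  # outputs it adds

def validate(steps: list[Step], initial: set[str]) -> list[str]:
    """Flag every step that consumes data no earlier step has produced."""
    available = set(initial)
    problems = []
    for step in steps:
        missing = [n for n in step.needs if n not in available]
        if missing:
            problems.append(f"{step.name} needs {', '.join(missing)} before it is produced")
        available.update(step.produces)
    return problems

def add_step(steps: list[Step], new: Step, after: str) -> list[Step]:
    """Return a new workflow with `new` inserted right after the step named `after`."""
    idx = [s.name for s in steps].index(after)
    return steps[: idx + 1] + [new] + steps[idx + 1:]

workflow = [
    Step("extract_iocs", needs=["alert"], produces=["iocs"]),
    Step("enrich_iocs", needs=["iocs"], produces=["reputation"]),
    Step("score", needs=["reputation"], produces=["verdict"]),
]

# Analyst asks in chat to "send a Slack alert" once a verdict exists.
slack = Step("notify_slack", needs=["verdict"])
good = add_step(workflow, slack, after="score")
bad = add_step(workflow, slack, after="enrich_iocs")  # placed too early

print(validate(good, {"alert"}))
print(validate(bad, {"alert"}))
```

The same check catches a bad reorder: moving a step ahead of the step that produces its input makes `validate` return a non-empty list.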

Unlike black-box AI SOC solutions, Morpheus shows its reasoning, logs every action, and enforces approvals for sensitive moves, giving you autonomy with control. Horizontal IOC graphs and vertical event timelines surface hidden links, while new analyst dashboards, attack maps, and AI-summarized incident views give leaders a real-time view of posture.
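A horizontal IOC graph can be read as alerts connected whenever they share an indicator, so surfacing hidden links amounts to computing connected components. This sketch uses a union-find structure over made-up alert IDs and indicators; it shows the general technique and is not a description of how Morpheus builds its graphs.

```python
from collections import defaultdict

def correlate(alerts: dict[str, set[str]]) -> list[set[str]]:
    """Cluster alert IDs; two alerts are linked if they share any IOC."""
    parent = {a: a for a in alerts}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    first_seen: dict[str, str] = {}  # IOC -> first alert that mentioned it
    for alert_id, iocs in alerts.items():
        for ioc in iocs:
            if ioc in first_seen:
                union(first_seen[ioc], alert_id)
            else:
                first_seen[ioc] = alert_id

    clusters = defaultdict(set)
    for alert_id in alerts:
        clusters[find(alert_id)].add(alert_id)
    # Deterministic order: sort clusters by their smallest members.
    return sorted(clusters.values(), key=lambda c: sorted(c))

# Hypothetical alerts: A1 and A3 share nothing directly, but A2 bridges them.
alerts = {
    "A1": {"203.0.113.7", "evil.example"},
    "A2": {"evil.example", "hash:abc123"},
    "A3": {"hash:abc123"},
    "A4": {"198.51.100.9"},
}
print(correlate(alerts))
```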

The outcomes are measurable: Morpheus covers 100% of alerts, triages 95% of them in under two minutes, and executes investigations across 800+ maintained integrations spanning endpoint, identity, cloud, and ticketing systems.
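The figures above are D3's. To track comparable numbers on your own alert data, coverage and the under-two-minutes rate reduce to two ratios over all alerts. The `AlertRecord` shape, the 120-second threshold, and the sample values in this sketch are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class AlertRecord:
    alert_id: str
    triage_seconds: Optional[float]  # None means the alert was never triaged

def soc_metrics(records: list[AlertRecord], sla_seconds: float = 120.0) -> dict[str, float]:
    """Coverage and under-SLA triage rate, both as fractions of *all* alerts."""
    total = len(records)
    if total == 0:
        return {"coverage": 0.0, "within_sla": 0.0}
    triaged = [r.triage_seconds for r in records if r.triage_seconds is not None]
    within = sum(1 for t in triaged if t < sla_seconds)
    return {"coverage": len(triaged) / total, "within_sla": within / total}

# Hypothetical sample: one alert was never looked at, one took five minutes.
sample = [
    AlertRecord("A1", 45.0),
    AlertRecord("A2", 90.0),
    AlertRecord("A3", 110.0),
    AlertRecord("A4", 300.0),
    AlertRecord("A5", None),
]
print(soc_metrics(sample))
```

Dividing both counts by all alerts, not just triaged ones, keeps uninvestigated alerts visible in the second number, which is the forty-percent problem the article opens with.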

These are the levers that tame the “messy middle”: adaptive playbooks, unified correlation, and transparent governance, delivering speed at enterprise scale.

Morpheus: Get Autonomy With Control

The middle is where your SOC wins or falls behind. Opaque, black-box AI cannot be trusted there, and deterministic flows cannot keep up there. Morpheus meets the problem on its own ground with transparent autonomy, editability, and breadth, delivering:

- Balanced autonomy: Routine, high-volume work runs without human intervention. High-impact decisions pause and escalate.

- Flexible exploration: Analysts can pivot off AI findings in chat and ask new questions.

- Custom logic: Workflows adapt to your environment instead of forcing you into one path.

Book a demo and see the middle get clean, fast, and auditable.

The post The Messy Middle: Where SOC Automation Breaks (and How Morpheus AI Fixes It) appeared first on D3 Security.

*** This is a Security Bloggers Network syndicated blog from D3 Security authored by Shriram Sharma. Read the original post at: https://d3security.com/blog/soc-messy-middle-morpheus-ai/
