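Automation and AI are changing how Security Operations Centers (SOCs) work, but they depend on high-quality data. Modern sandboxing supports detection engineering, incident response, and threat intelligence by providing reliable behavioral evidence. The key is to choose a sandbox with evasion resistance, complete execution tracing, environment simulation, and high-fidelity output to improve decision accuracy. 2025-9-10 08:36:38 Author: www.vmray.com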

Automation and AI are reshaping how Security Operations Centers (SOCs) work. That’s a good thing, but only if the systems you automate and the models you train are fed high-quality, reliable data. When you hand decision-making to AI-assisted investigators or automated playbooks, you need the behavioral truth. You need facts, not assumptions; signals, not noise.

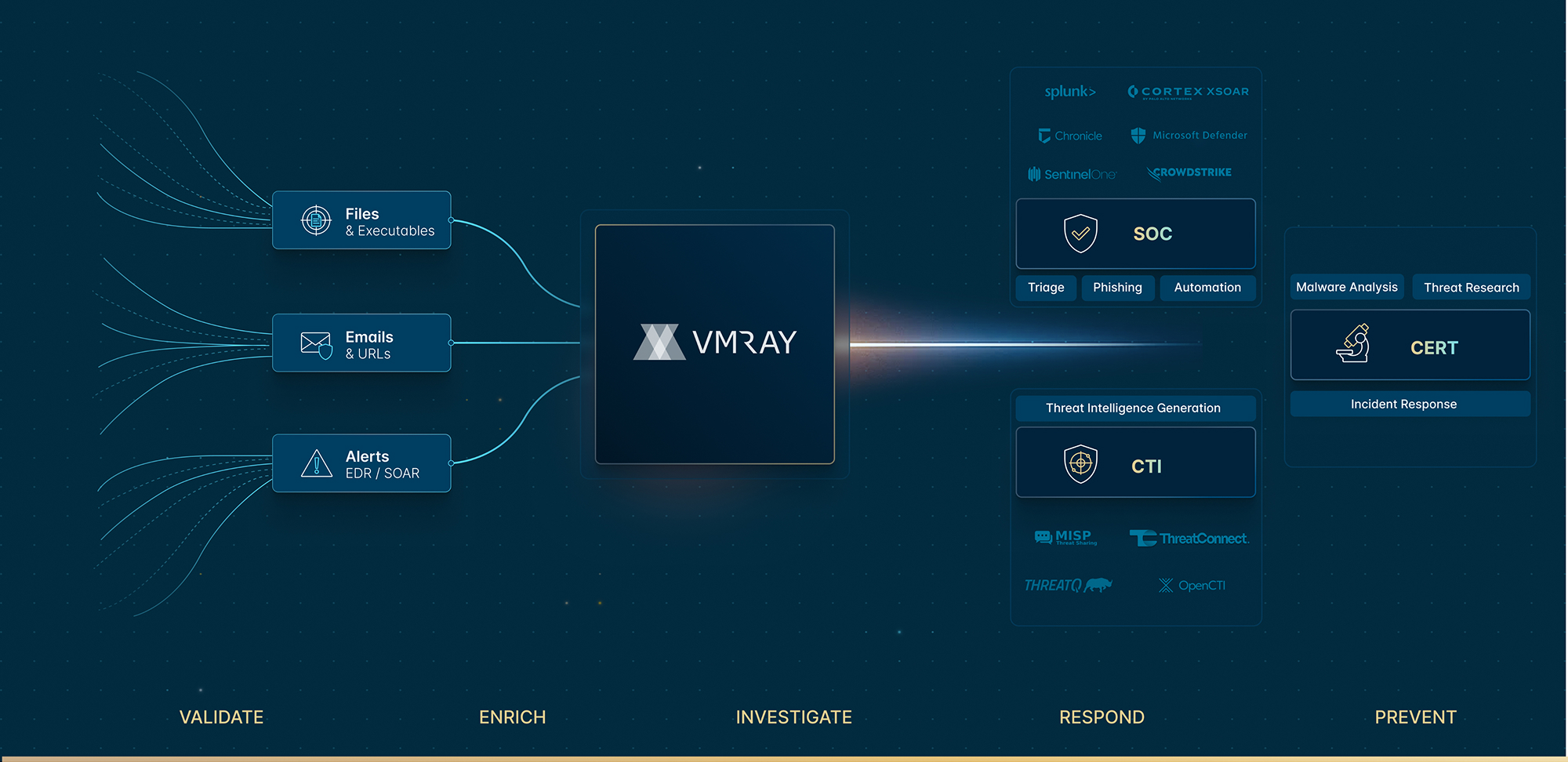

This is exactly where sandboxing still plays a decisive role. Far from being a dated technique, modern sandboxing provides the verifiable behavioral evidence that detection engineering, incident response, threat intelligence, and AI pipelines all depend on. This post will explain why sandboxing is more essential today than ever before and offer a practical checklist for evaluating a sandbox, so your automation and AI make the right decisions, quickly.

Why Sandboxing is Needed More Than Ever

1 – Your Automation and AI Are Only as Good as Their Inputs

Automation removes human error and speeds up response, but it also removes human judgment. That tradeoff works perfectly when the inputs are accurate and predictable. It becomes dangerous when the input is noisy, incomplete, or misleading.

AI models are equally vulnerable. They amplify whatever you feed them. High-fidelity telemetry improves precision, while inconsistent or incomplete data amplifies false positives, model drift, and poor decisions. If you expect your automated playbooks or AI agents to act autonomously, they must consume accurate, reproducible evidence of what happened.

2 – EDR Telemetry is Necessary, but Not Always Sufficient

Endpoint Detection and Response (EDR) agents provide excellent endpoint telemetry. They are built to detect, block, and record activity on live systems. However, because EDRs are optimized for endpoint performance and protection, they can miss behaviors that only appear under full execution or in multi-stage attack chains. EDRs may also suppress or aggregate traces to reduce noise—which is sensible for daily operations but not ideal when you need the full forensic picture for automation or model training.

Dynamic analysis in a dedicated sandbox fills those gaps. It safely executes suspicious samples, observes their end-to-end behavior, and produces structured artifacts that show what actually happened in a controlled environment.

3 – Attackers Use Evasion and Multi-Stage Chains, and You Need Full Execution Visibility

Today’s threats often involve multi-stage delivery (documents downloading loaders, loaders downloading payloads), complex redirections, and explicit anti-analysis checks. These behaviors can be invisible to static scanners or truncated by simple sandboxes. A sandbox that follows the entire chain, simulates realistic user interaction, and resists anti-analysis techniques surfaces the true end state of an attack—the part your automation and AI must act upon.

4 – Behavioral Outputs Empower Detection Engineering and Threat Intelligence

A strong sandbox does more than just flag something as malicious. It extracts malware configuration, command-and-control (C2) endpoints and communication patterns, persistence mechanisms, and behavioral indicators mapped to frameworks like MITRE ATT&CK.

These outputs allow threat hunters to pivot quickly, enable detection engineers to build robust rules, and provide threat intelligence teams with reliable IOCs and campaign context—the exact artifacts you want feeding your automation and AI.

What to Look for in a Sandbox

When you’re choosing or validating a sandbox for modern security operations, you need to evaluate it against concrete criteria. These are the attributes that determine whether the sandbox will produce facts your automation and AI can safely consume.

- Evasion Resistance: The analysis must remain invisible to the malware. If the sample detects the analysis environment and suppresses its malicious behavior, the result is meaningless. To avoid tipping off advanced samples, you need an evasion-resistant architecture, such as one that is hypervisor-based.

- Complete Execution Tracing: The sandbox must follow the entire delivery chain—including staged downloads, decompression, dynamic loader behavior, and any in-memory execution techniques. If a sandbox stops at the first step, it will miss the final payloads and the actual malicious intent.

- Realistic Interaction & Environment Simulation: Some attacks only trigger when they detect user interaction or specific environment signals. The sandbox should simulate user input and common environmental conditions so the sample behaves as it would in the wild.

- High-Fidelity, Structured Outputs: Raw artifact dumps aren’t enough. Look for platforms that provide structured, prioritized reports with clear verdicts, IOCs, behavior timelines, ATT&CK mappings, and machine-readable exports like JSON or STIX. This is the precise, consistent, and easily parsed data your automation and AI will ingest.

- Noise Reduction & Clarity: Sandboxes that return every benign artifact create more work than they solve. Look for a clarity layer that groups, prioritizes, and filters artifacts so your analysts and automated systems get what matters—not a flood of irrelevant data.

- API-First & Integration Readiness: Your sandbox should be designed to plug seamlessly into your SIEM, SOAR, EDR, and other orchestration layers. An API-first approach with connectors and clear documentation lets you automate submission, enrichment, and response without manual steps.

- Speed and Scalability: Analysis latency is a critical factor. A sandbox that takes hours to return a verdict won’t support fast triage or real-time automation. Verify its throughput and how the platform scales under peak load.

- Config Extraction & Infrastructure Indicators: Effective defenders want more than just a “malicious” or “benign” flag. They want configuration strings, domain lists, and other pivot points. The accurate extraction of C2, domains, and credentials is essential for threat intelligence and long-tail campaign tracking.

- Privacy, Data Residency, and Governance: If you’re feeding samples into a third-party platform, you must understand its data retention, sharing policies, and deployment options. Enterprise teams often require on-premise or region-specific cloud deployments to meet compliance and confidentiality requirements.

- Transparent Detection Logic & Testability: Insist on platforms that explain why they produced a verdict. Black-box outputs undermine automation. The ability to run known evasion tests, see the triggers, and understand the scoring improves trust and operational adoption.
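To make the "structured outputs" and "noise reduction" criteria concrete, here is a minimal Python sketch of how a playbook might turn a machine-readable report into an enrichment record. The report layout, the field names (`verdict`, `score`, `iocs`, `severity`, `attack_techniques`), and the sample values are all assumptions for illustration; a real sandbox export will have its own schema.

```python
import json
from dataclasses import dataclass, field

# Hypothetical report layout; real sandbox exports use their own field names.
SAMPLE_REPORT = """
{
  "verdict": "malicious",
  "score": 92,
  "iocs": [
    {"type": "domain", "value": "c2.example.net", "severity": "high"},
    {"type": "ip", "value": "203.0.113.7", "severity": "high"},
    {"type": "domain", "value": "update.example.com", "severity": "benign"}
  ],
  "attack_techniques": ["T1059", "T1547"]
}
"""


@dataclass
class Enrichment:
    verdict: str
    score: int
    indicators: list = field(default_factory=list)
    techniques: list = field(default_factory=list)


def parse_report(raw: str) -> Enrichment:
    """Parse a JSON report and keep only indicators not marked benign."""
    data = json.loads(raw)
    indicators = [
        (ioc["type"], ioc["value"])
        for ioc in data.get("iocs", [])
        if ioc.get("severity") != "benign"  # assumed noise-filter field
    ]
    return Enrichment(
        verdict=data["verdict"],
        score=int(data["score"]),
        indicators=indicators,
        techniques=list(data.get("attack_techniques", [])),
    )


enrichment = parse_report(SAMPLE_REPORT)
print(enrichment)
```

The point of the sketch is that a consistent, documented schema lets this parsing step stay a few lines long. If the report format changes between samples, that complexity moves into every downstream playbook.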

How These Criteria Translate into Better Automation and AI

When your playbooks and models consume sandbox outputs that meet the checklist above, three things happen:

- Automation is safer. Actions (block, quarantine, enrich) can be based on deterministic signals rather than heuristics, which reduces accidental disruption and increases automation coverage.

- AI becomes reliable. Models trained or fed with clear behavioral facts are less prone to hallucination and drift. They produce higher-precision alerts and more useful information for analysts.

- Teams scale confidently. Junior analysts can accelerate to advanced tasks when reports are readable and prioritized, and senior teams can refocus their efforts on threat hunting and threat modeling instead of basic triage.
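As an illustration of basing actions on explicit signals, a playbook's decision step could look like the Python sketch below. The verdict labels, the 0-100 score scale, the `analysis_complete` flag, the threshold of 90, and the action names are hypothetical and would need to match whatever your sandbox and SOAR platform actually expose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SandboxResult:
    verdict: str             # assumed labels: "malicious", "suspicious", "clean"
    score: int               # assumed scale: 0-100
    analysis_complete: bool  # False if the run was truncated or evasion was suspected


def choose_action(result: SandboxResult, block_threshold: int = 90) -> str:
    """Map a sandbox result to a playbook action (action names are illustrative)."""
    # An incomplete analysis is not evidence of anything; keep a human in the loop.
    if not result.analysis_complete:
        return "escalate_to_analyst"
    if result.verdict == "malicious" and result.score >= block_threshold:
        return "quarantine"
    if result.verdict == "clean":
        return "close"
    # Everything in between is enriched and reviewed rather than blocked outright.
    return "enrich_and_review"


print(choose_action(SandboxResult("malicious", 97, True)))
print(choose_action(SandboxResult("malicious", 97, False)))
```

Treating an incomplete analysis as its own branch is a deliberate choice here: a "clean" verdict from a run the sample evaded should not close an alert automatically.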

How to Validate a Sandbox Before You Rely on It

A short validation plan gives you confidence in a sandbox before you feed its output into your automated systems:

- Evasion Tests: Run known anti-sandbox samples and compare their behavior in the sandbox with what you observe in a hardened lab environment. Verify whether the sample changes its behavior under analysis.

- Chain Completeness Test: Submit multi-stage artifacts (like a document that downloads a downloader) and confirm the sandbox follows the entire chain to its completion.

- Report Audit: Review a variety of sample reports for clarity, IOC quality, and consistency. Do the exports map cleanly to your SIEM or SOAR schemas?

- Integration Dry Run: Use the API to automate submission and ingestion. Measure the end-to-end latency and validate the enrichment fields.

- Privacy Check: Confirm that data retention policies, data sharing, and regional deployment options meet your governance requirements.

- Operational Pilot: Run a short pilot with a human-in-the-loop on real alerts and measure the mean time to detect and respond, the analyst time saved, and automation safety (tracked as the number of false positives avoided).
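The integration dry run in the list above can be scripted in a few lines. The Python sketch below times each submission end to end and checks that the fields your enrichment depends on are present. The `fake_submit_and_wait` function is a stand-in for a real API client, and the required field names are assumptions; swap in your vendor's client and your own schema.

```python
import time
from statistics import mean

REQUIRED_FIELDS = {"verdict", "score", "iocs"}  # assumed enrichment schema


def fake_submit_and_wait(sample_name: str) -> dict:
    """Stand-in for a real API client: submit a sample and block until the report is ready."""
    time.sleep(0.01)  # simulated analysis time
    return {"verdict": "malicious", "score": 95, "iocs": []}


def dry_run(samples, submit=fake_submit_and_wait):
    """Measure end-to-end latency per sample and list any missing report fields."""
    results = []
    for name in samples:
        start = time.perf_counter()
        report = submit(name)
        latency = time.perf_counter() - start
        missing = sorted(REQUIRED_FIELDS - set(report))
        results.append(
            {"sample": name, "latency_s": latency, "missing_fields": missing}
        )
    return results


results = dry_run(["sample-a", "sample-b"])
print("mean latency (s):", mean(r["latency_s"] for r in results))
print("reports with missing fields:", [r["sample"] for r in results if r["missing_fields"]])
```

Running the same script against a representative sample set at peak and off-peak times gives a rough view of the latency and scalability criteria as well.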

The Path Forward

You don’t need more buzzwords or flashy demos. You need reliable behavioral truth: trustworthy analysis, clear artifacts, and machine-ready outputs that automation and AI can act upon without second-guessing. The right sandbox is the difference between accelerating your security operations and automating your mistakes.

If you’re building or scaling AI and automation in your SOC, make sandbox quality a key criterion. Insist on evasion resistance, full chain visibility, clear reporting, and robust integration capabilities. These attributes are the foundation of safer, more effective automation and higher-value AI.

If you’d like to evaluate a sandbox against these criteria, you can explore a platform that was built from day one for evasion resistance, end-to-end execution tracing, and noise-free reporting.
