read file error: read notes: is a directory 2025-8-25 09:2:40 Author: payatu.com(查看原文) 阅读量:20 收藏

Introduction

AI is revolutionising healthcare. From diagnostics to patient interaction, Large Language Models (LLMs) are helping MedTech companies enhance outcomes and efficiency. But with this innovation comes a host of hidden risks, ones that can jeopardise patient safety, data privacy, and regulatory compliance. In this article, we explore how a security assessment of an AI-powered MedTech application revealed critical vulnerabilities that spotlight systemic risks across the healthcare AI landscape.

The Growing AI Security Challenge in Healthcare

Why Healthcare AI is a Prime Target

LLMs are increasingly being integrated into chat-based healthcare tools. These tools can explain symptoms, guide post-op care, and even suggest follow-up actions. But while they offer tremendous promise, they’re also vulnerable to adversarial prompts, misinformation, and exploitation by malicious actors.

A seemingly benign question can be manipulated to bypass safeguards or elicit unauthorised advice. Worse, LLMs can “hallucinate”, fabricating confident but incorrect information, potentially leading to dangerous recommendations in a high-stakes domain like medicine.

The Unique Risks of Medical LLMs

Healthcare systems handle some of the most sensitive data. When AI gets it wrong, or worse, is manipulated into giving dangerous advice, the consequences aren’t just technical. They’re human, legal, and reputational.

In addition to adversarial inputs, new risks are emerging in the form of training data extraction and data leakage via Retrieval-Augmented Generation (RAG) chatbots. These allow attackers to reverse-engineer model behavior or access embedded private content, all without breaching infrastructure directly.

An error in a recommendation could result in real-world harm: wrong treatment, missed diagnosis, or inappropriate medication suggestions. For MedTech companies, this introduces massive regulatory and ethical challenges, especially in geographies where AI in healthcare is governed by frameworks like HIPAA and the FDA’s Software as a Medical Device (SaMD) guidelines.

How did Payatu Identify Vulnerabilities in the MedTech Application?

Payatu conducted a deep-dive security assessment of a chat-based MedTech application that integrated Large Language Models (LLMs) and was deployed via an iOS mobile app. The goal was to uncover vulnerabilities that could impact patient safety, data confidentiality, regulatory compliance, and platform trust

1. Identified the LLM Model and Architecture

- Determined that the app used an LLM like GPT to generate patient-facing responses.

- Mapped out how the model was integrated with the front-end application and backend APIs.

2. Analysed Access Pathways

- Assessed how the LLM was exposed via chat interfaces and APIs.

- Verified whether authentication layers (e.g., token access) were consistently applied.

3. Tested for Prompt Injection and Jailbreaks

- Crafted inputs that bypassed safety filters and caused the model to produce unintended responses.

- Successfully triggered jailbreaks that made the LLM ignore medical safety constraints, including restrictions around providing medical advice.

4. Checked for Training Data Leakage and Context Extraction

- Executed extraction attacks on the RAG-integrated chatbot.

- Crafted prompts to retrieve embedded source content such as clinical policies, past queries, or summaries of internal datasets.

- Verified how user-submitted content (like uploaded PDFs) could be indexed into memory and later leaked through injection triggers.

5. Checked for Harmful and Biased Outputs

- Induced the model to generate responses that could perpetuate misinformation or unverified treatment recommendations.

- Verified whether content moderation mechanisms could be bypassed using alternate phrasing or characters.

6. Logged All Vulnerabilities with Business Impact

- Documented real-world risks like FDA non-compliance, reputational harm from biased outputs, and the legal exposure of leaking patient-related content.

iOS Mobile App Security Assessment

- Reverse Engineering the iOS Binary: Decompiled the application to study how it interacted with backend health services and AI infrastructure. Found plist files containing configuration data referencing third-party SDKs (e.g., for analytics and push notifications) that lacked proper sandboxing.

- Impact: In a MedTech context, improperly isolated SDKs could potentially access or log sensitive health queries, violating HIPAA/GDPR guidelines.

- Local Data Storage Inspection: Inspected the app’s local storage mechanisms (e.g., SQLite, CoreData). Discovered that cached chat histories and AI-generated responses, including symptom descriptions and medication references, were stored unencrypted.

- Impact: On shared or stolen devices (e.g., in a hospital or family setting), this data could be extracted without user/patient consent, leading to privacy violations and reputational harm. No data obfuscation or session cleanup occurred when the app was minimised or backgrounded.

- Impact: If a clinician or caregiver minimised the app mid-consultation, a bystander could resume access and view sensitive content.

- Runtime and Network Behaviour Testing: Simulated network behaviour during AI-patient chat interactions. Found that SSL pinning was not enforced, allowing attackers on the same network (e.g., hospital Wi-Fi) to intercept sensitive medical dialogue. Also detected external markdown image links being rendered within chat responses.

- Impact: Exposed patient IP addresses, device metadata, and potentially private content to third-party servers – a serious privacy and compliance concern.

- Binary Hardening and Tampering Resistance: Verified that jailbreak detection was not implemented, allowing the app to run on compromised devices. Also found no Frida or runtime debugger detection.

- Impact: Attackers could manipulate API behaviour, extract memory, or intercept and spoof AI prompts, leading to altered health information or impersonated care guidance.

- Session Handling and Token Expiry: Found that session tokens remained valid even after logout and could be reused.

- Impact: This flaw allowed unauthorised access to patient chat histories if the device was lost, shared, or compromised, increasing the risk of data leakage and compliance violations.

Key Findings – Implications for the MedTech Application

A comprehensive security assessment of an AI-powered MedTech application revealed several critical vulnerabilities that highlight systemic risks across the healthcare AI landscape:

- Prompt Injection: Inadequate input sanitisation lets malicious prompts bypass constraints, causing the LLM to respond in unintended and potentially dangerous ways. In some cases, poisoning the vector database allows these injections to persist and re-trigger.

- Training Data and Context Extraction: Attackers used crafted inputs to extract summaries of internal datasets, content snippets, or historical query logs via RAG-based prompts.

- Bias and Misinformation: Testing uncovered biased or factually incorrect responses, a result of insufficient model tuning and lack of real-time fact-checking.

- Insecure Input Handling: User inputs were not properly sanitised, leaving the system open to common exploits like cross-site scripting (XSS) or command injection.

- Weak Mobile App Defences: Mobile interfaces lacked SSL pinning, session expiration controls, and jailbreak detection, exposing APIs to interception and reverse engineering.

Note: System prompt leakage, while observed, is no longer considered a critical vulnerability in isolation, many AI platforms open-source their system prompts. However, it does slightly increase the risk of crafting tailored injection attacks.

But what does any of this mean for the patients?

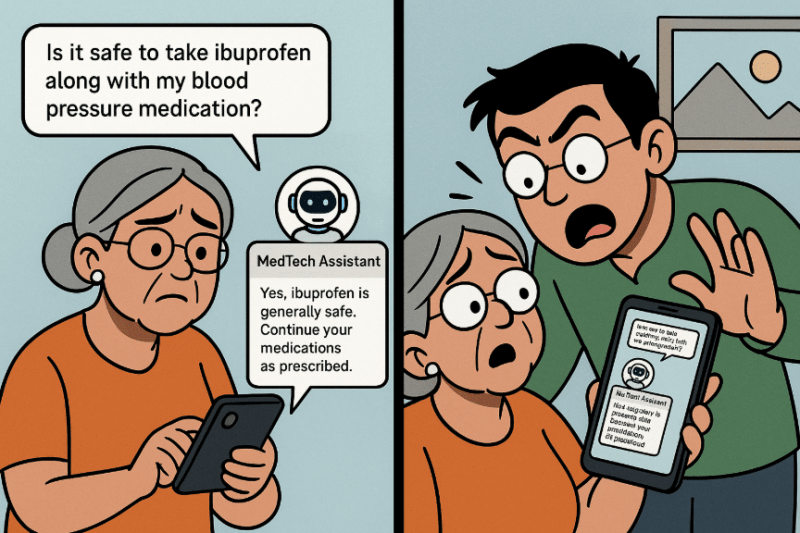

A Story from the Other Side of the Screen: How Security Gaps Could Impact a Real Patient

Meet Jane, a 58-year-old woman recovering from knee replacement surgery. Her hospital has partnered with a leading MedTech company that offers a chat-based iOS application to guide patients through post-operative care. Jane downloads the app, logs in, and begins interacting with the AI-powered assistant for advice on pain management, mobility exercises, and medication schedules.

Everything seems seamless until it isn’t.

Day 1: The Advice That Shouldn’t Have Happened

While chatting with the assistant, Jane types:

“Is it safe to take ibuprofen along with my blood pressure medication?”

The AI responds with:

“Yes, ibuprofen is generally safe. Continue your medications as prescribed.”

What Jane doesn’t know is that a poisoned vector database used by the AI’s retrieval-augmented generation (RAG) system, contained a malicious injection string. This string had been previously uploaded through a seemingly harmless PDF by an attacker. When Jane asked her question, it matched a trigger phrase that recalled the poisoned entry.

The AI, which was originally programmed to avoid giving direct medical advice, now responded with unwarranted confidence, bypassing its safety protocols.

Jane trusts the response. Later that night, she experiences dizziness and gastrointestinal bleeding and is rushed to the ER. The doctors trace it back to a drug interaction. The app’s guidance became a liability, not because of a one-time bug, but because malicious content was allowed into its memory.

This isn’t a sci-fi scenario; it’s a real consequence of unvalidated content in LLM pipelines and the growing attack surface of AI-enabled healthcare tools.

Day 3: The Uninvited Observer

Jane’s son borrows her phone to make a call. When he switches apps, he accidentally reopens the MedTech assistant. To his surprise, the entire chat history is still visible, detailing her condition, medications, and even emotional frustrations she had typed into the app.

Why? Because the app didn’t clear or encrypt sensitive content when backgrounded or closed.

For Jane, this feels like a violation of privacy. For the company, it’s a compliance breach waiting to be reported.

Day 7: A Leak Without Her Knowledge

In the backend, security researchers discovered that markdown image links, embedded within AI chat replies, were triggering calls to external servers. In one case, a chat response included a “helpful” link to a physiotherapy diagram hosted externally.

Unbeknownst to Jane, clicking the image silently transmitted her IP address, device type, and timestamp to a server operated by a third party with no data-sharing agreement in place.

The next week, she begins receiving targeted health-related ads. Jane is confused, unsettled, and now sceptical about using any digital health platform again.

What begins as a modern, AI-powered convenience quickly unravels into a scenario where:

- Misleading medical guidance leads to hospitalisation

- Unsecured app design exposes sensitive patient data

- External content leaks metadata that invades privacy

- Poor session handling enables unauthorised access

All of this occurs silently — until it’s too late.

Meanwhile, Behind the Scenes…

A malicious actor, using a jailbroken iPhone and a proxy tool, has been reverse engineering the MedTech app to analyse how it communicates with the backend LLM. The attacker discovers hardcoded API keys and session tokens that remain valid even after logout.

With this access, they extract anonymised but still identifiable user queries for behavioural research, which is later sold on a private forum. Jane’s case becomes part of a dataset she never agreed to share.

Building a Proactive Security Playbook

- Use AI guardrails like NeMo Guardrails, langkit, or LLM-Guard to detect and prevent prompt injection or jailbreaks

- Sanitise and validate user inputs across all interfaces (mobile, web, APIs)

- Keep sensitive prompt engineering logic on the server, not exposed to clients

- Enable SSL pinning and detect jailbroken or rooted devices

- Implement proper session management and runtime security controls

- Adopt red teaming and adversarial testing for LLM-based applications

- Create incident response playbooks tailored to AI misuse and hallucinations

Security isn’t a one-time checklist. It’s a continuous process that must evolve alongside the model. That includes output monitoring, abuse detection, and regular model retraining.

Insights from the Field: Mitigating Real-World Business Risks

Based on assessments conducted across multiple healthtech organisations, Payatu has documented common patterns that have emerged, not just in the types of vulnerabilities discovered, but also in the potential business consequences they pose. Beyond identifying technical flaws, Payatu has consistently helped organisations understand and mitigate broader business risks. Here are some of the most pressing business impacts that healthtech organisations have been able to preempt with the right security interventions:

- Regulatory Compliance Failures: Inaccurate AI-generated content and unfiltered recommendations can violate health regulations such as FDA guidelines, resulting in hefty penalties or product recalls.

- Loss of Customer Trust: AI-generated misinformation or security breaches involving patient data can quickly erode trust, reducing user engagement and damaging brand reputation.

- Legal Liability and Litigation: Jailbroken models or exposed system prompts can lead to legal action if the AI output causes harm or breaches data privacy laws.

- Competitive Disadvantage: Leaked system prompts and intellectual property not only compromise security but may also give competitors insight into proprietary logic or patient interaction flows.

- Operational Disruption: Security flaws such as improper session management or lack of SSL pinning expose applications to attacks that may temporarily disable services or lead to extended downtime.

- Financial Losses and Data Breaches: Unauthorised access to user accounts, especially via mobile application vulnerabilities, can result in data exfiltration, phishing, or financial fraud, directly impacting revenue and compliance standing.

By coupling in-depth technical assessments with strategic guidance, Payatu ensures that healthcare organizations aren’t just compliant, they’re resilient.

Toward Secure and Ethical AI in MedTech

The risks we’ve explored so far underscore a broader truth: as AI capabilities in healthcare grow more advanced, so too does the need for mature, forward-looking security practices. Organisations can no longer rely on ad hoc fixes or assume traditional app security will be enough to protect intelligent systems that shape patient outcomes.

What’s needed is a mindset shift from seeing AI as a tech layer to recognising it as a trust layer. The following section offers perspective on how security and ethics must evolve together to keep AI systems not only functional, but fundamentally safe. AI is here to stay in healthcare. But security needs to catch up. The intersection of AI, mobile apps, and patient data demands proactive testing and thoughtful implementation.

Organisations must go beyond performance to prioritise safety, privacy, and trust. As AI tools increasingly support or influence medical decisions, their behaviour must be predictable, controllable, and fully auditable.

Closing Thoughts

The healthcare AI revolution demands a security-first approach. Organisations should begin by:

- Conducting AI security assessments of existing and planned LLM implementations

- Implementing comprehensive testing protocols, including adversarial prompt testing

- Developing incident response plans specifically tailored to AI-related security events

- Establishing continuous monitoring for AI behaviour anomalies and prompt injection attempts

To efficiently implement these improvements, a phased approach focusing on critical fixes first is recommended. By proactively identifying and mitigating risks, MedTech companies can build systems that not only innovate but do so safely and responsibly.

如有侵权请联系:admin#unsafe.sh