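This article discusses misconceptions about, and the limitations of, end-to-end encryption (E2EE). Using the incident in which the Trump administration mistakenly added a journalist to a Signal group chat, it points out that E2EE cannot prevent human error or identity-verification problems. E2EE protects messages from being intercepted by intermediaries, but it cannot guarantee that the people you are talking to are trustworthy. The article also notes that E2EE is unsuitable for military use, and argues that encryption should put privacy first and that backdoors should be opposed.

2025-3-25 09:21:32 Author: soatok.blog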

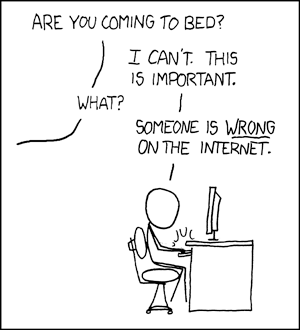

Internet discussions about end-to-end encryption are plagued by misunderstandings, misinformation, and some people totally missing the point.

Of course, people being wrong on the Internet isn’t exactly news.

“What do you want me to do? LEAVE? Then they’ll keep being wrong!”

Yesterday, a story in The Atlantic alleged that the Trump Administration had accidentally added its editor-in-chief, Jeffrey Goldberg, to a Signal group chat discussing a military action in Yemen.

This was quickly confirmed to be authentic.

Brian Hughes, the spokesman for the National Security Council, responded two hours later, confirming the veracity of the Signal group. “This appears to be an authentic message chain, and we are reviewing how an inadvertent number was added to the chain,” Hughes wrote.

The Trump Administration Accidentally Texted Me Its War Plans — The Atlantic

In the aftermath of this glorious fuck-up by the Trump administration, I have observed many poorly informed hot takes. Some of these were funny, but others are dangerous: they were trying to promote technologies that claim to be Signal alternatives, as if this whole story was somehow a failure of Signal’s security posture.

Not to put too fine a point on it: Switching to Threema or PGP would not have made a lick of difference. Switching to Matrix would only have helped if you count “unable to decrypt message” as helping.

To understand why, you need a clear understanding of what end-to-end encryption is, what it does, what it protects against, and what it doesn’t protect against.

Towards A Clear Understanding

Imagine for a moment that you decided to build a dedicated, invite-only app that enables you to chat with your friends. For the sake of argument, let’s assume that your friends use multiple devices (computers, smartphones, tablets, one uses a watch, etc.). Despite this, they aren’t always online.

The simplest way to implement such an app requires an architecture that looks like this:

- You have an app (or a website) that your friends use to chat with each other.
  - If it’s a website, it lives in a browser window.
  - If it’s an app, it’s installed on their devices.

- You have some sort of channel for passing messages between users.
  - This is often a server that the apps or websites connect to, but you can also set up some sort of peer-to-peer infrastructure.
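To make that architecture a little more concrete, here’s a minimal Python sketch of the “channel” as a store-and-forward relay, assuming a central server rather than peer-to-peer. The class and method names (`Relay`, `register`, `send`, `fetch`) are hypothetical and not taken from any real app. Each device gets its own mailbox, which is how a friend with a phone and a watch who isn’t always online still receives every message.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Relay:
    """Hypothetical store-and-forward channel between chat apps."""

    # user name -> that user's device IDs (phone, laptop, watch, ...)
    devices: dict[str, list[str]] = field(default_factory=dict)
    # device ID -> (sender, payload) pairs waiting to be fetched
    mailboxes: dict[str, list[tuple[str, bytes]]] = field(
        default_factory=lambda: defaultdict(list)
    )

    def register(self, user: str, device_id: str) -> None:
        self.devices.setdefault(user, []).append(device_id)

    def send(self, sender: str, recipients: list[str], payload: bytes) -> None:
        # Queue one copy per recipient device, so offline devices catch up later.
        for user in recipients:
            for device_id in self.devices.get(user, []):
                self.mailboxes[device_id].append((sender, payload))

    def fetch(self, device_id: str) -> list[tuple[str, bytes]]:
        # Called when a device comes online: hand over and clear its queue.
        return self.mailboxes.pop(device_id, [])


relay = Relay()
relay.register("alice", "alice-laptop")
relay.register("bob", "bob-phone")
relay.register("bob", "bob-watch")

relay.send("alice", ["bob"], b"hello")  # bob's devices may be offline right now
print(relay.fetch("bob-watch"))  # [('alice', b'hello')]
print(relay.fetch("bob-watch"))  # []
```

Notice that the relay treats `payload` as opaque bytes. It never needs to understand what it’s delivering, which is exactly what makes it possible to layer end-to-end encryption on top.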

Any additional requirements only add to the complexity. For example, a consistent message history across devices without storing the message history server-side is actually doable, but requires a bit of careful planning.

What’s End-to-End Encryption?

If you were to take this abstract description of a chat app and add end-to-end encryption to it, what you end up doing is encrypting messages with the app such that only your friends can decrypt them, using the app on their own devices.

Thus, the “ends” are the software running on each device (also called “endpoints”).

This is in contrast with transport encryption (which protects messages between your app and the channel, and also between the channel and your friends’ apps, but not from the channel itself) and at-rest encryption (which protects messages on your device when you’re not using it).
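One way to keep these straight is to list which hops along a message’s path can read the message body. The sketch below is a deliberately simplified model (a single server, no peer-to-peer, hypothetical hop names); it leaves out at-rest encryption because that concerns storage on a device rather than a message in transit.

```python
# Hops a message crosses, in order, in a simple client-server chat app.
HOPS = (
    "sender app",
    "network (sender to server)",
    "server",
    "network (server to recipient)",
    "recipient app",
)

# Hops that can read the message body under each scheme.
READERS = {
    "no encryption": set(HOPS),
    # Transport encryption protects each network leg, but the server
    # terminates it and sees plaintext.
    "transport only": {"sender app", "server", "recipient app"},
    # End-to-end: only the endpoints hold the keys.
    "end-to-end": {"sender app", "recipient app"},
}


def who_can_read(scheme: str) -> list[str]:
    """Return the hops that can read the message body, in path order."""
    return [hop for hop in HOPS if hop in READERS[scheme]]


for scheme in READERS:
    print(f"{scheme}: {who_can_read(scheme)}")
```

The only difference between the last two rows is the server, and that difference is the entire point of end-to-end encryption.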

How Does End-to-End Encryption Improve Security?

End-to-end encryption (E2EE for short) aims to ensure only the participants added to a conversation can read its contents.

The channel that delivers encrypted messages shouldn’t be able to read them.

Other Internet-enabled devices that can observe your network traffic to/from the chat app shouldn’t be able to read them.

What Doesn’t E2EE Give Us?

For one, just because you’re having a private conversation doesn’t mean you’re having a trustworthy conversation. An encrypted chat with a scammer will not save you from being scammed.

And as we saw with yesterday’s news story, E2EE also doesn’t prevent you from accidentally adding a magazine editor to a group chat.
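Here’s a hypothetical illustration of that point. To stay within Python’s standard library, it uses a toy authenticated cipher (HMAC-SHA256 as a counter-mode keystream, then encrypt-then-MAC). That’s a stand-in for a vetted AEAD, not something to deploy. It also skips key exchange entirely and just hands a shared group key to everyone on the roster, which is far simpler than what real messengers do. The relay only ever stores ciphertext and can’t read it, yet the member added by mistake decrypts just fine, because as far as the cryptography is concerned, they’re a legitimate participant.

```python
import hashlib
import hmac
import secrets


# Toy authenticated encryption using only the standard library. NOT for real
# use: HMAC-SHA256 as a counter-mode keystream, then encrypt-then-MAC.
def _subkeys(key: bytes) -> tuple[bytes, bytes]:
    enc_key = hmac.new(key, b"encrypt", hashlib.sha256).digest()
    mac_key = hmac.new(key, b"authenticate", hashlib.sha256).digest()
    return enc_key, mac_key


def _xor_keystream(enc_key: bytes, nonce: bytes, data: bytes) -> bytes:
    stream = b"".join(
        hmac.new(enc_key, nonce + i.to_bytes(8, "big"), hashlib.sha256).digest()
        for i in range((len(data) + 31) // 32)
    )
    return bytes(d ^ s for d, s in zip(data, stream))


def seal(key: bytes, plaintext: bytes) -> bytes:
    enc_key, mac_key = _subkeys(key)
    nonce = secrets.token_bytes(16)
    ciphertext = _xor_keystream(enc_key, nonce, plaintext)
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def open_sealed(key: bytes, blob: bytes) -> bytes:
    enc_key, mac_key = _subkeys(key)
    nonce, ciphertext, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed")
    return _xor_keystream(enc_key, nonce, ciphertext)


# Hypothetical group: the roster is the only thing deciding who gets the key.
roster = ["official-1", "official-2", "wrong-number"]  # last one added by mistake
group_key = secrets.token_bytes(32)
device_keys = {member: group_key for member in roster}

# What the relay stores and forwards: ciphertext only.
relayed = seal(group_key, b"see you at noon")

# The relay has no key; guessing one just fails authentication.
try:
    open_sealed(secrets.token_bytes(32), relayed)
except ValueError as err:
    print("relay:", err)

# Every roster member decrypts, including the one who shouldn't be there.
for member in roster:
    print(member, "->", open_sealed(device_keys[member], relayed))
```

The encryption did its job perfectly. The failure happened when someone edited the roster.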

On Security Clearances and SCIFs

I must be cautious with my wording here, as I have never held a security clearance in my life (and I’ve never aspired to hold one).

Even if a smartphone app was developed tomorrow that successfully boasted better cryptographic security than Signal, it still wouldn’t be an appropriate tool for classified communications for military operations. In fact, I do not foresee any smartphone app ever being approved for this purpose.

Broadly speaking, when politicians describe Signal as a “non-secure” channel, they’re not talking about its cryptographic security at all. Instead, they’re remarking that Signal has not been approved by the US government for this sort of purpose.

Additionally, Signal’s “disappearing messages” feature is fundamentally incompatible with the requirements of governments to preserve documents (even if they’re highly classified).

When government and military officials want to discuss operations, they’re typically required to go into a SCIF (Sensitive Compartmented Information Facility), which ensures:

- That they are not being wiretapped. (To this end, mobile phones are not permitted in a SCIF.)

- That they can only access information they need access to (thus, compartmented).

- That they are discussing information only with other, known individuals with the relevant security clearances.

There’s almost certainly more to SCIFs than just what I listed, but as I said previously, I don’t have any firsthand experience with them. (If that’s what you’re looking for, ask one of the many security industry personalities on social media that advertise prior military experience.)

Point being: SCIFs are the right tool for the job. Smartphone apps like Signal are not.

It is not a failure of Signal (or any other E2EE technology) to not be suitable for military operations.

Quick Recap

End-to-end encryption provides confidentiality and integrity of messages between endpoints in a network.

When implemented correctly, E2EE prevents server software from reading the contents of messages or tampering with them.

E2EE doesn’t protect messages after they’re delivered.

E2EE doesn’t magically make your conversations trustworthy. You could have a totally encrypted, private conversation with the editor of The Atlantic.

E2EE isn’t sufficient for military use, especially when implemented as a smartphone app.

Why Do We Even Encrypt?

Bad takes aside, one area of confusion that occurs frequently when discussing encryption technology is the motive for using it to begin with.

This might sound silly, but there are actually at least three different answers here.

- Privacy. This is probably the most obvious one, especially for the sort of people that read my blog.

- Access controls. To certain business types, encryption is a means to an end. It ensures that only people with permission can read the data in a way that’s harder to bypass than filesystem permissions.

- Compliance. This is the perspective of the sort of person who will store the key and the ciphertext in the same database just to claim the data is encrypted at rest, in order to satisfy their understanding of compliance requirements (for example, HIPAA), even if this is, ultimately, security theater.
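To see why storing the key next to the ciphertext is theater, consider what an attacker actually walks away with after a database dump. This sketch uses a stdlib-only toy cipher (an HMAC-SHA256 keystream plus an HMAC tag; a stand-in for a real AEAD, not for production), and the table layout and the separate key store are hypothetical.

```python
import hashlib
import hmac
import secrets


# Toy authenticated encryption using only the standard library. NOT for real
# use: HMAC-SHA256 as a counter-mode keystream, then encrypt-then-MAC.
def _subkeys(key: bytes) -> tuple[bytes, bytes]:
    enc_key = hmac.new(key, b"encrypt", hashlib.sha256).digest()
    mac_key = hmac.new(key, b"authenticate", hashlib.sha256).digest()
    return enc_key, mac_key


def _xor_keystream(enc_key: bytes, nonce: bytes, data: bytes) -> bytes:
    stream = b"".join(
        hmac.new(enc_key, nonce + i.to_bytes(8, "big"), hashlib.sha256).digest()
        for i in range((len(data) + 31) // 32)
    )
    return bytes(d ^ s for d, s in zip(data, stream))


def seal(key: bytes, plaintext: bytes) -> bytes:
    enc_key, mac_key = _subkeys(key)
    nonce = secrets.token_bytes(16)
    ciphertext = _xor_keystream(enc_key, nonce, plaintext)
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def open_sealed(key: bytes, blob: bytes) -> bytes:
    enc_key, mac_key = _subkeys(key)
    nonce, ciphertext, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed")
    return _xor_keystream(enc_key, nonce, ciphertext)


# Anti-pattern: each row carries its own key, so "encrypted at rest"
# is a checkbox rather than a protection.
row_key = secrets.token_bytes(32)
bad_table = [{"id": 1, "key": row_key, "data": seal(row_key, b"patient record")}]

# An attacker with a stolen backup (or a SQL injection) needs nothing else.
stolen = [open_sealed(row["key"], row["data"]) for row in bad_table]
print(stolen)

# Better: only ciphertext lives in the table; the key is held by a separate
# system (a hypothetical key service), so a dump of the table alone is unreadable.
key_service = {"records-key": secrets.token_bytes(32)}
good_table = [{"id": 1, "data": seal(key_service["records-key"], b"patient record")}]
```

Both tables are “encrypted at rest” on paper. Only the second one forces an attacker to compromise a second system.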

Speaking from experience, it’s very difficult to convince people in the second and third camps that a cryptographic weakness in their implementation of a protocol is important.

Law enforcement wavers back and forth on end-to-end encryption. The FBI once called it the “going dark” problem, but apps like Signal are now touted as an important security measure for Americans in the wake of Salt Typhoon. (On that note, I don’t think our telecom systems are robust at all.)

I think it’s reasonable to conclude that, when they’re promoting the use of encryption technology, the US government at large thinks of encryption as a form of access controls rather than privacy. After all, encryption-as-privacy gets in their way.

Furthermore, the recurring demands for “lawful intercept” capabilities (a.k.a. backdoors) are compatible with the “encryption as an access controls mechanism” worldview, while they undermine privacy.

But fuck them, and fuck what they want.

Encryption should be a privacy technology first and foremost.

Always say “No” to backdoors.

As politicians continue to decry the Trump administration’s use of Signal for military operations, remember that the objection is to using an unapproved tool for military communications, not to the cryptography used by Signal (which I recently reviewed).

Closing Remarks

Anyone who insists that the real problem in this story is that the cryptographic security of Signal is lacking (compared to that person’s preferred communication software) is either a liar or a fucking moron.

Feel free to reply to anyone that does this with a link to this blog post. Maybe if they’re annoying enough, I’ll feel spicy and choose to 0day the app they’re peddling (assuming I haven’t already done that before).
