Author: www.hackingdream.net (2025-01-28 16:01:00)

DeepSeek, a groundbreaking Chinese artificial intelligence (AI) company founded in 2023 by Liang Wenfeng and headquartered in Hangzhou, Zhejiang, has captured the global AI market's attention. With backing from the Chinese hedge fund High-Flyer, DeepSeek has quickly risen as a formidable contender in the AI space. In January 2025, the company made waves by releasing its flagship AI model, R1.

If you're looking to run DeepSeek AI locally on your PC or laptop, you're in the right place. In this guide, we'll cover essential details about the R1 model, its advantages, and step-by-step instructions for getting it up and running on your personal machine.

Why Choose DeepSeek AI R1 for Local Use?

DeepSeek's R1 model stands out for several reasons:

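Cost-Efficiency: DeepSeek reported spending roughly $6 million on training (a figure that covers the final training run of its V3 base model, on which R1 is built), yet R1 delivers performance comparable to leading models such as OpenAI's o1, which required substantially higher investment.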

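Mixture of Experts Architecture: Instead of running the whole network for every token, a router activates only a few "expert" sub-networks, so the full R1 model uses about 37 billion of its 671 billion parameters per token. This reduces compute and energy consumption. (The distilled 1.5B to 70B models used later in this guide are conventional dense Qwen and Llama models, not MoE.)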

Open-Source Availability: DeepSeek has made its models open-source, allowing researchers and developers worldwide to access and build upon their work.

Local Deployment: Running DeepSeek AI locally ensures data privacy and eliminates the need for constant internet connectivity.
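To make the Mixture of Experts idea concrete, here is a toy sketch of top-k gating in plain Python. It is not DeepSeek's implementation: it uses a generic softmax gate, and the expert functions, router scores, and `k=2` are made-up values for illustration. The router scores every expert, runs only the `k` best-scoring ones, and mixes their outputs with softmax weights computed over the selected scores.

```python
import math
from typing import Callable, List, Sequence, Tuple

# A toy "expert" maps a scalar input to a scalar output (hypothetical, for illustration).
Expert = Callable[[float], float]


def top_k_indices(scores: Sequence[float], k: int) -> List[int]:
    """Return the indices of the k largest scores, highest first (ties keep original order)."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def moe_forward(x: float, experts: Sequence[Expert], gate_scores: Sequence[float],
                k: int = 2) -> Tuple[float, List[int]]:
    """Run only the top-k experts and combine them with softmax-normalised gate weights.

    Returns the mixed output and the indices of the experts that were actually executed.
    """
    chosen = top_k_indices(gate_scores, k)
    # Softmax over the selected scores only (subtract the max for numerical stability).
    m = max(gate_scores[i] for i in chosen)
    exps = [math.exp(gate_scores[i] - m) for i in chosen]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Only the chosen experts are evaluated; the others cost nothing for this input.
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


if __name__ == "__main__":
    experts = [lambda v: v + 1, lambda v: 2 * v, lambda v: v * v, lambda v: -v]
    scores = [0.1, 2.0, 2.0, -1.0]  # hypothetical router scores for one token
    y, used = moe_forward(3.0, experts, scores, k=2)
    print(used, y)  # prints: [1, 2] 7.5
```

Here only two of the four experts run, which is the same trade-off that lets a large MoE model touch a small fraction of its parameters per token.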

Requirements

Before diving into the setup process, ensure your system meets the following requirements:

- Graphics Card: A CUDA-capable NVIDIA GPU is recommended for fast responses; Ollama can also run models on the CPU, only more slowly.

- Memory: At least 16 GB of RAM, and 32 GB or more for the larger models.

- Operating System: Windows, Linux, or macOS.
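Before installing anything, a quick pre-flight check can confirm the requirements above. The sketch below is a hypothetical helper, not part of Ollama. It reads total RAM through `os.sysconf` (available on Linux, macOS, and inside WSL, but not in native Windows Python), treats the presence of NVIDIA's `nvidia-smi` tool on the `PATH` as a rough signal that a CUDA driver is installed, and estimates weight memory as parameters × bits per weight ÷ 8. The 4-bit default is only a rough stand-in for the quantized builds Ollama commonly serves (real downloads are somewhat larger), and the 1.2 overhead factor is an assumed allowance for runtime buffers, not a measured value. The tag sizes are the ones listed in Step 3.

```python
import os
import shutil
from typing import Optional

# Parameter counts (billions) for the deepseek-r1 tags listed in Step 3.
DEEPSEEK_R1_TAGS = {"1.5b": 1.5, "7b": 7.0, "8b": 8.0, "14b": 14.0, "32b": 32.0, "70b": 70.0}


def total_ram_gb() -> float:
    """Total physical RAM in GB (1 GB = 10**9 bytes).

    Uses os.sysconf, which exists on Linux, macOS and inside WSL but not in
    native Windows Python.
    """
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1e9


def has_nvidia_tools() -> bool:
    """Rough signal only: True if NVIDIA's nvidia-smi utility is on the PATH."""
    return shutil.which("nvidia-smi") is not None


def estimate_weights_gb(params_billion: float, bits_per_weight: float = 4.0,
                        overhead: float = 1.2) -> float:
    """Weights ~= parameters x bits / 8 bytes, times an assumed overhead factor."""
    return params_billion * bits_per_weight / 8 * overhead


def largest_fitting_tag(budget_gb: float, bits_per_weight: float = 4.0,
                        overhead: float = 1.2) -> Optional[str]:
    """Biggest tag whose estimated footprint fits in budget_gb, or None if none fit."""
    fitting = [tag for tag, params in DEEPSEEK_R1_TAGS.items()
               if estimate_weights_gb(params, bits_per_weight, overhead) <= budget_gb]
    return max(fitting, key=lambda tag: DEEPSEEK_R1_TAGS[tag]) if fitting else None


if __name__ == "__main__":
    ram = total_ram_gb()
    print(f"Total RAM: {ram:.1f} GB, nvidia-smi found: {has_nvidia_tools()}")
    print("Largest deepseek-r1 tag that may fit in RAM:", largest_fitting_tag(ram))
```

Treat the result as a starting point only: the operating system and other programs also need memory, and GPU offloading changes where the weights live.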

Process Overview

The process involves four main steps:

- Setting up WSL (Windows Subsystem for Linux)

- Installing Ollama

- Installing DeepSeek AI

- Running the LLM Model

1. Setting Up WSL (Windows Subsystem for Linux)

Note: This step sets up a Linux environment on Windows. If you already have a working Linux setup, feel free to skip to Step 2.

Windows Subsystem for Linux (WSL) lets you run a Linux environment directly on Windows, without the overhead of a traditional virtual machine. Here's how to set it up:

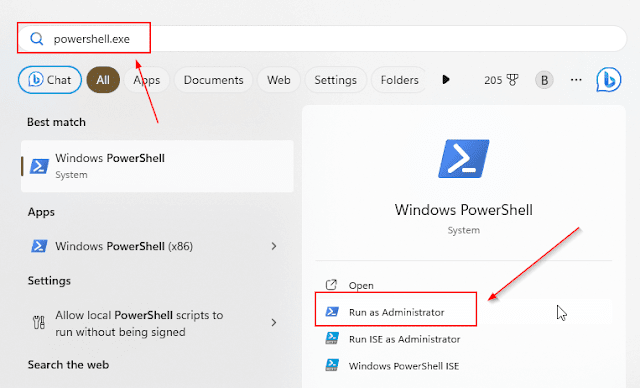

- Enable WSL: Open PowerShell as an administrator and run the command:

`Enable-WindowsOptionalFeature -Online -FeatureName Microsoft-Windows-Subsystem-Linux`

Note: If the output reports `RestartNeeded` as `True`, restart your machine before continuing.

- Install a Linux Distribution: Install Ubuntu (or your preferred distribution) by running:

`wsl --install -d Ubuntu`

You'll be prompted to enter a username and password. Once setup completes, your Linux environment is ready to use. In my case it was already installed, but you should see something similar to the screenshot once setup completes.
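If you later script parts of this setup, it can help to confirm that a script is running inside WSL rather than on a regular Linux host. A widely used heuristic, assumed here rather than an official API, is that WSL kernel release strings contain "microsoft" (for example `5.15.153.1-microsoft-standard-WSL2`). The hypothetical `is_wsl` helper below checks `platform.uname().release` for that marker; the release string can also be passed in directly for testing.

```python
import platform
from typing import Optional


def is_wsl(release: Optional[str] = None) -> bool:
    """Heuristic: WSL kernel release strings contain "microsoft" (any case).

    Pass `release` explicitly for testing; otherwise the running kernel is inspected.
    """
    if release is None:
        release = platform.uname().release
    return "microsoft" in release.lower()


if __name__ == "__main__":
    print("Running inside WSL:", is_wsl())
```

Run from the Ubuntu terminal this should print `True`; a custom-built kernel without the marker would defeat the heuristic.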

2. Installing Ollama

Ollama is a tool that simplifies downloading and running large language models (LLMs) locally. To install it:

- Access the WSL Terminal: Run `wsl -d Ubuntu` in the Command Prompt to open your Linux terminal.

- Install Ollama: Execute the command below. You may need to enter the password of the user you created during WSL setup, because the script uses `sudo`.

`curl -fsSL https://ollama.ai/install.sh | sh`
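After the install script finishes, the Ollama server listens on `http://localhost:11434` by default. The sketch below uses only Python's standard library to check whether that server answers; the function name and the 2-second timeout are illustrative choices, and a non-default address (for example one set through the `OLLAMA_HOST` environment variable) would have to be passed in explicitly.

```python
import urllib.request

DEFAULT_OLLAMA_URL = "http://localhost:11434"  # Ollama's default listen address


def ollama_is_running(base_url: str = DEFAULT_OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server at base_url answers GET / with status 200."""
    try:
        with urllib.request.urlopen(base_url.rstrip("/") + "/", timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError, HTTPError, refused connections and timeouts are all OSError subclasses.
        return False


if __name__ == "__main__":
    print("Ollama server reachable:", ollama_is_running())
```

If this prints `False` right after installation, the server may not have started yet; running any `ollama` command usually makes the problem visible.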

3. Installing DeepSeek AI

- Download the DeepSeek Model: Run `ollama run deepseek-r1:7b` in the WSL terminal. This command downloads the 7-billion-parameter model, which is suitable for most general tasks, and then starts it. The number after `deepseek-r1:` is the model size in billions (B) of parameters. Larger models generally perform better but require more system resources, so choose a model that matches your system's configuration and intended use.

| Model | Command |
|---|---|
| DeepSeek-R1-Distill-Qwen-1.5B | `ollama run deepseek-r1:1.5b` |
| DeepSeek-R1-Distill-Qwen-7B | `ollama run deepseek-r1:7b` |
| DeepSeek-R1-Distill-Llama-8B | `ollama run deepseek-r1:8b` |
| DeepSeek-R1-Distill-Qwen-14B | `ollama run deepseek-r1:14b` |
| DeepSeek-R1-Distill-Qwen-32B | `ollama run deepseek-r1:32b` |
| DeepSeek-R1-Distill-Llama-70B | `ollama run deepseek-r1:70b` |

4. Running the LLM Model

Once installed, the model works much like OpenAI's ChatGPT and can handle a wide range of tasks, such as writing emails and essays, coding, general conversation, and problem solving.

- Response speed depends on your GPU. WSL is recommended over virtual machines such as VMware Workstation and VirtualBox, which need heavy configuration changes before the model can use your GPU.

You can always run `ollama run deepseek-r1:7b` to start the model and interact with DeepSeek AI.
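Besides the interactive `ollama run` session, the Ollama server exposes a REST API that scripts can call. The sketch below sends one prompt to the `/api/generate` endpoint with streaming turned off, so the whole answer comes back as a single JSON object whose `response` field holds the text. It assumes the server is on its default port and that `deepseek-r1:7b` has already been downloaded; the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default Ollama address (assumption: unchanged)


def build_generate_request(model: str, prompt: str,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint with streaming disabled."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt and return the model's full reply text."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["response"]


if __name__ == "__main__":
    print(generate("deepseek-r1:7b", "Explain what WSL is in two sentences."))
```

The generous timeout reflects that a reasoning model on modest hardware can take a while to finish a non-streamed answer.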

- A few other useful Ollama commands:

- Download new models: Browse the Ollama library at ollama.ai, then use `ollama run MODEL_NAME` to download a model.

- List downloaded models: `ollama list`

- Delete a downloaded model: `ollama rm MODEL_NAME`
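The information `ollama list` prints is also available over HTTP: `GET /api/tags` on the local server returns JSON with a `models` array, and each entry carries a `name` such as `deepseek-r1:7b`. The sketch below separates fetching from parsing so the parsing can be checked without a running server; the function names are hypothetical, and only the `name` field is relied on.

```python
import json
import urllib.request
from typing import Any, Dict, List

OLLAMA_URL = "http://localhost:11434"  # default Ollama address (assumption: unchanged)


def model_names(tags: Dict[str, Any]) -> List[str]:
    """Extract sorted model names from an /api/tags response body."""
    return sorted(m["name"] for m in tags.get("models", []))


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> List[str]:
    """Fetch /api/tags from the running server and return the installed model names."""
    with urllib.request.urlopen(base_url.rstrip("/") + "/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))


if __name__ == "__main__":
    for name in list_local_models():
        print(name)
```

A script can use this to check that a model such as `deepseek-r1:7b` is present before sending it prompts.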

Conclusion

Running a ChatGPT-like LLM locally on your Windows PC doesn't have to be a daunting task filled with complex coding. With tools like WSL, Ollama, and DeepSeek AI, you can easily set up and explore the capabilities of large language models. Whether you're a tech enthusiast or a general user, this guide should leave you well equipped to step into the world of AI and machine learning.

You can integrate it into your workflow to boost productivity: automate tasks, get AI-powered suggestions and ideas, and write emails and documents privately. You can also adjust how DeepSeek AI generates its responses and limit them to the scope you need, but that's a topic for another post. That's all for now.
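As a starting point for that kind of integration, the sketch below drafts an email through Ollama's `/api/chat` endpoint, entirely on your own machine. A `system` message narrows the model's scope, and the `options` object sets `temperature` (lower values give more focused output). The prompt wording, the 0.3 temperature, and the function names are illustrative assumptions, not recommended settings.

```python
import json
import urllib.request
from typing import Any, Dict, List

OLLAMA_URL = "http://localhost:11434"  # default Ollama address (assumption: unchanged)


def build_chat_payload(model: str, system: str, user: str,
                       temperature: float = 0.3) -> Dict[str, Any]:
    """Assemble a non-streaming /api/chat request body with a system prompt."""
    messages: List[Dict[str, str]] = [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]
    return {"model": model, "messages": messages, "stream": False,
            "options": {"temperature": temperature}}


def chat(payload: Dict[str, Any], base_url: str = OLLAMA_URL, timeout: float = 300.0) -> str:
    """POST the payload to /api/chat and return the assistant's reply text."""
    req = urllib.request.Request(
        base_url.rstrip("/") + "/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["message"]["content"]


if __name__ == "__main__":
    payload = build_chat_payload(
        "deepseek-r1:7b",
        system="You write short, polite business emails. Reply with the email only.",
        user="Ask a colleague to move Thursday's meeting to Friday afternoon.",
    )
    print(chat(payload))
```

Keeping the payload builder separate from the HTTP call makes it easy to reuse the same system prompt across many requests.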
