2024-12-18 14:00:00 Author: www.malwarebytes.com

It is no secret that online criminals are leveraging artificial intelligence (AI) and large language models (LLMs) in their malicious schemes. While AI tends to be abused to trick people (e.g., with deepfakes) in order to gain something, it is sometimes meant to defeat computer security programs.

With AI, this has become easier, and we are seeing more and more fake content produced for deception. In the criminal underground, decoy web pages or sites are sometimes called “white pages,” as opposed to the “black pages” (malicious landing pages).

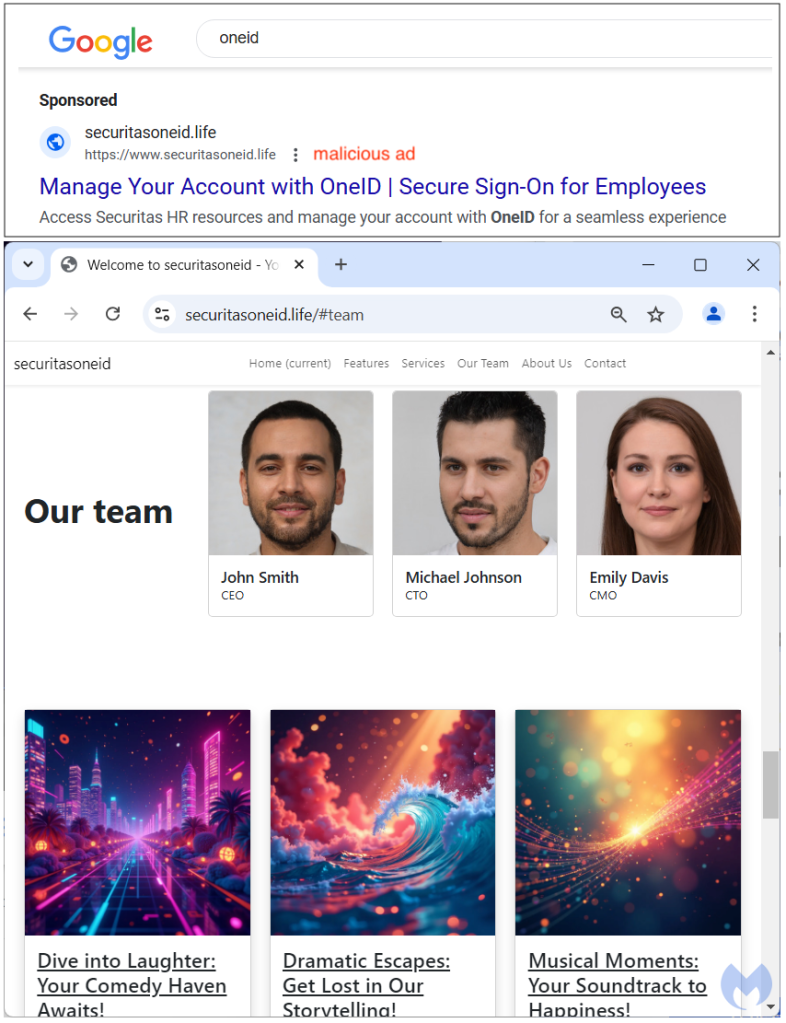

In this blog post, we take a look at a couple of examples where threat actors are buying Google Search ads and using AI to create white pages. The content is unique and sometimes funny if you are a real human, but unfortunately a computer analyzing the code would likely give it a green check.

Fake-faced executives

The first example is a phishing campaign targeting Securitas OneID. The threat actors take care to avoid detection: most of the time, their ads redirect to a completely bogus page that has nothing to do with the phishing portal one would expect.

It did cross our minds they could very well be trolling security researchers, but if that was truly the case, why not simply go for Rick Astley’s Never Gonna Give You Up?

The entire site was created with AI, including the team’s faces. While in the past, criminals would go for stock photos or maybe steal a Facebook profile, now it’s easier and faster to make up your own, and it’s even copyright-free!

When Google tries to validate the ad, it will see this cloaked page with fairly unique content and absolutely nothing malicious about it.
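One way a defender might probe for this kind of cloaking is to request the same ad destination under two different client profiles and compare what comes back. The sketch below is only an illustration under assumptions: the function names, the 0.5 threshold, and the sample HTML strings are hypothetical, and the fetching step is left out entirely so the comparison logic can be read on its own.

```python
from difflib import SequenceMatcher
import re


def visible_text(html: str) -> str:
    """Crudely strip tags and collapse whitespace (not a real HTML parser)."""
    without_tags = re.sub(r"<[^>]+>", " ", html)
    return re.sub(r"\s+", " ", without_tags).strip().lower()


def similarity(html_a: str, html_b: str) -> float:
    """Return a 0..1 similarity ratio between the visible text of two pages."""
    return SequenceMatcher(None, visible_text(html_a), visible_text(html_b)).ratio()


def looks_cloaked(html_a: str, html_b: str, threshold: float = 0.5) -> bool:
    """Flag the pair when the two responses are less similar than the threshold."""
    return similarity(html_a, html_b) < threshold


# Hypothetical responses for the same URL under two client profiles.
seen_by_validator = (
    "<html><body><h1>Meet our team</h1><p>We build great things.</p></body></html>"
)
seen_by_victim = (
    "<html><body><form><input name='user'><input name='password'></form>"
    "Sign in to continue</body></html>"
)

print(looks_cloaked(seen_by_validator, seen_by_validator))  # same page twice
print(looks_cloaked(seen_by_validator, seen_by_victim))     # two different pages
```

A text-similarity ratio is a blunt instrument. Legitimate sites also vary their content by region or device, so a low score is a reason to take a closer look rather than a verdict.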

Parsec and the universe

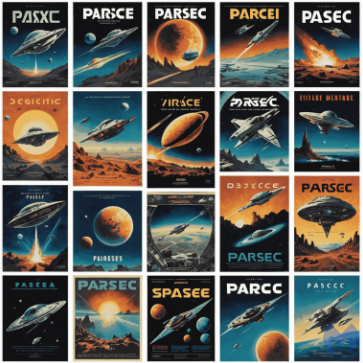

Our second example is another Google ad, this time for Parsec, a popular remote desktop program used by gamers.

It so happens that a parsec is also an astronomical unit of measurement, and the threat actors (or should we say the AI) went wild with it, creating a white page heavily influenced by Star Wars.

The artwork, including posters, is actually quite nice, even for a non-fan.

Once again, this cloaked content is a complete diversion which will take detection engines for a ride.
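The gap between what the ad promises and what the page actually talks about can be expressed as a very rough check. The following is a minimal sketch, not a description of how any real detection engine works: the function `missing_expected_terms`, the term list, and the sample page text are all made up for illustration, and a decoy that simply mentions the right product terms would pass it.

```python
import re


def missing_expected_terms(page_text: str, expected_terms: list[str]) -> list[str]:
    """Return the expected terms that never appear as whole words or phrases."""
    normalized = re.sub(r"\s+", " ", page_text).lower()
    missing = []
    for term in expected_terms:
        pattern = r"\b" + re.escape(term.lower()) + r"\b"
        if not re.search(pattern, normalized):
            missing.append(term)
    return missing


# Hypothetical terms one might expect on a landing page for a remote desktop product.
expected = ["remote desktop", "download", "streaming"]

# Made-up decoy text in the spirit of the astronomy-themed white page.
decoy_text = "A parsec is a unit of distance. Explore the galaxy and our poster collection."

print(missing_expected_terms(decoy_text, expected))
```

When every expected term is missing, the page is probably not what the ad advertised, which is the same "this doesn't add up" signal a human reader picks up at a glance.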

AI vs AI: humans to the rescue

These are just some of the many examples of AI being misused. Readers may recall that in the early days of deepfakes, companies were already training AI to detect AI.

There will naturally be content produced by AI for legitimate reasons. After all, nothing prohibits anyone from creating a website entirely with AI, simply because it’s a fun thing to do.

In the end, AI can be seen as a tool which on its own is neutral but can be placed in the wrong hands. Because it is so versatile and cheap, criminals have embraced it eagerly.

Ironically, it is quite straightforward for a real human to identify much of the cloaked content as just fake fluff. Sometimes, things just don’t add up and are simply comical. Do jokes trigger the same reaction in an AI engine as they would in a human? It doesn’t seem like it… yet.

