2024-12-11 00:16:52 Author: hackernoon.com

Table of Links

Discussion and Broader Impact, Acknowledgements, and References

D. Differences with Glaze Finetuning

H. Existing Style Mimicry Protections

3 Threat Model

The goal of style mimicry is to produce images, of some chosen content, that mimic the style of a targeted artist. Since artistic style is challenging to formalize or quantify, we refrain from doing so and define a mimicry attempt as successful if it generates new images that a human observer would qualify as possessing the artist’s style.

We assume two parties: the artist, who places art online (e.g., in their portfolio), and a forger, who performs style mimicry using these images. The challenge for the forger is that the artist first protects their original art collection before releasing it online, using a state-of-the-art protection tool such as Glaze, Mist, or Anti-DreamBooth. We make the conservative assumption that all of the artist’s images available online are protected. If a mimicry method succeeds in this setting, we call it robust.

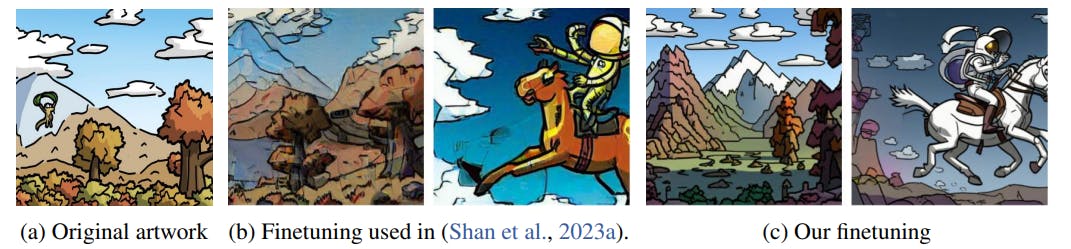

In this work, we consider style forgers who finetune a text-to-image model on an artist’s images, the most successful style mimicry method to date (Shan et al., 2023a). Specifically, the forger finetunes a pretrained model f on protected images X from the artist to obtain a finetuned model f̂. The forger has full control over the protected images and the finetuning process, and can arbitrarily modify both to maximize mimicry success. Our robust mimicry methods combine a number of “off-the-shelf” manipulations that allow even low-skilled parties to bypass existing style mimicry protections. In fact, our most successful methods require only black-box access to a finetuning API for the model f, and could thus also be applied to proprietary text-to-image models that expose such an interface.
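
To make the shape of this threat model concrete, the following is a minimal sketch of the forger's pipeline under the black-box assumption: manipulate the protected images X, then hand them to a finetuning interface that returns f̂. Every name here (`robust_mimicry`, `quantize`, `toy_finetune`) is hypothetical and chosen for illustration. The quantization step is only a placeholder for an "off-the-shelf" manipulation, not one named in this section, and the toy finetuning function stands in for a real API rather than modeling any particular service.

```python
from typing import Callable, List, Sequence

# Toy stand-ins (assumptions): an "image" is a flat list of pixel values in [0, 1].
Image = List[float]
Model = Callable[[str], Image]                    # prompt -> generated image
Manipulation = Callable[[Image], Image]           # forger-side preprocessing step
FinetuneAPI = Callable[[Sequence[Image]], Model]  # black box: images -> finetuned model


def quantize(image: Image, levels: int = 8) -> Image:
    """Hypothetical off-the-shelf manipulation: coarse pixel quantization."""
    return [round(p * (levels - 1)) / (levels - 1) for p in image]


def robust_mimicry(protected: Sequence[Image],
                   manipulations: Sequence[Manipulation],
                   finetune: FinetuneAPI) -> Model:
    """Apply each manipulation, in order, to every protected image, then finetune."""
    processed = []
    for image in protected:
        for manipulate in manipulations:
            image = manipulate(image)
        processed.append(image)
    # The forger only calls the interface; it never inspects the weights of f.
    return finetune(processed)


def toy_finetune(images: Sequence[Image]) -> Model:
    """Placeholder for a finetuning API: the 'model' returns the mean training image."""
    n = len(images)
    mean = [sum(column) / n for column in zip(*images)]
    return lambda prompt: mean


if __name__ == "__main__":
    X = [[0.0, 0.5, 1.0], [1.0, 0.5, 0.0]]  # toy "protected" images
    f_hat = robust_mimicry(X, [quantize], toy_finetune)
    print(f_hat("a castle in the artist's style"))
```

The point of the sketch is the interface boundary: the forger controls everything passed into `finetune` but needs nothing from inside it, which is why the same pipeline would apply to a proprietary model that exposes a finetuning endpoint.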
