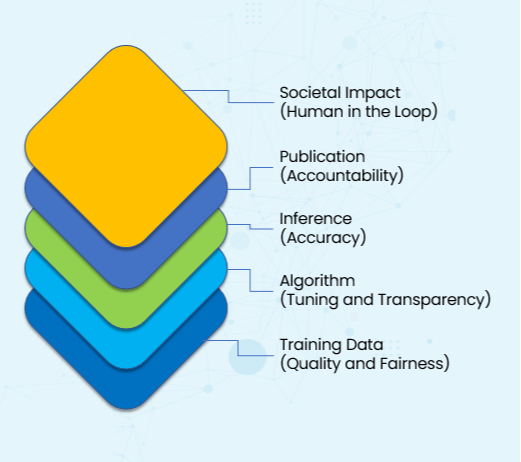

Discussions about Generative AI safety are more urgent than ever. As AI systems become more embedded in our daily lives, from personalized recommendations to complex decision-making processes, ensuring their safety and ethical alignment with human values is paramount. However, the complexity of AI systems requires a nuanced understanding of the multiple layers that contribute to their reliability and societal impact. To unravel this complexity, it helps to think of AI safety as an onion, with each layer addressing a critical facet of the AI lifecycle.

Training Data (Quality and Fairness):

At the core of any AI system lies the data on which it is trained. The adage "garbage in, garbage out" could not be more applicable here. AI models rely on vast amounts of data, and the quality of that data directly affects their performance. Poor-quality data introduces biases and inaccuracies, which can result in skewed outputs or even harmful outcomes. Ensuring the quality, diversity, and fairness of training data is a foundational step towards building systems that are not only accurate but also fair and representative of diverse groups.
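One way to make the diversity check concrete is to measure how much of the training set each group contributes. The sketch below is a minimal illustration rather than a full fairness audit: the `representation_report` function, the `region` field, and the `min_share` threshold are all hypothetical names and values chosen for the example.

```python
from collections import Counter


def representation_report(records, group_key, min_share=0.10):
    """Return each group's share of the data and flag groups below min_share."""
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    return {
        group: {
            "share": count / total,
            "underrepresented": count / total < min_share,
        }
        for group, count in counts.items()
    }


# Hypothetical toy records; a real audit would read the actual training set.
records = (
    [{"region": "north"}] * 6
    + [{"region": "south"}] * 3
    + [{"region": "east"}] * 1
)

report = representation_report(records, "region", min_share=0.15)
for group, stats in report.items():
    print(group, round(stats["share"], 2), stats["underrepresented"])
```

A share-based check like this only catches missing or thin groups. It says nothing about label quality or historical bias inside each group, which need their own review.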

Algorithm (Tuning and Transparency):

Moving outward, we reach the algorithm itself. Algorithms define how the AI interprets data, learns from it, and generates predictions. The choices made during algorithm tuning and development can have far-reaching consequences, and transparency in these processes is essential for accountability. By understanding how an AI model makes decisions, we can prevent the "black box" phenomenon, where even developers don’t fully understand how certain outputs are produced. Transparency enables stakeholders to scrutinize, audit, and improve AI systems continually, aligning them with intended ethical standards.

Inference (Accuracy):

As AI systems process data and make predictions, the accuracy of these inferences becomes the next critical layer. Inaccurate inferences can lead to costly errors, especially in high-stakes fields such as healthcare, finance, and law enforcement. Rigorous testing and validation of AI models are essential to ensure that they perform as expected in real-world scenarios. This layer underscores the importance of accuracy and reliability, setting a high standard for performance before deployment.
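As a rough illustration of what testing before deployment can look like, the sketch below computes accuracy separately for each group in a held-out set and blocks release if any group falls below a minimum. The function names, the group labels, and the 0.8 threshold are assumptions made for the example, not a recommended standard.

```python
def accuracy_by_group(y_true, y_pred, groups):
    """Compute the fraction of correct predictions for each group."""
    totals, correct = {}, {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] = totals.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + int(truth == pred)
    return {group: correct[group] / totals[group] for group in totals}


def passes_release_gate(y_true, y_pred, groups, min_accuracy=0.8):
    """Pass only if every group meets the minimum accuracy."""
    per_group = accuracy_by_group(y_true, y_pred, groups)
    passed = all(acc >= min_accuracy for acc in per_group.values())
    return passed, per_group


# Hypothetical held-out labels, predictions, and group membership.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "b", "b", "b"]

passed, per_group = passes_release_gate(y_true, y_pred, groups)
print(passed, per_group)
```

Checking each group separately matters because a single overall accuracy figure can hide a group for which the model performs poorly.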

Publication (Accountability):

Accountability comes into play when AI models are deployed into society. Publication and documentation of AI systems' goals, methods, and expected outcomes create an essential checkpoint for their use. This layer of the onion ensures that the developers and operators of AI systems can be held accountable for their actions and decisions. Clear documentation, including the limitations and potential risks of the AI, helps stakeholders understand and anticipate its impact, fostering a culture of responsibility in AI deployment.
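Documentation of this kind can be kept as structured data so that gaps are easy to spot before release. The sketch below assumes a hypothetical `ModelCard` record whose fields mirror the items mentioned above (goals, methods, expected outcomes, limitations, risks). The field names and the example system are illustrative and do not follow any particular standard.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Hypothetical documentation record for a deployed AI system."""
    name: str
    intended_use: str
    methods: str
    expected_outcomes: str
    limitations: list = field(default_factory=list)
    known_risks: list = field(default_factory=list)

    def missing_sections(self):
        """List the sections that are still empty."""
        return [key for key, value in asdict(self).items() if not value]


# Illustrative values only; not a description of any real system.
card = ModelCard(
    name="loan-screening-demo",
    intended_use="Rank applications for manual review",
    methods="Gradient-boosted trees on tabular application data",
    expected_outcomes="Shorter review queues with unchanged approval criteria",
    limitations=["Not validated for applicants under 21"],
)

print(json.dumps(asdict(card), indent=2))
print("Missing sections:", card.missing_sections())
```

Here the empty `known_risks` section is reported as missing, which is the kind of gap a publication checkpoint is meant to catch.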

Societal Impact (Human in the Loop):

At the outermost layer lies the societal impact, where AI systems intersect with human lives. This is where "human in the loop" becomes essential. No AI system should operate in complete isolation from human oversight, especially when it comes to decisions affecting individuals and communities. Ensuring that humans have the ability to monitor, intervene, and correct AI processes can prevent unintended consequences, biases, or harmful actions. This final layer emphasizes the need for a balance between AI autonomy and human oversight, promoting AI systems that enhance human well-being rather than detract from it.
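A common way to keep a human in the loop is to send low-confidence or high-stakes predictions to a reviewer instead of acting on them automatically. The sketch below is one simple version of that idea. The `route` function, the `Decision` record, and the 0.85 threshold are hypothetical, and a real system would also need a review queue and an audit trail.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    confidence: float
    needs_human_review: bool


def route(label, confidence, threshold=0.85, high_stakes=False):
    """Flag a prediction for human review if it is high-stakes or uncertain."""
    needs_review = high_stakes or confidence < threshold
    return Decision(label, confidence, needs_review)


# Hypothetical predictions from an upstream model.
print(route("approve", 0.95))
print(route("approve", 0.60))
print(route("deny", 0.99, high_stakes=True))
```

The `high_stakes` flag reflects the point above: some decisions warrant human review no matter how confident the model is.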

Peeling back these layers reveals the complexity of AI safety, which cannot be addressed by focusing on any single aspect. As in an onion, each layer builds on the ones beneath it, contributing to a holistic approach to safety that spans from data quality to societal impact. As we continue to integrate AI into more areas of life, it's crucial to adopt this layered perspective. AI safety is not just a technical issue; it is a societal one that requires ongoing vigilance, transparency, and a commitment to ethical standards at every level.

The future of AI depends on our ability to address each layer thoughtfully, building systems that are as beneficial as they are innovative. By treating AI safety as an onion, we peel away the superficial and dig deep into the core of responsible AI development, working toward a future where technology serves humanity rather than the other way around.

For those interested, I wrote a more detailed discussion on this layered approach here: http://dx.doi.org/10.13140/RG.2.2.15152.57604

Happy for your comments and feedback.

About Me: IT veteran of 25+ years combining data, AI, risk management, strategy, and education. 4x hackathon winner and advocate for social impact through data. Currently working to jumpstart the AI workforce in the Philippines. Learn more about me here.
