Published 2024-08-29 18:00:23. Author: unit42.paloaltonetworks.com

Executive Summary

Our researchers discovered dozens of scam campaigns using deepfake videos featuring the likeness of various public figures, including CEOs, news anchors and top government officials. These campaigns appear in English, Spanish, French, Italian, Turkish, Czech and Russian. Each campaign typically targets potential victims in a single country, including Canada, Mexico, France, Italy, Turkey, Czechia, Singapore, Kazakhstan and Uzbekistan.

Due to their infrastructural and tactical similarities, we believe that many of these campaigns likely stem from a single threat actor group. We have observed this threat actor group using deepfake videos to spread fake investment schemes and fake government-sponsored giveaways.

As of June 2024, we had discovered hundreds of domains being used to promote these campaigns. Each domain has been accessed an average of 114,000 times globally since going live, based on our passive DNS (pDNS) telemetry.

Starting with a campaign promoting an investment scheme called Quantum AI, we studied the infrastructure behind this campaign to track its spread over time. Through this infrastructure investigation, we discovered several additional deepfake campaigns leveraging completely different themes that the same threat actor group created and promoted. These additional scam campaigns used different languages and the likeness of different public figures, suggesting that each campaign is intended for a different target audience.

Despite the use of generative AI (GenAI) in these campaigns, traditional investigative techniques remain useful to identify the hosting infrastructure leveraged by these threat actors. As the malicious usage of deepfake technology increases among threat actors, so should defenders’ efforts to proactively detect and prevent these types of attacks.

Customers of Palo Alto Networks are better protected from these attacks via Advanced URL Filtering, which will continue to detect and block websites that are used to propagate deepfake-based scam campaigns.

Related Unit 42 Topics: Scams, Phishing

Discovering Quantum AI Hosting Infrastructure

To study the Quantum AI campaign, we decided to analyze the infrastructure behind the websites hosting these attacks. Starting with an initial seed set of detections from our deepfake video detection pipeline, we then looked for additional websites serving up known malicious videos. We observed several Quantum AI-related videos that adversaries were widely sharing via websites hosted on newly registered domains.

Upon further investigation, we identified that the videos themselves were primarily hosted on a single domain:

- belmar-marketing[.]online

Next, we searched for other webpages that served video files from this domain and also contained keywords like Quantum AI in the HTML content.

Through this process, we were able to uncover dozens of other scam pages using these videos as a lure. We then used the content on these webpages as well as the pathnames of the video files to create signatures to expand our detections. (See the Indicators of Compromise section for example URLs.)
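The search described above can be sketched in a few lines of Python. This is a minimal illustration, not the detection pipeline used in the research: the function names, the simple `src=` regular expression and the keyword list are all assumptions made for the example, and real signatures would also need to handle `<source>` tags, JavaScript-injected players and obfuscated markup.

```python
import re
from urllib.parse import urlparse

# Video-hosting domain named in this article (refanged so it can be matched).
VIDEO_HOSTS = {"belmar-marketing.online"}
# Keyword assumed for illustration; the article mentions "Quantum AI".
KEYWORDS = ("quantum ai",)

# Deliberately simple pattern: any src attribute that ends in .mp4.
MP4_SRC = re.compile(r'src=["\']([^"\']+\.mp4)["\']', re.IGNORECASE)


def host_matches(hostname, known_hosts):
    """True if hostname equals a known host or is a subdomain of one."""
    hostname = (hostname or "").lower()
    return any(hostname == h or hostname.endswith("." + h) for h in known_hosts)


def find_scam_videos(html, known_hosts=VIDEO_HOSTS, keywords=KEYWORDS):
    """Return .mp4 URLs served from known hosts, if the page also has a keyword."""
    text = html.lower()
    if not any(keyword in text for keyword in keywords):
        return []
    urls = MP4_SRC.findall(html)
    return [u for u in urls if host_matches(urlparse(u).hostname, known_hosts)]
```

A page is flagged only when both conditions hold (a keyword in the HTML and a video served from a known hosting domain), which mirrors the two-part search described in the text.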

In May 2024, we noticed that an increasing number of Quantum AI videos were being hosted on other domains as well, including the following:

- ai-usmcollective[.]click

- fortunatenews[.]com

- fiirststreeeet[.]top

We also discovered some sites built using website builders that were promoting the same Quantum AI scam. In these cases, the attackers re-hosted the video directly on the site via the website builder, not on one of the shared video-hosting domains mentioned above.

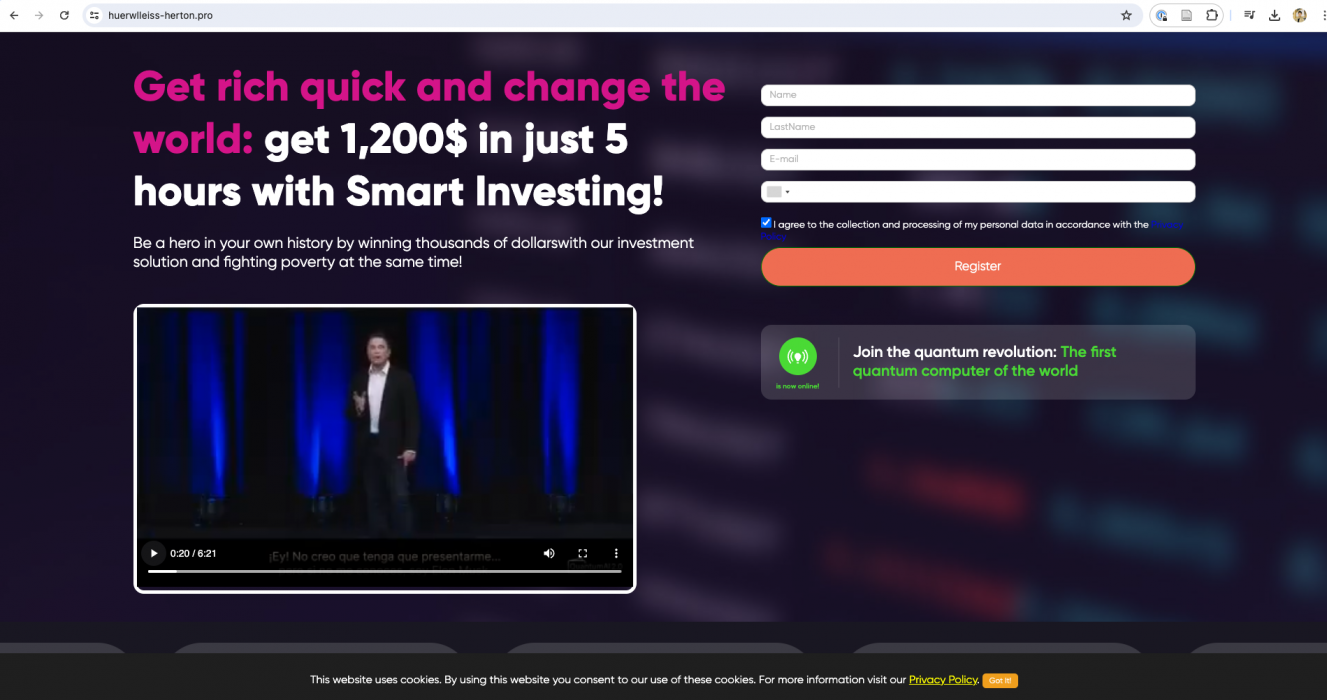

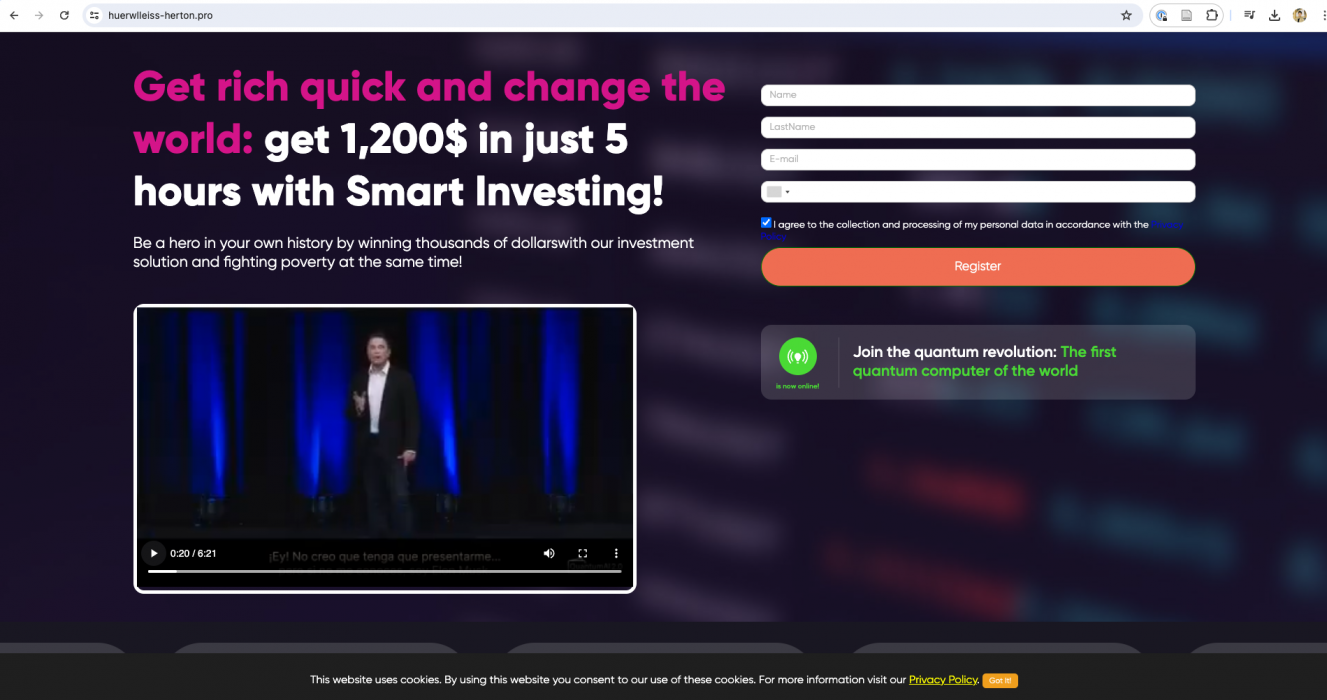

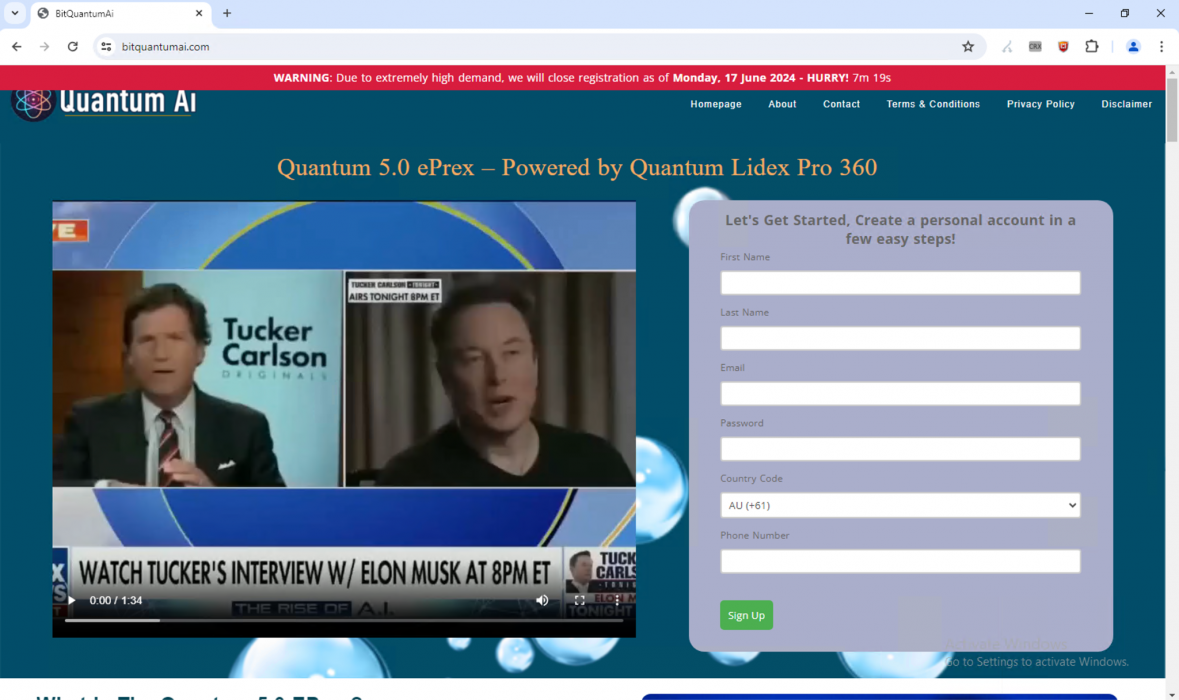

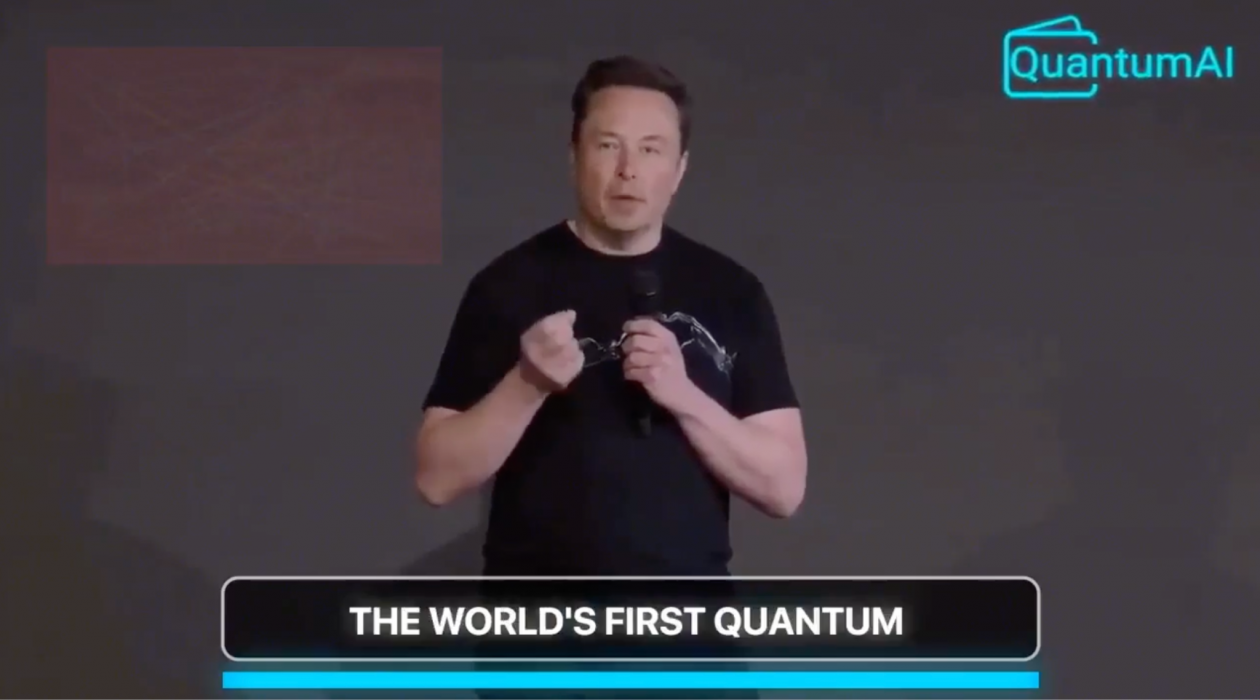

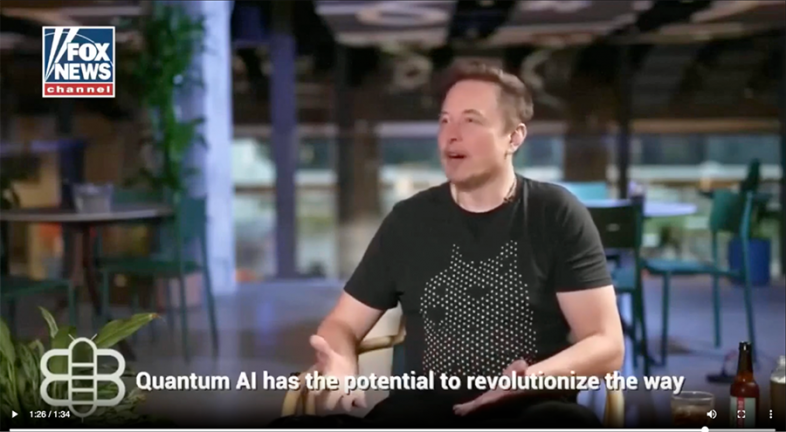

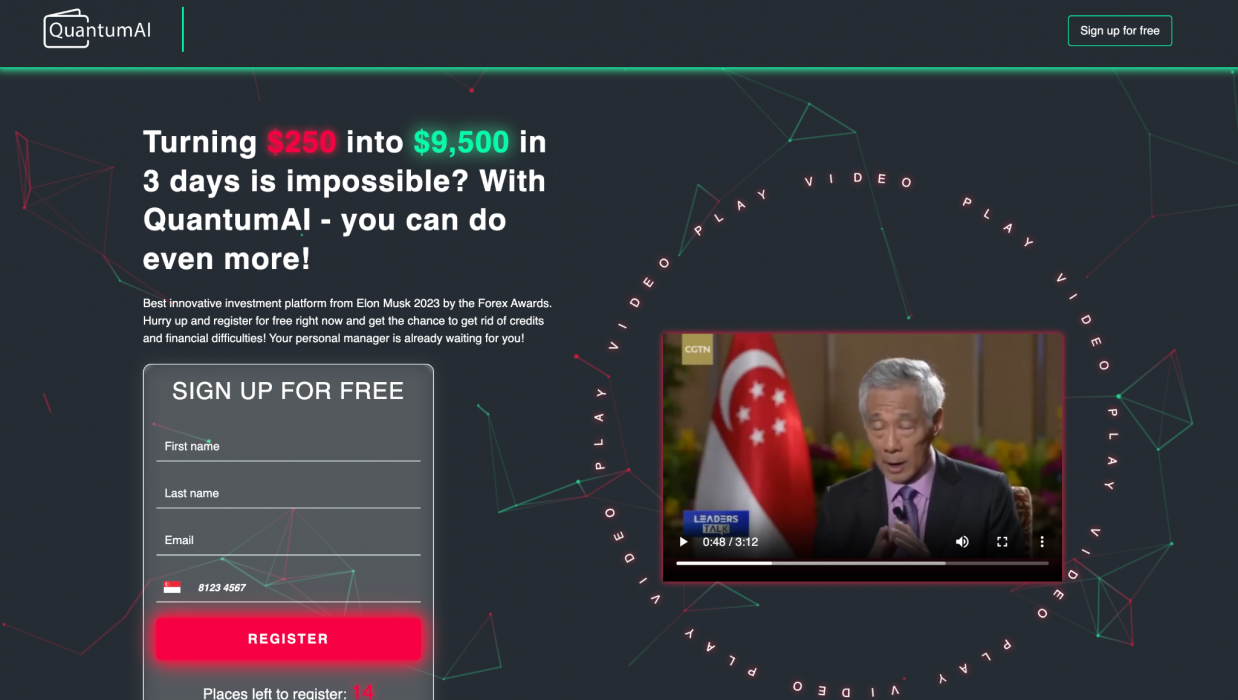

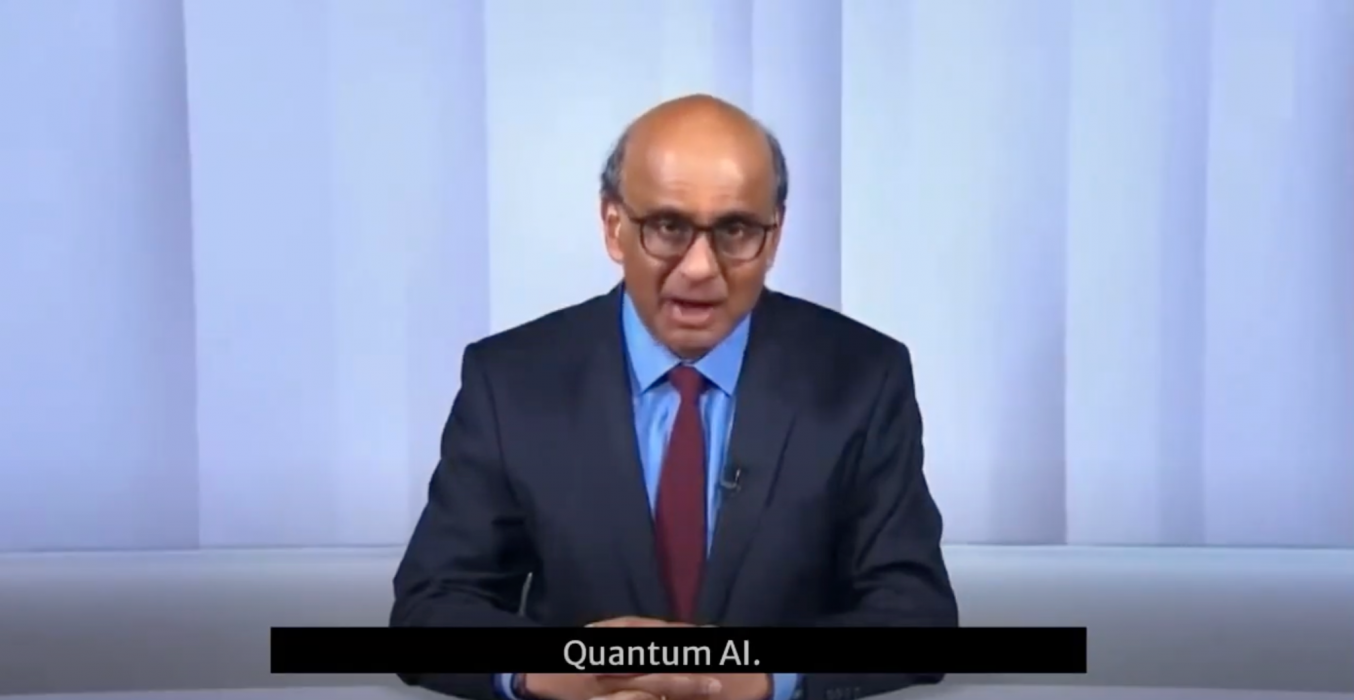

Quantum AI Example Videos and Webpages

In Figures 1-7, we present examples of Quantum AI scam webpages and videos discovered by our video analysis pipeline and infrastructure-based investigation. In most of these examples, the scam webpage was hosted on a newly registered domain, and the video was hosted as an .mp4 file on one of the shared video-hosting domains mentioned above (e.g., belmar-marketing[.]online).

In most cases, the attackers appear to have started with a legitimate video and added their own AI-generated audio. They then used lip-syncing technology to modify the lip movement of the speaker to match the AI-generated audio. Most of the videos use Elon Musk as their celebrity of choice, although we discovered a handful of examples using other public figures as well:

- Tucker Carlson

- Lee Hsien Loong, the former Prime Minister of Singapore

- Tharman Shanmugaratnam, the President of Singapore (as of June 2024)

Deepfake Campaign Discovery and Tracking

After investigating the Quantum AI scam campaign, we explored broader trends surrounding these deepfake campaigns as a whole.

Uncovering Additional Deepfake Scams

In addition to the Quantum AI scam, we also investigated the other videos that were present on these video-hosting domains. In doing so, we were able to uncover several other scam campaigns (likely propagated by the same threat actor group), many of which use deepfake videos as a lure. These deepfake videos typically use the likeness of public figures like CEOs, news anchors or top government officials.

We discovered deepfake videos in several different languages, including English, Spanish, French, Italian, Turkish, Czech and Russian. Each campaign typically targets potential victims in a single country, including Canada, Mexico, France, Italy, Turkey, Czechia, Singapore, Kazakhstan and Uzbekistan.

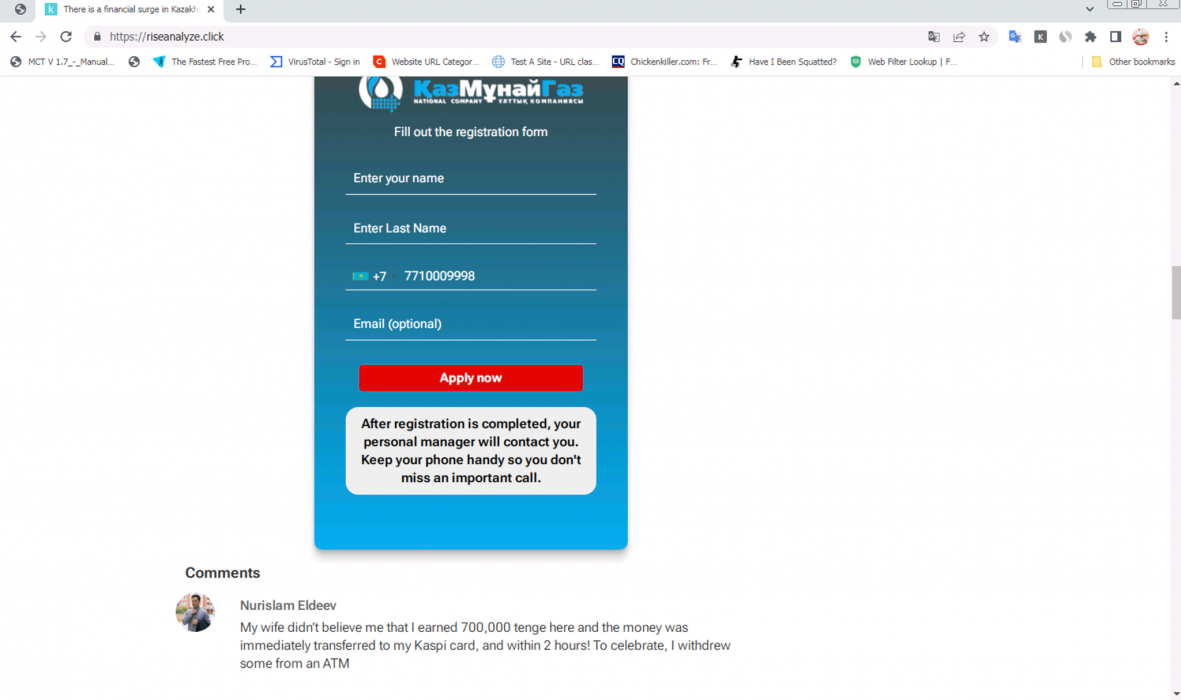

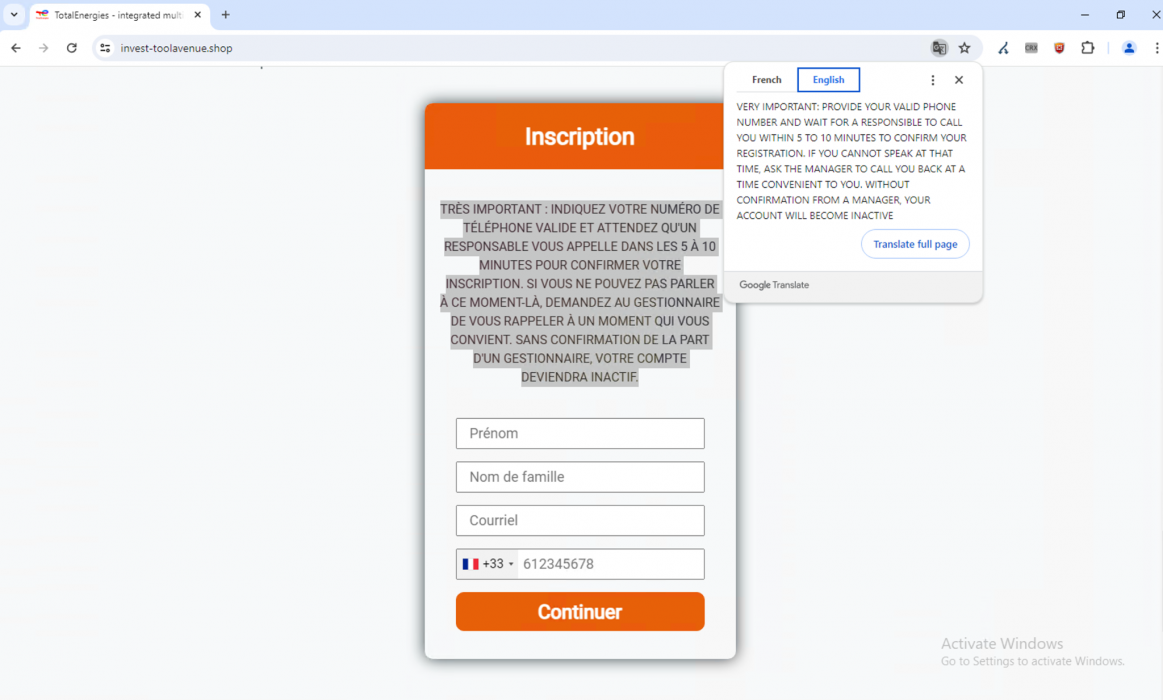

Similar to the Quantum AI scam campaign, these videos add AI-generated audio on top of an existing video and use lip-syncing tools to alter the lip movement of the speaker to match the new audio. Visitors to these webpages are prompted to register with their name and phone number, and they are instructed to await a call from an account manager or representative.

In many of these cases, we discovered several newly registered domains hosting the same content (and the same deepfake video lure). This means that each of these scam webpages is part of a larger campaign, not just a one-off scam webpage.

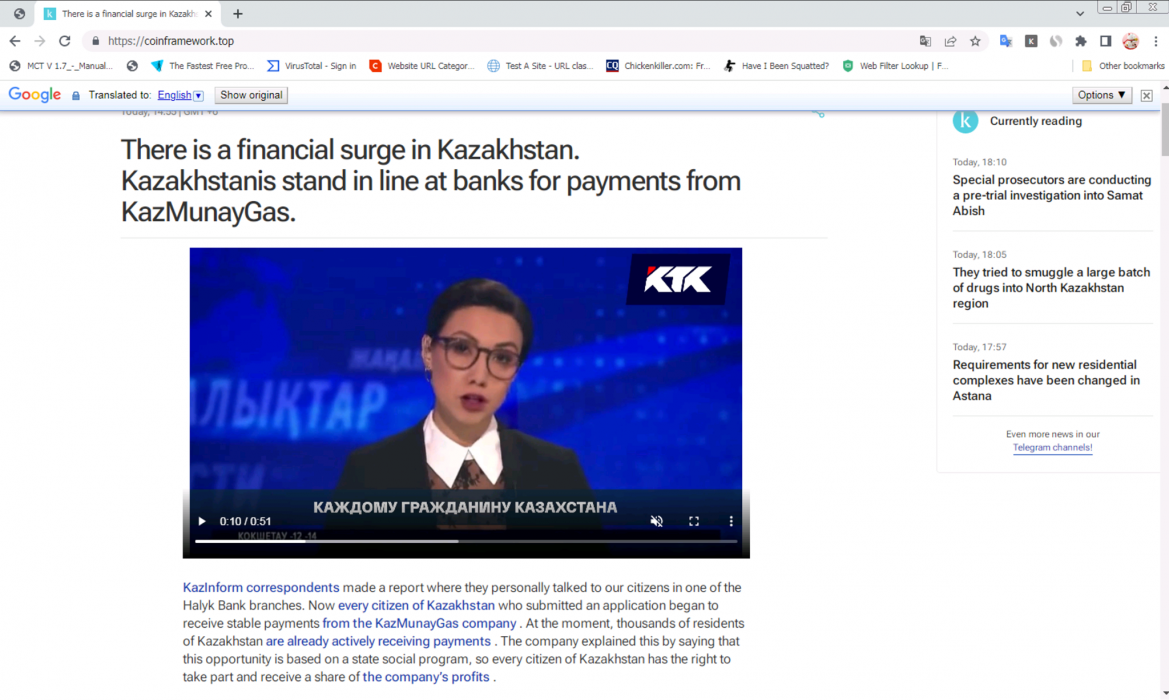

For example, in the case of the KazMunayGas scam shown below in Figure 8, we found scammers using the same video across several recently registered domains, including:

- aigroundwork[.]top

- aitfinside[.]shop

- block4aischeme[.]top

- systemaigroundwork[.]shop

The Indicators of Compromise section provides a more complete list of these domains.

Although the languages and impersonation targets of these scam campaigns are different, they share the following similarities:

- They all use similar deepfake techniques

- They have similar calls to action

- They host their videos on a small, shared set of domains (that do not seem to be used for any other purpose than to host these scam videos)

This suggests that these campaigns can most likely be attributed to a single threat actor group.

We present some selected examples of these additional scam campaigns in Figures 10-15.

Campaign Activity Over Time

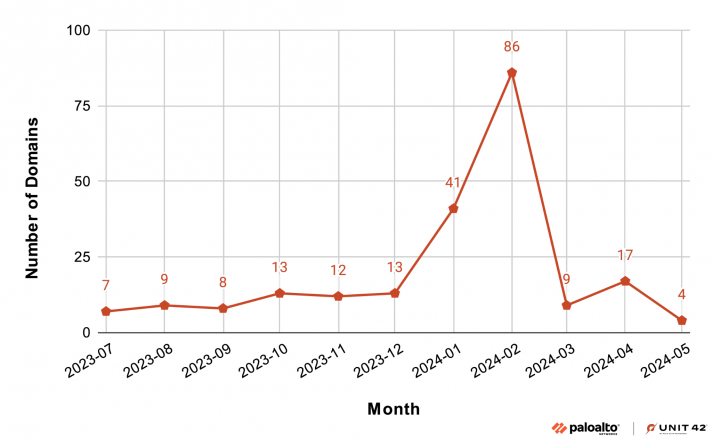

While these campaigns started about a year ago, we observed a large spike of newly observed domains (shown in Figure 16) in February 2024. Unlike typical phishing or malware domains, these domains are relatively long-lived, with an average active time of 142 days.

Figure 17 shows that the number of active domains exponentially increased until about March 2024, and the total number of active domains has remained steady to the present day.

Furthermore, these domains have a concerningly high reach: each domain has been accessed an average of 114,000 times since it first went live, based on pDNS telemetry. The actual number is likely much higher.
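For readers who want to reproduce this kind of lifetime statistic on their own data, the sketch below shows one way to compute an average active time from first-seen and last-seen dates. The domain names and dates are hypothetical placeholders, not values from our telemetry, and the definition of "active time" as last-seen minus first-seen is an assumption.

```python
from datetime import date


def active_days(first_seen, last_seen):
    """Number of days between the first and last observation of a domain."""
    return (last_seen - first_seen).days


def average_active_days(observations):
    """Mean active time in days over a {domain: (first_seen, last_seen)} mapping."""
    spans = [active_days(first, last) for first, last in observations.values()]
    return sum(spans) / len(spans) if spans else 0.0


# Hypothetical observations for illustration only.
observations = {
    "example-a.test": (date(2024, 1, 10), date(2024, 5, 29)),
    "example-b.test": (date(2024, 2, 1), date(2024, 6, 25)),
}
print(average_active_days(observations))
```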

Hosting Infrastructure Analysis

In keeping with the current trend of camouflaging malicious sites within shared hosting infrastructure, 86.7% of these campaign domains use the same popular content delivery network (CDN). Multiple IP addresses from the CDN host the same content across different geographic locations, with the U.S., the Netherlands and Russia being the top three locations. The use of a CDN makes it difficult to attribute the campaign to a particular threat actor or geolocation.
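A share like the one above is a simple tally once each domain has been mapped to its hosting provider. The following sketch assumes that mapping already exists (for example, from resolving each domain and looking up the owner of the IP range); the provider labels and the 13-of-15 split are hypothetical and chosen only to show the arithmetic.

```python
from collections import Counter


def provider_shares(domain_to_provider):
    """Percentage of domains per hosting provider, given {domain: provider}."""
    total = len(domain_to_provider)
    if total == 0:
        return {}
    counts = Counter(domain_to_provider.values())
    return {provider: 100.0 * n / total for provider, n in counts.items()}


# Hypothetical mapping: 13 of 15 domains sit behind the same CDN.
mapping = {f"domain-{i}.test": "cdn-x" for i in range(13)}
mapping.update({"domain-13.test": "host-y", "domain-14.test": "host-z"})

shares = provider_shares(mapping)
print(round(shares["cdn-x"], 1))
```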

We observed that the attackers primarily hosted their videos on a small set of attacker-owned domains. Attackers presumably did this to circumvent takedown issues that could occur on more popular video-hosting platforms.

Until April 2024, we observed that the deepfake videos were predominantly hosted on belmar-marketing[.]online. However, starting in May 2024, the attackers began to rotate their video hosting locations more frequently. As such, we observed that videos were now mainly hosted on fiirststreeeet[.]top, ai-usmcollective[.]click and fortunatenews[.]com.

Looking for webpages that link to videos hosted on these domains has been very valuable for tracking the spread of these campaigns so far. We will continue to monitor for new video-hosting domains as these campaigns progress.
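One way to spot a new video-hosting domain is to pivot on video filenames, since several of the video URLs listed in the Indicators of Compromise section reuse the same filename on different hosts. The sketch below groups defanged video URLs by filename and reports filenames seen on more than one host. The helper names are hypothetical, and the parsing assumes the defanging style used in this article (`hxxps[:]//` and `[.]`).

```python
from collections import defaultdict


def split_defanged(url):
    """Return (host, filename) from a defanged URL like hxxps[:]//host[.]tld/dir/file[.]mp4."""
    rest = url.split("//", 1)[-1]          # drop the scheme if present
    host, _, path = rest.partition("/")    # host is everything before the first slash
    filename = path.rsplit("/", 1)[-1]     # last path segment
    return host.replace("[.]", ".").lower(), filename.replace("[.]", ".")


def hosts_by_filename(urls):
    """Map each filename to its set of hosts, keeping only filenames seen on 2+ hosts."""
    groups = defaultdict(set)
    for url in urls:
        host, filename = split_defanged(url)
        groups[filename].add(host)
    return {name: hosts for name, hosts in groups.items() if len(hosts) > 1}
```

When a known filename appears on a host that is not yet in the tracked set, that host is a candidate for review as a new video-hosting domain.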

How the Quantum AI Scam Works

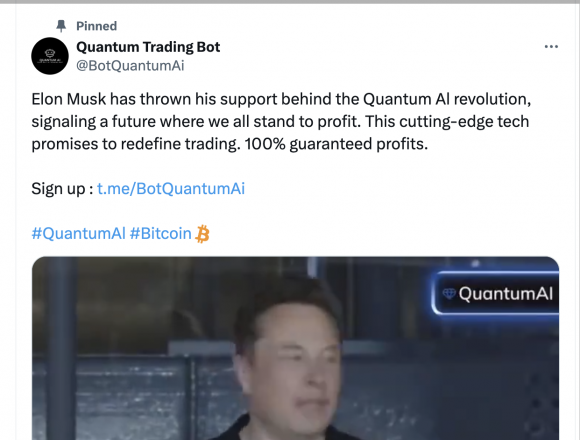

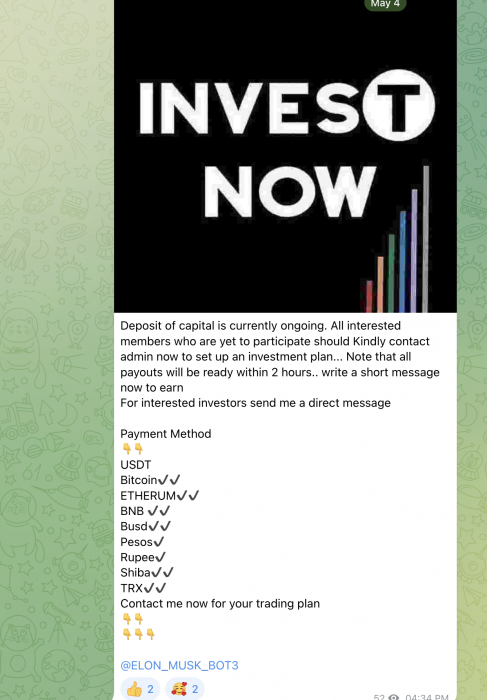

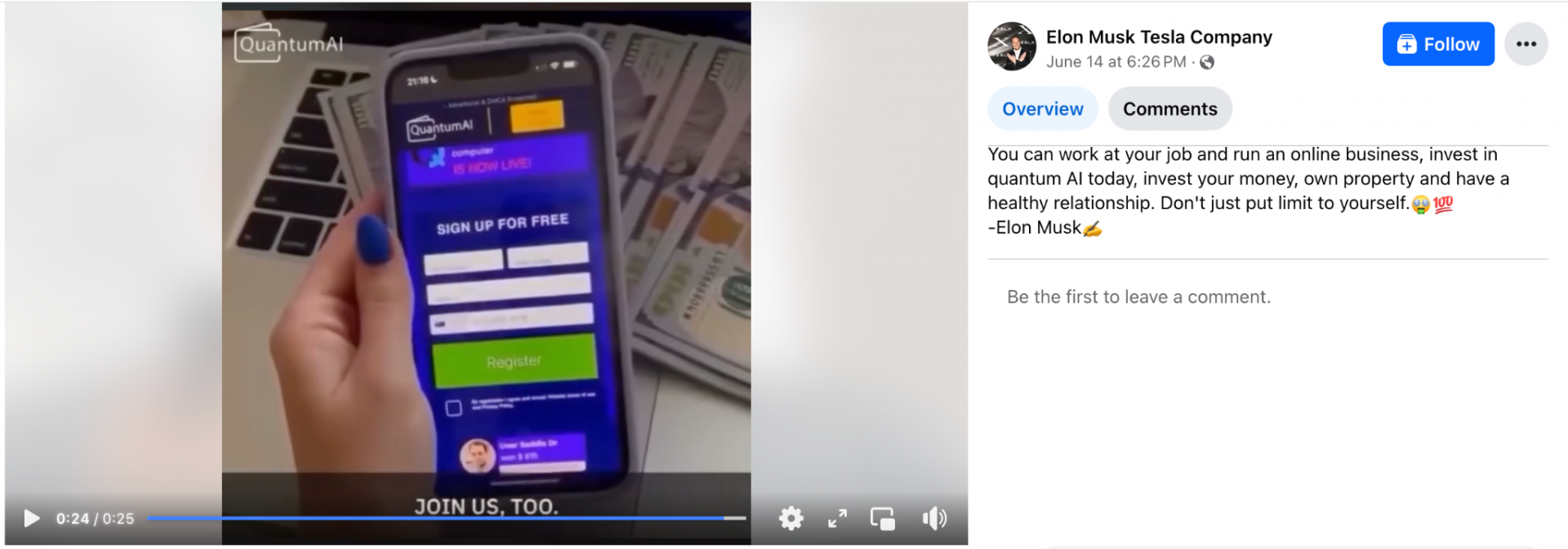

During our research, we observed posts and ads promoting Quantum AI on various social media platforms (shown in Figures 18-20). According to the Australian National Anti-Scam Center, scammers often first use these sorts of social media ads or fake news articles to link to scam webpages that ask for the victim user’s contact information.

After the victim visits the scam landing page and fills out a form to sign up for the platform, one of the scammers calls the victim. In this call, the scammer tells the victim they’ll need to pay around $250 to access the platform.

The scammer instructs the victim to download a special app so that they can “invest” more of their funds. Within the app, a dashboard appears to show small profits. From there, the scammers continue persuading the victim to deposit more of their money and may even allow the victim to withdraw a small amount of money as a way to gain trust.

Finally, when the victim tries to withdraw their funds, the scammers either demand withdrawal fees or cite some other reason (e.g., tax issues) why the victim cannot get their funds back. The scammers may then lock the victim out of their account and pocket the remaining funds, leaving the victim with the loss of most of the money that they put into the “platform.”

Overview of the Web-Based Deepfake Scam Landscape

Quantum AI is not the only deepfake-based scam that adversaries are running. In this section we’ll discuss other techniques and services that they have been using.

In early 2024, a company lost $25 million to attackers who used deepfake technology to impersonate their chief financial officer on a video conferencing call. While video conferencing deepfakes have received significant attention as a result of this attack, web-based deepfake scams are an emerging threat that researchers should monitor as well.

2024 is predicted to be the largest voting year in history. Over 60 countries are holding significant elections this year, meaning that around half the world's population could be directly affected by these events. In response, researchers have raised concerns about the potential for deepfakes to promote political misinformation. However, the impact of deepfakes is not limited to the political domain. Cybercriminals have already been creating and sharing deepfake videos for their own malicious purposes.

Deepfakes Used to Promote Scams and Phishing Attacks

Since the advent of GenAI, attackers have used deepfakes to promote political misinformation, and even to further voter outreach. For example, in early 2024, a Democratic political consultant used an audio deepfake of Joe Biden to discourage voters in the state of New Hampshire from voting in the upcoming primary election.

In India, politicians actively encouraged their supporters to create deepfakes to promote their own candidacies. In 2022, an unknown threat actor used manipulated deepfake videos of Ukrainian President Volodymyr Zelensky as a part of information warfare in the Russia-Ukraine war.

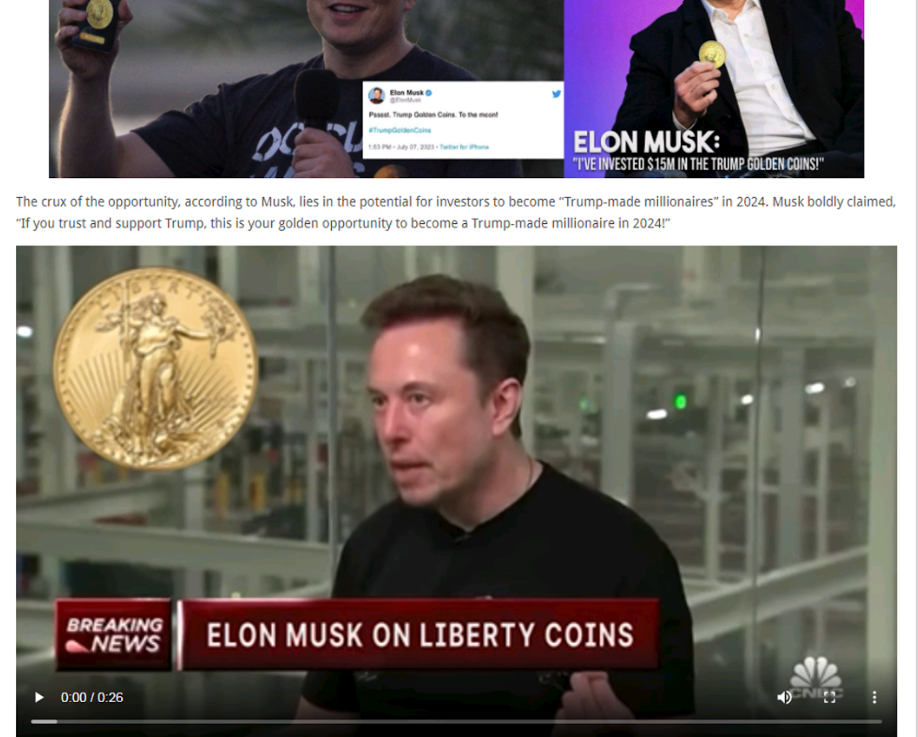

Now, we are seeing cybercriminals start to use deepfake media to promote their own scams and phishing attacks as well. In recent months, reporters have observed scams impersonating figures like Elon Musk (shown in Figure 21), Bill Gates and Warren Buffett to peddle fake cryptocurrencies, giveaways and other investment schemes. These are created with the ultimate goal of stealing funds from victims. (Our previous tweets on this topic have more details.)

Previously, these attackers might have used paid actors to promote their scams (e.g., by creating videos purporting to be a client who profited from a fake investment scheme). With the increasing popularity and effectiveness of tools that use AI to generate content (e.g., images, audio and video), attackers are now able to create these videos in a cheaper and more convincing way.

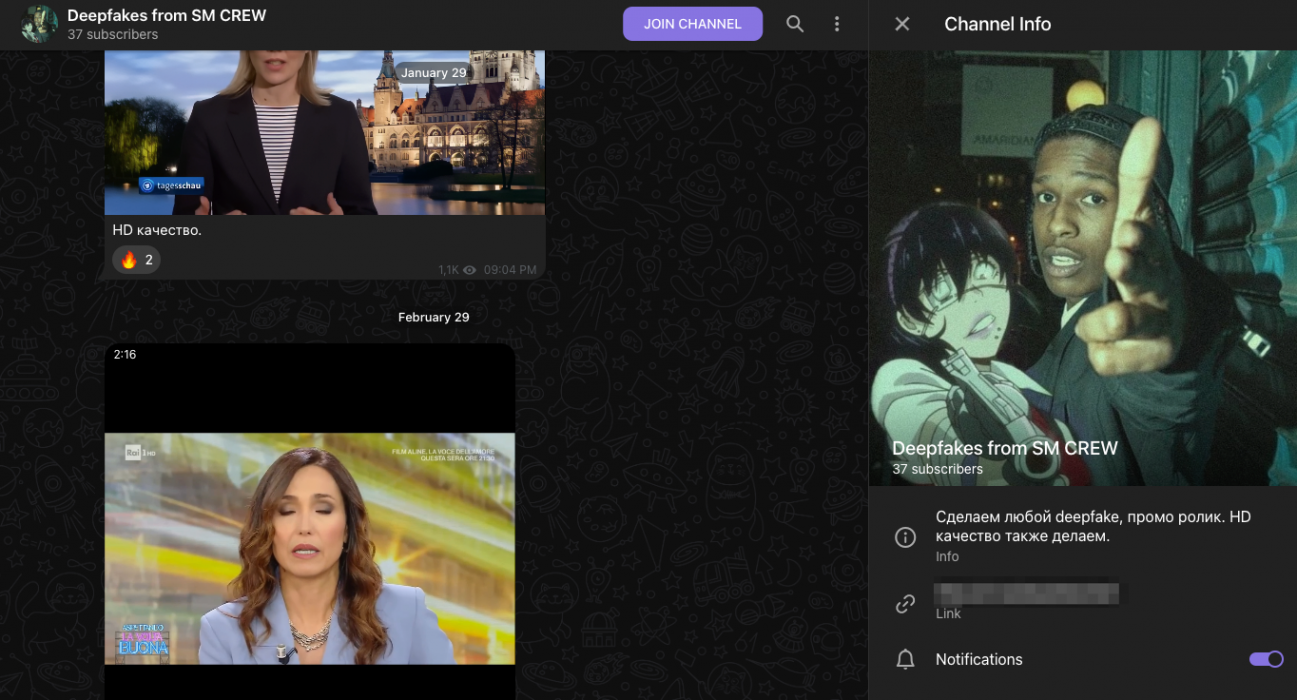

Deepfakes as a Service

Our researchers have encountered cybercriminals selling, discussing and trading deepfake tooling and creation services across forums, social media chat channels and instant messaging platforms. These tools and services offer capabilities for generating deceptive and malicious content including audio, video and imagery. The ecosystem surrounding deepfake creation and tooling is alive and vibrant, and cybercriminals are selling a variety of options from face swapping tools to deepfake videos.

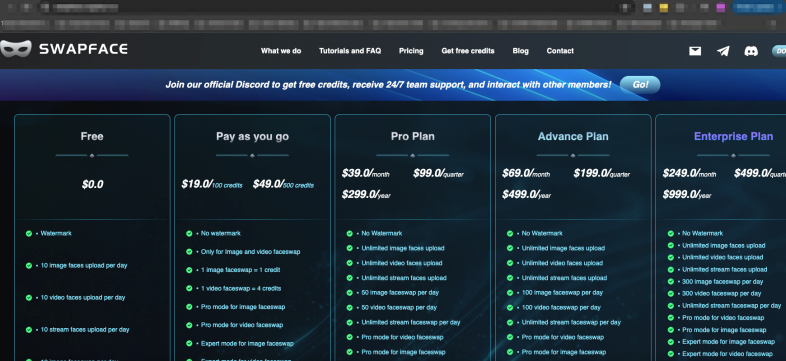

Face swapping tools like Swapface (shown in Figure 22) range in price from free to over $249 a month. Swapface offers user-friendly filters and face swapping that operates in real time, allowing users to alter or replace faces in videos. Adversaries abuse these tools and post the videos they create on a variety of websites and social media platforms.

Criminal applications of deepfake tooling and creation services include the following activities:

- Facilitating the creation of fake identities

- Committing bank fraud

- Orchestrating disinformation campaigns

- Bypassing know-your-customer verification checks

- Performing cryptocurrency theft

These services can manipulate visuals and audio with alarming precision, leading to significant ethical and security concerns. The cost of generating a deepfake video can vary widely, typically ranging from $60 to $500, reflecting the complexity and quality of the requested forgery.

As seen in Figure 23, we also discovered messaging platform channels specifically advertising the creation of deepfake videos for scam purposes.

Their accessibility and range of costs make deepfakes particularly versatile tools for malicious activity, posing challenges for both individuals and institutions trying to safeguard against fraud and misinformation.

Conclusion

Despite the use of GenAI in these campaigns, traditional investigative techniques remain useful to identify the hosting infrastructure leveraged by these threat actors. As threat actors increase their use of deepfake technology, organizations should also proactively defend against these types of attacks.

Palo Alto Networks researchers will continue to monitor these deepfake-based scam campaigns and to discover and investigate new ones, so that our customers remain better protected from them via Advanced URL Filtering.

Indicators of Compromise

Additional Deepfake Scam Examples

Example 1

- Webpage URL: xtradgpt[.]online

- Video URL: hxxps://quontic[.]site/wp-content/uploads/2024/07/449030935_482215324194392_281914555774571171_n[.]mp4

- Language: English

- Country targeted: Canada

- Deepfake of: Kevin O'Leary (Canadian businessman)

Example 2

- Webpage URL: euphemiouslystner[.]life

- Video URL: hxxps://hemicdn[.]com/1501-1400-3505[.]mp4

- Language: English

- Country targeted: Singapore

- Deepfake of: Tharman Shanmugaratnam (President of Singapore)

Example 3

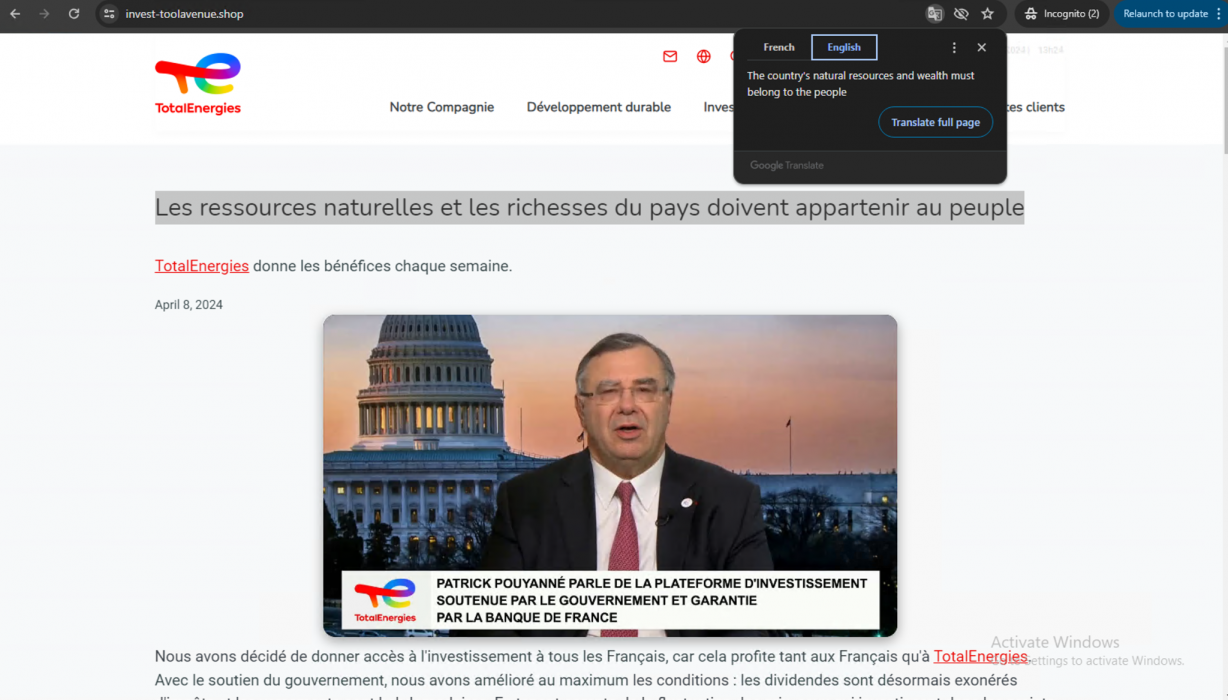

- Webpage URL: invest-toolavenue[.]shop

- Video URL: hxxps://ai-usmcollective[.]click/videos/TotalEnergies_news_FR[.]mp4

- Language: French

- Country targeted: France

- Deepfake of: Patrick Pouyanné (CEO of TotalEnergies)

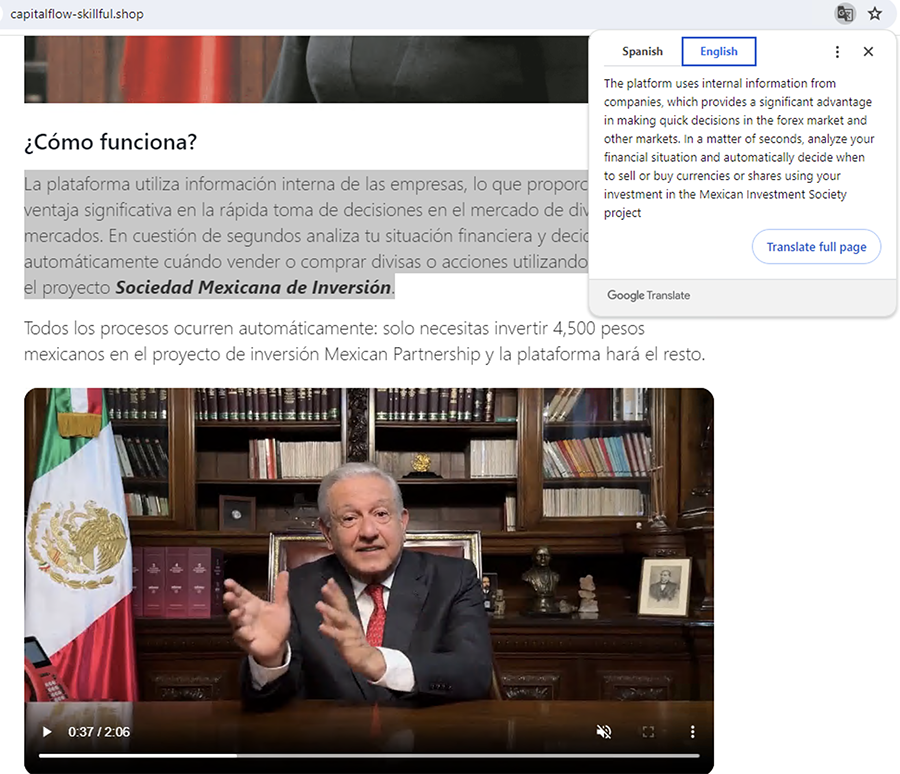

Example 4

- Webpage URL: capitalflow-skillful[.]shop

- Video URL: hxxps://ai-usmcollective[.]click/videos/MexicanPartnership_man_MX[.]mp4

- Language: Spanish

- Country targeted: Mexico

- Deepfake of: Andrés Manuel López Obrador (President of Mexico)

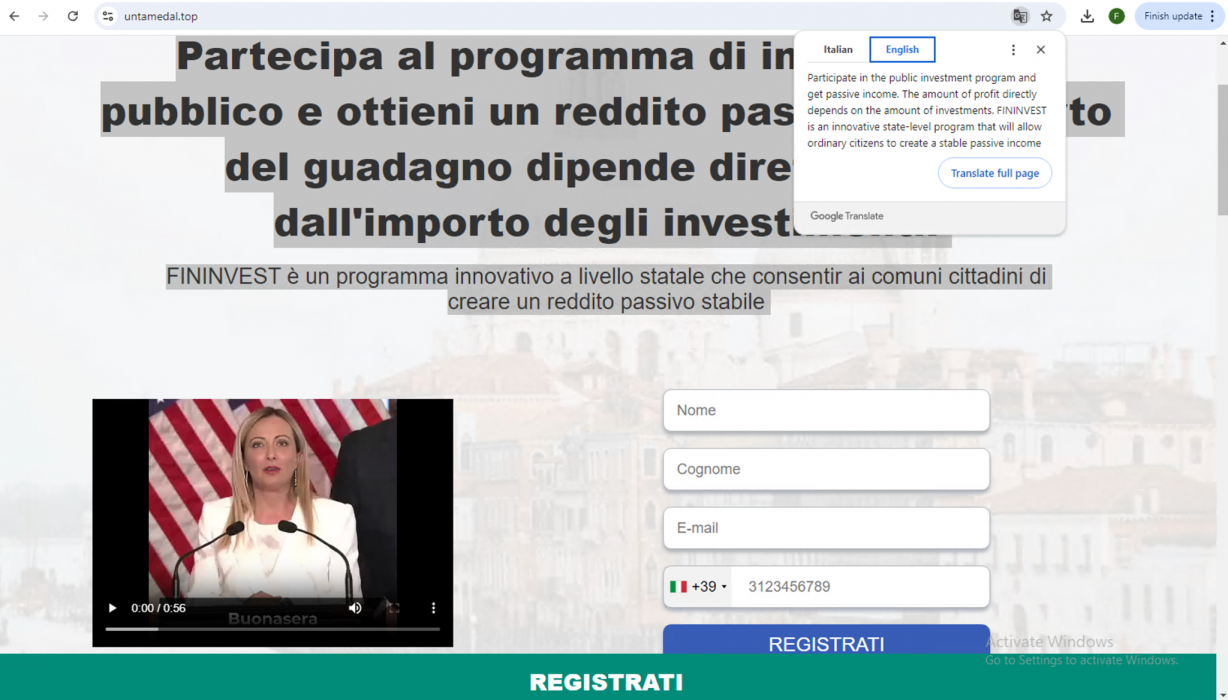

Example 5

- Webpage URL: untamedal[.]top

- Video URL: hxxps://ai-usmcollective[.]click/videos/FinInvest_woman-performing_IT[.]mp4

- Language: Italian

- Country targeted: Italy

- Deepfake of: Giorgia Meloni (Prime Minister of Italy)

Example 6

- Webpage URL: hxxps://hybridpowerit[.]com/

- Video URL: hxxps://ai-usmcollective[.]click/videos/ImmediateMatrix-PNewsQZ-Invest[.]mp4

- Language: Czech

- Country targeted: Czechia

- Deepfake of: Andrej Babis (Czech politician and businessman)

Example 7

- Webpage URL: rondeliercore[.]com

- Video URL: hxxps://hemicdn[.]com/video_5848484848485[.]mp4

- Language: Turkish

- Country targeted: Turkey

- Deepfake of: Omer Koc (Turkish businessman)

Example 8

- Webpage URL: hxxps://naatuureeffocus[.]com/

- Video URL: hxxps://ai-usmcollective[.]click/videos/USM_novosti-usmanov_UZ[.]mp4

- Language: Russian

- Country targeted: Uzbekistan

- Deepfake of: Alisher Usmanov (Uzbek and Russian businessman)

Video Hosting Domains

- video[.]belmar-marketing[.]online

- fiirststreeeet[.]top

- ai-usmcollective[.]click

- fortunatenews[.]com

- conspatriots2024[.]com

Quantum AI Scam Webpage Examples

- agentvisitliarpoint[.]click

- ai-usmcapital[.]click

- ai-usmfence[.]click

- ai-usmoutlay[.]click

- ai-usmroot[.]shop

- ai-usmside[.]shop

- alt-fin-side[.]shop

- baelandworld[.]com

- bikeputaware[.]click

- bit-360[.]site

- blackenedretl[.]shop

- blaroya[.]com

- boarbooks-rh[.]cloud

- cavernaid-ky[.]cloud

- certifilite[.]com

- coredale-pg[.]cloud

- crystalincantation[.]com

- d1g1talpoint[.]xyz

- dearetung[.]homes

- dflihdr[.]top

- directors[.]homes

- dongice-jksd[.]cloud

- echolimirs[.]top

- edenovougobio[.]site

- enter-up[.]top

- excellentone[.]one

- fabulous4onee[.]monster

- firstcoyotecapital[.]click

- flowcyber[.]click

- flowersys-sv[.]cloud

- flowpulse[.]click

- foodtruckit[.]com

- fourhillsfarmva[.]com

- g-tradytactics3[.]site

- gatenewtechlikew[.]world

- gfnuw[.]top

- ggenniusprrojecct[.]site

- globalmastersilvernew[.]world

- globalpolytechasd[.]world

- globalpolytr[.]world

- goldzonexa[.]world

- gravetechno-jy[.]cloud

- greenrealm[.]world

- grottostones-gl[.]cloud

- groundbreakinginitiative[.]top

- gtriotit-wqm[.]site

- hideoutglownew[.]pro

- hotelierjobz[.]com

- huerwlleiss-herton[.]pro

- iaqa[.]life

- illjp4gpz[.]sbs

- illjp4xty[.]sbs

- inc-co[.]site

- infosysdata[.]store

- inv-platform2024[.]site

- investcontribution[.]top

- jetshow-qi[.]cloud

- juoguquda[.]com

- kevxk[.]top

- kos4mzf[.]monster

- kurtilast[.]top

- kuvukye[.]space

- lartons[.]top

- liketechnewgateq[.]world

- lozlas-ta[.]cloud

- lozlas-tb[.]cloud

- lozlas-tc[.]cloud

- lsuhhf[.]top

- maplegateu-yj[.]xyz

- metawings[.]top

- milfarka[.]store

- mmajp1oxg[.]monster

- newforestjoyzonega[.]world

- newglowhideout[.]pro

- njecil[.]top

- oakcloudhi-ere[.]world

- oakcloudhi-erg[.]world

- onlinepasivechange[.]sbs

- originalquantum[.]shop

- outlayquantum[.]shop

- owrsasd-tf[.]cloud

- passionquantum[.]shop

- peerfeectwall[.]online

- pinkwheels-ra[.]cloud

- pinkwheels-rb[.]cloud

- prevaczcgv[.]site

- pro-fitters[.]store

- promisingendeavor[.]website

- qatuk[.]org

- quantum-ai[.]link

- quantumai[.]tools

- quantumal[.]xyz

- qvantum-ai[.]tech

- redwoodrest[.]world

- registration-form[.]website

- restigood-oo[.]cloud

- ricegoodniceproas[.]world

- rockriddle[.]world

- rwtfoa-hpl[.]cloud

- sacvenih[.]sbs

- ser2kke[.]monster

- silvermaster-gtj[.]cloud

- spacemome[.]online

- spikemaster-ra[.]cloud

- spikemaster-rj[.]cloud

- srkgjh[.]top

- sskkilfulinnvestoor[.]site

- st-twp[.]cloud

- stakspat[.]site

- stockainet[.]site

- subterrasphere[.]world

- superstarone[.]one

- swapfavour[.]com

- sweetchaseonly[.]click

- techmerge-ai[.]space

- techverseasx[.]world

- testdomaintest[.]site

- theebeestbrookeer[.]website

- thequantumai[.]org

- treewavet[.]world

- tulalavno[.]store

- ultravirilehemer[.]com

- unityventurehub[.]space

- voidrover-hjsi[.]xyz

- whitesphereworldjoyful[.]world

- wizardstar-srea[.]xyz

- wizardstar-sred[.]xyz

- wizardstar-sree[.]xyz

- wizardstar-srej[.]pro

- wizardstar-sren[.]pro

- wizardstar-srey[.]xyz

- wizardstarsrea[.]pro

- wizardstarsreb[.]pro

- wizardstarsrek[.]pro

- wizardstarsreo[.]pro

- wztnb[.]shop

Quantum AI Scam Video URL Examples

- hxxps[:]//video[.]belmar-marketing[.]online/videos/QuantumAI_Muskpresentations_EN[.]mp4

- hxxps[:]//video[.]belmar-marketing[.]online/videos/QuantumAI_musk_EN[.]mp4

- hxxps[:]//telegra[.]ph/file/3ae9666ce78ae1ba90b47.mp4

- hxxps[:]//fortunatenews[.]com/video/quantumal__video[.]mp4

- hxxps[:]//fiirststreeeet[.]top/videos/QuantumAI_Musk-presentation_EN[.]mp4

TotalEnergies Scam Webpage Examples

- invest-toolavenue[.]shop

- invest-toolrealm[.]shop

- investseries[.]shop

TotalEnergies Scam Video URL Examples

- hxxps[:]//video[.]belmar-marketing[.]online/videos/TotalEnergies_news_FR[.]mp4

- hxxps[:]//ai-usmcollective[.]click/videos/TotalEnergies_news_FR[.]mp4

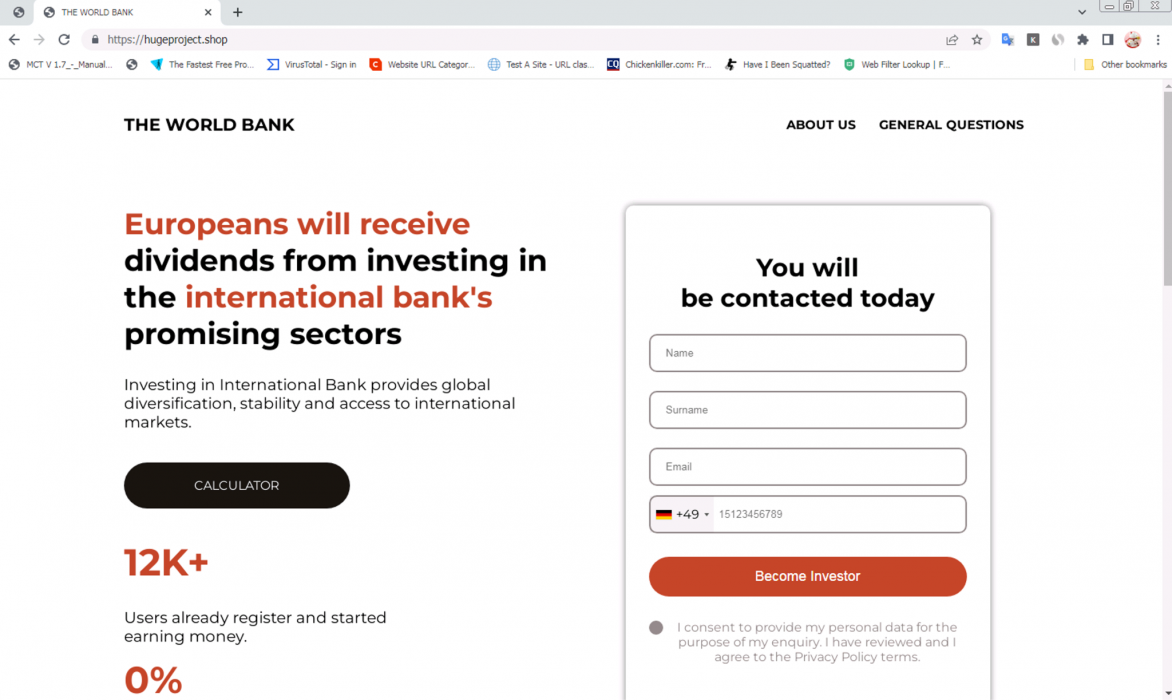

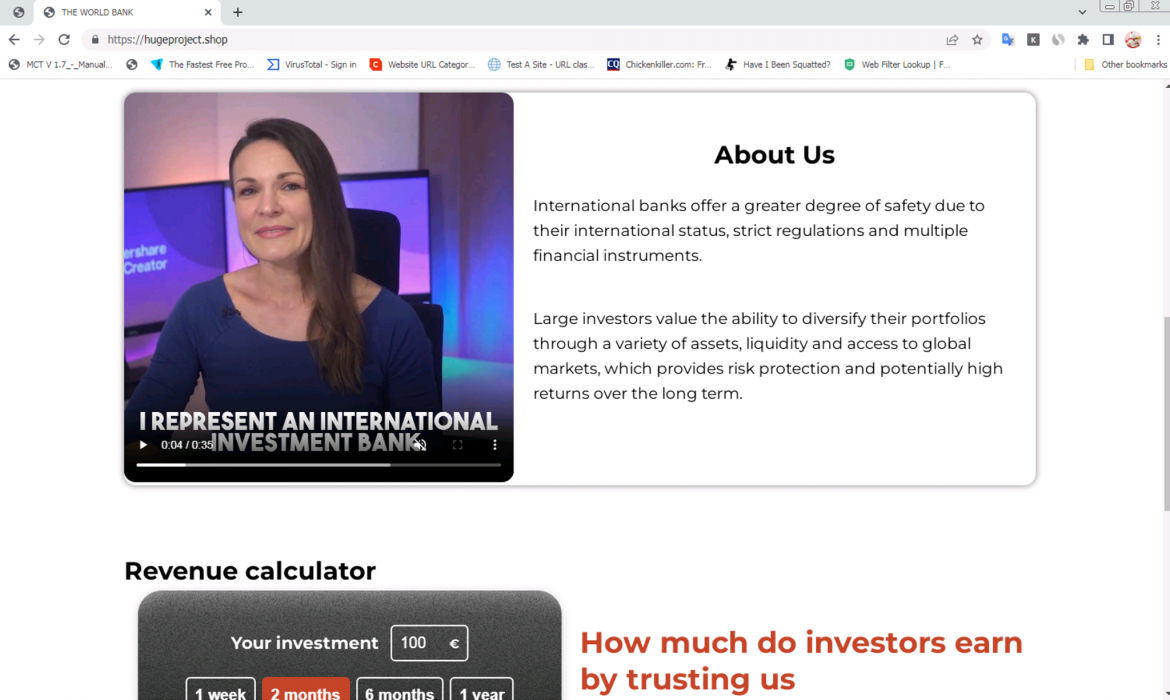

World Bank Scam Webpage Examples

- hugeproject[.]shop

- higheststudy[.]click

- growthventure[.]click

- growthwall[.]click

World Bank Scam Video URL Examples

- hxxps[:]//video[.]belmar-marketing[.]online/videos/TheWorldBank_woman_EN[.]mp4

- hxxps[:]//ai-usmcollective[.]click/videos/TheWorldBank_woman_EN[.]mp4

KazMunayGas Scam Webpage Examples

- aifaith[.]shop

- aiprincipal[.]top

- aireliance[.]top

- aishield[.]shop

- aiside[.]shop

- aisimple[.]top

- aitfinoutlay[.]top

- block4aiendeavor[.]shop

- block4aifinancier[.]shop

- block4aiinitiative[.]shop

- block4aimethod[.]top

- block4aioperation[.]top

- block4aipatron[.]top

- block4aiprecaution[.]top

- block4aiprotection[.]shop

- block4aiprotection[.]top

- block4aisafeguarding[.]shop

- block4aischedule[.]top

- block4aisystem[.]top

- block4aitask[.]top

- block4aiwell-being[.]shop

- block4alinitiative[.]shop

- coinaibarrier[.]top

- coinaibasis[.]top

- coinaiboundary[.]top

- coinaichannel[.]top

- coinaicommunicate[.]top

- coinaieducate[.]top

- coinaienclosure[.]top

- coinaifence[.]top

- coinaifinancier[.]top

- coinaiframework[.]top

- coinaimedium[.]top

- coinaimethod[.]shop

- coinaipartition[.]top

- coinaiprecaution[.]top

- coinaischedule[.]shop

- coinaischeme[.]shop

- coinaishareholder[.]top

- coinaiside[.]top

- coinaistage[.]top

- coinaitask[.]shop

- coinaiwell-being[.]top

- coinalcommunity[.]shop

- coinalendeavor[.]top

- coinalgamble[.]shop

- coinalguard[.]top

- coinalinitiative[.]shop

- coinalinternational[.]shop

- coinalprecaution[.]shop

- coinalsafeguarding[.]top

- coinalsafetynet[.]shop

- coinalsafetynet[.]top

- coinalscheme[.]top

- coinaltask[.]top

- coinalundertaking[.]shop

- coinaluniversal[.]top

- coinalwell-being[.]shop

- coinalwell-being[.]top

- coinalwidespread[.]top

- coinangelinvestor[.]top

- coinbacker[.]shop

- coinband[.]shop

- coinband[.]top

- coinbarrier[.]top

- coincapitalist[.]top

- coincollective[.]shop

- coincommunity[.]shop

- coincommunity[.]top

- coindependence[.]shop

- coinfinancier[.]shop

- coingamble[.]top

- coingathering[.]shop

- coininitiative[.]shop

- coinpartition[.]top

- coinreliance[.]shop

- coinside[.]top

- coinundertaking[.]shop

- coinundertaking[.]top

- easylender[.]top

- gaibarricade[.]top

- gaibasis[.]top

- gaiboundary[.]top

- gaicurriculum[.]top

- gaidiscover[.]top

- gaifence[.]top

- gaiintermediary[.]top

- gailegalentity[.]top

- gaimiddleman[.]top

- gaipioneer[.]shop

- gaiprecaution[.]shop

- gaiprecaution[.]top

- gaiprestige[.]shop

- gaiprincipal[.]top

- gaiprinciple[.]top

- gaiproduction[.]top

- gaiprotection[.]top

- gairesplendent[.]top

- gairush[.]shop

- gaisafeguarding[.]shop

- gaishield[.]shop

- gaiside[.]shop

- gaisource[.]top

- gaitfinagent[.]shop

- gaitfincore[.]shop

- gaitfinfacilitator[.]shop

- gaitfinnegotiator[.]shop

- gaitfinroot[.]shop

- gaitfinsalesperson[.]shop

- gaitfinside[.]shop

- gaitfinsource[.]shop

- gaiwell-being[.]shop

- gaiwise[.]shop

- gaiwonderful[.]top

- galtcoindiscover[.]top

- gbeginstart[.]shop

- gblock4aiacquaint[.]top

- gblock4aiangelinvestor[.]shop

- gblock4aicommunicate[.]shop

- gblock4aiendeavor[.]shop

- gblock4aifinancier[.]shop

- gblock4aifortification[.]top

- gblock4aiguard[.]top

- gblock4aiinitiative[.]shop

- gblock4aimethod[.]shop

- gblock4aimethod[.]top

- gblock4aioperation[.]shop

- gblock4aioperation[.]top

- gblock4aipatron[.]top

- gblock4aiprotection[.]top

- gblock4aisafeguarding[.]shop

- gblock4aisafeguarding[.]top

- gblock4aisafetynet[.]top

- gblock4aischedule[.]top

- gblock4aischeme[.]top

- gblock4aisequence[.]top

- gblock4aishield[.]shop

- gblock4aisystem[.]top

- gblock4aitask[.]shop

- gblock4aitask[.]top

- gblock4aiwell-being[.]shop

- gblock4aiwell-being[.]top

- gblock4aiworldwide[.]shop

- gblock4alinitiative[.]shop

- gbrightenclosure[.]top

- gcapitalstart[.]click

- gcentralboundary[.]shop

- gchiefcommunicate[.]top

- gcleverbacker[.]shop

- gcleverbacker[.]top

- gcoinaiangelinvestor[.]top

- gcoinaibacker[.]top

- gcoinaibarricade[.]top

- gcoinaibarrier[.]top

- gcoinaibasis[.]top

- gcoinaichannel[.]top

- gcoinaieducate[.]top

- gcoinaienclosure[.]top

- gcoinaifence[.]top

- gcoinaifinancier[.]top

- gcoinaiframework[.]top

- gcoinaimedium[.]top

- gcoinaimethod[.]shop

- gcoinaiprecaution[.]top

- gcoinaisafetynet[.]top

- gcoinaischeme[.]shop

- gcoinaisequence[.]shop

- gcoinaishareholder[.]top

- gcoinaisystem[.]top

- gcoinaitask[.]shop

- gcoinaiwell-being[.]top

- gcoinalband[.]shop

- gcoinalcommunity[.]shop

- gcoinalendeavor[.]shop

- gcoinalgamble[.]shop

- gcoinalguard[.]top

- gcoinalinitiative[.]shop

- gcoinalinternational[.]shop

- gcoinalprecaution[.]top

- gcoinalrisk[.]top

- gcoinalsafeguarding[.]top

- gcoinalsafetynet[.]shop

- gcoinalsafetynet[.]top

- gcoinalschedule[.]top

- gcoinalscheme[.]top

- gcoinaltask[.]top

- gcoinaluniversal[.]top

- gcoinalwell-being[.]shop

- gcoinalwidespread[.]top

- gcoinangelinvestor[.]top

- gcoinassurance[.]shop

- gcoinband[.]top

- gcoinbarrier[.]top

- gcoincapitalist[.]top

- gcoincollective[.]shop

- gcoincommunicate[.]shop

- gcoincommunity[.]shop

- gcoincommunity[.]top

- gcoincredit[.]shop

- gcoindependence[.]shop

- gcoinfinancier[.]shop

- gcoingamble[.]top

- gcoingathering[.]shop

- gcoininitiative[.]shop

- gcoinmedium[.]top

- gcoinpartition[.]top

- gcoinreliance[.]shop

- gcoinside[.]top

- gcoinundertaking[.]shop

- gcoinundertaking[.]top

- gdrivenfacilitator[.]top

- geffortlesslegalentity[.]top

- genergyemporium[.]store

- gexceptionalmodule[.]top

- gexhilaratingcontribution[.]club

- gexxclusivefoundation[.]space

- gfinancingstart[.]shop

- ggiftedcollective[.]top

- ggroundworkband[.]shop

- ggrrandventure[.]fun

- ggrrandventure[.]site

- ggrrandventure[.]website

- gharmoniousundertaking[.]top

- ghighestrisk[.]top

- gimmenseinitiative[.]top

- gimportantinternational[.]club

- gincompleteuniversal[.]top

- ginnspiringdefense[.]site

- ginstitutestart[.]shop

- ginvestfortification[.]shop

- ginvestguard[.]shop

- ginvestingstart[.]shop

- ginvestprecaution[.]shop

- ginvestwell-being[.]shop

- gnetworkstart[.]click

- goriginatestart[.]shop

- grrandventure[.]site

- gscarceai[.]top

- gsplendidai[.]top

- gsubsidystart[.]shop

- gsurprisingai[.]top

- gsystemaibarricade[.]top

- gsystemaibasis[.]shop

- gsystemaiboundary[.]shop

- gsystemaiboundary[.]top

- gsystemaifence[.]shop

- gsystemaifence[.]top

- gsystemaigroundwork[.]shop

- gsystemainegotiator[.]shop

- gsystemaiside[.]shop

- gtopinvestigate[.]shop

- individualestablish[.]top

- investfortification[.]shop

- riseanalyze[.]click

- safegroup[.]shop

- subsidystart[.]shop

- systemaibarricade[.]shop

- systemaibasis[.]top

- systemaigroundwork[.]shop

- systemainegotiator[.]shop

KazMunayGas Scam Video URL Examples

- hxxps[:]//video[.]belmar-marketing[.]online/videos/kazmunay-preland.mp4

- hxxps[:]//ai-usmcollective[.]click/videos/kazmunay-preland.mp4

Liberty Coin Scam Webpage Example

- patriotsmaga2024[.]com/wp/2024/04/09/statement-from-president-trump-9th-of-april-2024/?1717411716131

Liberty Coin Scam Video URL Examples

- hxxps[:]//conspatriots2024[.]com/wp-content/uploads/2024/04/0-02-05-39b70d3c0c0582890da2793bd7551a4adacea2faef6e1303a943db46b875cde7_1c6dbb11ce91a9.mp4

- hxxps[:]//conspatriots2024[.]com/wp-content/uploads/2024/04/0-02-05-aca301a44b073ea87338efcd18aa41f18ebd53e8462b780976412fc70a3a28d2_1c6dbb100c4956.mp4
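The indicators above are defanged (`hxxps`, `[:]` and `[.]`) so that they cannot be clicked or resolved by accident. Defenders who want to load them into a blocklist or search their own logs need to reverse that first. The helper below is a minimal sketch that assumes only the defanging conventions seen in this list; the function names are hypothetical. Refanged indicators are live, so they should be handled only inside security tooling.

```python
from urllib.parse import urlparse


def refang(indicator):
    """Convert a defanged indicator (hxxp, [:] and [.]) back to its live form."""
    text = indicator.strip()
    if text.lower().startswith("hxxp"):
        text = "http" + text[4:]           # hxxp -> http, hxxps -> https
    return text.replace("[:]", ":").replace("[.]", ".")


def hostname_of(indicator):
    """Extract the hostname from a defanged domain or URL."""
    live = refang(indicator)
    if "://" not in live:
        live = "//" + live                 # let urlparse treat a bare domain as a host
    return urlparse(live).hostname


def build_blocklist(indicators):
    """Return the sorted, de-duplicated hostnames found in a list of indicators."""
    return sorted({hostname_of(i) for i in indicators if i.strip()})
```

Reducing every entry to a hostname lets webpage and video URL indicators feed the same domain-level blocklist, at the cost of discarding the path information.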

Additional Resources

- Scammers are getting creative using malvertising, deepfakes, and YouTube – Avast

- It's a scam! Celebrities are not getting rich from online investment trading platforms – Australian Competition & Consumer Commission (ACCC)

- Elon Musk used in fake AI videos to promote financial scam – RMIT University

- British engineering giant Arup revealed as $25 million deepfake scam victim – CNN

- AI Audio Deepfakes Are Quickly Outpacing Detection – Scientific American

- The Real-Time Deepfake Romance Scams Have Arrived – WIRED

- Deepfake Warren Buffett is selling bitcoin scams to TikTok users – Media Matters

- Elon Musk, Joe Rogan, and other celebrities used in deepfake scams – Unit 42 on X

- Deepfake images of German celebrities used in investment scams – Unit 42 on X
