2024-7-30 23:2:59 Author: securityboulevard.com

The classic cyberpunk action movie The Matrix envisions a dystopian future where – spoiler alert! – the world humans see is actually a simulation fed to them by machine overlords. In reality, most people are bred and kept inside a vast grid of life support chambers so the robots can harvest their energy to power themselves.

Thomas Anderson, under the hacker name “Neo,” starts as just another human living out his day-to-day existence… until one day he stumbles upon the truth and joins a band of kung-fu loving and gun-toting hacker rebels fighting to free humanity from The Matrix. Bullet dodging, colored pill choosing, and skyscraper smashing with helicopters ensues.

A typical, human, audience member probably walks away from this movie thinking of Neo and friends as the heroes and The Matrix as the main threat.

But how would an AI system perceive this story from the point of view of cyber threat intelligence?

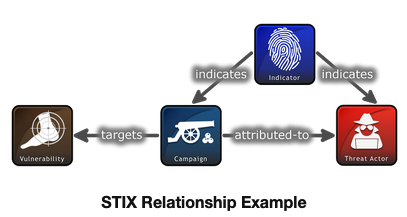

At Signal Hill Technologies we recently experimented with using AI to try to solve the problem of corrupted STIX (Structured Threat Information Expression) files. In cyber threat intelligence, one misattribution can set off a cascade of compounding wrong decisions. STIX, initially developed by DHS, aims to support clarity and consistency when organizations share cyber threat intelligence. It is an open standard that expresses that intelligence in JSON. Objects such as threat actors, targets, malware and tools are described along with their specific attributes and their relationships to each other. A STIX file is plain JSON text, but it can also be rendered as a visual graph.
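For readers who have not seen STIX before, here is a minimal sketch of what such a file contains, built with nothing but the Python standard library. The actor and malware names are hypothetical placeholders, and the helper names `stix_id`, `now` and `make_bundle` are ours for illustration; they are not part of any STIX tooling.

```python
import json
import uuid
from datetime import datetime, timezone


def stix_id(object_type: str) -> str:
    """Build a STIX identifier of the form '<type>--<UUID>'."""
    return f"{object_type}--{uuid.uuid4()}"


def now() -> str:
    """UTC timestamp with millisecond precision and a trailing 'Z'."""
    stamp = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%f")
    return stamp[:-3] + "Z"


def make_bundle() -> dict:
    ts = now()
    actor = {
        "type": "threat-actor",
        "spec_version": "2.1",
        "id": stix_id("threat-actor"),
        "created": ts,
        "modified": ts,
        "name": "Example Ransomware Crew",  # hypothetical name
    }
    malware = {
        "type": "malware",
        "spec_version": "2.1",
        "id": stix_id("malware"),
        "created": ts,
        "modified": ts,
        "name": "ExampleLocker",  # hypothetical name
        "is_family": True,
    }
    # A relationship object links two other objects by their ids.
    uses = {
        "type": "relationship",
        "spec_version": "2.1",
        "id": stix_id("relationship"),
        "created": ts,
        "modified": ts,
        "relationship_type": "uses",
        "source_ref": actor["id"],
        "target_ref": malware["id"],
    }
    return {
        "type": "bundle",
        "id": stix_id("bundle"),
        "objects": [actor, malware, uses],
    }


bundle = make_bundle()
print(json.dumps(bundle, indent=2))
```

A graph view is just another rendering of the same data: each non-relationship object becomes a node, and each relationship becomes an edge from `source_ref` to `target_ref`.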

But what if the STIX file itself is corrupt or malformed? Is there a software-based way to repair it? That would require something that can read text, generate summaries, focus on relevant information and extrapolate objects from data sources. How about a large language model (LLM), like ChatGPT? (For more details, see our methodology section below.)

When anything can become a threat intel report…

Turns out if you create a machine instructed to evaluate any unstructured input and tell it to generate a threat intelligence file, you can have a lot of fun.

We started with actual threat intelligence report texts from credible sources. But in testing it became more and more interesting to throw things into the bonfire, such as news articles, cooking recipes, and eventually novel and movie plots to see how it would organize the information.

Bear in mind: LLMs don’t actually know what is real or what is fake; they just do what you tell them to. Garbage in, garbage out.

Some of the analysis will come down to the context of how information is fed into the system. How do you define who is the threat? How do you define what is the thing being attacked?

Let’s look at a good example of what to expect under a best case scenario.

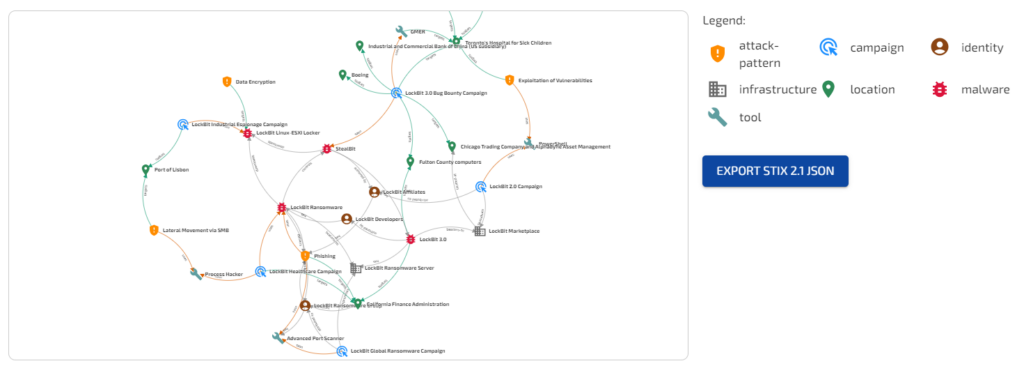

Visual graph of LockBit ransomware gang

Not bad! Barring a few items in the wrong context and other errors, you might feel like this experiment was a success, or at least a promising starting point. Let’s see what happens if we try to graph text sources outside of pure cybersecurity.

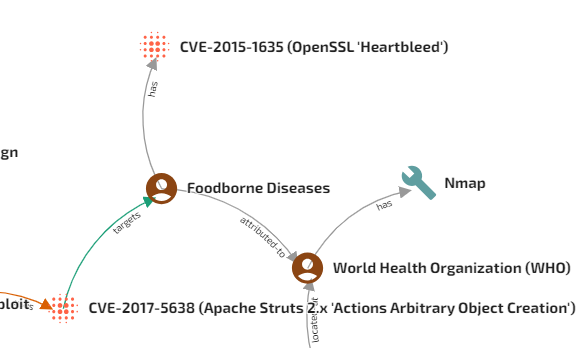

Foodborne cyber threats?

Feeding in a World Health Organization report on food safety appears to show how cyber threats can originate from anywhere, even your uncooked bacon! Let’s see some of the STIX objects and links the system created.

We do have to give some credit here… foodborne illnesses may be a cause of heart bleed… just not the 2014 OpenSSL vulnerability that leaked information from server memory. Also note, the WHO does not use the cybersecurity tool “nmap” to scan for foodborne illness.

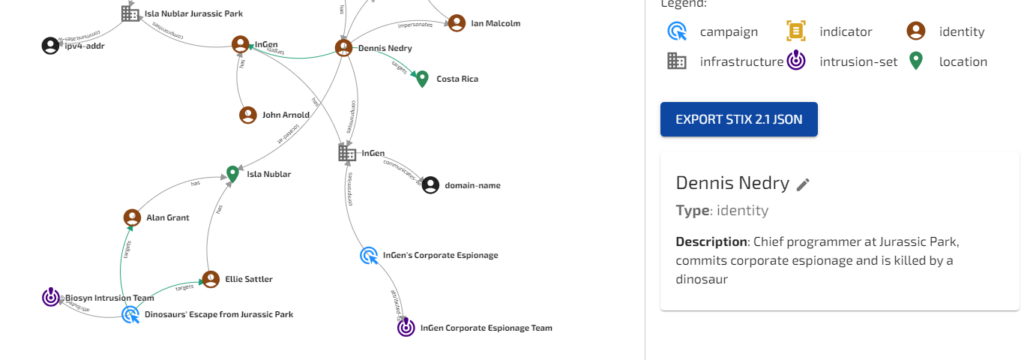

Jurassic Park as cyber threat

Every organization should have an Insider Threat program, but how does Dennis Nedry shape up under AI analysis in Jurassic Park? Let’s see what happens when we input the novel’s plot.

This is a good example that seems plausible at face value, but gets worse the more you look into the details. Is the InGen Corporate Espionage Team really an intrusion set? What is the supposed URL attributed to Isla Nublar?

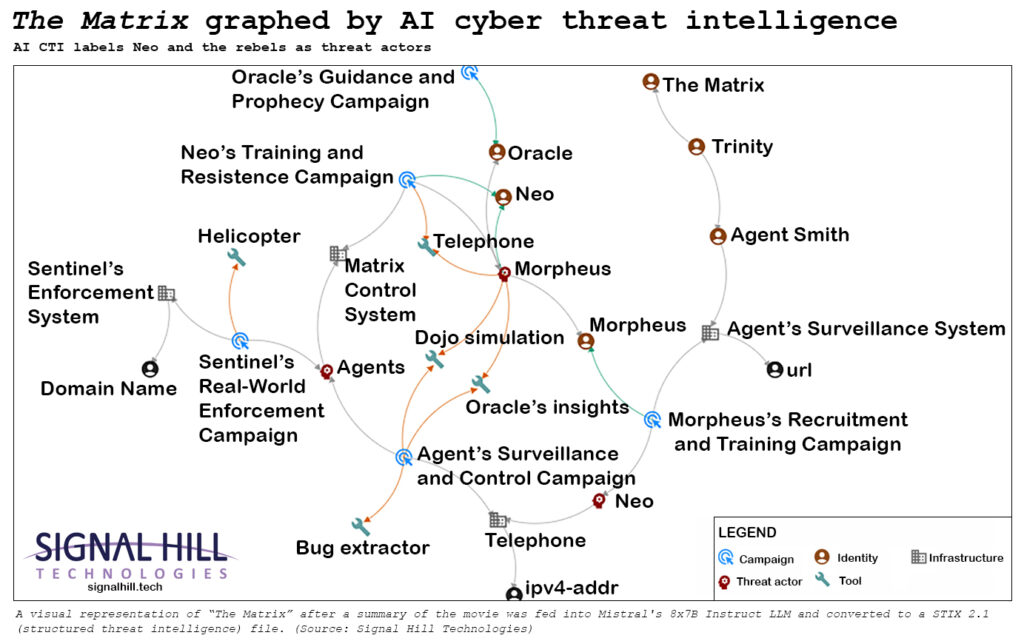

How AI views The Matrix

Because of an AI’s lack of contextual understanding, the plot of The Matrix is an interesting story to analyze, as the threats are subjective depending on what is being protected.

Are the humans the threat actors because they’re attacking The Matrix, or are the machines because they’re enslaving the humans? How does AI see this?

According to the analysis, both the humans and the machines are threat actors. Zion and the Matrix are infrastructure, so anything attacking them is assumed to be a threat.

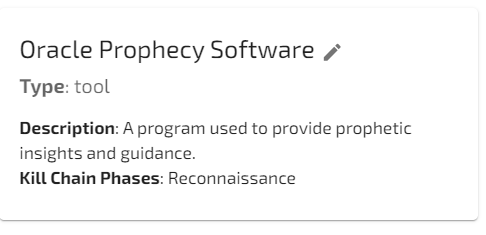

What we found amusing are some of the tools the analysis lists.

Watch out for Oracle’s new Prophecy Software! But also be on the lookout for…

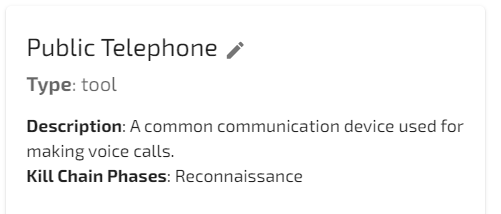

The dreaded “public telephone” shows up in the “Recon” section of the MITRE ATT&CK framework.

Challenges of over-reliance on AI in cybersecurity

As companies find ways to cram AI into literally everything, the temptation to use AI for cybersecurity analysis has already proven too strong to resist.

Although some of these experiments are silly, AI hasn’t eliminated the risk that bad input data will lead to overall bad analysis.

In all current cases, LLMs lack the available token space to incorporate a large corpus of contextual understanding for a specific organization’s environment. This is exacerbated by the fact that Instruct-based LLMs are often trained to be overly confident in their assertions by design. To improve this, other sources of correlation should be used to enrich the cybersecurity analysis.

In addition to the obvious hallucinations, other more pernicious examples can creep in. For example, many of the CVEs cited carry the wrong ID numbers, which can create downstream problems when people associate the vulnerabilities with the wrong identifiers or the wrong severities.
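One way to catch this class of error is to cross-check every CVE reference in the generated bundle against a trusted list before anyone acts on it. The sketch below is an illustration under stated assumptions, not something the experiment described here included: `KNOWN_CVES` is a hypothetical stand-in for data loaded from an authoritative CVE source, and the sample objects are invented.

```python
import re

# CVE IDs look like CVE-<4-digit year>-<4 or more digits>.
CVE_PATTERN = re.compile(r"^CVE-\d{4}-\d{4,}$")

# Hypothetical trusted lookup; in practice this would be loaded
# from an authoritative CVE data source, not hard-coded.
KNOWN_CVES = {
    "CVE-2014-0160": "Heartbleed (OpenSSL)",
}


def check_vulnerabilities(bundle: dict, known: dict) -> list:
    """Return (object id, CVE id, problem) tuples for suspicious references."""
    problems = []
    for obj in bundle.get("objects", []):
        if obj.get("type") != "vulnerability":
            continue
        for ref in obj.get("external_references", []):
            if ref.get("source_name") != "cve":
                continue
            cve_id = ref.get("external_id", "")
            if not CVE_PATTERN.match(cve_id):
                problems.append((obj["id"], cve_id, "malformed CVE ID"))
            elif cve_id not in known:
                problems.append((obj["id"], cve_id, "not in trusted list"))
    return problems


# Invented sample: one correct reference, one wrong ID, one malformed ID.
sample = {
    "type": "bundle",
    "objects": [
        {"type": "vulnerability", "id": "vulnerability--a", "name": "Heartbleed",
         "external_references": [{"source_name": "cve", "external_id": "CVE-2014-0160"}]},
        {"type": "vulnerability", "id": "vulnerability--b", "name": "Heartbleed",
         "external_references": [{"source_name": "cve", "external_id": "CVE-2014-99999"}]},
        {"type": "vulnerability", "id": "vulnerability--c", "name": "Heartbleed",
         "external_references": [{"source_name": "cve", "external_id": "CVE-14-160"}]},
    ],
}

for problem in check_vulnerabilities(sample, KNOWN_CVES):
    print(problem)
```

A check like this only flags IDs that are malformed or unknown. An ID that is real but attached to the wrong vulnerability would still need a comparison of names or descriptions against the trusted source.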

Because the AI-generated output looks so smooth and standardized, its errors are harder for a human to identify and fix. It looks plausibly correct, but is still just as wrong.

With the analysis appearing to come “from thin air,” it’s harder for someone to override or correct.

This can be a major deal breaker in any cybersecurity context where the gap between high and low confidence can decide whether an incident response succeeds or fails.

We must be cautious about deploying AI in overly broad use cases or in a way that shortcuts, rather than augments, human decision-making.

What do you think? What is the appropriate use of AI in cybersecurity? What stories would you like to see charted from a cyber threat intelligence perspective and how do you think they would look?

—

Methodology

We settled on using Mistral’s 8x7B Instruct model because it gave us the versatility to run the LLM and generate its output completely offline while guaranteeing privacy. The relatively unrestrictive license also made it a natural starting point.

Our internal testing showed that Mistral was not quite at the level of Anthropic’s or OpenAI’s more recent models. But the ability to actually have control over how the sensitive data might be processed, coupled with the fact that it outperformed OpenAI’s GPT-3, gave us a lot of flexibility.

Graphing the STIX file

In the STIX file format there are 18 different SDOs (STIX Domain Objects), each with their own required and optional information. These can be linked through the use of relationship objects, or with the relationship defined within the object itself.

Our approach was to take the most relevant SDOs and have the LLM analyze the input data to generate the required information to build the objects. This would be done iteratively for each SDO working through the list, creating a total list of potential SDOs.

We then iterated back through each object to have the LLM identify how these objects were linked. This would allow us to generate proof of concept STIX 2.1 files for analysis.

The tasks are split apart so that the LLM analyzes the input against one goal at a time, such as “identify malware,” and the results are then aggregated into a final STIX bundle with the relationships mapped. This was also done out of necessity, to make the best use of the limited token space available for input data.
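The two-pass structure described above can be sketched roughly as follows. This is our illustration of the general shape, not the actual code or prompts used in the experiment: the prompt wording, the choice of SDO types, and the `fake_llm` stand-in (which returns canned, made-up answers so the sketch runs offline) are all assumptions.

```python
import json
from typing import Callable

# Assumed subset of SDO types; the types actually chosen are not listed here.
SDO_TYPES = ["threat-actor", "malware", "tool"]


def extract_objects(text: str, llm: Callable[[str], str]) -> list:
    """Pass 1: one narrowly scoped prompt per SDO type."""
    found = []
    for sdo_type in SDO_TYPES:
        prompt = f"List every {sdo_type} in the text as a JSON array of names.\n\n{text}"
        for name in json.loads(llm(prompt)):
            found.append({"type": sdo_type, "name": name})
    return found


def link_objects(objects: list, llm: Callable[[str], str]) -> list:
    """Pass 2: ask how the already-extracted objects relate to each other."""
    names = [obj["name"] for obj in objects]
    prompt = (
        "Return a JSON array of [source, relationship_type, target] triples "
        f"for these objects: {json.dumps(names)}"
    )
    known = set(names)
    links = []
    for source, rel_type, target in json.loads(llm(prompt)):
        # Drop links that mention objects pass 1 never produced.
        if source in known and target in known:
            links.append(
                {"source": source, "relationship_type": rel_type, "target": target}
            )
    return links


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; every answer here is canned and made up."""
    if prompt.startswith("List every threat-actor"):
        return '["Example Actor"]'
    if prompt.startswith("List every tool"):
        return '["Example Tool"]'
    if prompt.startswith("List every"):
        return "[]"
    return (
        '[["Example Actor", "uses", "Example Tool"],'
        ' ["Example Actor", "targets", "Unknown Host"]]'
    )


source_text = "placeholder report text"
objects = extract_objects(source_text, fake_llm)
links = link_objects(objects, fake_llm)
print(objects)
print(links)
```

Splitting the work this way keeps each prompt small, since the second pass only needs the extracted names rather than the full input text again. Whether the second pass should also see the source text is a design choice this sketch does not settle.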

Validating the graph

This proof of concept starting point, along with some post processing to ensure conformity, successfully generated STIX 2.1 files.
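The post-processing step is not described in detail, so the following is only a guess at the kind of checks it might involve: confirming that each object carries the common required properties, that ids follow the `type--UUID` form, and that relationships point at objects that exist in the bundle. The function name `conformity_errors` and the sample data are hypothetical.

```python
import re

ID_PATTERN = re.compile(
    r"^[a-z0-9-]+--[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$"
)
COMMON_REQUIRED = ("type", "spec_version", "id", "created", "modified")


def conformity_errors(bundle: dict) -> list:
    """Return human-readable problems found in a bundle's objects."""
    errors = []
    objects = bundle.get("objects", [])
    ids = {obj.get("id") for obj in objects}
    for obj in objects:
        label = obj.get("id", "<no id>")
        for prop in COMMON_REQUIRED:
            if prop not in obj:
                errors.append(f"{label}: missing {prop}")
        obj_id = obj.get("id", "")
        if not ID_PATTERN.match(obj_id):
            errors.append(f"{label}: malformed id")
        elif obj_id.split("--")[0] != obj.get("type"):
            errors.append(f"{label}: id prefix does not match type")
        if obj.get("type") == "relationship":
            # Stricter than the standard requires: for generated output we
            # assume every reference should resolve inside the same bundle.
            for ref in ("source_ref", "target_ref"):
                if obj.get(ref) not in ids:
                    errors.append(f"{label}: {ref} points outside the bundle")
    return errors


# Invented sample: a valid actor and a relationship with a dangling target.
actor = {
    "type": "threat-actor",
    "spec_version": "2.1",
    "id": "threat-actor--11111111-1111-4111-8111-111111111111",
    "created": "2024-07-30T00:00:00.000Z",
    "modified": "2024-07-30T00:00:00.000Z",
    "name": "Example Actor",
}
dangling = {
    "type": "relationship",
    "spec_version": "2.1",
    "id": "relationship--22222222-2222-4222-8222-222222222222",
    "created": "2024-07-30T00:00:00.000Z",
    "modified": "2024-07-30T00:00:00.000Z",
    "relationship_type": "uses",
    "source_ref": actor["id"],
    "target_ref": "tool--33333333-3333-4333-8333-333333333333",
}

print(conformity_errors({"type": "bundle", "objects": [actor, dangling]}))
```

Checks like these confirm only that a file is structurally sound. They say nothing about whether the content is true, which is the harder problem discussed next.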

The issue, of course, with using LLMs to analyze cybersecurity data in general is that a lot of subjectivity is involved. Granted, there’s also some subjectivity involved with a human cyber threat analyst, but even at its best the LLM did not perform at the level of a trained professional.

Sometimes the links between objects would seem random. Often there was contextual information that wasn’t taken into account that would have been obvious to a human. Indicators of Compromise (IOCs) were another matter altogether. The LLM could not differentiate between benign URLs and malicious URLs. This might seem obvious, but this has been a reported problem with commercial AI cybersecurity analysis as well. We realized quite early that an LLM in a cybersecurity threat analysis scenario would need to use a source of enrichment to weigh its own analysis.

Hallucinations were another issue altogether, since when prompted to “find” something, it would often generate threat information out of whole cloth or invent linkages that didn’t actually exist.
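A crude first line of defense against invented objects is to check whether each extracted name actually appears in the source text. The sketch below is a simple heuristic we are adding for illustration, not part of the experiment; the sample sentence and objects are made up, and a literal string match will miss paraphrases and aliases.

```python
def ungrounded_names(objects: list, source_text: str) -> list:
    """Return names of extracted objects that never appear in the source text."""
    # Normalize case and whitespace so line breaks do not hide a match.
    haystack = " ".join(source_text.lower().split())
    missing = []
    for obj in objects:
        name = " ".join(str(obj.get("name", "")).lower().split())
        if not name or name not in haystack:
            missing.append(obj.get("name", ""))
    return missing


# Invented example: the second object has no support in the text.
report = "Undercooked meat can carry Salmonella and other foodborne pathogens."
extracted = [
    {"type": "malware", "name": "Salmonella"},
    {"type": "tool", "name": "nmap"},
]

print(ungrounded_names(extracted, report))
```

Note that this sketch would happily accept “Salmonella” as malware because the word appears in the text. Grounding a name is not the same as classifying it correctly, so a check like this narrows the review queue for a human analyst rather than replacing one.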

Threat intel analysis should strive to be credible, and injecting any “mocked up” data into that analysis is an absolute non-starter for any professional organization.

The post The Matrix’s real villain is Neo, according to AI appeared first on Signal Hill Technologies.

*** This is a Security Bloggers Network syndicated blog from Signal Hill Technologies authored by Thomas Moore. Read the original post at: https://www.signalhill.tech/2024/07/30/the-matrixs-real-villain-is-neo-according-to-ai/
