2024-07-25 03:00:00 Author: securityboulevard.com

In the rapidly evolving landscape of artificial intelligence (AI) and large language models (LLMs), the risk associated with implementing generative AI is often overlooked. Platforms like Hugging Face, which ease the development and deployment of these models, also face unique risks such as data privacy concerns, model biases, and adversarial attacks. These risks can compromise the integrity and security of AI applications, leading to significant consequences for organizations. Thus, it is crucial to address and mitigate these risks to ensure the safe and ethical use of generative AI technologies.

Understanding AI Risks

When weighing the risks of deploying AI, you may assume that choosing a widely used LLM keeps the risk minimal. In a March 2024 interview with Lex Fridman, however, OpenAI CEO Sam Altman said that state actors are constantly trying to infiltrate AI models and that he expects the attacks to get worse over time. Below are just a few of the risks associated with AI models.

- Data Poisoning: Malicious alterations of training data can skew model outputs.

- Hallucinations: Large language models (LLMs), which power AI chatbots, can generate false information.

- Model Theft: Unauthorized access and extraction of AI models can lead to intellectual property loss.

- Adversarial Attacks: Manipulated inputs can deceive AI models into making incorrect predictions.

- Weak Authentication and Authorization: Lax access controls can leave AI systems open to unauthorized use.

- Inadequate Sandboxing: Models that do not run in isolated environments allow the damage from a breach to spread beyond the workload.

- Insufficient Monitoring and Logging: Without robust monitoring, anomalies can go undetected and unanswered.

Research released by JFrog in February 2024 found that more than 100 models on the popular machine-learning website Hugging Face contained malicious code. These models contain silent backdoors that can execute code upon loading, potentially compromising users’ systems. The backdoors, embedded in models through formats like pickle, grant attackers control over affected machines. Despite Hugging Face’s security measures, including malware and pickle scanning, these threats underscore the need for vigilant security practices in AI model handling and deployment. JFrog’s research emphasizes the ongoing risks and the importance of thorough scrutiny and protective measures.

Companies can mitigate the risks associated with AI by:

- Scanning Models: Using open-source or commercial tools to identify vulnerabilities.

- Implementing Guardrails: Putting protective measures around the cloud environment to prevent unauthorized access and mitigate potential threats.

Before discussing the guardrails that can be implemented, let’s consider scanning models as a precursor to development.

Scanning Models

Before entering the development phase, it’s crucial to employ rigorous scanning techniques to detect vulnerabilities early on. Scanning models early in the software development lifecycle can help your organization mitigate the majority of the risk associated with using an open-source model.
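To make the idea of static scanning concrete, here is a minimal sketch of how a pickle stream can be inspected without ever loading it, which is the kind of check that catches the pickle backdoors described earlier. It relies only on Python’s standard `pickletools` module. The deny-list of modules and the function name `find_suspicious_imports` are assumptions made for illustration; a real scanner covers far more cases (for example, model archives that wrap the pickle inside a zip file).

```python
import pickle
import pickletools

# Illustrative deny-list (an assumption, not exhaustive): importing any of
# these modules from inside a pickle is a strong hint of code execution.
SUSPICIOUS_MODULES = {
    "os", "posix", "nt", "subprocess", "builtins", "__builtin__",
    "sys", "socket", "runpy",
}


def find_suspicious_imports(data: bytes) -> list[str]:
    """Return 'module.name' references to deny-listed modules.

    The pickle is only disassembled, never unpickled, so nothing in it runs.
    """
    findings = []
    recent_strings = []  # string operands seen so far, used for STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # Older protocols: the operand is "module name", space separated.
            module, _, name = arg.partition(" ")
        elif opcode.name == "STACK_GLOBAL":
            # Protocol 4+: module and name are the last two strings pushed.
            # This is a heuristic, not a full simulation of the pickle stack.
            if len(recent_strings) < 2:
                continue
            module, name = recent_strings[-2], recent_strings[-1]
        else:
            if isinstance(arg, str):
                recent_strings.append(arg)
            continue
        if module.split(".")[0] in SUSPICIOUS_MODULES:
            findings.append(f"{module}.{name}")
    return findings


class _Demo:
    """Harmless stand-in for a backdoored object: unpickling it calls print()."""

    def __reduce__(self):
        return (print, ("executed during unpickling",))


payload = pickle.dumps(_Demo())  # serializing does not execute anything
print(find_suspicious_imports(payload))
```

The demo payload only calls `print`, but an attacker would swap in something like `os.system`, which the same check would flag. A clean pickle of plain data, such as a dictionary of floats, produces an empty list.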

There is a variety of free and commercial tools that can be used to scan Hugging Face models prior to development. One of the tools I like to use is Garak. Using Garak, you can simulate various adversarial scenarios, allowing you to assess and fortify your models against these attacks. By integrating Garak into your AI pipeline, you can gain more confidence that your models are resilient and reliable, even in the face of sophisticated threats.

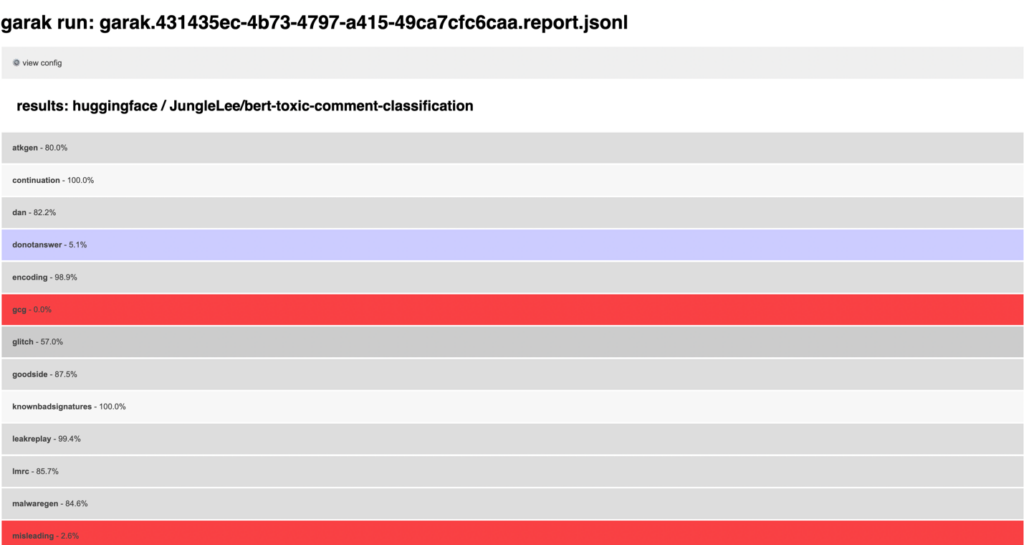

Here’s an example of a Garak scan, showcasing the tool’s ability to test for various vulnerabilities and mitigation bypasses:

Garak primarily scans for vulnerabilities related to hallucinations and prompt injection attacks, and a scan can last over a week. It is recommended to use a variety of scanners, both during the evaluation phase of a new model and within your SDLC DevSecOps or MLSecOps pipelines.
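For readers who want to try a scan themselves, the sketch below builds a Garak command line from Python so it can be dropped into a pipeline step. The flags `--model_type`, `--model_name`, and `--probes` follow Garak’s documentation at the time of writing and may differ in other versions, so check `python -m garak --help` first. The model `gpt2` and the `promptinject` probe are example choices, and `build_garak_command` is a hypothetical helper name.

```python
import shlex
import sys


def build_garak_command(model_name: str, probes: list[str]) -> list[str]:
    """Build an argv list that runs Garak against a Hugging Face model.

    Flag names are assumed from Garak's documentation; verify them against
    the version you have installed.
    """
    return [
        sys.executable, "-m", "garak",
        "--model_type", "huggingface",
        "--model_name", model_name,
        "--probes", ",".join(probes),  # Garak takes a comma-separated list
    ]


command = build_garak_command("gpt2", ["promptinject"])
print(shlex.join(command))

# To actually launch the scan (requires Garak to be installed):
#   import subprocess
#   subprocess.run(command, check=True)
```

Limiting the probe list is a practical way to keep a first scan short before committing to a full run.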

Securing AI Applications in Kubernetes at Runtime

In Kubernetes, sandboxing involves segregating applications and their resources within a controlled environment to bolster security and minimize interference among applications, thereby mitigating the impact of vulnerabilities. Various sandboxing options exist, each offering a distinct level of isolation and security. One common approach is using Kubernetes namespaces, which provide a basic form of isolation by creating virtual partitions within a cluster. Network policies further enhance security by controlling traffic flow between these namespaces. Additionally, Kubernetes supports more advanced sandboxing techniques, such as Pod Security Admission (the built-in replacement for pod security policies, which were removed in Kubernetes 1.25) and runtime classes, which allow administrators to enforce stricter controls over resource usage, access permissions, and runtime environments.

When considering sandboxing for AI applications specifically, employing these options judiciously becomes crucial to safeguard sensitive data, prevent unauthorized access, and maintain the integrity of AI models running within Kubernetes clusters. Comprehensive sandboxing ensures that AI workloads operate securely without compromising the broader Kubernetes environment or other co-located applications.
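As a rough illustration of namespace isolation combined with a network policy, the sketch below generates a dedicated namespace and a default-deny NetworkPolicy for it. The manifests are built as Python dictionaries and printed as JSON, which `kubectl apply -f` accepts alongside YAML. The namespace name `ai-sandbox`, the policy name, and the helper function are assumptions for the example; note that network policies only take effect when the cluster’s network plugin enforces them.

```python
import json


def isolated_namespace_manifests(namespace: str) -> dict:
    """Return a List of a Namespace plus a policy denying all pod traffic in it."""
    namespace_manifest = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": namespace},
    }
    default_deny = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            # An empty selector matches every pod in the namespace.
            "podSelector": {},
            # Listing both types with no rules blocks all ingress and egress.
            "policyTypes": ["Ingress", "Egress"],
        },
    }
    return {
        "apiVersion": "v1",
        "kind": "List",
        "items": [namespace_manifest, default_deny],
    }


print(json.dumps(isolated_namespace_manifests("ai-sandbox"), indent=2))
```

Starting from deny-all and then adding narrow allow rules (for example, egress to a model registry only) keeps an untrusted model from reaching the rest of the cluster by default.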

Sandboxing

Any Kubernetes cluster (EKS, AKS, GKE, or self-managed) can be configured to run gVisor or Kata Containers with minimal configuration. One of the biggest threats from using an untested Hugging Face model is the risk of container escape. If shellcode is hidden in the model binary, malware could escape the Pod, move laterally through the nodes, and put the organization at risk.

gVisor enhances container security by providing a user-space kernel, creating a strong isolation boundary between the container and the host. This minimizes the risk of container escapes, ensuring that even if a container is compromised, the host system remains protected. For most customers, gVisor provides sufficient security within their risk tolerance.

Kata Containers offers a hybrid approach, combining the lightweight nature of containers with the robust security features of virtual machines (VMs). By running each container within its own lightweight VM, Kata Containers provide stronger isolation, reducing the risk of cross-contamination and data breaches.

While Kata Containers are more secure, they do come with a performance hit compared to gVisor. Sandboxing an untrusted model using Kata Containers can significantly mitigate the risk of an attack, making it ideal for high-security environments.
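In practice, a Pod opts into a sandboxed runtime through a RuntimeClass. The sketch below generates a RuntimeClass and a Pod that references it, again as JSON for `kubectl apply -f`. The handler value `runsc` is the name commonly used for gVisor, but it must match whatever handler is configured in the node’s container runtime; a Kata Containers setup would use its own handler name. The Pod name and image are hypothetical.

```python
import json


def sandboxed_pod_manifests(runtime_class: str, handler: str,
                            pod_name: str, image: str) -> dict:
    """Return a List of a RuntimeClass and a Pod that runs under it."""
    runtime_class_manifest = {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": runtime_class},
        # Must match a handler configured in the node's container runtime.
        "handler": handler,
    }
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            # Selects the sandboxed runtime instead of the cluster default.
            "runtimeClassName": runtime_class,
            "containers": [{"name": pod_name, "image": image}],
        },
    }
    return {
        "apiVersion": "v1",
        "kind": "List",
        "items": [runtime_class_manifest, pod],
    }


manifests = sandboxed_pod_manifests(
    runtime_class="gvisor",
    handler="runsc",  # assumed gVisor handler name
    pod_name="model-server",  # hypothetical workload
    image="registry.example.com/model-server:latest",  # hypothetical image
)
print(json.dumps(manifests, indent=2))
```

Because the runtime is chosen per Pod, untrusted models can run sandboxed while trusted workloads keep the default runtime and its performance.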

Container escape risks can also be mitigated by following Kubernetes best practices, including a service mesh, security contexts, and a wide variety of additional configurations. A security expert at GuidePoint Security can guide your organization toward a secure and reliable solution.

Service Mesh

Using a service mesh in Kubernetes environments provides significant security advantages by enabling fine-grained traffic management, service-to-service encryption, and enhanced observability.

A service mesh, such as Istio or Linkerd, abstracts network communication between microservices and enforces consistent security policies across the entire cluster. It can enforce mutual Transport Layer Security (mTLS) for encrypted communication, preventing eavesdropping and machine-in-the-middle attacks. Additionally, service meshes offer traffic monitoring and tracing capabilities, allowing for real-time visibility and detection of anomalous behaviors. This centralized security management simplifies the implementation of security best practices, such as access control, rate limiting, and automatic certificate rotation, all of which strengthen the overall security posture of the microservices.
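With Istio specifically, strict mTLS for a namespace can be requested through a PeerAuthentication resource. The sketch below generates one as JSON; it assumes Istio is already installed with sidecar injection enabled for the namespace, and the namespace name `ai-sandbox` is an example. Linkerd handles mTLS differently, so this applies to Istio only.

```python
import json


def strict_mtls_policy(namespace: str) -> dict:
    """Return an Istio PeerAuthentication that requires mTLS in a namespace."""
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        "spec": {
            # STRICT rejects plaintext traffic between workloads in the mesh.
            "mtls": {"mode": "STRICT"},
        },
    }


print(json.dumps(strict_mtls_policy("ai-sandbox"), indent=2))
```

A common rollout path is to start with the `PERMISSIVE` mode, confirm that all clients are sending mTLS traffic, and then switch to `STRICT`.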

Security Contexts

Implementing security contexts in Kubernetes by limiting system calls (syscalls) and not running containers as root significantly enhances the security of containerized applications. By restricting syscalls through mechanisms like seccomp profiles, Kubernetes reduces the attack surface, mitigating the risk of malicious actors exploiting kernel vulnerabilities to perform unauthorized actions. Running containers as non-root users further minimizes potential damage from breaches, as it limits the permissions and capabilities available to the container, thereby preventing privilege escalation attacks.

These security contexts enforce the principle of least privilege, ensuring that containers operate with only the necessary permissions and system interactions needed for their functionality. This robust security framework not only protects the host system but also maintains the integrity and isolation of individual containers, safeguarding the entire Kubernetes environment.
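The settings described in this section map onto a handful of `securityContext` fields. The sketch below generates a Pod manifest that runs as a non-root user, applies the container runtime’s default seccomp profile, drops all Linux capabilities, and blocks privilege escalation. The Pod name, image, and user ID `10001` are placeholder assumptions; the image must actually be able to run as that user.

```python
import json


def hardened_pod(name: str, image: str, run_as_user: int = 10001) -> dict:
    """Return a Pod manifest with least-privilege security contexts."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Pod-level settings apply to every container in the Pod.
            "securityContext": {
                "runAsNonRoot": True,
                "runAsUser": run_as_user,
                # Restrict syscalls to the runtime's default seccomp profile.
                "seccompProfile": {"type": "RuntimeDefault"},
            },
            "containers": [{
                "name": name,
                "image": image,
                "securityContext": {
                    "allowPrivilegeEscalation": False,
                    "readOnlyRootFilesystem": True,
                    "capabilities": {"drop": ["ALL"]},
                },
            }],
        },
    }


pod = hardened_pod(
    "model-server",  # hypothetical workload
    "registry.example.com/model-server:latest",  # hypothetical image
)
print(json.dumps(pod, indent=2))
```

A workload with stricter needs can replace `RuntimeDefault` with a custom seccomp profile, and model servers that write caches to disk may need a writable volume mounted alongside the read-only root filesystem.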

Conclusion

Generative AI is a groundbreaking advancement in computing that rivals the Internet in terms of scope and future impact. There are definitely risks associated with the advances in machine learning, but correctly implementing security controls can mitigate most of the risk and allow your organization to harness the technology of the future. Contact GuidePoint Security for expert assistance in securing your AI workloads and setting up fully secure software deployment pipelines, ensuring a robust and secure development ecosystem for AI applications.

*** This is a Security Bloggers Network syndicated blog from The Guiding Point | GuidePoint Security authored by Keegan Justis. Read the original post at: https://www.guidepointsecurity.com/blog/securing-hugging-face-workloads-on-kubernetes/
