Nisos

AI-Enabled Digital Messaging: From Political Campaigns to Influence Operations

To support our partners in the technology and election protection space, Nisos analysts are keeping a close eye on how both official and covert actors are using AI to influence elections, and tracking how their techniques evolve. Actors engaged in influence operations (IO) are leveraging AI-enabled tools to scale and accelerate their operations and more easily produce diverse content while attempting to hide their tracks. We have observed IO actors making use of AI-based text, text-to-image, and text-to-video generators, AI-generated avatars, and deepfake tools to achieve their objectives. Meanwhile, political parties and candidates running in elections this year have also taken advantage of AI to scale campaign messaging, including by generating political content that targets their opponents. As the technology improves, it will likely become harder to distinguish authentic content from inauthentic or manipulated content, possibly increasing the efficacy of IO campaigns.

Inauthentic News Outlets Leveraging AI

Threat actors, such as the Russian-linked CopyCop and Doppelgänger networks, are using AI to create fake news articles and websites promoting election-related IO narratives. CopyCop plagiarizes, translates, and edits mainstream media content with AI, using prompt engineering to add a partisan bent to real news articles. Russia’s larger propaganda apparatus—including Doppelgänger, which predates CopyCop and is also known for impersonating news outlets—subsequently amplifies the articles and enables their spread across the information ecosystem.

Graphic 1: Example of a website posing as the German Der Spiegel (right).

Image from Deutsche Welle. (See source 1 in appendix)

AI-Generated Audio, Images, and Video

Threat actors and candidates running for office alike are using AI to produce multimodal content. The Chinese-linked operation Spamouflage has been leveraging AI-generated images to convey IO narratives for at least a year, typically to push pro-China and anti-US content. In the past, Spamouflage posts were relatively unsophisticated in their execution, consisting of simple JPEG files with both English and Mandarin text, the latter serving as an indicator of the content’s true origin (see source 2 in appendix). More recently, the likely Chinese-linked operation Shadow Play has managed to attract a larger audience than Spamouflage. Shadow Play frequently posts video essays with AI-generated voice-overs and has also leveraged technology that generates video content using AI avatars (see source 3 in appendix).

In the run-up to the November 2023 presidential election in Argentina, the now-president and then-candidate Javier Milei and his rival Sergio Massa used AI to generate content attacking one another. Milei published an AI-generated image of Massa depicting him as a communist. A doctored video purporting to show Massa using drugs was made by manipulating existing footage to add Massa’s image and voice. Massa’s team in turn distributed a series of AI-generated images and videos that vilified Milei (see source 4 in appendix).

Graphic 2: AI-generated image depicting Sergio Massa as a communist. (See source 5 in appendix)

Featuring AI-Generated Avatars in Video Content

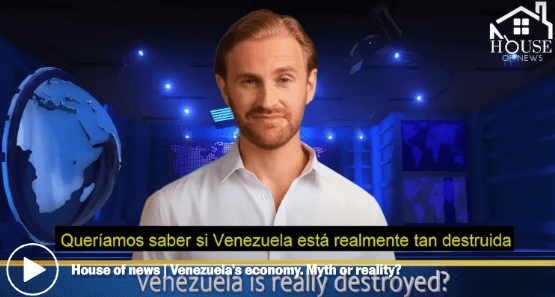

AI avatars have also appeared in both official election campaigns and IO campaigns. Spamouflage and Shadow Play have used them, typically as fake “news anchors,” and they appeared in the recent Indian and Mexican elections as “digital copies” of candidates and other public figures, including deceased ones (see sources 6 and 7 in appendix). In India, an AI-generated digital clone of the daughter of the founder of the Sri Lankan militant separatist group Liberation Tigers of Tamil Eelam urged Tamilians globally “to take forward the political struggle for their freedom” (see source 8 in appendix). In Mexico, then-presidential candidate Xóchitl Gálvez created an AI avatar of herself to serve as a spokesperson, both to facilitate campaign messaging and to save on production costs (see source 9 in appendix). In Venezuela, an inauthentic news channel called House of News featured AI-generated English-speaking news anchors that propagated narratives favorable to President Nicolás Maduro’s government (see source 10 in appendix).

Graphic 3: Digital avatar of deceased daughter of Tamil leader during a live stream.

Graphic 4: House of News AI avatar news anchor (see source 11 in appendix).

Deepfake audio, image, and video content has also featured in IO and election campaigns this year. Of the three, audio deepfakes are the simplest and cheapest to make, and they likely evade detection more easily because they lack the visual indicators of manipulation that are often apparent in AI-generated video content. Various actors have paired AI voice-cloning technology with authentic video clips to disseminate false information.

Former South African President Jacob Zuma’s daughter posted a deepfake video of former President Trump endorsing her father’s uMkhonto we Sizwe party ahead of the country’s May 2024 elections (see source 12 in appendix). In that same election season, President Biden’s likeness appeared in an AI-manipulated video warning that Washington would sanction South Africa if the ruling African National Congress Party won (see source 13 in appendix). In the United States, AI-generated robocalls mimicking Biden reached thousands of voters two days before the 23 January New Hampshire primary, falsely suggesting that voting in the primary would preclude voters from voting in the general election (see source 14 in appendix). This prompted the Federal Communications Commission to make robocalls containing AI-generated voices illegal in February (see source 15 in appendix).

Graphics 5 and 6: Deepfakes of Trump (left) and Biden (right) related to South Africa’s 2024 elections (see sources 16 and 17 in appendix).

AI has helped supercharge various actors’ digital campaigns in both benign and manipulative ways. However, security researchers differ on how effective AI-generated content is from an influence point of view, with some arguing that some AI-enabled IO campaigns have been “largely ineffective” in reaching audiences (see source 18 in appendix). This suggests that the impact so far is limited, although that may change as the technology and threat actor tactics continue to evolve and grow in sophistication. Areas of concern already include:

Flooding of the information space or “poisoning the well.” The scalability of AI-generated content will possibly crowd out real and reliable information.

Enhanced proliferation of misinformation. As manipulative and harmful AI-generated content increasingly floods the information space, the risk arguably also increases that unsuspecting individuals will stumble upon and amplify, perhaps inadvertently, IO campaigns.

Scaling of IO in multiple languages and formats. AI also creates an opportunity to quickly add variety to content, possibly facilitating threat actors’ efforts to appear more authentic on social media. For example, instead of reposting identical copies of the same video multiple times, a threat actor can use AI tools to create multiple videos with the same message that are visually distinct, possibly deceiving users into thinking the content comes from unique creators.

Casting doubt on and undermining legitimate information. Political figures and organizations may hide behind AI when evidence of a transgression surfaces, dismissing it as a “deepfake.”

Sowing doubt in the information we consume. AI-generated content encourages us to question the reliability of information in general—a key IO tactic, particularly in state-backed disinformation campaigns, which seek to sow doubt and distrust in the reliability of institutions, democratic processes, and information more broadly.

Companies around the world are developing technical solutions to detect and help mitigate AI-generated content. New fact-checking groups are emerging across the globe to aid in this effort as well, and subject matter experts help identify harmful content and prevent its virality. Efforts to debunk and pre-bunk mis- and disinformation continue to require human analysis and intervention even as threats propagate faster. Nisos uses a combination of tools, proprietary datasets, and domain expertise to identify harmful IO campaigns, networks, and actors, working closely with our partners to address the activity. Only by bridging and connecting these forces for good can we hope to construct innovative methods and joint efforts that use AI capabilities, trusted voices, and targeted intelligence to counter the proliferation of AI-enabled IO.

Online Platforms

Trust and Safety teams working to mitigate harmful content on their platforms can further address AI-enabled IO by concentrating on inauthentic accounts and behaviors, including the use of botnets and compromised accounts. Bad actors use AI to scale content creation and engagement, often through inauthentic accounts.

Global Brands

Ideologically motivated and financially motivated actors have leveraged AI capabilities to scale messaging targeting brands. Identifying and, where appropriate, publicizing IO campaigns that affect brand reputation can serve as a step toward maintaining and rebuilding public trust.

Governments

Disinformation has the potential to influence policymakers at the highest level, as evidenced by the example of the pro-Russian disinformation outlet DC Weekly (see source 19 in appendix). Raising awareness of mis- and disinformation operations is paramount to prevent bad actors from misleading leadership and the public.

Information Consumers

Generative AI has enabled bad actors to muddy the information landscape with mis-, dis-, and malinformation at scale. Arming consumers with digital media literacy education and transparency can help prevent the unintended spread of misinformation in online public discourse. Public and private partnerships are key to combating this ongoing challenge.

About Nisos®

Nisos is the Managed Intelligence Company. We are a trusted digital investigations partner, specializing in unmasking threats to protect people, organizations, and their digital ecosystems in the commercial and public sectors. Our open source intelligence services help security, intelligence, legal, and trust and safety teams make critical decisions, impose real world consequences, and increase adversary costs. For more information, visit: https://www.nisos.com.

Appendix

1. https://www.dw[.]com/en/fake-news-on-the-rise-leading-up-to-eu-elections/a-69046888

2. https://www.isdglobal[.]org/digital_dispatches/pro-ccp-spamouflage-campaign-experiments-with-new-tactics-targeting-the-us

3. https://www.aspi[.]org.au/report/shadow-play

4. https://www.context[.]news/ai/how-ai-shaped-mileis-path-to-argentina-presidency

5. https://www.context[.]news/ai/how-ai-shaped-mileis-path-to-argentina-presidency

6. https://www.theguardian[.]com/technology/article/2024/may/18/how-china-is-using-ai-news-anchors-to-deliver-its-propaganda

7. https://www.aspi[.]org.au/report/shadow-play

8. https://www.bbc[.]com/news/world-asia-india-68918330

9. https://elpais[.]com/mexico/elecciones-mexicanas/2023-12-19/xochitl-galvez-y-su-vocera-hecha-con-inteligencia-artificial-la-candidata-de-la-oposicion-profundiza-su-apuesta-por-la-tecnologia.htm

10. https://english.elpais[.]com/international/2023-02-22/theyre-not-tv-anchors-theyre-avatars-how-venezuela-is-using-ai-generated-propaganda.html

11. https://english.elpais[.]com/international/2023-02-22/theyre-not-tv-anchors-theyre-avatars-how-venezuela-is-using-ai-generated-propaganda.html

12. https://x[.]com/DZumaSambudla/status/1766512560554021094

13. https://x[.]com/Thisthat_Acadmy/status/1766042462290673989

14. https://apnews[.]com/article/biden-robocalls-artificial-intelligence-new-hampshire-texas

15. https://apnews[.]com/article/fcc-elections-artificial-intelligence-robocalls-regulationsa8292b1371b3764916461f60660b93e6

16. https://x[.]com/DZumaSambudla/status/1766512560554021094

17. https://x[.]com/Thisthat_Acadmy/status/1766042462290673989

18. https://www.wired[.]com/story/china-bad-at-disinformation

19. https://www.bbc[.]com/news/world-us-canada-67766964


*** This is a Security Bloggers Network syndicated blog from Nisos authored by Nisos. Read the original post at: https://www.nisos.com/blog/ai-enabled-digital-messaging/