2024-7-11 21:0:51 Author: www.sentinelone.com

Autonomous vehicles have captured the imagination of humans for decades. There are few examples of fully autonomous vehicles available today, and those are designed for limited commercial use, but there is international consensus on what fully autonomous vehicles are and the standards by which they are measured. Autonomous flight is also quickly becoming one of the most popular and controversial topics in aviation. Known as “continuous autopilot engagement”, it relies on machine learning-based algorithms to handle all necessary flight tasks from engine start through full navigation, landing, and shutdown.

In every case, security and safety are paramount due to the potential for harm to life and limb; therefore, automation in transportation usually starts with features that increase security and enhance safety. The broader goal, however, is to make travel accessible to everyone while increasing efficiency and lowering cost. Whether referring to it as autonomy or automation, the truth is that artificial intelligence (AI) is progressively making these seemingly science fiction-based notions a reality.

There are many parallels that can be drawn between autonomous driving cars and what can be referred to as the Autonomous Security Operations Center (ASOC). Although the ASOC is still quite far off, this blog post takes a deep dive into the key characteristics that would make it a reality and what this could mean for accelerating autonomous security operations, using the well-defined levels of autonomous driving (Levels 0-5) as a framework.

From Autonomous Vehicles to Autonomous SOC

In traditional travel, it is typical to see one driver for one vehicle and one pilot for one aircraft. The same goes for cybersecurity – there is typically one analyst for one investigation or incident. Nowadays, one driver can monitor many highly automated vehicles with no steering wheels and no brake pedals. A single pilot can control and monitor many aircraft. Soon, the information security community will see one security analyst handling many concurrent investigations or incidents through the use of AI-powered tools and agents.

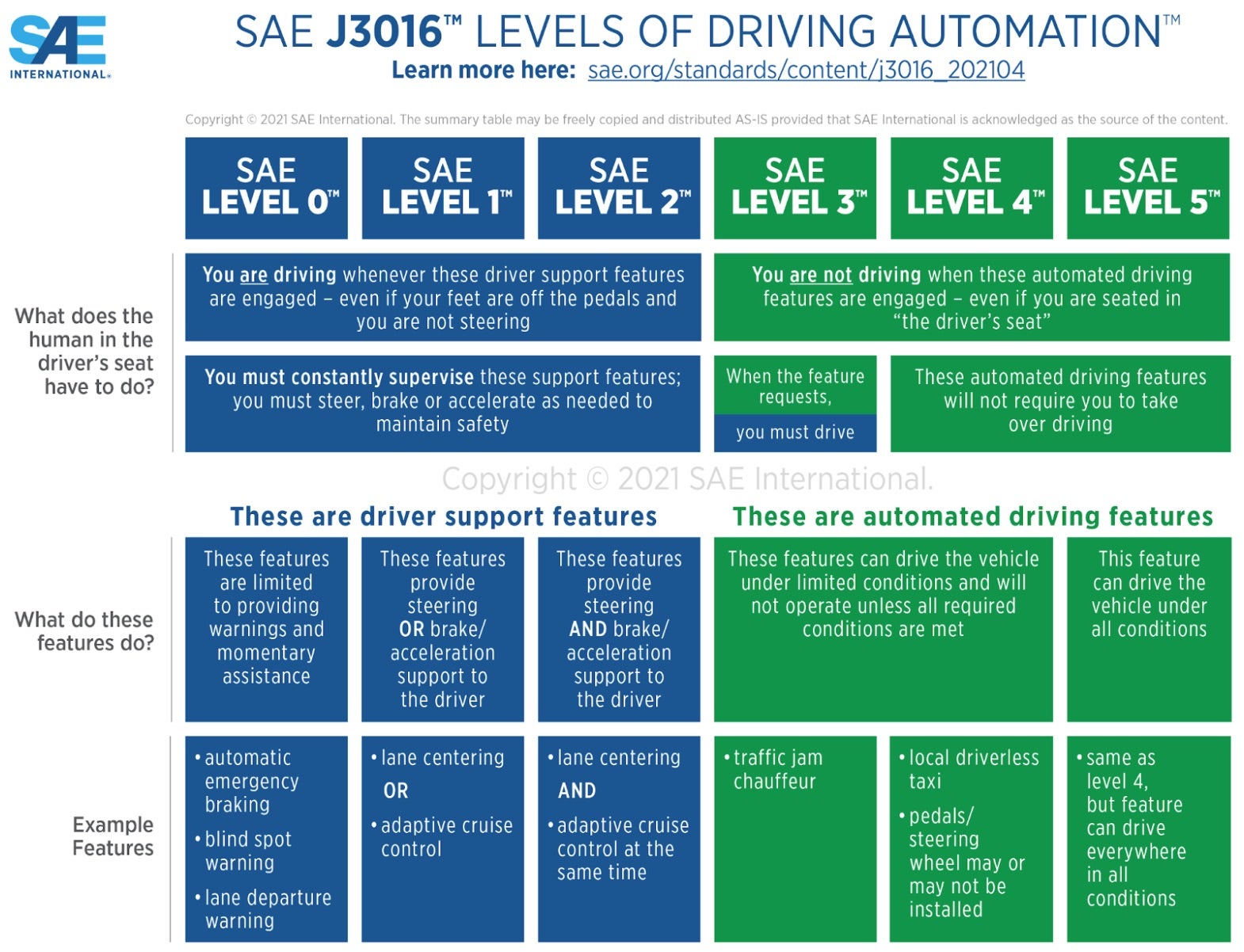

Here are the key characteristics of each level in the SAE International standards of driving automation:

- Level 0 – The driver is fully responsible for controlling the vehicle, including steering, braking, accelerating, and monitoring the external environment.

- Level 1 – The vehicle can assist with either steering or acceleration/deceleration using Advanced Driver Assistance Systems (ADAS), but the human driver must remain engaged and monitor the environment at all times.

- Level 2 – The vehicle can now control steering, acceleration/deceleration, and parking together, but the human driver must remain attentive and ready to take over at any time.

- Level 3 – The vehicle manages most driving tasks and even monitors the external environment under certain conditions. A human driver must be ready to intervene when the system identifies a situation that it does not know how to handle.

- Level 4 – The vehicle can perform all driving tasks and monitor the environment in most situations without human intervention. However, it may not operate in all conditions, such as severe weather or off-road driving, in which case a human can intervene.

- Level 5 – The fully autonomous vehicle can handle all driving tasks in all environments without any human intervention.
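The levels listed above can be written down as a small data structure, which makes the shift in responsibility easier to see. This is a minimal sketch, not part of the SAE standard: the member names are borrowed from this article's own level headings, and the two helper functions are hypothetical summaries of the list above.

```python
from enum import IntEnum


class DrivingLevel(IntEnum):
    """Levels of driving automation as summarized in the list above."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_monitors_environment(level: DrivingLevel) -> bool:
    """Levels 0-2: the human monitors the environment; from Level 3 the vehicle does."""
    return level <= DrivingLevel.PARTIAL_AUTOMATION


def human_must_be_ready(level: DrivingLevel) -> bool:
    """Through Level 3 a human must be ready to take over when asked."""
    return level <= DrivingLevel.CONDITIONAL_AUTOMATION
```

The interesting boundary is Level 3: the vehicle monitors the environment, yet the human is still expected to step in on request.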

Below is a chart that describes the above levels of driving automation. The following sections describe key characteristics of security operations automation that can be leveraged to set standards for an ASOC.

Level 0: No Automation

Human analysts are fully responsible for all security investigations, including identifying, analyzing, and responding to threats. There are no built-in automations to assist with threat detection, security operations, or cyber investigation. There is, however, basic assistance from security tools to not only detect and protect, but also uncover potential threats. Basic security tooling is designed to make digital environments more secure, but these tools work independently, with no shared context or correlation.

For example, a firewall helps maintain control over network traffic, vulnerability scanners identify potential issues, and email filtering tools assist in flagging spam or phishing emails. In all cases, analysts must still review the data to determine if they are false positives or actual threats. They need to continuously monitor and configure rules to ensure intrusions are blocked. They need to prioritize all of the alerts and remediation efforts themselves. This presents tremendous human challenges that are exacerbated by too many siloed tools, extreme skills shortages, and a dramatically increasing attack surface.

Even tools that attempt to correlate and automate detections, such as Intrusion Detection Systems (IDS) and Endpoint Detection & Response (EDR), require analysts to investigate most alerts to determine if they are indicative of a true security issue. Security Information & Event Management (SIEM) tools help centralize logs, giving security analysts better access to otherwise siloed security data. However, all this usually requires manual methods of threat hunting, data querying, and incident analysis performed entirely by humans.

Level 1: Analyst (aka Driver) Assistance

At Level 1, security analysts must always play an active role in security operations. Technologies at this level offer some support or automation: for example, Security Orchestration, Automation, and Response (SOAR) and hyper-automation tools can automate many tasks involved in threat detection and response. Tasks such as data enrichment, alert prioritization, and initiating response actions are prime candidates for this level of automation. With effective automation to orchestrate security processes and augment human involvement, teams can run their Security Operations Centers (SOCs) more efficiently and operate at this level today.
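As a rough illustration of the enrichment and prioritization tasks mentioned above, the sketch below adds context to each alert and sorts the queue for the analyst. Everything here is an assumption made for the example: the `Alert` fields, the lookup tables, and the scoring formula are hypothetical and do not come from any particular SOAR product.

```python
from dataclasses import dataclass, field

# Hypothetical lookup data; a real deployment would query asset and threat-intel sources.
ASSET_CRITICALITY = {"db-prod-01": 3, "laptop-114": 1}
KNOWN_BAD_IPS = {"203.0.113.7"}


@dataclass
class Alert:
    alert_id: str
    host: str
    remote_ip: str
    severity: int  # 1 (low) to 5 (critical), as reported by the detecting tool
    context: dict = field(default_factory=dict)


def enrich(alert: Alert) -> Alert:
    """Attach context the detecting tool did not have."""
    alert.context["asset_criticality"] = ASSET_CRITICALITY.get(alert.host, 1)
    alert.context["known_bad_ip"] = alert.remote_ip in KNOWN_BAD_IPS
    return alert


def priority(alert: Alert) -> int:
    """Illustrative score: severity weighted by asset value, plus a threat-intel bonus."""
    score = alert.severity * alert.context.get("asset_criticality", 1)
    if alert.context.get("known_bad_ip", False):
        score += 5
    return score


def triage_queue(alerts):
    """Return enriched alerts, highest priority first, for an analyst to work through."""
    return sorted((enrich(a) for a in alerts), key=priority, reverse=True)
```

The automation only orders the work. Deciding what each alert means, and what to do about it, stays with the analyst, which is what keeps this at Level 1.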

Leveraging basic scripts and SOAR tools, humans can also automate routine, non-investigation tasks such as applying patches or remediating misconfigurations. These tasks still require analysts to monitor their execution and handle any exceptions or failures. While security tools are getting better at performing more complex tasks, they still have no situational awareness of external context: an understanding of business objectives, strong correlation of data, and deep integration with other technologies or environments are not present. Only when all of these are combined does a SOC reach partial automation.

Level 2: Partial Automation

With partial automation, security systems can automate multiple investigation tasks such as correlating disparate alerts, gathering contextual information, and even suggesting recommended responses. Human analysts still need to oversee the process and, in many cases, make the final decisions. Analysts set the rules and workflows guided by AI assistance, monitor the actions taken by the system, and intervene when necessary. These systems can automatically respond to certain types of incidents based on predefined and even learned criteria.
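One simple way to picture the correlation step is to group alerts from different tools that concern the same host and arrive close together in time. The sketch below is an assumption-laden toy, not a description of how any product correlates data: the `SourceAlert` type, the five-minute window, and the suggested responses are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceAlert:
    source: str       # tool that raised the alert, e.g. "edr" or "firewall"
    host: str
    timestamp: float  # seconds since epoch


def correlate(alerts, window_seconds=300.0):
    """Group alerts per host, splitting whenever the gap to the previous alert exceeds the window."""
    by_host = defaultdict(list)
    for alert in alerts:
        by_host[alert.host].append(alert)

    incidents = []
    for host_alerts in by_host.values():
        host_alerts.sort(key=lambda a: a.timestamp)
        current = [host_alerts[0]]
        for alert in host_alerts[1:]:
            if alert.timestamp - current[-1].timestamp <= window_seconds:
                current.append(alert)
            else:
                incidents.append(current)
                current = [alert]
        incidents.append(current)
    return incidents


def suggest_response(incident):
    """Recommend only; at Level 2 the analyst still makes the final decision."""
    sources = {a.source for a in incident}
    if len(sources) > 1:
        return "isolate host (pending analyst approval)"
    return "review alert"
```

Note that `suggest_response` returns a recommendation and does not act, mirroring the point that human analysts oversee the process and, in many cases, make the final call.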

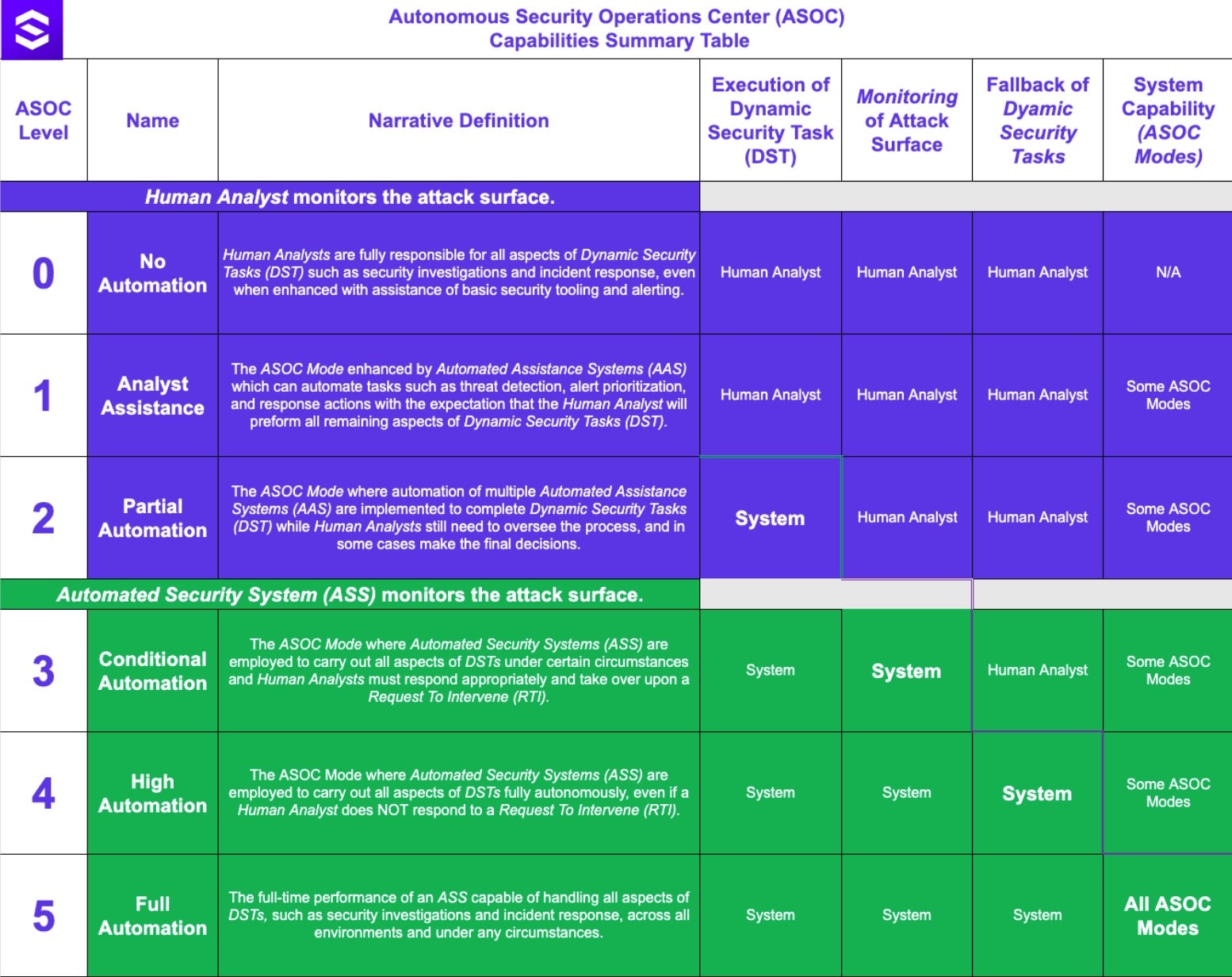

The following ASOC Capabilities Summary Table describes the different levels and their operating modes as they map to the SAE International standards for driving automation. Note that, starting at Level 2, the system progressively takes over from the human.

The ASOC Level at which a particular system is operating can be referred to as the ASOC Mode (as in operational mode). This concept of ASOC Modes describes the level of autonomy within which Dynamic Security Tasks (DST) are accomplished by an Automated Security System (ASS), with or without a Request To Intervene (RTI). All of these terms, which also map to SAE automated driving terms, demand some further explanation. Here are some high-level definitions:

- ASOC Mode – Corresponds to the ASOC Levels 1-5 based on the conditions under which an automated assistance or automated security system is operating.

- Automated Assistance System (AAS) – A system that can automate tasks such as threat detection, alert prioritization, and response actions with human oversight.

- Automated Security System (ASS) – A fully automated security system that can operate without human intervention under specific circumstances.

- Dynamic Security Task (DST) – Includes all the operational and tactical aspects of a complete security investigation, incident response, or other security task.

- Operational Design Domain (ODD) – The environment specifications within which a Dynamic Security Task is expected to be executed and run from beginning to end.

- Request To Intervene (RTI) – A notification by an automated security system to a human analyst that they should begin or resume the performance of a Dynamic Security Task.
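To show how the terms defined above relate to one another, they can be modeled as a few small types. This is only a sketch under stated assumptions: the field names (`environments`, `threat_types`, `reason`) and the `check_scope` helper are hypothetical, chosen for illustration and not drawn from any standard.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class AsocMode(IntEnum):
    """ASOC Modes correspond to ASOC Levels 1-5."""
    ANALYST_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


@dataclass(frozen=True)
class DynamicSecurityTask:
    """A complete investigation, incident response, or other security task (DST)."""
    task_id: str
    environment: str  # e.g. "endpoint" or "cloud" (illustrative values)
    threat_type: str


@dataclass(frozen=True)
class OperationalDesignDomain:
    """The environment specifications within which a DST is expected to run (ODD)."""
    environments: frozenset
    threat_types: frozenset

    def covers(self, task: DynamicSecurityTask) -> bool:
        return (task.environment in self.environments
                and task.threat_type in self.threat_types)


@dataclass(frozen=True)
class RequestToIntervene:
    """Notification asking a human analyst to begin or resume a DST (RTI)."""
    task_id: str
    reason: str


def check_scope(task: DynamicSecurityTask,
                odd: OperationalDesignDomain) -> Optional[RequestToIntervene]:
    """Return an RTI when the task falls outside the ODD, otherwise None."""
    if odd.covers(task):
        return None
    return RequestToIntervene(task.task_id, "task is outside the operational design domain")
```

Keeping the ODD as explicit data, separate from the automation logic, makes the boundary of what the system may handle alone something that can be reviewed and audited.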

An important concept to keep in mind when distinguishing between one operating mode and another is the system’s expectation of humans when it notifies them through a Request to Intervene (RTI). As the maturity of the ASOC grows and begins to operate at higher levels, the expectation that the human will take over decreases. This blend of automation and human oversight directly impacts the overall efficiency and effectiveness of security operations.

Some of the key characteristics of Level 2 include continual assistance, partial automation, increased efficiency, and human oversight. The automation capabilities also extend out to governance, where the system can automatically generate detailed reports for compliance, audit, and strategic review purposes. Analysts benefit from significant assistance but must remain vigilant and directly involved to ensure accurate and appropriate threat management.

Level 3: Conditional Automation

At Level 3, the system is capable of autonomously carrying out comprehensive security functions under specific conditions, and analysts are required to take over only if the system encounters situations it is not programmed to handle. Automated Security Systems (ASS) with escalation mechanisms can fully automate the investigation process for certain types of threats. These systems usually leverage generative AI and large language models (LLMs), promising to simplify the complex, empower every analyst, and accelerate security operations.

AI-powered platforms in the Level 3 ASOC can autonomously analyze and respond to threats based on pre-trained models and historical data. They perform tasks like threat hunting, vulnerability management, and cyber investigations. If the system encounters a more complex, anomalous, or novel threat, it escalates the incident to a human analyst for further investigation and response. This is called a Request to Intervene (RTI) and is a big part of understanding how to effectively manage this mode of operation.
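The escalation behavior described above can be sketched as a single decision: respond automatically when the threat is a known type and the system is confident, otherwise issue a Request to Intervene. The playbook table, the `Finding` fields, and the 0.9 threshold are all assumptions made for this example; a real system would derive them from its own models and policies.

```python
from dataclasses import dataclass

# Hypothetical playbooks for threat types the system is allowed to handle on its own.
PLAYBOOKS = {
    "phishing": "quarantine message and reset credentials",
    "commodity_malware": "isolate endpoint and remove artifact",
}


@dataclass(frozen=True)
class Finding:
    incident_id: str
    threat_type: str   # label assumed to come from an upstream model
    confidence: float  # 0.0 to 1.0, also assumed to come from that model


@dataclass(frozen=True)
class Outcome:
    incident_id: str
    handled_by: str  # "system" or "analyst"
    action: str


def handle(finding: Finding, min_confidence: float = 0.9) -> Outcome:
    """Respond autonomously to known, high-confidence threats; escalate everything else."""
    playbook = PLAYBOOKS.get(finding.threat_type)
    if playbook is not None and finding.confidence >= min_confidence:
        return Outcome(finding.incident_id, "system", playbook)
    reason = "no playbook for threat type" if playbook is None else "low confidence"
    return Outcome(finding.incident_id, "analyst", f"request to intervene: {reason}")
```

For instance, `handle(Finding("i3", "novel_implant", 0.99))` escalates to an analyst even though confidence is high, because no playbook exists for that threat type. Novelty, not just uncertainty, triggers the RTI.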

Key characteristics are full autonomy under certain conditions, human readiness to intervene, escalation mechanisms for complex or novel threats, and enhanced efficiency and coverage by automating standard and routine tasks. The blend of advanced automation and human oversight provides a more efficient and responsive security operations center that goes a long way to alleviating some of the biggest challenges in today’s security operations centers.

Level 4: High Automation

High automation involves systems capable of fully managing the entire threat lifecycle autonomously, including detection, analysis, response, and even recovery from malfunctions or unexpected issues without human intervention. Security analysts can take manual control if needed, but typically, the system handles incidents independently. These systems would initially be deployed in specific Operational Design Domains (ODD) – controlled environments where the scope of potential threats is well-defined.

All security organizations are endlessly working towards some common goals: reducing alert fatigue, increasing efficiency, plugging the skills gap, and addressing manpower shortages. Already available on the market are solutions that deliver AI-guided investigations, provide threat hunting quickstarts, and recommend response actions, automating many steps required in security operations and cyber investigations. Soon, security analysts, incident responders, and threat hunters will be aided by fully autonomous security agents, powered by AI, that will allow them to handle many concurrent investigations.

Some characteristics of a Level 4 ASOC would include fully autonomous SOAR platforms, self-healing cybersecurity systems, AI-driven autonomous security agents, and autonomous network and endpoint defense. These systems are capable of managing complex security operations and Dynamic Security Tasks (DST) independently, providing a significant reduction in the need for human oversight while maintaining the ability for manual intervention.

Level 5: Full Automation

This is a system that is fully autonomous, capable of handling all aspects of security investigations, threat detection, and adaptive response across all environments and situations. The system continuously learns and adapts to new threats without needing human oversight and can operate independently, making decisions and taking actions as needed to protect the organization.

One could theorize based on today’s terminology that the following characteristics would describe the capabilities of the Level 5 ASOC operating in full automation mode:

- The system can autonomously detect, analyze, respond to, and mitigate threats in real-time, without needing human input.

- It uses advanced AI algorithms, data models, and data science techniques that continuously learn and adapt to new threats, ensuring up-to-date protection.

- The system can automatically recover from any incident, including complex breaches, anomalous situations, and system failures.

- It utilizes sophisticated behavioral analytics to detect and respond to nefarious activity in user behavior, network traffic, and system performance.

- It can autonomously distinguish between false positives and genuine threats with a high level of accuracy and precision.

- It proactively hunts for threats, identifying and neutralizing them before they cause harm.

- Utilizing the power of quantum computing, it can perform complex security analyses and threat modeling that traditional systems cannot handle.

- It can restore systems, patch vulnerabilities, self-heal, and roll back changes without manual intervention.

- It can anticipate potential security issues (a.k.a. Breach Prediction) and take preventive measures to avoid them.

- It ensures transparency and accountability without requiring human effort.

- The system integrates with all aspects of the IT environment, including network devices, endpoints, cloud services, and applications.

- It provides a unified view of the security posture and coordinates defense strategies across the entire infrastructure.

The Levels of Security Operations Automation are described in the following table based on the SAE Levels of Driving Automation set forth at the beginning of this article.

Conclusion

As we progress on our journey to the Level 5 ASOC, AI algorithms are expected to continually evolve, driven by advances in computational power, data availability, and research innovations. AI algorithms and data science techniques are already growing sophisticated enough that the entire cybersecurity operation could eventually be entrusted to advanced (autonomous) security systems, providing a level of efficiency, consistency, and responsiveness that far exceeds current capabilities.

AI, however, will always have limitations, or more accurately, appropriate applications, which depend on the desired outcome. Even in the “Age of AI”, the right level of autonomy will vary based on the needs of the organization. Depending on the security goals and business objectives, each organization may implement security systems with varying levels of autonomy. This implies that humans will, and should, continue to learn how to perform dynamic security tasks themselves.

As an example, new drivers who learn exclusively on self-driving vehicles will never learn the importance of manual reaction time in the face of potential disaster. The ‘muscle memory’ built up by seasoned drivers helps them respond in real time to changing conditions. Drivers who never learn this critical skill could pose a serious risk when they have to navigate an incident themselves. The same risk could apply to the ASOC. If an AI-powered system requests a human to intervene during a crisis, newer analysts may not be capable of competently taking over for the system if they have not mastered security fundamentals.

One thing that is clear is that AI will set new benchmarks in three key areas: Speed, Expertise, and Volume. The typical tiered structure (Tier 1, Tier 2, etc.) within today’s SOC is changing and will soon become obsolete. With this level of autonomy, security analysts will not be needed for day-to-day operations and will be able to focus on higher-level strategic planning, research, and other tasks unrelated to immediate threat management.

