2024-7-10 17:39:4 Author: securityboulevard.com (view original)

The swift growth in digital authentication services offers governments and organizations the chance to enhance user experience, cut operational costs and unlock other economic benefits. However, transitioning services online is not without its hurdles. Chief among these is the need for organizations to develop reliable techniques to verify that people remotely accessing services are indeed who they claim to be. Understanding the structure of biometric attacks is important for assessing risks and ensuring that security measures are both adaptive and strong. It is also fundamental to crafting regulations and standards based on solid evidence.

Old-School Verification Methods are Failing

Traditional verification methods, like OTP (one-time password) authentication, have become commonplace due to the fast pace of digitalization.

Knowledge-based questions (like asking for your mother’s maiden name or pet’s name) are not always convenient for users. They have also been compromised by large-scale data breaches and the easy availability of personal information on social media.

The rapid growth of the internet has led to a huge increase in the number and variety of services that were once only available in secure, narrow-sphere settings. The major issue is that these services attract both legitimate users and cybercriminals.

As more services move online, successful cybercriminals find greater profit opportunities. Consequently, authentication and verification technologies that were once effective can no longer provide the level of identity assurance organizations require for high-risk scenarios.

Cybercriminals Bypass New Tech Too

The increasing ability of cybercriminals to bypass traditional authentication methods, like OTP, to commit fraud has driven the widespread adoption of technologies that were previously considered secure.

Biometrics like iris scans, retina scans and fingerprints can effectively verify someone’s identity remotely. However, these techniques have a drawback: They do not connect a digital identity to an actual person in the real world.

Government-issued IDs, such as passports, do not always include chips. Additionally, fingerprints and retina scans require specialized sensors that are not built into every device, making them neither easily accessible nor inclusive.

Facial authentication technologies have become a convenient and secure way for users to verify their identity by using the front-facing camera on their laptop, smartphone or tablet. Governments, banks and healthcare providers have been leading the adoption of face verification technology.

As face verification technology progresses and becomes more widespread, cybercriminals focus on finding new sophisticated methods to bypass these security systems. Consequently, organizations need to remember that not all face verification technologies are equipped to handle the rapidly evolving threat landscape. These technologies vary in their resilience, security and ability to adapt to new threats. Face verification providers should analyze real-world data on biometric threats through active threat intelligence and management processes.

Presentation and Injection Attacks

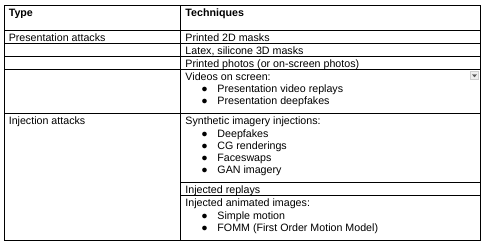

Threats have evolved significantly. Even the most advanced machine-learning computer vision systems are now facing challenges from new types of threats. When evaluating the threats to biometric systems, it is important to distinguish between presentation attacks and injection attacks.

• Presentation Attacks

Presentation attacks involve using artifacts like deepfake videos displayed on a screen, silicone masks, or printed photos to fool a smartphone or another authentication system. These attacks typically leave clues in the imagery from the presentation process, such as patterns from a screen or color discrepancies in printed images.

Presentation attacks and their counterpart, Presentation Attack Detection (PAD), have a long history. Although attack methods have evolved, presentation attacks are well-studied and can often be detected.

• Injection Attacks

Injection attacks, on the other hand, do not require presenting any object to a smartphone or laptop. Instead, images are inserted into the video stream using hacking tools, virtual cameras, or emulators.

The injected data can be a replay video, an image, or a deepfake, depending on which method the attacker believes will best bypass the specific security system. If a biometric system only needs a static image, an attacker might inject a stolen photo. However, if the system demands specific actions from the user, the attacker might inject a deepfake video that imitates those required actions.

Injection attacks differ from presentation attacks and require distinct methods for detection and prevention. Detection can be achieved by identifying an injection, such as by examining the metadata or other information from the device or by identifying that the image has been altered or synthesized in some way.
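The metadata-based detection mentioned above can be illustrated with a minimal Python sketch. It assumes the client reports a camera device label as a string and flags labels that resemble known virtual-camera software. The function name and the hint list are hypothetical and purely illustrative. A spoofed label would pass this check, so it can only ever be one signal among several.

```python
# Hypothetical substrings that tend to appear in virtual-camera device labels.
# Illustrative only; a real deployment would maintain and update its own list.
VIRTUAL_CAMERA_HINTS = ("virtual", "manycam", "droidcam")


def looks_like_virtual_camera(device_label: str) -> bool:
    """Return True if the reported camera label contains a virtual-camera hint."""
    label = device_label.lower()
    return any(hint in label for hint in VIRTUAL_CAMERA_HINTS)


if __name__ == "__main__":
    for label in ("OBS Virtual Camera", "FaceTime HD Camera"):
        print(label, "->", looks_like_virtual_camera(label))
```

A simple string match like this is cheap to run but trivially evaded by renaming the device, which is why image-level analysis is described as the complementary approach.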

Injection attacks are much more scalable than presentation attacks because they do not require manually creating or presenting a physical object. Instead, they involve developing a highly automated attack system. This scalability is why digital injection attacks are becoming increasingly common and now occur more frequently than persistent presentation attacks.

The recent surge in tools for creating and injecting highly sophisticated synthetic imagery has accelerated the evolution of injection attacks. Furthermore, since detecting injection attacks requires fundamentally different techniques compared to detecting presentation attacks, many biometric systems are not prepared to defend against this type of threat.

Unlike PAD, there are no universally accepted standards for detecting digital injection attacks. This gap gives cybercriminals an advantage as defenses struggle to keep up with new types of attacks.

Emulator-Driven Problems Rising

Cybercriminals are improving their skills to carry out digital injection attacks on various web and mobile platforms using emulators and metadata spoofing. The use of emulators by threat actors has surged by 353%. An emulator is a software tool that replicates a user device, like a mobile phone. This new attack vector is becoming more prevalent, with cybercriminals increasingly targeting iOS and Android mobile platforms.

Mobile platforms were considered more secure for biometric verification compared to web platforms due to their enhanced functionality and the superior security features offered by native apps.

Previously, cybercriminals targeted desktop platforms because they were the easiest to compromise. However, with the growing availability of attack tools and emulators, the threat has expanded to include mobile web and native platforms. This change indicates a blend of criminal expertise and suggests that a broader array of tools is now accessible on the dark web for versatile attackers.

This rise is also linked to the growing practice of cybercriminals spoofing metadata to hide the origins of their attacks. When metadata such as operating systems, user agents and camera models is manipulated, attackers can bypass most common injection detection methods, making it harder to track and block their attacks.

Increasing use of metadata spoofing by cybercriminals means that organizations can no longer depend on device data alone. Instead, the technology behind image-based verification must quickly evolve to address this vulnerability.
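To make the limits of device data concrete, here is a minimal Python sketch of a metadata consistency check. It assumes the server receives a user agent string plus two separately reported client values: a platform string and a touch-point count. The `DeviceClaim` type, its field names and the two rules are hypothetical. The sketch catches a careless spoof where the signals disagree, but an attacker who spoofs every field consistently would pass.

```python
from dataclasses import dataclass


@dataclass
class DeviceClaim:
    """Device metadata as reported by the client (all fields are spoofable)."""
    user_agent: str
    platform: str          # hypothetical: OS string reported separately from the UA
    max_touch_points: int  # hypothetical: number of touch points the client reports


def inconsistencies(claim: DeviceClaim) -> list[str]:
    """Return human-readable findings where the reported signals disagree."""
    findings = []
    ua = claim.user_agent.lower()
    claims_mobile = "iphone" in ua or "android" in ua

    # A phone without touch support suggests an emulator or a desktop browser.
    if claims_mobile and claim.max_touch_points == 0:
        findings.append("mobile user agent but no touch support")

    # An iPhone user agent should not arrive with a Windows platform string.
    if "iphone" in ua and claim.platform.lower().startswith("win"):
        findings.append("iPhone user agent with a Windows platform string")

    return findings


if __name__ == "__main__":
    spoofed = DeviceClaim(
        user_agent="Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X)",
        platform="Win32",
        max_touch_points=0,
    )
    print(inconsistencies(spoofed))
```

An empty result here means only that the metadata is self-consistent, not that the device is genuine, which is the reason the imagery itself also has to be examined.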

All organizations need to recognize that mobile platforms are significant points of vulnerability. Businesses along with biometric providers should employ a combination of technology, skilled personnel and effective processes to monitor traffic in real-time and detect various attack patterns across different regions, devices, and platforms.

Organizations should remember that authentication platforms can also have vulnerabilities that are exploitable in traditional ways, such as through flaws in their code, without any presentation or injection attack. To protect against such threats, businesses must proactively find and remediate vulnerabilities, relying on tools such as external attack surface management platforms to continuously monitor their assets and alert them to new issues.

Next-Gen Face Swap Attacks

Face swaps involve creating synthetic imagery by combining elements from two different faces. A cybercriminal merges the motion of one face with the appearance of another to produce a unique synthetic video in 3D format. This output mimics the genuine facial features of an individual. If the technology used for “liveness” verification lacks the latest defense features, this synthetic imagery can accurately match a person’s government-issued ID photo and fool the system.

Synthetic injection attacks come in many forms, including 2D image face swaps, video face swaps, and image-to-video deepfakes. Image-to-video deepfakes animate a 2D image so that it appears to move in 3D, altering backgrounds and creating facial expressions such as blinks and smiles. Face swaps use two inputs: a live stream or an existing video, and a different identity that is superimposed over it.

To confirm that the person authenticating is genuinely present and not a spoof, “active” authentication asks the user to perform actions like smiling, blinking, turning their head, or saying something. In contrast, passive authentication does not require any user actions. Instead, it detects attacks by analyzing image details.
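The "active" approach described above can be sketched as a simple challenge-response in Python. This is a minimal illustration, not any vendor's method. The action names are hypothetical, and the `observed` list is assumed to come from a separate vision model that is not shown. Randomizing the sequence means a pre-recorded replay is unlikely to match, although a real-time face swap that follows the prompts could still pass.

```python
import secrets

# Hypothetical set of actions the user can be asked to perform.
ACTIONS = ("smile", "blink", "turn_head_left", "turn_head_right")


def new_challenge(length: int = 3) -> list[str]:
    """Pick a random action sequence using a cryptographically strong RNG."""
    return [secrets.choice(ACTIONS) for _ in range(length)]


def response_matches(challenge: list[str], observed: list[str]) -> bool:
    """Return True only if the observed actions equal the challenge, in order."""
    return observed == challenge


if __name__ == "__main__":
    challenge = new_challenge()
    print("Please perform:", ", ".join(challenge))
    # `observed` would come from an action-recognition model in a real system.
    observed = list(challenge)
    print("Accepted:", response_matches(challenge, observed))
```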

Cybercriminals have advanced their techniques and tools to include highly sophisticated real-time 3D face swaps, capable of fooling both active and passive liveness detection systems.

Capabilities have changed. Now, low-skilled cybercriminals can access publicly available tools from code repository websites, enabling them to launch high-end synthetic attacks with minimal expertise. At the same time, the rise of a Crime-as-a-Service economy allows cybercriminals to sell, buy, and exchange attack techniques on the dark web. A Europol report highlighted that deepfakes-as-a-service has enabled organizations to create customized deepfakes on demand.

This kind of attack does not require cybercriminals to fake documents. They can use real documents to create convincingly realistic 3D video outputs that trick liveness detection systems. As synthetic imagery becomes more sophisticated, security teams must constantly learn by simulating similar attacks, often using automated penetration testing or red team exercises, and update their defenses accordingly.

Cybercriminals Exploit Motion Verification at Scale

Hackers attempt to execute automated, large-scale attacks targeting various systems simultaneously. Most of these attempts are motion-based digital injections. The typical motions used are blinking, smiling, nodding and frowning. This attack strategy is designed to simultaneously bypass systems requiring motions by overwhelming platforms and initiating hundreds of verification attempts.

When attackers bypass an active authentication system, they often replicate the attack on a larger scale to increase their chances of breaching other systems. A large-scale attack indicates that the method has been successful in other environments and is now being rapidly and indiscriminately deployed across multiple systems and geographical clusters.
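One way to spot the bursts of verification attempts described in this section is a sliding-window counter, sketched below in Python. The class name, the thresholds and the choice of grouping key are all assumptions. The key could be, for example, a device fingerprint or a particular motion sequence seen across many sessions.

```python
from collections import defaultdict, deque


class BurstDetector:
    """Flag a key that exceeds `max_attempts` within `window_seconds`."""

    def __init__(self, max_attempts: int, window_seconds: float):
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # key -> timestamps of recent attempts

    def record(self, key: str, timestamp: float) -> bool:
        """Record one attempt; return True if the key is now over the limit."""
        attempts = self._events[key]
        attempts.append(timestamp)
        # Drop attempts that have fallen out of the time window.
        while attempts and timestamp - attempts[0] > self.window_seconds:
            attempts.popleft()
        return len(attempts) > self.max_attempts


if __name__ == "__main__":
    detector = BurstDetector(max_attempts=3, window_seconds=60)
    for t in (0.0, 1.0, 2.0, 3.0):
        print(t, detector.record("blink-smile-nod", t))
```

Timestamps are passed in explicitly so the logic can be replayed over historical logs as well as applied to live traffic.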

Future Trends

As facial recognition technology becomes more popular and organizations see its benefits for authentication, attackers will develop increasingly sophisticated methods to bypass these systems. Deepfake technology is already so easily accessible that even the most under-resourced cyberattacks can inflict serious damage. There has been a staggering 3,000% increase in phishing and fraud incidents involving deepfakes. The next wave of deepfake attacks will become even more widespread.

More advanced cybercriminals have also adopted cutting-edge techniques, leading to an increase in face swaps and 3D deepfakes. These methods exploit security weaknesses and bypass security protocols in organizations globally. Organizations that have not secured their systems against deepfakes will need to leverage generative business intelligence to do so.

As more security measures rely on device data, cybercriminals exploit that reliance for theft and other malicious activities, and device spoofing is increasing rapidly. Criminals are predicted to enhance their ability to manipulate metadata to mask their attacks. For example, they could make a laptop look like a mobile device to fool government systems and evade corporate security protocols. Attacks targeting mobile web, iOS and Android platforms will become as widespread as those on desktops. Organizations, especially those relying on the mobile web, will acknowledge the flaws in their device data and transition authentication services to the cloud.

Conclusion

Organizations face the challenge of building and maintaining trust with millions of remote users every day. Various verification/authentication technologies have been created to support this effort, including PINs, passwords, security questions and OTPs sent via SMS.

However, with the rapid expansion of verification ecosystems, criminal activities have surged at an even faster rate. This has made widely used verification technologies increasingly ineffective and prone to failure.

Facial verification offers a convenient and secure alternative to forgotten or stolen PINs and passwords. It surpasses knowledge-based methods in security and cannot be shared or stolen. However, using biometrics without understanding the changing threat landscape can create a significant blind spot and increase risk exposure.

Grasping how biometric attacks work is crucial for organizations to make informed decisions based on actual threat intelligence, ensuring their technology provides the necessary level of security.

Protecting against biometric threats is similar to other cybersecurity measures. High-risk entities, such as governments and financial institutions, must use appropriate technologies tailored to user actions and prevailing threats.
