2024-07-04 02:00:19 Author: hackernoon.com

Authors:

(1) Clement Lhos, Legs and Walking Lab of Shirley Ryan AbilityLab, Chicago, IL, USA;

(2) Emek Barış Küçüktabak, Legs and Walking Lab of Shirley Ryan AbilityLab, Chicago, IL, USA and Center for Robotics and Biosystems, Northwestern University, Evanston, IL, USA;

(3) Lorenzo Vianello, Legs and Walking Lab of Shirley Ryan AbilityLab, Chicago, IL, USA;

(4) Lorenzo Amato, Legs and Walking Lab of Shirley Ryan AbilityLab, Chicago, IL, USA and The Biorobotics Institute, Scuola Superiore Sant’Anna, 56025 Pontedera, Italy and Department of Excellence in Robotics & AI, Scuola Superiore Sant’Anna, 56127 Pisa, Italy;

(5) Matthew R. Short, Legs and Walking Lab of Shirley Ryan AbilityLab, Chicago, IL, USA and Department of Biomedical Engineering, Northwestern University, Evanston, IL, USA;

(6) Kevin Lynch, Center for Robotics and Biosystems, Northwestern University, Evanston, IL, USA;

(7) Jose L. Pons, Legs and Walking Lab of Shirley Ryan AbilityLab, Chicago, IL, USA, Center for Robotics and Biosystems, Northwestern University, Evanston, IL, USA and Department of Biomedical Engineering, Northwestern University, Evanston, IL, USA.

Table of Links

V. Conclusion, Acknowledgment, and References

IV. DISCUSSION

In this paper, we evaluated a deep-learning model to predict the weight distribution (i.e., stance interpolation factor) of a user wearing a lower-limb exoskeleton during several walking conditions; we compared the exoskeleton controller performance when this stance interpolation factor was estimated with our deep-learning model (α̂) and measured with ground truth values (treadmill force plates or FSR sensor pads, α). This work highlights the viability and limitations of using deep-learning predictions to detect changes in the gait state using only joint kinematics information without ground reaction sensors.

Utilizing a history of kinematic data was shown to enhance the model’s accuracy (Fig. 3 and Tab. I) in comparison to using instantaneous values, as seen in a similar study [14]. These findings show the advantage of utilizing additional temporal data from previous time steps, enhancing the network’s performance in both training and testing phases. Importantly, including additional data did not significantly alter the model’s prediction speed; we observed comparable prediction times when using a history of kinematic data with respect to the instantaneous value predictions.
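The difference between instantaneous and history-based inputs can be illustrated with a small buffer. This is a minimal sketch, not the implementation used in the study: the class name `KinematicHistory`, the window length, the feature count, and the zero pre-fill are all assumptions chosen for illustration.

```python
from collections import deque


class KinematicHistory:
    """Fixed-length buffer of the most recent kinematic samples (hypothetical helper)."""

    def __init__(self, window, n_features):
        self.n_features = n_features
        # Pre-fill with zeros (an assumption) so a full window exists from the first step.
        self._buf = deque([[0.0] * n_features for _ in range(window)], maxlen=window)

    def push(self, sample):
        """Append the newest sample; the oldest one is dropped automatically."""
        if len(sample) != self.n_features:
            raise ValueError("unexpected number of features")
        self._buf.append(list(sample))

    def instantaneous(self):
        """Latest sample only (the no-history model input)."""
        return list(self._buf[-1])

    def flattened(self):
        """Oldest-to-newest samples concatenated into one model input vector."""
        return [x for row in self._buf for x in row]
```

At each control step the newest joint angles would be pushed, and either `instantaneous()` or `flattened()` passed to the network, depending on which input variant is being evaluated.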

As a result, the model was implemented to run in real time. This is crucial for its use in the control of an exoskeleton, as each prediction must complete within one period of the main control loop. An average prediction time of 0.57 ms ensured the real-time usability of the system, as the main control loop period on lower-limb exoskeletons is usually significantly longer than 1 ms (e.g., 3 ms for the exoskeleton controller in our study). It is noteworthy that, without TensorFlow Lite (i.e., with the classic TensorFlow library), the average prediction time with a history of kinematic data is 67.1 ± 13.5 ms, which is too slow for most real-time applications. Furthermore, the proposed method provides accurate predictions of the stance interpolation factor for previous and unseen users (Fig. 3 and Tab. I), demonstrating another aspect of its usability. Requiring training data for every exoskeleton user is not convenient or realistic, particularly in physical rehabilitation, where a patient's time spent receiving therapy must be prioritized.
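The timing argument can be checked with a simple budget test. The sketch below is illustrative only: `measure_latency_ms` and `fits_control_period` are hypothetical helpers, `predict` stands for any inference callable, and the `n_sigma` safety margin is an assumption rather than a criterion taken from the study.

```python
import statistics
import time


def measure_latency_ms(predict, sample, n_runs=200):
    """Return (mean, stdev) of wall-clock prediction time in milliseconds."""
    times = []
    for _ in range(n_runs):
        start = time.perf_counter()
        predict(sample)
        times.append((time.perf_counter() - start) * 1e3)
    return statistics.mean(times), statistics.stdev(times)


def fits_control_period(mean_ms, std_ms, period_ms, n_sigma=3.0):
    """True if mean + n_sigma * std stays below the control-loop period."""
    return mean_ms + n_sigma * std_ms < period_ms


# Figures quoted in the text, checked against the 3 ms control period.
# The spread of the 0.57 ms figure is not given here, so 0.0 is a placeholder.
print(fits_control_period(0.57, 0.0, 3.0))    # TensorFlow Lite
print(fits_control_period(67.1, 13.5, 3.0))   # classic TensorFlow
```

Under these assumptions the 0.57 ms prediction fits inside the 3 ms period, while the 67.1 ms prediction does not.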

In the context of machine learning for robotic control, using the predictions in a closed loop can affect the performance due to error propagation in real time [20]. Therefore, it was critical to evaluate the closed-loop performance of our system, using several conditions. We demonstrated that the deep-learning approach produces a similar stance interpolation factor compared to treadmill force plates during walking with haptic transparent control; these findings generalize for walking speeds between 0.14 and 0.47 m/s (Fig. 4). To

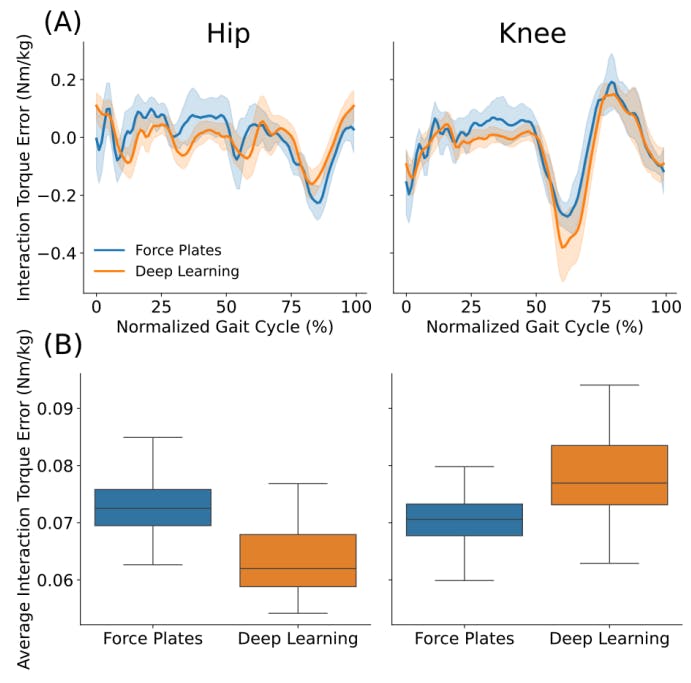

characterize the effect of the deep-learning predictions on the performance of the exoskeleton controller, we assessed both haptic rendering of nonzero impedance and haptic transparent modes during treadmill walking. Moreover, we evaluated the extent to which these results generalize to overground walking. During treadmill walking, mean interaction torque errors were similar between deep-learning predictions and treadmill force plate measurements. Evaluating the performance of the controller during more naturalistic, overground walking, the deep-learning predictions also facilitated similar interaction torque error profiles (Fig. 7A) and hip and knee kinematics (Fig. 7B).

While the overall performance of the exoskeleton controller was comparable when implementing our deep-learning predictions and ground reaction sensor values, we did observe a delay-like effect in the stance interpolation factor estimation, which likely resulted in some performance discrepancies at specific phases of the gait cycle. Specifically, we observed a longer stance duration when walking with the deep-learning predictions at slower speeds (Fig. 4); this was associated with a longer transition between left and right single stance (i.e., double stance to swing).
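One way such a delay-like effect could be quantified offline is to find the sample shift that best aligns the predicted signal with the measured one. The following is a minimal sketch under stated assumptions: `estimate_lag` is a hypothetical helper, both signals are assumed to be sampled at the same rate, and the search only considers the case where the prediction lags the measurement. It is not the analysis used in the study.

```python
def estimate_lag(reference, estimate, max_lag):
    """Return the shift k (in samples, 0..max_lag) that minimizes the mean
    squared error between estimate[t + k] and reference[t].
    k > 0 means the estimate lags the reference by k samples."""
    best_k, best_err = 0, float("inf")
    for k in range(max_lag + 1):
        n = len(reference) - k
        if n <= 0:
            break
        err = sum((estimate[t + k] - reference[t]) ** 2 for t in range(n)) / n
        if err < best_err:
            best_k, best_err = k, err
    return best_k
```

Multiplying the returned shift by the sampling period of the logged signals gives the lag in time units.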

During treadmill and overground walking, at the knee joint, we observed higher interaction torque error at the beginning of the swing phase (around 60-70% of the cycle, Fig. 6A) with the deep-learning predictions. This increase in interaction torque error was more noticeable during overground walking, and also observed at the hip joint. In this condition, the use of crutches may have promoted additional lateral movement which was not measured by the exoskeleton [21].
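A phase-specific comparison of this kind can be sketched by averaging the absolute error over a window of the gait cycle. The helper name `mean_abs_error_in_phase` and the example window are assumptions for illustration; how the gait phase and torque error are obtained is outside the scope of the sketch.

```python
def mean_abs_error_in_phase(phase_pct, error, lo, hi):
    """Mean absolute error over samples whose gait phase (0-100 %) lies in [lo, hi)."""
    selected = [abs(e) for p, e in zip(phase_pct, error) if lo <= p < hi]
    if not selected:
        raise ValueError("no samples in the requested phase window")
    return sum(selected) / len(selected)
```

Calling it with `lo=60, hi=70` on logs from each condition would give one number per condition for the early-swing window discussed above.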

For both treadmill and overground walking, the discrepancies in interaction torque errors relative to their respective ground truths could be due to the deep-learning model requiring users to change their kinematics before the stance interpolation factor changes. This is different from using force sensors, which can detect changes in weight distribution independent of kinematics recorded by the exoskeleton. One way to improve the detection of changes in weight distribution in the deep-learning model is to incorporate the frontal plane IMU angle of the backpack in addition to the sagittal plane angles from the backpack and joint encoders.
