2024-06-19 22:00:19 Author: hackernoon.com


by Auto Encoder: How to Ignore the Signal Noise, June 19th, 2024

Too Long; Didn't Read

Reparameterising value and projection parameters in linear layers via the duality between downweighted residuals and restricted updates optimizes learning rates and model performance.

Authors:

(1) Bobby He, Department of Computer Science, ETH Zurich (Correspondence to: [email protected].);

(2) Thomas Hofmann, Department of Computer Science, ETH Zurich.

Table of Links

Simplifying Transformer Blocks

Discussion, Reproducibility Statement, Acknowledgements and References

A Duality Between Downweighted Residual and Restricting Updates In Linear Layers

B Block Layouts

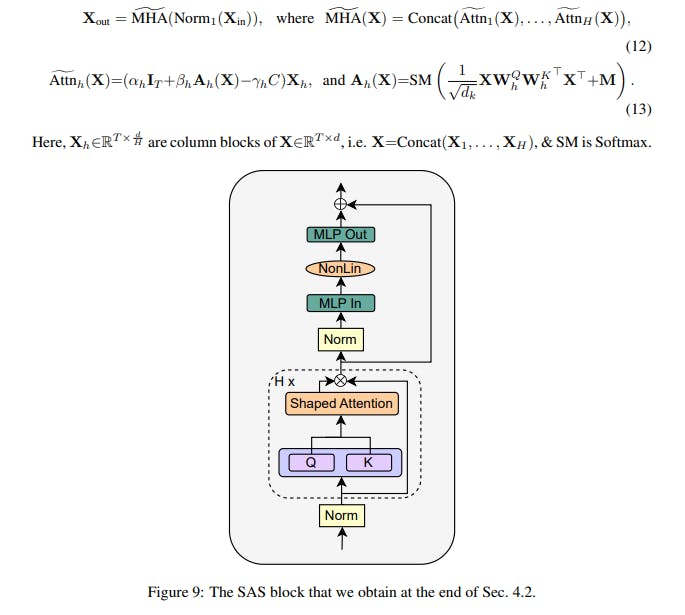

In Fig. 9 and Fig. 10 we show the layouts of our SAS block (Sec. 4.2) and parallel SAS-P block (Sec. 4.3). These are the equivalent plots to the layouts in Fig. 1. Mathematically, our SAS attention sub-block computes (in the notation of Eq. (2)):
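The equation referenced here is not reproduced in this copy. The following NumPy code is only an illustrative sketch, not the authors' formula or code. It assumes a shaped-attention form in which each head applies `alpha * I + beta * A(X) - gamma * C` directly to a column block of the normalised input, with no value or projection matrices, and in which `C` is the causal attention matrix obtained when all query-key logits are zero. The function names, the RMS-style normalisation, and the parameter shapes are assumptions made for this example.

```python
import numpy as np

def softmax(z, axis=-1):
    # Numerically stable softmax; -inf entries become exact zeros.
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def rms_norm(x, eps=1e-6):
    # Assumed normalisation layer (hypothetical choice for this sketch).
    return x / np.sqrt((x ** 2).mean(axis=-1, keepdims=True) + eps)

def sas_attention(x, w_q, w_k, alpha, beta, gamma):
    """Sketch of a shaped-attention sub-block without value/projection weights.

    x:      (T, d) input sequence
    w_q:    (H, d, d_k) per-head query weights
    w_k:    (H, d, d_k) per-head key weights
    alpha, beta, gamma: (H,) per-head scalars
    """
    T, d = x.shape
    H, _, d_k = w_q.shape
    assert d % H == 0, "d must be divisible by the number of heads"

    x = rms_norm(x)
    # Column blocks X_h of the normalised input, each of shape (T, d / H).
    blocks = np.split(x, H, axis=1)

    # Causal mask: 0 where attention is allowed, -inf elsewhere.
    mask = np.where(np.tril(np.ones((T, T), dtype=bool)), 0.0, -np.inf)
    # Assumed centring matrix: attention produced by all-zero logits.
    centre = softmax(mask)

    outputs = []
    for h in range(H):
        logits = (x @ w_q[h]) @ (x @ w_k[h]).T / np.sqrt(d_k)
        attn = softmax(logits + mask)
        shaped = alpha[h] * np.eye(T) + beta[h] * attn - gamma[h] * centre
        # No value matrix: the shaped attention acts on X_h directly.
        outputs.append(shaped @ blocks[h])

    # No output projection: heads are simply concatenated.
    return np.concatenate(outputs, axis=1)
```

In this sketch, setting the query weights to zero and `alpha = beta = gamma = 1` makes the attention and centring terms cancel, so the sub-block returns the normalised input unchanged.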

This paper is available on arXiv under a CC 4.0 license.
