Source: isc.sans.edu, 2024-06-13 09:43:51

[This is a Guest Diary by Kaela Reed, an ISC intern as part of the SANS.edu BACS program]

Viewing logs from the command line can make it difficult to extract meaningful data if you’re unfamiliar with the utilities. Although there is a learning curve to using command-line utilities to sort through logs, they are efficient, flexible, and easy to incorporate into scripts. Using tools like jq, sort, uniq, tr, and wc, we can extract details from logs to gather statistics and build context around attacks.

What is JSON?

JavaScript Object Notation (JSON) is a lightweight, structured data-interchange format [1]. It is a common format for logs and APIs because it’s easy for machines to parse. Its simple structure also makes it easy for humans to read, especially with a utility called jq (JSON Query), which we will revisit after covering the basics of JSON.

Objects

JSON uses curly braces to hold “objects,” which contain unordered key/value pairs [2]. Each key is separated from its value by a colon, and the pairs are separated from each other by commas. You might recognize this format if you’ve ever decoded the header of a JWT (JSON Web Token):

{
  "alg": "HS256",
  "typ": "JWT"
}

Arrays

JSON also uses ordered lists called “arrays,” which can be contained within objects:

{
  "method": "POST",
  "url": "/aws/credentials",
  "useragent": [
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.129 Safari/537.36"
  ]
}

JQ to the Rescue

The jq tool is a free, open-source JSON processor written in portable C with no runtime dependencies. It makes parsing and filtering JSON logs easy, it is already packaged in major Linux distributions, and it can also be downloaded directly [3].

Extracting Data from Logs

If we read a JSON file with the cat utility in Linux, the output can be difficult to sort through.

This is where jq comes in handy! Using jq, we can interact with the data from JSON logs in a meaningful way.

To read a JSON log with jq, we can either cat the file and pipe it through jq, or use the command:

jq . <filename>
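A minimal sketch of both forms, assuming jq is installed. The two records below are hypothetical (one JSON object per line, with made-up values and RFC 5737 documentation addresses), not taken from the honeypot log. The `.` filter is jq’s identity filter, so each object is printed unchanged but indented, one key/value pair per line.

```shell
# Hypothetical sample log: one compact JSON object per line (made-up data).
log=$(mktemp)
trap 'rm -f "$log"' EXIT
cat > "$log" <<'EOF'
{"time":"2024-04-20T10:00:01Z","sip":"192.0.2.10","method":"GET","url":"/admin"}
{"time":"2024-04-20T10:00:02Z","sip":"198.51.100.7","method":"POST","url":"/aws/credentials"}
EOF

# Pipe the file into jq...
cat "$log" | jq .
# ...or pass the filename directly; both pretty-print every object.
jq . "$log"
```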

Using jq with JSON logs makes the data easier to read. However, simply printing the log to the screen isn’t enough to extract meaningful information from large log files with thousands of records or more.

Finding Keys

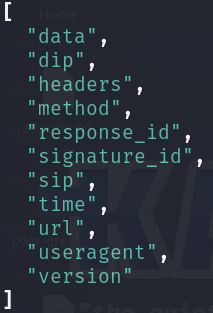

Recall that JSON consists of key/value pairs. We can list the keys in each record of a JSON file to help us extract specific information later:

cat logs/web/webhoneypot-2024-04-20.json | jq 'keys'
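Because `keys` runs once per record, a log with thousands of records prints thousands of mostly identical arrays. A minimal sketch of one way to get a single de-duplicated list, using hypothetical sample records: `keys[]` emits each key name on its own line and `sort -u` collapses the repeats. Note that `keys` returns names in sorted order, not in file order.

```shell
# Hypothetical records (made-up data); the second has an extra "useragent" field.
log=$(mktemp)
trap 'rm -f "$log"' EXIT
cat > "$log" <<'EOF'
{"time":"2024-04-20T10:00:01Z","sip":"192.0.2.10","url":"/admin"}
{"time":"2024-04-20T10:00:02Z","sip":"198.51.100.7","url":"/login","useragent":["curl/8.0"]}
EOF

# One sorted array of key names per record:
jq -c 'keys' "$log"
# Every key name once, across all records:
jq -r 'keys[]' "$log" | sort -u
```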

Source IPs

There’s a key named “sip” that stores source IP addresses. We can filter data using dot notation, .<field name> [4], so .sip extracts the source IPs. Let’s list every source IP in the log file with jq, pipe the output to sort -u to keep each address only once, and use tr to remove the quotation marks:

cat honeypot/logs/web/webhoneypot-2024-04-20.json | jq '.sip' | sort -u | tr -d "\""

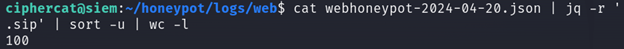

Even better, we could use jq -r for raw output instead of using the tr utility to get rid of the quotation marks.

cat honeypot/logs/web/webhoneypot-2024-04-20.json | jq -r '.sip' | sort -u
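The two approaches above should produce the same list. A minimal sketch comparing them on a few hypothetical records (made-up RFC 5737 addresses), assuming jq is installed:

```shell
# Hypothetical records (made-up data); 192.0.2.10 appears twice.
log=$(mktemp)
trap 'rm -f "$log"' EXIT
cat > "$log" <<'EOF'
{"sip":"192.0.2.10","url":"/admin"}
{"sip":"198.51.100.7","url":"/login"}
{"sip":"192.0.2.10","url":"/.env"}
EOF

# Without -r, jq prints JSON strings with their quotes; tr deletes them.
jq '.sip' "$log" | sort -u | tr -d '"'
# With -r, jq writes the raw string, so tr is not needed.
jq -r '.sip' "$log" | sort -u
```

Both commands print the same two addresses, 192.0.2.10 and 198.51.100.7, each once.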

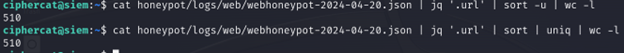

Piping the previous command to wc -l counts the lines, which tells us how many unique source IP addresses there are:

cat honeypot/logs/web/webhoneypot-2024-04-20.json | jq -r '.sip' | sort -u | wc -l
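jq can also do the de-duplication and counting itself. A minimal sketch on hypothetical records: `-s` (slurp) reads every record into a single array, `map(.sip)` pulls out the addresses, `unique` removes duplicates, and `length` counts what is left. Slurping holds the whole file in memory, so on very large logs the streaming `sort -u | wc -l` pipeline may be the lighter option.

```shell
# Hypothetical records (made-up data): two distinct source IPs.
log=$(mktemp)
trap 'rm -f "$log"' EXIT
cat > "$log" <<'EOF'
{"sip":"192.0.2.10","url":"/admin"}
{"sip":"198.51.100.7","url":"/login"}
{"sip":"192.0.2.10","url":"/.env"}
EOF

# Pipeline from the text: unique addresses, one per line, then count lines.
jq -r '.sip' "$log" | sort -u | wc -l
# Same count computed entirely inside jq.
jq -s 'map(.sip) | unique | length' "$log"
```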

Extracting URIs

URIs are stored in the field named "url". The following command prints every URI in the log on a separate line:

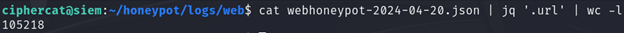

cat logs/web/webhoneypot-2024-04-20.json | jq '.url'

Piping the previous command to wc -l, we can count the number of URIs, which is 105,218. That’s a lot!

However, if we pipe the jq command to sort, we will see there are duplicate values. Many of the same URIs were visited multiple times and from multiple IP addresses.

To get a list of unique URIs without the duplicates, we can use the same method as with the source IPs: pipe the output through sort -u (or sort | uniq), then count the lines with wc -l.

We have 510 unique URIs visited!
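One detail worth knowing when choosing between `sort -u` and `uniq`: `uniq` only removes *adjacent* duplicate lines, so it has to come after `sort`. A minimal sketch on three hypothetical records:

```shell
# Hypothetical records (made-up data); "/admin" appears twice, not adjacent.
log=$(mktemp)
trap 'rm -f "$log"' EXIT
cat > "$log" <<'EOF'
{"sip":"192.0.2.10","url":"/admin"}
{"sip":"198.51.100.7","url":"/login"}
{"sip":"192.0.2.10","url":"/admin"}
EOF

# Unsorted input: the two "/admin" lines are not adjacent, so uniq keeps both.
jq -r '.url' "$log" | uniq | wc -l          # 3
# Sorting first groups the duplicates; both forms then give the unique count.
jq -r '.url' "$log" | sort | uniq | wc -l   # 2
jq -r '.url' "$log" | sort -u | wc -l       # 2
```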

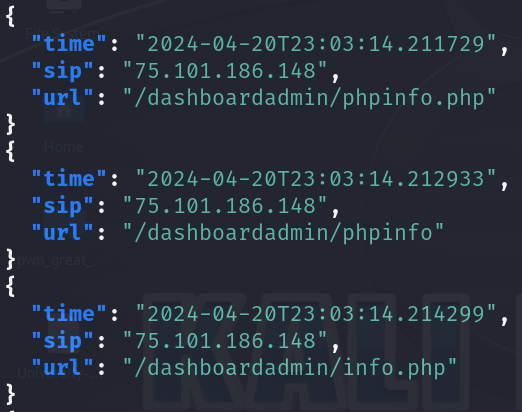

Extracting Multiple Elements

We can also select the records from a single source IP, extract several fields from each into a new object, and save the result to a file:

cat logs/web/webhoneypot-2024-04-20.json | jq 'select(.sip == "75.101.186.148") | {time, sip, url}' > dirb-attack.json
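A minimal sketch of the same filter on hypothetical records, with a made-up address standing in for the attacker IP. `select()` passes a record through only when its condition is true, and `{time, sip, url}` is jq shorthand for `{time: .time, sip: .sip, url: .url}`. The `-c` flag is an addition here that prints each new object on one line.

```shell
# Hypothetical records (made-up data).
log=$(mktemp)
trap 'rm -f "$log"' EXIT
cat > "$log" <<'EOF'
{"time":"2024-04-20T10:00:01Z","sip":"192.0.2.10","method":"GET","url":"/admin"}
{"time":"2024-04-20T10:00:02Z","sip":"198.51.100.7","method":"GET","url":"/login"}
{"time":"2024-04-20T10:00:03Z","sip":"192.0.2.10","method":"GET","url":"/backup"}
EOF

# Keep only requests from one source, reduced to three fields.
jq -c 'select(.sip == "192.0.2.10") | {time, sip, url}' "$log"
```

This prints two objects, one for `/admin` and one for `/backup`; the `method` field and the other source’s request are dropped.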

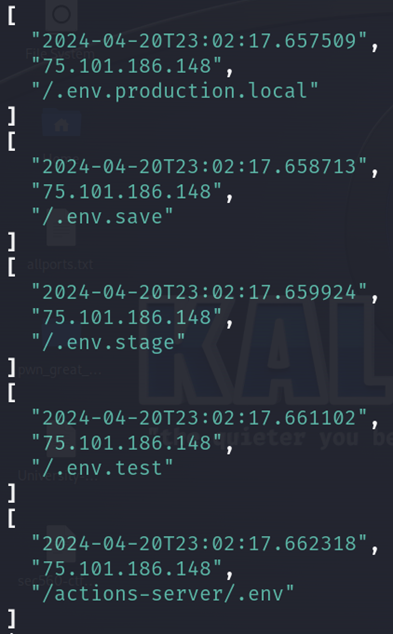

Alternative Ways with Arrays

Why did the programmer quit his job?

Because he didn’t get arrays!

With jq, we can also reshape the fields we extract. In the last example, we extracted multiple elements and placed them into objects; we could instead collect them into arrays:

cat honeypot/logs/web/webhoneypot-2024-04-20.json | jq 'select(.sip == "75.101.186.148") | [.time, .sip, .url]'

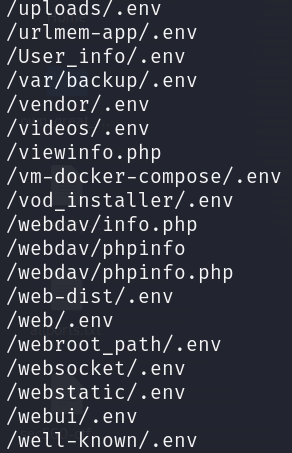

With arrays, we can access elements by index number. Indexing starts at 0, so the third element, which holds the URI, has an index of 2. We can then pipe the result to sort -u to list the unique URIs alphabetically:

cat honeypot/logs/web/webhoneypot-2024-04-20.json | jq 'select(.sip == "75.101.186.148") | [.time, .sip, .url]' | jq -r '.[2]' | sort -u
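The indexing can also happen inside the first jq program, which avoids starting a second jq process. A minimal sketch on hypothetical records; quoting the filter keeps the shell from treating `[2]` as a filename glob pattern.

```shell
# Hypothetical records (made-up data); "/admin" is requested twice.
log=$(mktemp)
trap 'rm -f "$log"' EXIT
cat > "$log" <<'EOF'
{"time":"2024-04-20T10:00:01Z","sip":"192.0.2.10","url":"/admin"}
{"time":"2024-04-20T10:00:02Z","sip":"198.51.100.7","url":"/login"}
{"time":"2024-04-20T10:00:03Z","sip":"192.0.2.10","url":"/backup"}
{"time":"2024-04-20T10:00:04Z","sip":"192.0.2.10","url":"/admin"}
EOF

# Build [time, sip, url] for each matching record, then take index 2,
# the third element (the URL); sort -u lists each URL once.
jq -r 'select(.sip == "192.0.2.10") | [.time, .sip, .url] | .[2]' "$log" | sort -u
```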

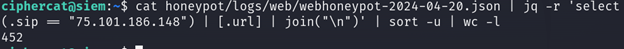

We can also grab only the URIs, sort them, and count how many unique URIs there are. Because [.url] is a one-element array, join("\n") simply returns the URI itself, so each URI ends up on its own line:

cat honeypot/logs/web/webhoneypot-2024-04-20.json | jq -r 'select(.sip == "75.101.186.148") | [.url] | join("\n")' | sort -u | wc -l

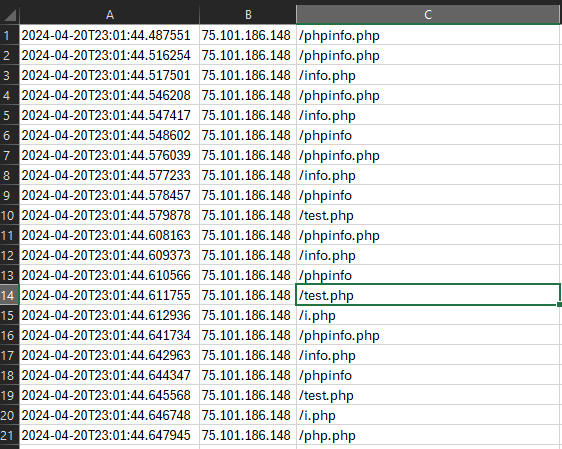

Converting to CSV Format

We can also convert selected JSON fields into CSV format [5]. Let’s take the "time", "sip", and "url" fields, write them to a CSV file, and open it in a spreadsheet editor.

cat honeypot/logs/web/webhoneypot-2024-04-20.json | jq -r 'select(.sip == "75.101.186.148") | [.time,.sip,.url] | @csv' > attack.csv
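A minimal sketch of the CSV conversion on hypothetical records. `@csv` formats an array as one CSV row, quoting strings and doubling any embedded double quotes; `-r` stops jq from wrapping that row in another layer of JSON quotes. The header row written by the first jq call (with `-n`, so it reads no input) is an addition, not part of the command above; spreadsheet editors can use it as column names.

```shell
# Hypothetical records (made-up data).
log=$(mktemp)
csv=$(mktemp)
trap 'rm -f "$log" "$csv"' EXIT
cat > "$log" <<'EOF'
{"time":"2024-04-20T10:00:01Z","sip":"192.0.2.10","url":"/admin"}
{"time":"2024-04-20T10:00:02Z","sip":"198.51.100.7","url":"/login"}
{"time":"2024-04-20T10:00:03Z","sip":"192.0.2.10","url":"/backup"}
EOF

{
  # Header row built from a literal array.
  jq -n -r '["time","sip","url"] | @csv'
  # One row per matching record.
  jq -r 'select(.sip == "192.0.2.10") | [.time, .sip, .url] | @csv' "$log"
} > "$csv"
cat "$csv"
```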

What is Directory Busting?

In the following example, we’re going to extract useful information from a directory busting attack that came from one specific IP address, but first, what is directory busting?

Directory busting (also called forced browsing) is a technique used to discover hidden webpages and files on a web server [6]. It can be done manually by sending HTTP requests for common page and file names; however, it is often performed with automated tools and scripts. Automation allows for hundreds or thousands of requests to different URIs in a short period of time. The goal of this kind of attack is to discover sensitive information, map the attack surface, and identify interesting pages (like administrative login pages) that could contain vulnerabilities.
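Because directory busting produces far more requests from one source than ordinary traffic, counting requests per source IP is one quick way to surface a candidate. A minimal sketch on hypothetical records; the diary does not show how the attacker IP was chosen, so this is only one possible approach. `uniq -c` prefixes each address with its count, and `sort -rn` puts the busiest source first.

```shell
# Hypothetical records (made-up data): one noisy source, one quiet one.
log=$(mktemp)
trap 'rm -f "$log"' EXIT
cat > "$log" <<'EOF'
{"sip":"192.0.2.10","url":"/admin"}
{"sip":"198.51.100.7","url":"/"}
{"sip":"192.0.2.10","url":"/backup"}
{"sip":"192.0.2.10","url":"/.git/config"}
EOF

# Requests per source IP, busiest first (uniq -c needs sorted input).
jq -r '.sip' "$log" | sort | uniq -c | sort -rn
```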

Finding How Many Unique URIs an Attacker Probed

Let’s first select all entries from the attacker’s IP and send the output to a separate JSON file:

cat webhoneypot-2024-04-20.json | jq 'select(.sip == "75.101.186.148")' > ip_75.101.186.148.json

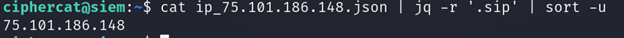

To verify that this worked, we can list all the source IPs in the new file and confirm that the logs come only from the attacker IP address 75.101.186.148:

cat ip_75.101.186.148.json | jq -r '.sip' | sort -u

Perfect! The new file only contains logs from the source IP of 75.101.186.148. If we use the wc utility, we see there are 104,196 entries from that one IP!
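One caveat when counting with wc: the file saved earlier was written by jq without `-c`, so each record is pretty-printed across several lines, and `wc -l` on the file itself counts lines, not records. A minimal sketch on hypothetical records showing two ways to count the records instead:

```shell
# Hypothetical records (made-up data): two from 192.0.2.10.
log=$(mktemp)
out=$(mktemp)
trap 'rm -f "$log" "$out"' EXIT
cat > "$log" <<'EOF'
{"time":"2024-04-20T10:00:01Z","sip":"192.0.2.10","method":"GET","url":"/admin"}
{"time":"2024-04-20T10:00:02Z","sip":"198.51.100.7","method":"GET","url":"/login"}
{"time":"2024-04-20T10:00:03Z","sip":"192.0.2.10","method":"GET","url":"/backup"}
EOF

# Same kind of filter as in the text; jq pretty-prints by default.
jq 'select(.sip == "192.0.2.10")' "$log" > "$out"

wc -l < "$out"           # 12: lines (6 per pretty-printed record)
jq -c . "$out" | wc -l   # 2: one compact line per record
jq -s 'length' "$out"    # 2: slurp the records into an array and count it
```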

Looking at the timestamps, these requests occurred within a very short window (roughly 5 minutes), which is typical of an automated attack like directory busting.
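The time window can also be computed rather than read off by eye. A minimal sketch, assuming (hypothetically; the real log’s time format may differ) that `.time` holds ISO 8601 UTC strings such as `2024-04-20T10:00:01Z`. Strings in that fixed-width format sort chronologically, so `min` and `max` give the first and last request, and jq’s `fromdateiso8601` converts them to epoch seconds so their difference is the duration.

```shell
# Hypothetical records (made-up data and an assumed time format).
log=$(mktemp)
trap 'rm -f "$log"' EXIT
cat > "$log" <<'EOF'
{"time":"2024-04-20T10:00:01Z","sip":"192.0.2.10","url":"/admin"}
{"time":"2024-04-20T10:02:30Z","sip":"192.0.2.10","url":"/backup"}
{"time":"2024-04-20T10:04:50Z","sip":"192.0.2.10","url":"/.env"}
EOF

# First and last timestamps (lexicographic min/max of the strings).
jq -r -s 'map(.time) | min, max' "$log"
# Duration in seconds between the first and last request.
jq -s 'map(.time | fromdateiso8601) | max - min' "$log"
```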

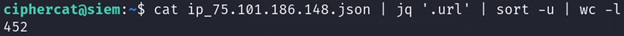

Let’s pipe the URIs through sort -u, then count how many different URIs this attacker probed:

cat ip_75.101.186.148.json | jq '.url' | sort -u | wc -l

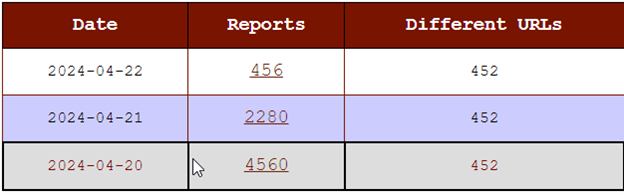

The attacker IP 75.101.186.148 probed 452 unique URIs on the web server, a number consistent with the Internet Storm Center’s report on the attacker IP [7]. Although directory busting can be done with brute-force techniques, it is usually performed as a dictionary attack using a wordlist. The threat actor has been reported multiple times and has probed the same number of unique URLs each time, so the attacker is likely reusing the same wordlist for the directory busting attack.

The previous commands in the directory busting scenario were run separately, but could have been performed with one command to achieve the same result:

cat honeypot/logs/web/webhoneypot-2024-04-20.json | jq 'select(.sip == "75.101.186.148") | (.url)' | sort -u | wc -l
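The same one-liner can also be written entirely in jq. A minimal sketch on hypothetical records: with `-n`, the `inputs` builtin streams every record, the array constructor collects only the selected URLs, and `unique | length` takes the place of `sort -u | wc -l`.

```shell
# Hypothetical records (made-up data); "/admin" is requested twice.
log=$(mktemp)
trap 'rm -f "$log"' EXIT
cat > "$log" <<'EOF'
{"sip":"192.0.2.10","url":"/admin"}
{"sip":"192.0.2.10","url":"/backup"}
{"sip":"198.51.100.7","url":"/login"}
{"sip":"192.0.2.10","url":"/admin"}
EOF

# Unique URLs requested by one source, counted inside jq.
jq -n '[inputs | select(.sip == "192.0.2.10") | .url] | unique | length' "$log"
```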

Conclusion

These examples aren’t the only ways we could have arrived at the same outcome, and that is the wonderful thing about command-line fu! There isn’t just ONE way to reach the answer, which is part of what makes log analysis on the command line fun. We’ve merely scratched the surface of jq; to keep practicing, JQ Play [8] is a website where you can paste JSON data and try out jq filters.

Keep practicing the art of command-line fu, grasshopper!

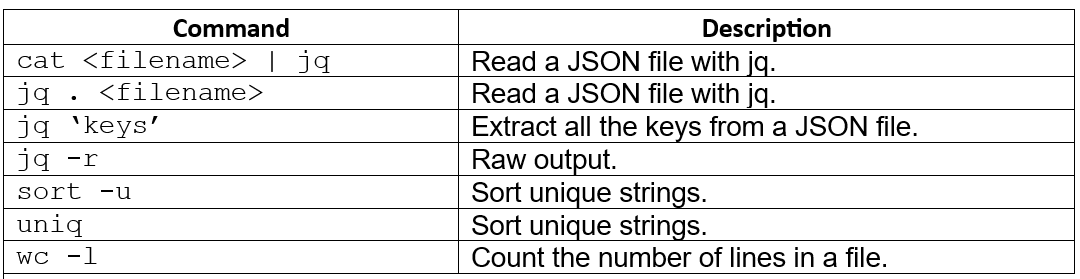

Cheat Sheet

[1] JSON.org. “Introducing JSON.” Json.org, www.json.org/json-en.html. Accessed 28 May 2024.

[2] w3schools. “JSON Syntax.” W3schools.com, 2019, www.w3schools.com/js/js_json_syntax.asp. Accessed 28 May 2024.

[3] jqlang. “Download jq.” jqlang.github.io, https://jqlang.github.io/jq/download. Accessed 28 May 2024.

[4] “How to Use JQ to Process JSON on the Command Line.” Linode Guides & Tutorials, 5 Nov. 2021, www.linode.com/docs/guides/using-jq-to-process-json-on-the-command-line/. Accessed 28 May 2024.

[5] Ramanujam, Sriram. “How to Convert JSON to CSV in Linux.” Baeldung, 13 Dec. 2023, www.baeldung.com/linux/json-csv. Accessed 28 May 2024.

[6] OWASP. “Forced Browsing.” Owasp.org, owasp.org/www-community/attacks/Forced_browsing. Accessed 28 May 2024.

[7] Internet Storm Center. “IP Info: 75.101.186.148.” SANS Internet Storm Center, https://isc.sans.edu/ipinfo/75.101.186.148. Accessed 28 May 2024.

[8] jqplay. “Jq Play.” Jqplay.org, jqplay.org. Accessed 28 May 2024.

[9] https://www.sans.edu/cyber-security-programs/bachelors-degree/

-----------

Guy Bruneau IPSS Inc.

My Handler Page

Twitter: GuyBruneau

gbruneau at isc dot sans dot edu
