2024-5-29 02:58:46 Author: securityboulevard.com

Generative AI and general AI platforms often include advanced computer vision technologies. These systems can easily solve traditional CAPTCHAs like the “pick all the squares with motorcycles” task because they are capable of interpreting complex images, text and patterns with high accuracy.

In contrast, Arkose MatchKey is a suite of AI-resistant challenges that actively confuse AI-based solvers. By introducing variations that aren’t visible to humans but alter the machine interpretation of the presented image, we have created challenges that malicious bots struggle to decipher.
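To make the idea of a change that is small for a human but decisive for a model more concrete, here is a minimal sketch. It is not how Arkose MatchKey generates its images, which the post does not describe. It assumes a toy linear classifier and applies a sign-of-gradient step (the idea behind the well-known fast gradient sign method). The weights, pixel values, `BIAS` and `epsilon` are all made up for illustration.

```python
# Illustrative only: a toy linear "classifier" and a bounded perturbation.
# Nothing here reflects how Arkose MatchKey actually builds its challenges.

BIAS = 0.7  # hypothetical decision threshold of the toy model


def score(weights, pixels):
    """Linear score; a positive value means the toy model says 'motorcycle'."""
    return sum(w * p for w, p in zip(weights, pixels)) - BIAS


def sign(x):
    """Return -1, 0 or 1 depending on the sign of x."""
    return (x > 0) - (x < 0)


def perturb(weights, pixels, epsilon):
    """Move each pixel by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the pixels
    is the weight vector, so stepping against sign(w) is the sign-of-gradient
    step. Results are clipped to the valid [0, 1] pixel range.
    """
    return [min(1.0, max(0.0, p - epsilon * sign(w)))
            for w, p in zip(weights, pixels)]


# Hypothetical 8-pixel "image" and model weights.
weights = [0.9, -0.4, 0.7, -0.2, 0.5, -0.6, 0.8, -0.3]
pixels = [0.52, 0.48, 0.55, 0.50, 0.51, 0.47, 0.53, 0.49]

epsilon = 0.05  # maximum change per pixel, small relative to the 0..1 range
adversarial = perturb(weights, pixels, epsilon)

original_score = score(weights, pixels)
adversarial_score = score(weights, adversarial)
max_change = max(abs(a - p) for a, p in zip(adversarial, pixels))

print("original decision:   ", original_score > 0)
print("adversarial decision:", adversarial_score > 0)
print("largest pixel change:", round(max_change, 3))
```

In this toy setup every pixel moves by no more than 0.05, yet the model's decision flips. Real vision models are nonlinear and far harder to reason about, so treat this as an intuition aid only.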

This innovative approach enables Arkose Labs to effectively stop bots before they can make an impact on enterprises’ systems, ensuring strong security for business operations and creating an enhanced experience for genuine consumers.

The Mechanics of AI Resistance in Arkose MatchKey

The evolution of bad actors on the internet from lone-wolf attackers to well-funded, organized cybercrime-as-a-service (CaaS) networks has led to the adoption of sophisticated machine learning technologies to evade protection and commit fraud.

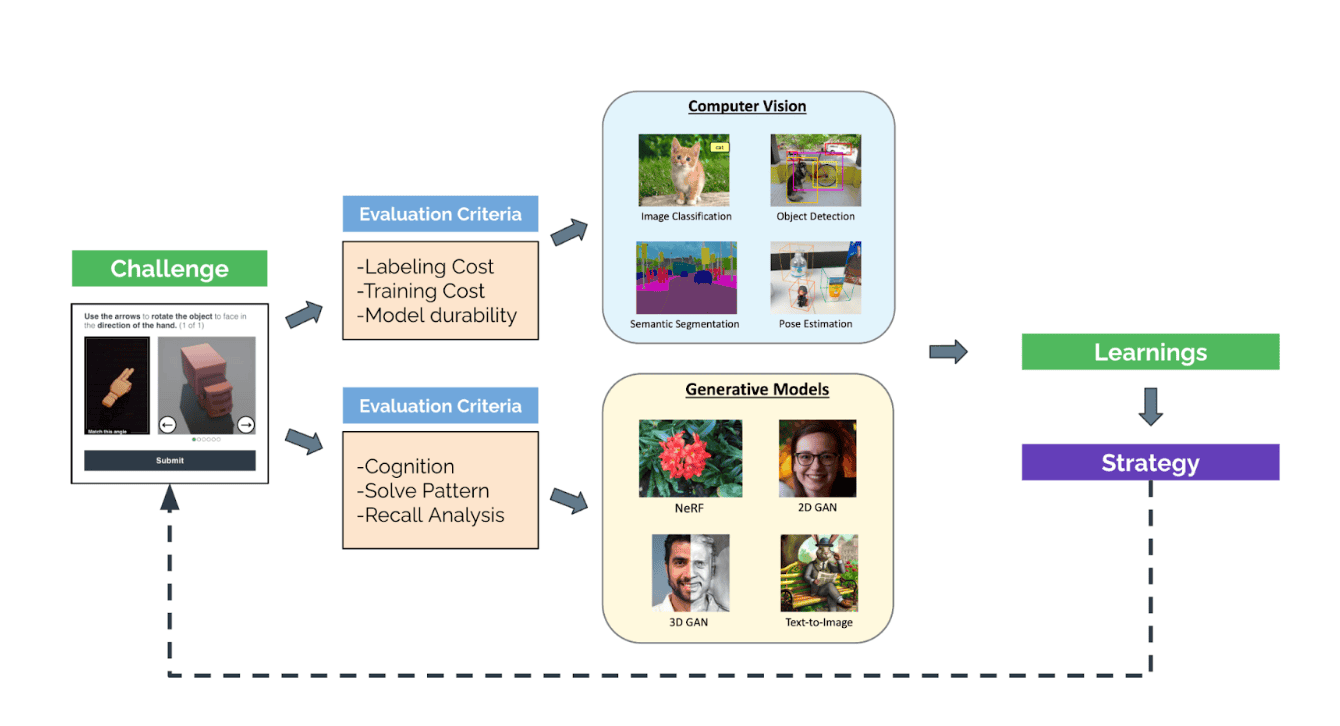

Our CAPTCHA design team continuously tests our MatchKey challenges against off-the-shelf and new computer vision technologies. We evaluate our challenges against different classes of technology, ranging from statistical methods to models with cognitive capabilities.

The Arkose MatchKey suite is designed to disrupt solver models during inference by incorporating targeted techniques. The goal is to raise the cost adversaries incur to maintain and update those models.

The advent of multimodal language models has added a new dimension to an already evolving threat landscape. Arkose Labs has the unique advantage of working with the category leaders in the generative AI space and understanding the capabilities of this technology firsthand.

We have partnered closely with generative AI platforms to understand and evaluate the capabilities of large language models, especially multimodal large language models (M-LLMs), and their potential use to evade detection and solve CAPTCHAs. We have an ongoing process to:

- Evaluate the cognitive capabilities of M-LLMs to understand and describe Arkose challenges.

- Analyze and understand the general behaviors and approaches that M-LLMs take to solve image questions.

- Incorporate the learnings from various experiments into our CAPTCHA design process.
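The loop described in the list above can be pictured as a small evaluation harness. The sketch below is an assumption about what such a harness might look like, not Arkose Labs' tooling. The challenge records, the `variant` labels and both solver functions are hypothetical stand-ins, and a real harness would call actual vision models instead of stubs.

```python
from collections import defaultdict


def evaluate(solvers, challenges):
    """Return the solve rate for every (solver name, challenge variant) pair."""
    solved = defaultdict(int)
    total = defaultdict(int)
    for name, solve in solvers.items():
        for challenge in challenges:
            key = (name, challenge["variant"])
            total[key] += 1
            if solve(challenge["image"]) == challenge["answer"]:
                solved[key] += 1
    return {key: solved[key] / total[key] for key in total}


# Hypothetical challenge records: an image identifier, which variant of the
# challenge it belongs to, and the index of the correct tile.
challenges = [
    {"variant": "baseline", "image": "img-001", "answer": 2},
    {"variant": "baseline", "image": "img-002", "answer": 0},
    {"variant": "altered", "image": "img-001-alt", "answer": 2},
    {"variant": "altered", "image": "img-002-alt", "answer": 0},
]


def always_first(image):
    """Stub solver that always picks the first tile."""
    return 0


def lookup_solver(image):
    """Stub solver that only 'knows' the baseline images and otherwise guesses 1."""
    known = {"img-001": 2, "img-002": 0}
    return known.get(image, 1)


solvers = {"always_first": always_first, "lookup": lookup_solver}
rates = evaluate(solvers, challenges)

for (solver_name, variant), rate in sorted(rates.items()):
    print(f"{solver_name:>12} on {variant:<8}: {rate:.0%}")
```

Keeping results keyed by solver and variant makes it easy to see which design changes actually lower a given solver's success rate, which is the kind of feedback the third step in the list relies on.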

How We Thwart AI Bots

To test the effectiveness of our approach, we started with a visual challenge we knew sophisticated bots could solve. Next, we incorporated the elements mentioned above to create what we call “AI-resistant” images. We then picked an account where we were seeing attacks and ran a controlled A/B test on known AI solver traffic.

The results were significant:

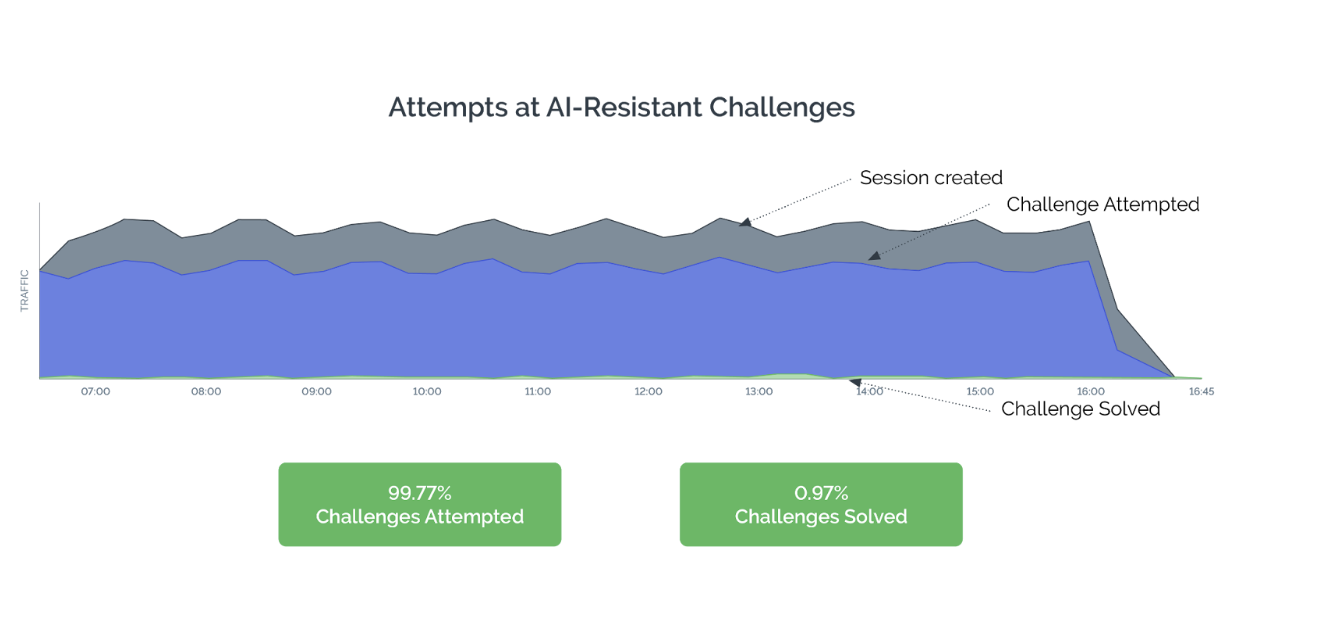

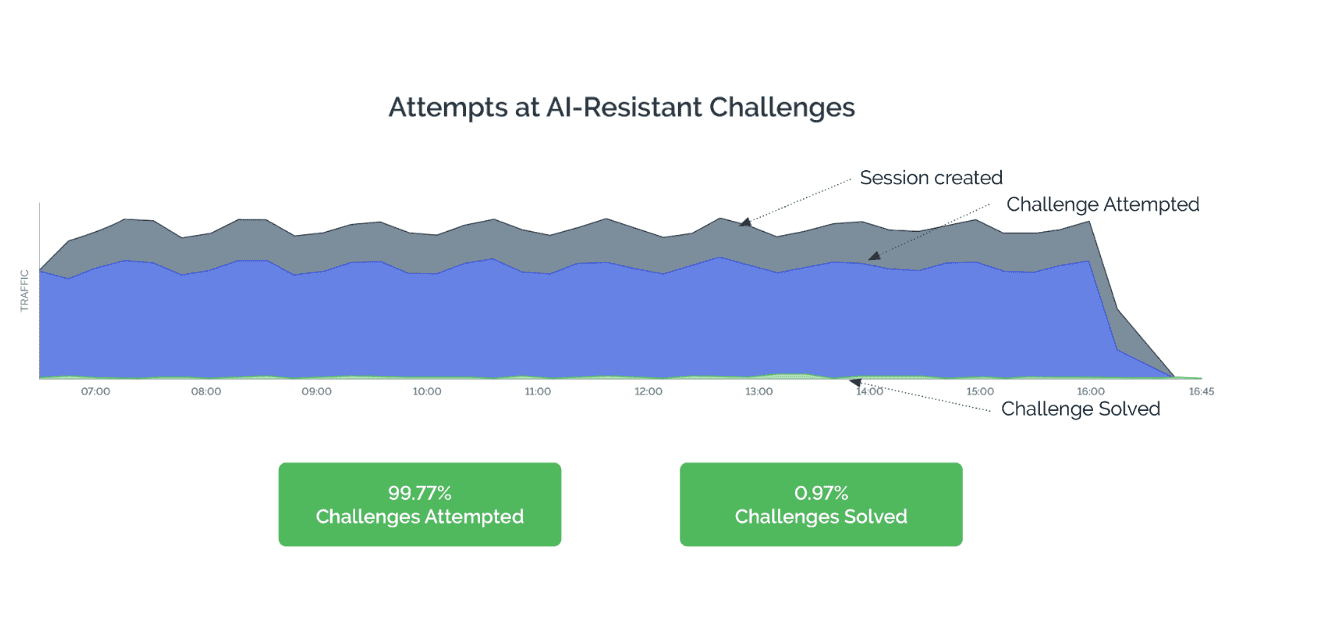

- For account update tasks like password resets, fewer than 1% of the bots could solve the AI-resistant challenge, compared to over 92% that could solve the non-altered image.

The accompanying graph is for a prominent gaming customer's account update flow. It shows performance against a sophisticated CAPTCHA solver that could solve a low-complexity challenge but failed to solve the AI-resistant version of the same challenge.
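For readers who want to see how an A/B comparison of this kind can be summarized, here is a minimal sketch using a standard two-proportion z-test. The attempt and solve counts are hypothetical, chosen only to fall inside the ranges reported above (over 92% versus under 1%). The post does not publish raw counts or say which statistical test, if any, was used.

```python
import math


def solve_rate(solved, attempts):
    """Fraction of attempts in which the solver passed the challenge."""
    return solved / attempts


def two_proportion_z(solved_a, n_a, solved_b, n_b):
    """z statistic for the difference between two solve rates (pooled variance)."""
    p_a = solved_a / n_a
    p_b = solved_b / n_b
    pooled = (solved_a + solved_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_a - p_b) / standard_error


# Hypothetical counts, NOT taken from the article.
control_attempts, control_solved = 1000, 925      # non-altered image
treatment_attempts, treatment_solved = 1000, 8    # AI-resistant image

control_rate = solve_rate(control_solved, control_attempts)
treatment_rate = solve_rate(treatment_solved, treatment_attempts)
z = two_proportion_z(control_solved, control_attempts,
                     treatment_solved, treatment_attempts)

# Two-sided p-value under the normal approximation.
p_value = math.erfc(abs(z) / math.sqrt(2))

print(f"control solve rate:   {control_rate:.1%}")
print(f"treatment solve rate: {treatment_rate:.1%}")
print(f"z statistic:          {z:.1f}")
print(f"significant at 0.001: {p_value < 0.001}")
```

With a gap this large the test is almost a formality, but the same calculation is useful when comparing two challenge variants whose solve rates are much closer together.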

Not only are these challenges stifling AI bots, they’re also wowing end users. The evidence is all over the news, in stories like the recent Wall Street Journal article that reported consumers are frustrated with traditional CAPTCHAs but “charmed” by the new type of challenges and “happy to see a different kind of prompt.” Our industry-leading approach means that we’re not just securing systems against malicious bots – we’re also enhancing the user experience by providing engaging and novel challenges.

The Road Ahead

We are continuing to fine-tune our models, engaging in continuous research and feedback cycles with AI developers. We’re partnering with LLM platforms to assess large language models’ capabilities, focusing on multimodal models and their use in evading detection and solving challenges. Our collaboration involves evaluating the cognitive abilities of these models, analyzing their behavior in solving image-based questions, and integrating findings into the challenge design process. This will help us both learn and influence how AI models are developed.

So we’re not only evolving our own products – harnessing AI to secure against AI bots – we’re also contributing to a broader understanding of how AI can be shaped to enhance security measures.

The learnings we’re gathering are invaluable. Each interaction gives us deeper insights into how different AI models attempt to solve or bypass CAPTCHAs. This journey is crucial not just for our company but for the cybersecurity community at large. As AI becomes more commoditized and accessible, the risk of misuse scales exponentially. The necessity for AI-resistant challenges extends beyond the immediate need to secure digital assets. It’s part of a larger discussion on the ethics of AI and machine learning, particularly concerning image recognition and copyright issues, and our research is a critical component of broader efforts.

Thank you for taking the time to read about our work on AI-resistant challenges. To learn more about our advanced technology, visit Arkose MatchKey.

*** This is a Security Bloggers Network syndicated blog from Arkose Labs authored by Vikas Shetty. Read the original post at: https://www.arkoselabs.com/blog/arkose-matchkey-ai-resistant-attack-innovation
