2024-5-8 15:40:12 Author: www.vmray.com

The Labs team at VMRay actively gathers publicly available data to identify any noteworthy malware developments that demand immediate attention. We complement this effort with our internal tracking and monitor events the security community reports to stay up-to-date with the latest changes in the cybersecurity landscape.

In April 2024, the VMRay Labs team focused specifically on the following areas:

- New VMRay Threat Identifiers, including:
  - VTI to detect usage of OpenAI and Hugging Face APIs
  - VTI to detect stopping of backup-related services
- Enhancing the Smart Link Detonation domain trigger list
- YARA rule improvement for RisePro stealer
- New YARA rule for White Rabbit ransomware

Now, let’s delve into each topic for a more comprehensive understanding.

In our last few blog posts, we introduced the concept of VMRay Threat Identifiers (VTIs). In short, VTIs identify threatening or unusual behavior of the analyzed sample and rate its maliciousness on a scale of 1 to 5, with 5 being the most malicious. The VTI score, which contributes significantly to the final verdict for the sample, is shown in the VMRay Platform once an analysis completes.

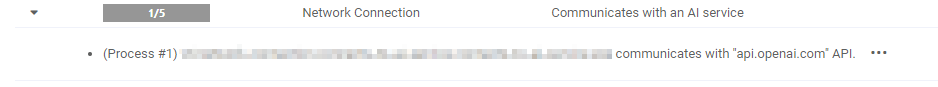

1) VTI: Detect usage of OpenAI and Hugging Face API

Category: Network Connection

In the ever-evolving landscape of cybersecurity threats, staying alert to how emerging technologies can be abused is a priority for us. Large Language Models (LLMs) are one such technology: while they hold immense potential for innovation, they also open new avenues for malicious actors to exploit. Let’s briefly recap what LLMs are.

Large Language Models (LLMs) are powerful artificial intelligence models trained on vast amounts of text data, enabling them to generate human-like text and understand natural language with remarkable accuracy. These models, such as OpenAI’s GPT (Generative Pre-trained Transformer) series, have revolutionized various Natural Language Processing (NLP) tasks, including text generation, translation, summarization, and more.

Threat authors can potentially leverage LLMs in several ways to carry out cyber attacks:

- Automated malware generation – LLMs can be used to automatically generate new malware variants by training the model on existing malware samples and associated documentation.

- Obfuscation and evasion – Malicious actors can employ LLMs to develop advanced obfuscation techniques. By training the model on security research papers and discussions about detection methods, they make it challenging for security tools to detect and analyze malware.

- Social engineering attacks – LLMs can generate convincing, contextually relevant text, making them well suited to crafting phishing emails, fake websites, or social media messages.

- Dynamic adaptation – LLMs can analyze real-time data streams, such as network traffic and system logs, to dynamically adapt malware behavior. This capability allows threat actors to develop malware that can adjust its tactics in response to changes in its environment, making it more resilient to detection efforts.

In response to the potential misuse of LLMs by malicious actors, we are introducing a new VTI that proactively identifies suspicious behavior associated with them. Initially, it focuses on communication with specific URLs associated with the OpenAI and Hugging Face machine learning APIs. Besides aiding threat detection, this information enriches our analysis reports, which in turn enables us to identify and investigate samples that could potentially exploit AI technologies.
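To make the idea concrete, here is a minimal sketch of host-based matching over URLs observed during an analysis. The host list (`api.openai.com`, `huggingface.co`) and the function names are assumptions for illustration; the post does not disclose which URLs the VTI actually checks.

```python
from urllib.parse import urlsplit

# Illustrative host list (an assumption, not VMRay's actual detection list).
# Subdomain matching below also covers hosts such as api-inference.huggingface.co.
AI_API_HOSTS = ("api.openai.com", "huggingface.co")


def is_ai_service_url(url: str) -> bool:
    """Return True if the URL's host equals a listed AI API host or is a subdomain of one."""
    # urlsplit lowercases the hostname; it is None when the string has no network location.
    host = (urlsplit(url).hostname or "").rstrip(".")
    return any(host == h or host.endswith("." + h) for h in AI_API_HOSTS)


def flag_ai_requests(observed_urls):
    """Return the observed URLs that contact one of the listed AI services."""
    return [u for u in observed_urls if is_ai_service_url(u)]


if __name__ == "__main__":
    observed = [
        "https://api.openai.com/v1/chat/completions",
        "https://example.com/index.html",
        "https://api-inference.huggingface.co/models/gpt2",
    ]
    for url in flag_ai_requests(observed):
        print("AI service contacted:", url)
```

Matching on the exact host or a dot-separated suffix avoids false hits on look-alike domains such as `evilhuggingface.co`.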

AI service communication VTI

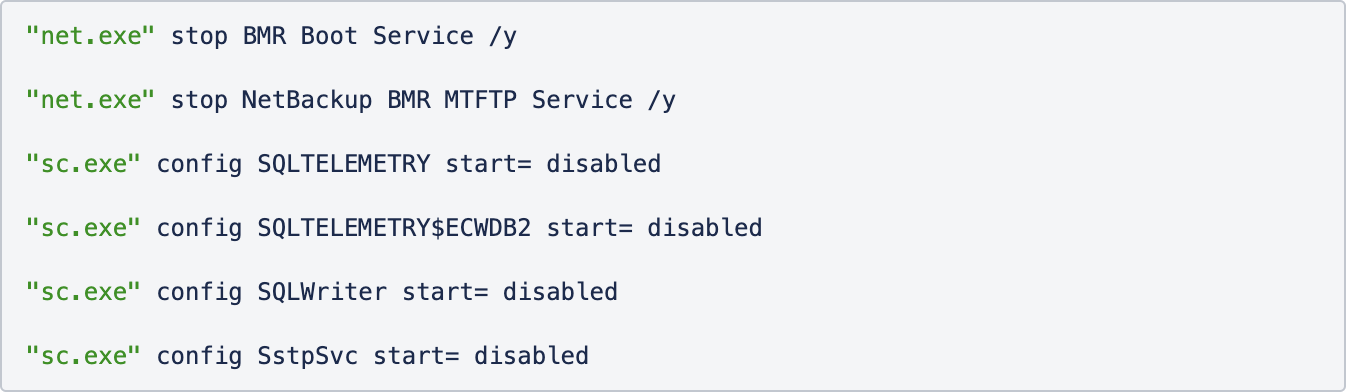

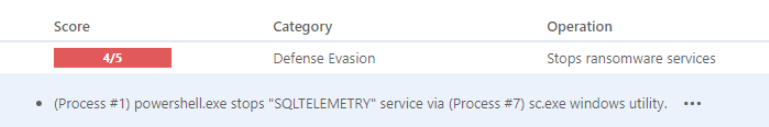

2) VTI: Detect stopping backup-related services

Category: Defense Evasion

MITRE ATT&CK® Technique: T1489

Ransomware remains an ever-present threat in today’s digital landscape, and it is unlikely to fade away anytime soon. These attacks keep growing in sophistication, targeting not only individuals but also businesses, government agencies, and critical infrastructure. The rise of Ransomware-as-a-Service (RaaS) platforms lets even cybercriminals with limited technical expertise launch ransomware attacks, which is likely to increase the number of such attacks.

Among the many tactics ransomware employs, one particularly insidious strategy is deliberately stopping or disabling critical services, such as backup services, on infected machines. It does so for several reasons:

- Prevention of data recovery – By disabling backup services, ransomware prevents users from restoring their data from backups. This increases the likelihood that victims will pay the ransom to regain access to their encrypted files, as they have no other means of recovering their data.

- Increased leverage for ransom demands – When victims realize they cannot restore their files from backups, they may feel a greater sense of urgency to pay the ransom to regain access to their data. This gives the attackers more leverage in demanding higher ransom payments.

- Evading detection – By stopping backup services, ransomware can prevent backup software from detecting changes to files and triggering alerts or initiating backup processes. This allows the malware to operate undetected for longer periods, maximizing its impact and the potential for ransom payments.

Hakbit ransomware, for instance, uses a combination of net.exe and sc.exe Windows commands to stop and disable such services.

Example of Hakbit’s service-stopping commands

Our new VTI flags attempts by analyzed samples to stop or disable various backup services. In benign, day-to-day operation, disabling critical backup or database services is exceedingly uncommon, so detecting such activity serves as a vital early warning sign of a potential intrusion.

Disable Backup Related Services VTI
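The kinds of command lines such a VTI looks for can be sketched with a few regular expressions over process command lines. This is a hypothetical illustration: the keyword list and the patterns (covering `net stop`, `sc stop`, and `sc config ... start= disabled`) are assumptions, not the VTI's actual logic, and the sample command lines are invented.

```python
from __future__ import annotations

import re

# Hypothetical substrings suggesting a backup- or database-related service.
BACKUP_SERVICE_KEYWORDS = ("backup", "veeam", "vss", "sql", "wbengine")

# Each pattern captures the service name targeted by a stop/disable command.
STOP_PATTERNS = (
    # net stop <svc> (also net.exe / net1.exe)
    re.compile(r"\bnet1?(?:\.exe)?\s+stop\s+\"?([^\s\"]+)", re.IGNORECASE),
    # sc stop <svc>
    re.compile(r"\bsc(?:\.exe)?\s+stop\s+\"?([^\s\"]+)", re.IGNORECASE),
    # sc config <svc> start= disabled
    re.compile(r"\bsc(?:\.exe)?\s+config\s+\"?([^\s\"]+)\"?\s+start=\s*disabled", re.IGNORECASE),
)


def backup_services_stopped(cmdline: str) -> list[str]:
    """Return service names that the command line stops or disables and that look backup-related."""
    hits = []
    for pattern in STOP_PATTERNS:
        for match in pattern.finditer(cmdline):
            service = match.group(1)
            if any(k in service.lower() for k in BACKUP_SERVICE_KEYWORDS):
                hits.append(service)
    return hits


if __name__ == "__main__":
    for cmd in ('net.exe stop "VeeamBackupSvc" /y',
                "sc.exe config SQLTELEMETRY start= disabled",
                "net stop Spooler"):
        print(cmd, "->", backup_services_stopped(cmd))
```

Keyword matching on service names is crude; a curated list of known backup and database service names would reduce both misses and false positives.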

In April 2024, we implemented several enhancements to the Smart Link Detonation (SLD) mechanism within our Platform products. For those unfamiliar with it, SLD automatically evaluates relevant hyperlinks in document and email samples and safely detonates them, striking the best balance between productivity, performance, and efficacy. Below is a summary of the key changes.

Enhancing SLD domain triggers to strengthen phishing detection

In response to insights from our internal Q4 2023 Threat Landscape report, we’ve implemented key updates to improve our phishing detection capabilities within the SLD feature. By expanding our domain trigger list, we aim to enhance our ability to swiftly identify and neutralize threats stemming from widely exploited links. Here are the recent additions to our SLD domain trigger list:

- href.li: Discovered in one of our analyzed samples, this domain is used to conceal referrer information, potentially evading automatic blocking or phishing detection mechanisms employed by redirector websites. It can also function as a redirector itself.

- r2.dev: We encountered a phishing page hosted on “r2.dev”, the domain Cloudflare uses for publicly accessible R2 storage buckets. This addition aims to mitigate threats originating from this source.

- googleads.g.doubleclick.net: Our analysis revealed instances where this domain was used as a redirector for phishing pages. To address this, we’ve updated the SLD trigger conditions for “doubleclick.net” URLs to capture more phishing pages.

- linodeobjects[.]com: During routine phishing analysis, we identified “linodeobjects[.]com”, a storage service similar to Cloudflare R2, as frequently abused for malicious purposes.

- lookerstudio.google.com: We tracked several phishing campaigns abusing Google Looker Studio, a data analytics and business intelligence platform that helps organizations explore, analyze, and share insights from their data. More details about one such campaign are available at: https://www.securityweek.com/new-phishing-campaign-launched-via-google-looker-studio/. A check on VirusTotal confirmed multiple instances of malicious activity associated with “lookerstudio.google.com”, so we’ve added this domain to our SLD triggers to address this phishing threat.
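As a rough illustration of how a domain trigger list can decide which hyperlinks in a document or email are worth detonating, the sketch below extracts URLs from text and keeps those whose host matches one of the domains listed above. The extraction regex and the suffix matching are simplifying assumptions; the post does not describe how SLD evaluates its trigger conditions.

```python
from __future__ import annotations

import re
from urllib.parse import urlsplit

# Domains taken from the additions listed above; how SLD actually applies them is not public.
TRIGGER_DOMAINS = (
    "href.li",
    "r2.dev",
    "doubleclick.net",
    "linodeobjects.com",
    "lookerstudio.google.com",
)

# Simplified URL extraction: stops at whitespace, quotes, and angle brackets.
URL_RE = re.compile(r"https?://[^\s\"'<>]+", re.IGNORECASE)


def host_of(url: str) -> str | None:
    """Return the lowercase hostname, or None if the URL cannot be parsed."""
    try:
        return urlsplit(url).hostname
    except ValueError:  # e.g. a malformed IPv6 literal
        return None


def is_trigger_host(host: str | None) -> bool:
    """True if host equals a trigger domain or is a subdomain of one."""
    if not host:
        return False
    host = host.rstrip(".")
    return any(host == d or host.endswith("." + d) for d in TRIGGER_DOMAINS)


def links_to_detonate(text: str) -> list[str]:
    """Return the URLs found in text whose host matches the trigger list."""
    return [url for url in URL_RE.findall(text) if is_trigger_host(host_of(url))]
```

Matching the whole `doubleclick.net` suffix is deliberately broad in this sketch; a production trigger would likely also look at the URL path or parameters.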

1) YARA rule improvement for RisePro stealer

Last month, we introduced a YARA rule targeting the RisePro stealer malware family. However, threat actors keep refining RisePro, continuously adding new techniques to evade security tools and procedures.

A modification to RisePro’s code base appears to have made it more similar to Amadey samples, including the use of specialized libraries for credential theft. In addition, subtle changes to the ports used by the threat actor complicated detection. Security systems often rely on signature-based methods, identifying known malware by matching patterns or signatures associated with malicious code. When malware alters the ports it uses for communication, signatures built around the old values no longer match, so the modified behavior may go unrecognized.

To stay sharp in our hunt for the most recent threats, we have refined the YARA rule to accommodate these changes. The updated rule now employs a broader approach to capturing the samples associated with the RisePro stealer, enabling more accurate detection and classification within our VMRay Platform.
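Why a port change can break a signature, and what a broader rule buys, can be shown with two byte patterns. The sample data below is invented (the address comes from the 192.0.2.0/24 documentation range and the ports are arbitrary), and Python regular expressions stand in for YARA strings; this is not the actual RisePro rule.

```python
import re

# Invented configuration fragments: only the C2 port differs between versions.
OLD_SAMPLE = b"cfg;c2=192.0.2.10:50500;id=abc"
NEW_SAMPLE = b"cfg;c2=192.0.2.10:50505;id=abc"

# Narrow signature: hard-codes the exact endpoint, including the port.
NARROW_SIG = re.compile(rb"c2=192\.0\.2\.10:50500;")

# Broader signature: keeps the structural "c2=<ipv4>:<port>;" shape but accepts any address and port.
BROAD_SIG = re.compile(rb"c2=(?:\d{1,3}\.){3}\d{1,3}:\d{1,5};")


def matches(signature: re.Pattern, data: bytes) -> bool:
    """Return True if the signature occurs anywhere in data."""
    return signature.search(data) is not None


if __name__ == "__main__":
    for name, data in (("old", OLD_SAMPLE), ("new", NEW_SAMPLE)):
        print(name, "narrow:", matches(NARROW_SIG, data), "broad:", matches(BROAD_SIG, data))
```

The narrow signature misses the new sample while the broader one matches both. The trade-off is a higher false-positive risk, which is why the broader pattern still anchors on a structural marker (`c2=`) rather than matching any address and port.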

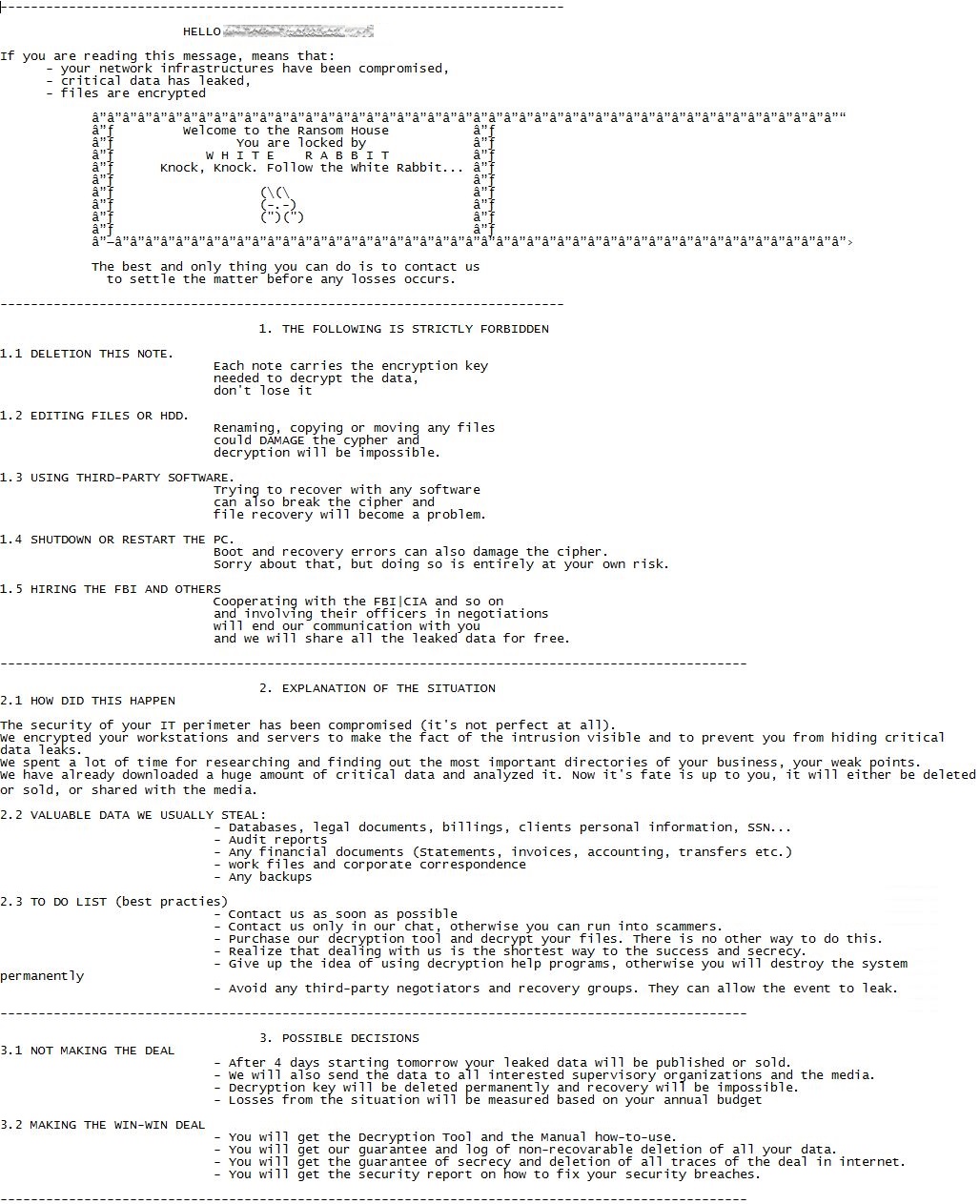

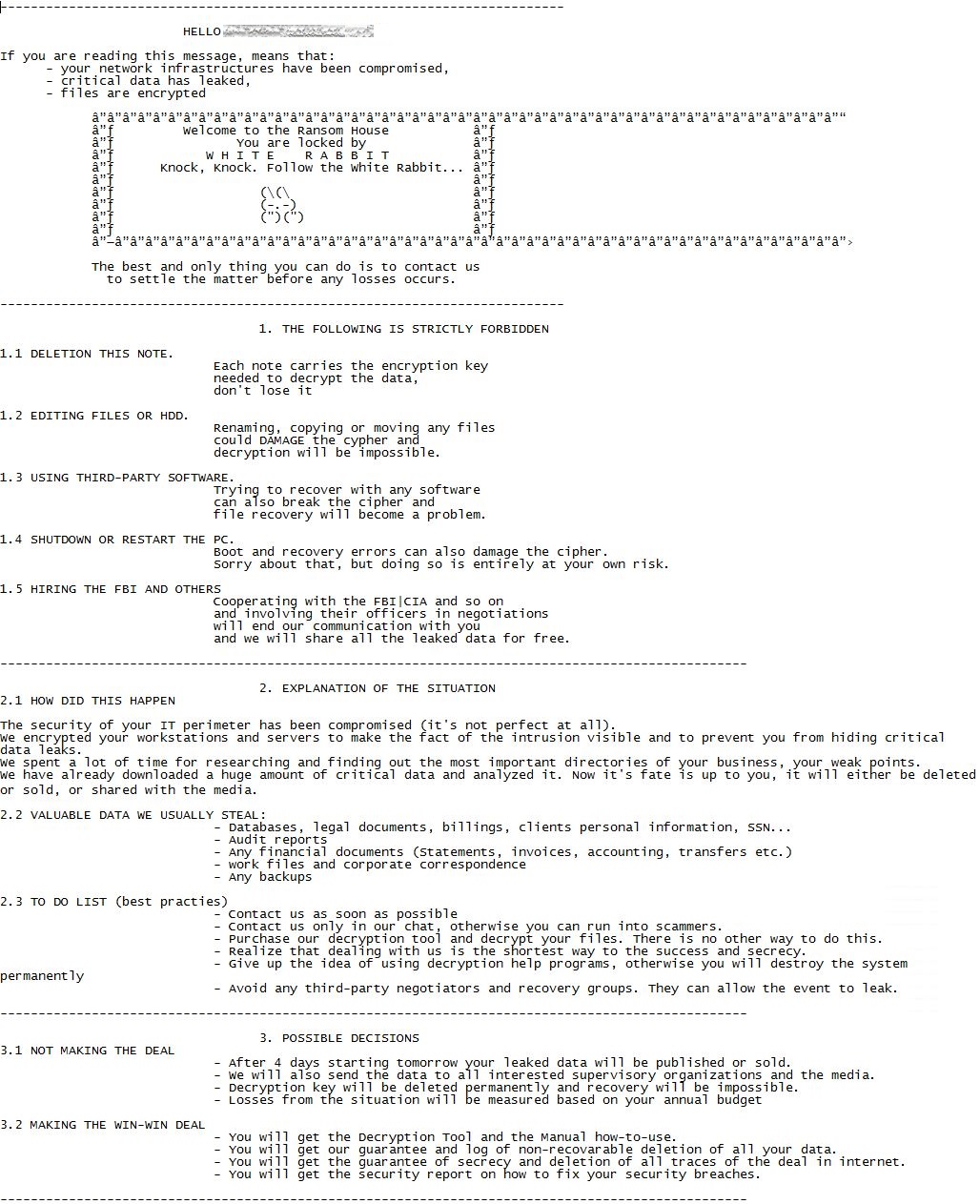

2) New YARA rule for White Rabbit ransomware

White Rabbit is a ransomware variant that first emerged on the cyber threat landscape in late 2021. It is specifically crafted to exploit vulnerabilities within financial institutions.

How does it work? White Rabbit gains initial access to systems through sophisticated phishing campaigns, exploiting unsuspecting users to infiltrate target networks. Once inside, its compact size, roughly 100 KB, allows it to operate discreetly, evading detection.

To execute its payload, White Rabbit requires a password passed as a command-line argument. Without this password, the malware lies dormant and its malicious behavior goes unobserved. When it receives the correct password, however, White Rabbit becomes active and scans the compromised machine for files to encrypt. It selectively encrypts these files, rendering them inaccessible without the decryption key.
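The gating described above can be pictured with the following hypothetical sketch. The `-p` flag, the placeholder password, and the SHA-256 comparison are assumptions made for illustration, not details of White Rabbit's code. What it shows is that, without the right argument, execution never reaches the payload path, which is why a run without the password can show little or no malicious behavior.

```python
from __future__ import annotations

import hashlib

# Hypothetical: the digest is computed from a placeholder here for convenience;
# a real sample would embed only the digest, not the password itself.
EXPECTED_DIGEST = hashlib.sha256(b"example-password").hexdigest()


def password_gate(argv: list[str]) -> bool:
    """Return True only if argv contains '-p <password>' whose SHA-256 digest matches."""
    try:
        supplied = argv[argv.index("-p") + 1]
    except (ValueError, IndexError):
        return False  # flag missing or no value after it: stay dormant
    return hashlib.sha256(supplied.encode()).hexdigest() == EXPECTED_DIGEST


def run(argv: list[str]) -> str:
    """Stand-in for the real behavior: nothing happens unless the gate opens."""
    return "active" if password_gate(argv) else "dormant"
```

This is one reason static signatures on the file itself, such as a YARA rule, remain useful even when dynamic execution observes nothing.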

In addition to encrypting files, White Rabbit employs a double extortion strategy: it threatens to publish or sell victims’ stolen data, adding further pressure to its ransom demands.

To combat this threat, we developed and integrated a new YARA rule. It specifically targets the packed version of White Rabbit, enabling proactive detection of activity associated with this malware family.

White Rabbit ransom note

source: https://www.trendmicro.com/en_se/research/22/a/new-ransomware-spotted-white-rabbit-and-its-evasion-tactics.html
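The new YARA rule targets the packed version of White Rabbit. Packed or encrypted content typically has high byte entropy, a property YARA rules can test through the `math` module's `entropy` function. The Python sketch below computes Shannon entropy in bits per byte; the 7.2 threshold is an assumed rule of thumb, not a value taken from VMRay's rule.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of data in bits per byte (0.0 for constant data, up to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    # Sum p * log2(1/p) over the byte values that actually occur.
    return sum((n / total) * math.log2(total / n) for n in Counter(data).values())


def looks_packed(data: bytes, threshold: float = 7.2) -> bool:
    """Heuristic: flag data whose entropy exceeds an assumed threshold."""
    return shannon_entropy(data) > threshold


if __name__ == "__main__":
    print(shannon_entropy(b"A" * 1024), shannon_entropy(bytes(range(256)) * 4))
```

Entropy alone is a weak signal, since compressed archives and media files are also high-entropy, so a family-specific rule would combine it with other conditions.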

We hope our ongoing research into new malware trends, and the features it brings to our products, help you navigate the complex cybersecurity landscape. In this ever-evolving realm, agility is crucial, but even as AI development advances, we must not underestimate “good old” threats such as phishing, spoofing, or classic ransomware attacks. Stay tuned for our May updates, which we’ll share in the coming weeks. Wishing you a cyber-secure and joyous spring season!
