Author: www.forcepoint.com · 2024-05-01 20:15:02

Get Visibility and Control Over Your Data

The world’s biggest tech firms all want to emerge as leaders in generative AI, including Microsoft, which, with its huge customer base, is starting to introduce and encourage the deployment of Copilot for Microsoft 365.

Copilot is an embedded AI assistant

Unlike publicly available generative AI tools on the web, such as Google’s Gemini or ChatGPT, Copilot for Microsoft 365 is built into Microsoft Office applications and has access to vast amounts of internal and confidential corporate data that could not previously be queried in this way.

For generative AI on the web (e.g. ChatGPT), Forcepoint DLP has been able from the start to monitor and control usage as a data exfiltration channel, protecting intellectual property that would otherwise leave the organisation. Securing internal AI use such as Copilot for M365 is the natural next step.
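To make the DLP idea concrete, here is a minimal sketch of the kind of content inspection a DLP policy can apply to traffic bound for a generative AI site. It is not Forcepoint DLP's implementation: the destination list, the function names and the choice of payment card numbers (found with a regex and validated with the Luhn checksum) as the sensitive data type are all illustrative assumptions.

```python
import re

# Illustrative assumption: destinations treated as generative AI services.
# This is not a real product configuration.
GENAI_DESTINATIONS = {"chatgpt.com", "gemini.google.com"}

# Candidate card numbers: 13 to 19 digits, optionally separated by spaces or dashes.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    # Walk right to left, doubling every second digit.
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return Luhn-valid card-like numbers in text, with separators removed."""
    hits = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits


def evaluate_upload(destination: str, text: str) -> str:
    """Return 'block' if card data is headed to a listed generative AI service."""
    if destination in GENAI_DESTINATIONS and find_card_numbers(text):
        return "block"
    return "allow"


print(evaluate_upload("chatgpt.com", "Summarise: customer paid with 4111-1111-1111-1111"))
```

A production policy would typically combine many more detectors than this single pattern; the sketch only shows the shape of the decision: inspect the content, check the destination, then allow or block.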

Copilot for M365 has huge benefits. Use cases Microsoft demonstrated at launch include:

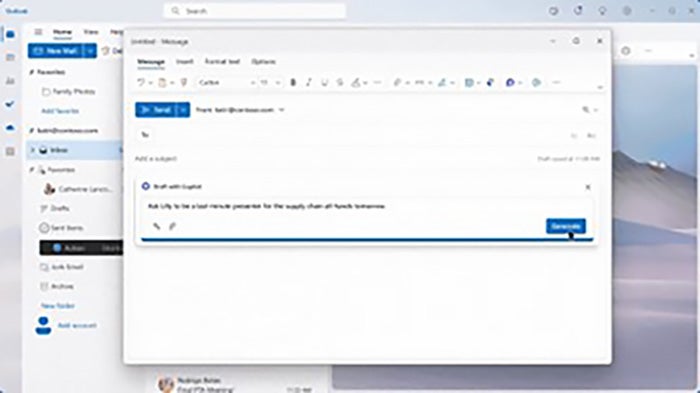

Copilot crafting an email in Outlook

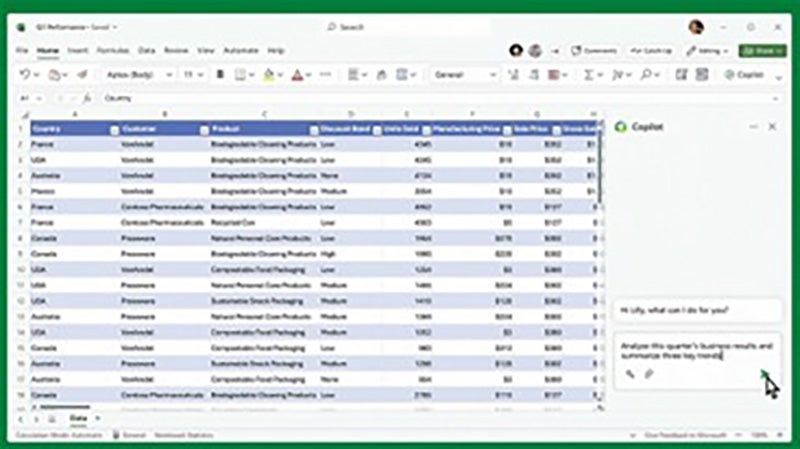

Copilot in Excel analysing data for trends

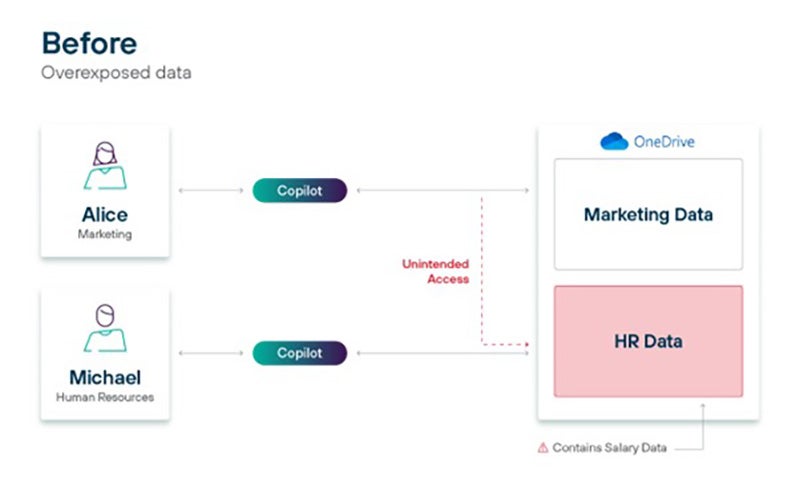

These use cases, while great, also have the potential to spell disaster. Imagine someone on the sales team asking Copilot for sensitive HR data, such as their teammates’ salaries, and Copilot actually returning the results.

Another disastrous example: at a publicly traded company, imagine someone outside the finance team querying Copilot for non-public information about the company’s financial results and performance. That information could help people outside the company gain insight that might be used for insider trading or, even worse, force the organization to pre-announce earnings details.

Microsoft 365’s inherited user privileges can be problematic

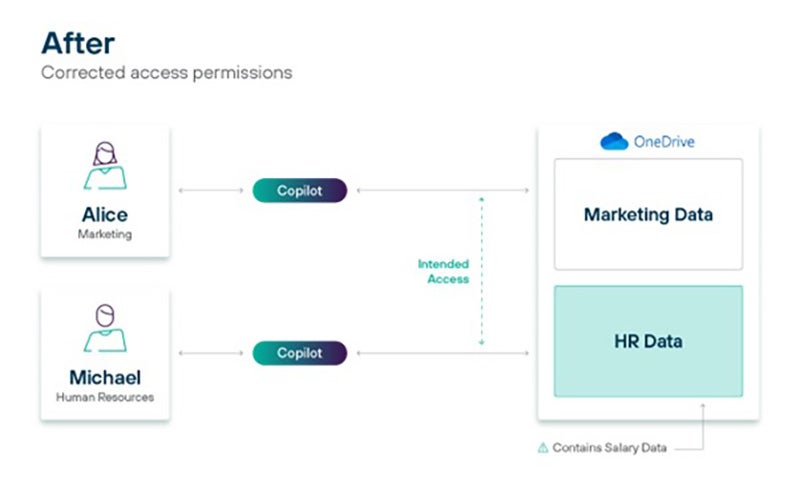

Situations like these underscore why it’s essential to have a data governance strategy and controls in place before deploying Copilot for Microsoft 365. Even Microsoft advises customers on governance challenges like these. The stakes rise further when you consider that Copilot’s security model is based on a user’s existing Microsoft 365 permissions: any files, folders, emails or Teams content an individual can access could be used by Copilot in its responses.
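The permission-inheritance problem can be sketched in a few lines. This is a hypothetical and deliberately simplified model (flat groups only, no sharing links, no nested groups, and no Microsoft API): an assistant scoped to a user's permissions can reach every item granted to that user or to any group the user belongs to, so a single overshared HR file becomes visible to a salesperson.

```python
from dataclasses import dataclass, field

# Hypothetical, simplified model: real Microsoft 365 permissions involve nested
# groups, sharing links and inheritance, none of which is modelled here.


@dataclass
class Item:
    """A file, folder, mailbox or Teams resource and the principals granted access."""
    name: str
    granted_to: set[str] = field(default_factory=set)


def principals_for(user: str, group_members: dict[str, set[str]]) -> set[str]:
    """Return the user plus every (flat) group that lists the user as a member."""
    return {user} | {g for g, members in group_members.items() if user in members}


def assistant_reachable(user: str, items: list[Item],
                        group_members: dict[str, set[str]]) -> list[str]:
    """Sorted names of items an assistant scoped to this user's permissions could use."""
    principals = principals_for(user, group_members)
    return sorted(item.name for item in items if item.granted_to & principals)


groups = {
    "Everyone": {"sam", "hana", "fin"},
    "Sales": {"sam"},
    "HR": {"hana"},
    "Finance": {"fin"},
}
items = [
    Item("sales_playbook.pptx", {"Sales"}),
    Item("q3_results_draft.xlsx", {"Finance"}),
    # Overshared: HR data that was also shared with "Everyone".
    Item("team_salaries.xlsx", {"HR", "Everyone"}),
]

print(assistant_reachable("sam", items, groups))
# prints ['sales_playbook.pptx', 'team_salaries.xlsx']
```

Nothing in the assistant itself is misbehaving here; the exposure comes entirely from the grant on `team_salaries.xlsx`, which is why reviewing permissions before rollout matters.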

Forcepoint can help ensure organisations are ready for a secure Copilot rollout. With Forcepoint Data Security Posture Management (DSPM), we can integrate tightly with the Microsoft environment, including Azure AD, Azure Blob, Azure Files, OneDrive and SharePoint. This integration gives us full visibility into:

- Access Permissions

- Data Classification and Labelling

- Potential Misconfigurations

- Data Residency

- Redundant, Outdated, Trivial (ROT) Data

- Duplicate Data
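Two of the items above, duplicate data and the "outdated" part of ROT, lend themselves to a short illustration. The sketch below is an assumption-laden example, not how Forcepoint DSPM works: it groups exact duplicates by SHA-256 content hash and flags files untouched for longer than a hypothetical three-year threshold, using in-memory records in place of a real repository scan.

```python
import hashlib
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical threshold; a real tool would make this a policy setting.
STALE_AFTER = timedelta(days=3 * 365)


def find_duplicates(files: dict[str, bytes]) -> list[list[str]]:
    """Group paths whose contents are byte-for-byte identical (by SHA-256)."""
    by_hash: dict[str, list[str]] = defaultdict(list)
    for path, content in files.items():
        by_hash[hashlib.sha256(content).hexdigest()].append(path)
    return sorted(sorted(paths) for paths in by_hash.values() if len(paths) > 1)


def find_stale(last_modified: dict[str, datetime], now: datetime) -> list[str]:
    """Paths not modified within STALE_AFTER: candidates for 'outdated' in ROT."""
    return sorted(p for p, ts in last_modified.items() if now - ts > STALE_AFTER)


# Hypothetical sample data standing in for a repository scan.
salary_sheet = b"name,salary\nalice,100\nbob,90\n"
files = {
    "hr/salaries.xlsx": salary_sheet,
    "sales/copy_of_salaries.xlsx": salary_sheet,  # a copy that escaped HR
    "sales/pipeline.xlsx": b"deal,value\nacme,5000\n",
}
last_modified = {
    "hr/salaries.xlsx": datetime(2024, 4, 1),
    "archive/2017_plan.docx": datetime(2017, 6, 30),
}
now = datetime(2024, 5, 1)

print(find_duplicates(files))
print(find_stale(last_modified, now))
```

Hashing only catches byte-identical copies; near-duplicates and "trivial" content need other techniques, such as fuzzy hashing or content classification.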

Forcepoint DSPM’s insights bring visibility and clarity to your data

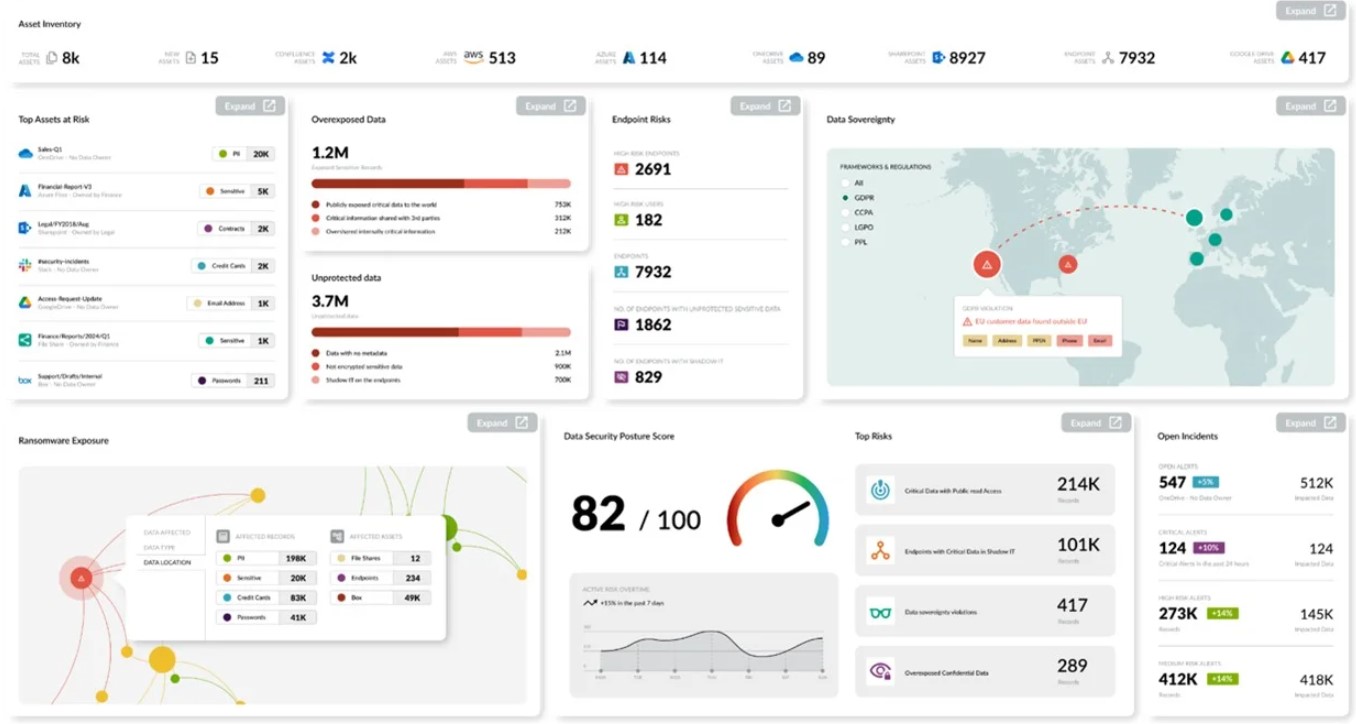

Forcepoint DSPM collects this data and feeds it into our DSPM analytics dashboards, giving security analysts a real-time view of the risks they are currently exposed to so they can prioritise correctly and decide on the best course of action.

Of the various risk factors, particularly in relation to a Copilot rollout, two stand out: access permissions and data classification. Full visibility over both is key, because it allows your organization to manage the associated risks appropriately. That visibility also gives your security team more time to test configurations. This knowledge alleviates many of the risks involved in a Copilot deployment and helps ensure a secure, seamless rollout.
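One simple way to combine access permissions and data classification when prioritising is to weight each item's sensitivity label by how many users can reach it. The label names, weights and scoring formula below are hypothetical choices for illustration, not Forcepoint DSPM's risk model.

```python
from typing import NamedTuple, Optional

# Hypothetical label weights; the label names resemble common sensitivity
# label schemes but are assumptions for illustration only.
LABEL_WEIGHT = {
    "Public": 0,
    "General": 1,
    "Confidential": 5,
    "Highly Confidential": 10,
    None: 3,  # unlabelled: sensitivity unknown, so not treated as safe
}


class Finding(NamedTuple):
    path: str
    label: Optional[str]
    users_with_access: int


def risk_score(f: Finding) -> int:
    """Sensitivity weight multiplied by how many users can reach the item."""
    return LABEL_WEIGHT[f.label] * f.users_with_access


def prioritise(findings: list[Finding]) -> list[tuple[str, int]]:
    """Return (path, score) pairs, highest risk first, dropping zero-risk items."""
    scored = [(f.path, risk_score(f)) for f in findings]
    return sorted((s for s in scored if s[1] > 0), key=lambda s: s[1], reverse=True)


findings = [
    Finding("finance/q3_results_draft.xlsx", "Highly Confidential", 12),
    Finding("hr/team_salaries.xlsx", "Confidential", 800),  # overshared
    Finding("marketing/brochure.pdf", "Public", 800),
    Finding("projects/untagged_notes.docx", None, 30),
]

for path, score in prioritise(findings):
    print(f"{score:>6}  {path}")
```

Giving unlabelled data a non-zero weight is a design choice: without a label its sensitivity is unknown, so the sketch does not treat it as safe. Note how broad access pushes a merely "Confidential" file above a "Highly Confidential" one that only a few people can open.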

Here at Forcepoint, we’re ready to help your organization ensure a successful Copilot rollout. Reach out to us for a Forcepoint DSPM demo. We can also help with a free data risk assessment and much more.
