2024-4-12 03:21:35 Author: securityboulevard.com(查看原文) 阅读量:7 收藏

While there’s no shortage of advice on safeguarding Artificial Intelligence (AI) applications from a vast array of new threats, much of it still remains theoretical.

In this blog post, we’ll share practical insights based on our real-world experiences defending customers’ AI apps from emerging adversarial techniques, such as prompt injection and denial of wallet (DoW) attacks. Following the advice in this post can save you hundreds of thousands of dollars and protect your applications from all kinds of abuse at scale.

Challenges with Defending AI Apps from Growing Security Threats

The adoption of generative AI has unlocked immense potential for organizations across various industries. Large Language Models (LLMs) and AI-based text-to-image technologies are seeing rapid adoption, enabling the creation of features and applications no one thought possible only years ago. However, as the demand for AI surges, so do the risks associated with malicious automated threats and bot attacks.

High Costs: Using AI models – whether through third-party services like OpenAI or self-hosted infrastructure – can incur significant expenses. Invoking AI APIs is expensive compared to other commonly used compute primitives.

Relatively New Attack Surface: Many companies offer free or unauthenticated access to AI models, such as sandboxes or chatbots, creating vulnerabilities ripe for exploitation.

Understanding Security Threats to AI Models

As part of the Open Web Application Security Project (OWASP)’s Top 10 List of Risks to Large Language Models (LLMs) Applications, OWASP has outlined the different types of threats to AI in their AI Threat Map below.

Source: OWASP Top 10 for LLM Applications

For the purposes of this post, we’re focusing on Threats to AI Models, specifically Prompt Injection and Denial of Wallet (DoW) attacks.

- Prompt Injection attacks occur when attackers manipulate inputs to AI applications to generate unintended responses and extract sensitive data from embedded business logic or queries. This abusive practice effectively redirects computational expenses onto the victim, allowing attackers to exploit expensive AI APIs without incurring costs themselves.

- Denial of Wallet attacks involve deploying bots with the aim of inflating the operational costs of AI applications. By flooding the system with automated requests, attackers force the target organization to incur unwarranted costs, ultimately disrupting operations and imposing significant financial losses. This is similar to Denial of Service (DoS) attacks.

These are not hypothetical threats; they have materialized across various AI platforms, as evidenced by the myriad of GitHub repositories, such as this as an example.

Since attackers abuse AI models at a much higher rate than real users legitimately using them, these types of attacks can quickly rack up expenses for any company using AI.

AI Protection Real-World Case Study

Here at Kasada, we’ve partnered with companies like Vercel to fortify their AI applications against these evolving threats.

Vercel’s AI SDK Playground provides users with a range of AI models, attracting both legitimate users and malicious actors seeking unauthorized access. The Vercel platform became a target for highly motivated attackers aiming to either exploit AI resources for free or bypass geographical restrictions from countries or regions that don’t allow access to certain AI apps.

“We’ve been in the trenches learning how to protect our AI workloads from abuse, such as denial of wallet attacks and prompt injection. It’s a unique situation over classic bot abuse because AI APIs are orders of magnitude more expensive per request than your traditional APIs, and so abuse can be much more costly.” – Malte Ubl, CTO @ Vercel

Needless to say, there’s a high incentive for bad actors to abuse Vercel’s AI workloads. Leveraging automation – or building a bot – provides the attacker with programmatic access to these powerful AI models.

Despite initial attempts at basic bot protection, Vercel realized the need for a more sophisticated solution as attackers persisted in circumventing their defenses. If the friction introduced to the attackers doesn’t outweigh the value of the prize, they’ll stick around and figure out how to win.

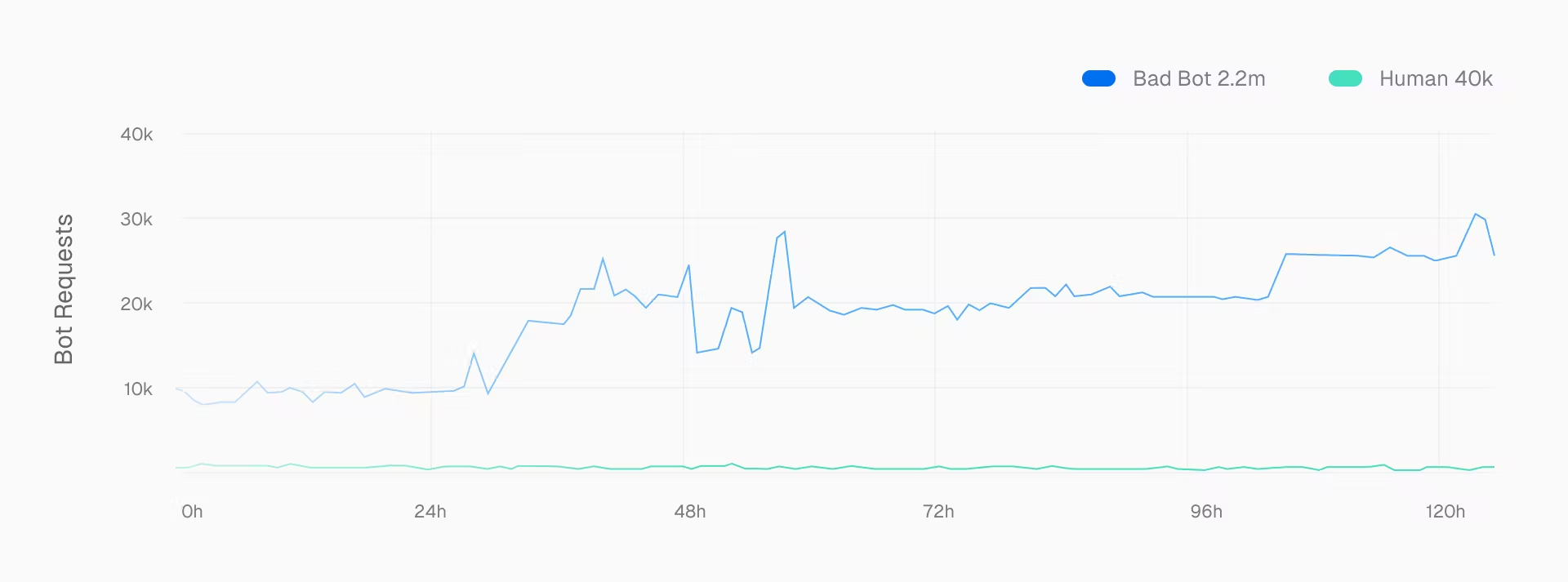

Kasada immediately eliminated the costly abuse by removing the automated traffic. Before Kasada’s go-live, bots made up a staggering 84% of total traffic.

See Vercel’s Blog, “Protecting AI apps from bots and bad actors with Vercel and Kasada”

After Kasada was implemented, the bot traffic plummeted to negligible levels. Kasada Bot Defense not only safeguarded Vercel’s proprietary AI applications but also improved the overall digital experience for genuine users by ensuring seamless access to AI models.

Practical Recommendations to Secure Your AI Models

Generative AI and LLMs are tricky to defend. Natural language makes a comprehensive solution against prompt injection attacks almost impossible. Industry best practices for securing gen AI are changing rapidly, and it’s important to stay on top of new developments.

With this in mind, here’s our advice to protect your AI applications from abuse.

- Assume user input is unsafe. Treat all places where users can enter input for AI models as inherently unsafe and vulnerable to forms of prompt injection attack. Changes to models can accidentally introduce new methods of prompt injection attacks.

- Closely monitor usage. Given the high cost of running AI apps, it’s important to ensure your usage is in line with your expectations. Compare user analytics to the executions of your AI models to make sure there isn’t hidden abuse.

- Employ advanced bot mitigation. For effective defense against automated abuse of your AI apps, such denial of wallet attacks, leverage modern solutions like Kasada Bot Defense.

- Stay informed and updated. AI is a fast-moving space. Educate yourself and your wider team on emerging threats and best practices in all areas of AI security, using resources like the OWASP Top 10 for LLM Applications to enhance your security policies and procedures.

Ultimately, protecting AI models from increasingly common attacks requires a proactive and multi-layered approach. Teams can mitigate risks by deeply understanding the threats facing AI apps and APIs and implementing robust security defenses.

At Kasada, we are committed to partnering with companies to deliver tangible results in securing AI models from sophisticated adversaries. Request a demo to learn how we can help you protect your brand today.

The post Defending AI Apps Against Abuse: A Real-World Case Study appeared first on Kasada.

*** This is a Security Bloggers Network syndicated blog from Kasada authored by Sam Crowther. Read the original post at: https://www.kasada.io/defending-ai-apps/

如有侵权请联系:admin#unsafe.sh