2024-02-29 03:09:19 Author: securityboulevard.com

Part I: The Good and the Bad of AI

Few would dispute that 2023 was the year AI, specifically generative AI (Gen AI) like ChatGPT, was discussed everywhere. In October, Forrester published a report about how security tools will leverage AI. The findings in that report showed that Gen AI would augment your security tools rather than, as some may think, replace your team.

In our recent webinar, Sumanth Kakaraparthi, VP of Product Management for Data Security, Imperva, and guest speaker, Heidi Shey, Principal Analyst, Forrester, looked at key trends from 2023 and shared insights on what to expect in 2024 around data security. AI was a big focus of the discussion. If you missed the webinar, you can view it here. This blog is the first part of a three-part series highlighting key discussion areas from the session.

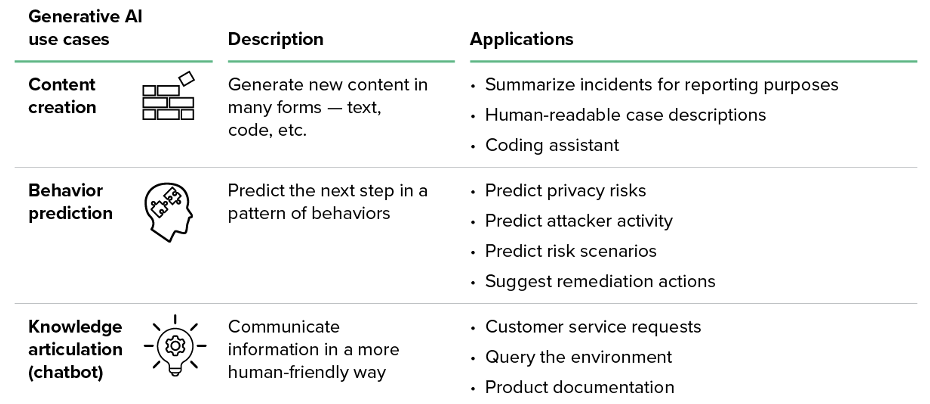

Forrester points to content creation, behavior prediction, and knowledge articulation as three drivers for adopting AI in security tools.

The content creation use case covers generating new content in many forms, whether text, code, or others. Organizations have applied it to summarize reports more efficiently, develop human-readable case descriptions, and gain assistance with coding. Behavior prediction means anticipating the next step in a pattern of behavior, such as privacy risks, attacker activity, risk scenarios, and remediation actions. Knowledge articulation, such as using a chatbot, allows organizations to use machines to communicate information in more human-friendly ways. This often applies to customer service requests, querying the environment, or developing product documentation.

Forrester shows how Generative AI can help organizations transform how they identify and assess risk

Source: Forrester report “How Security Tools Will Leverage Generative AI”, October 20th, 2023

In the Forrester Trends report, “How Security Tools Will Leverage Generative AI,” from Oct 2023, Forrester explains why Gen AI can help organizations transform how they identify and assess privacy risks proactively. The report states, “Today, organizations identify privacy risks with forms. This task, still manual for many privacy teams, is typically reactive. Generative AI will predict where privacy risks are likely to arise, guide privacy teams to categorize them, and help them focus on high-risk scenarios requiring their attention. The transformation will go beyond privacy teams. Analytics and customer data platforms that leverage generative AI will also be able to indicate to business and data teams which privacy risks will emerge from the processing of certain data and will nudge them to proactively involve their privacy counterparts.”

The reality is that the business will benefit from the possibilities Gen AI-driven applications can deliver. Still, cybersecurity teams will have to deal with all the negative consequences that Gen AI introduces by accelerating the evolution of threats. An analogy used in the session relates to how cybersecurity teams are already navigating the dangerous waters and currents of the ocean in a storm. Adding Gen AI to the mix is like adding a meteor shower on top of an already chaotic environment. Gen AI allows cybercriminals to evolve threats and execute them faster and at a broader scale, making them far more difficult to contend with.

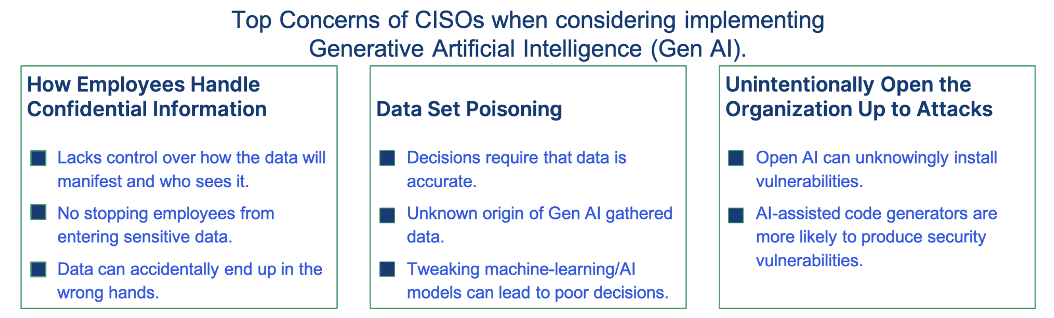

Generative AI as an Asset and a Risk

Generative AI is a powerful tool that can improve how people search for information, code, or even support customers. However, you need to be extremely careful when interfacing with generative AI, regardless of whether it is internet-based or contained on-premises. Gen AI is very user-friendly. You input a query using natural language and get a logical response. The response may not be 100% accurate, but it often serves as a good starting point. Even so, CISOs are expressing security concerns about implementing Gen AI. These concerns include how employees handle confidential information, data set poisoning, and unintentionally opening the organization up to attacks.

Privacy and Confidentiality

When entering information into a Gen AI prompt, employees and the organization have little control over how the data will be used and who can see it. There needs to be more control around the privacy and confidentiality of information. Sensitive data can, without malice, be entered into a machine and be exposed. The risk of accidental or unintentional data exposure stems from public machine-learning models. More must be done to keep employees from entering customer data, employee data, or intellectual property into an AI prompt. This lack of inherent controls gives leaders little confidence that their data won’t accidentally end up in the wrong hands.
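One way to picture such a control is a filter that screens prompts before they leave the organization. The following is a minimal sketch, not a complete data loss prevention solution: the `PATTERNS` table and the `redact_prompt` function are hypothetical names introduced here for illustration, and the three regular expressions are assumptions that cover only a few obvious formats.

```python
import re

# Hypothetical patterns for illustration only. Real deployments need broader,
# locale-aware detection (names, account IDs, source code, and so on).
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD_NUMBER": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact_prompt(prompt: str) -> tuple[str, list[str]]:
    """Replace matches of each pattern with a placeholder.

    Returns the redacted prompt and the labels of the patterns that matched,
    so the caller can log the event or block the request entirely.
    """
    findings = []
    redacted = prompt
    for label, pattern in PATTERNS.items():
        redacted, count = pattern.subn(f"[{label} REDACTED]", redacted)
        if count:
            findings.append(label)
    return redacted, findings


if __name__ == "__main__":
    text = "Draft a reply to jane.doe@example.com about SSN 123-45-6789."
    safe_text, found = redact_prompt(text)
    print(safe_text)
    print(found)
```

Pattern matching of this kind catches only well-structured identifiers. Intellectual property and free-form customer details would need other controls, such as policy, training, or classification-based tooling.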

Data Trust

Today, decisions are data-driven, so you need confidence that your data is accurate. Using Gen AI to gather data to make these decisions can be risky. You may need to find out where the data originated and sanitize it. Now that OpenAI language models like ChatGPT can be prompted to leverage Internet-based resources, the validity of the data they collect and display matters even more, and you need to understand the data source before making business-impacting decisions.

Model Fidelity and Accuracy

Another hidden concern relates to machine learning, specifically machine learning-based models. Insiders can subtly tweak machine-learning/AI-based models. If the person manipulating the models doesn’t understand how the models work, they may cause the models to produce inaccurate results. These inaccurate results could cause analysts to chase inconsequential events or false positives. As with the data trust concern above, this changes decisions in ways we don’t easily see, leading to unseen vulnerabilities. It’s like an invisible hand guiding the system, sometimes in the wrong direction.
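One basic safeguard against silent changes to a model is to compare the deployed model file against a fingerprint recorded when the model was approved. The sketch below assumes the model is stored as a single file and that the approved SHA-256 digest is kept somewhere the insider cannot edit. The function names `fingerprint` and `is_unmodified` are hypothetical. This detects changes to the stored artifact only, not poisoned training data or changes made through a legitimate retraining process.

```python
import hashlib
import hmac
from pathlib import Path


def fingerprint(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_unmodified(path: Path, approved_digest: str) -> bool:
    """Check the file against the digest recorded at approval time.

    Assumption: approved_digest comes from a trusted, access-controlled
    record (for example, a signed release manifest).
    """
    return hmac.compare_digest(fingerprint(path), approved_digest)
```

A check like this could run at load time or on a schedule, with a mismatch raising an alert for review rather than being silently ignored.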

Lack of Artificial Intelligence Governance

Open AI tools can easily introduce vulnerabilities into your organization without you realizing it. In 2022, research out of Stanford found that participants who used AI-assisted code generators were more likely to produce code with security vulnerabilities. Although not intentionally done, this code could open the company to risk. The reason is that as AI evolves from a technology being tested within specific scenarios to a general-purpose technology, the issues of control, safety, and accountability come into play. There has been some AI development around the world, but effectively governing the use of AI will require collaboration between private and public sectors. The two have different risk tolerance levels around ethical concerns, limiting misuse, managing data privacy, and copyright protection.

Sumanth Kakaraparthi discusses CISOs’ top concerns with implementing Gen AI

Source: Imperva Data Security Webinar, “Trends in Data Security 2023; A Strategic Playbook for CISOs.” December 2023

Reframing AI as a Security Asset

To reframe AI and get the substantial value it is intended to deliver, we first have to keep tabs on how it is developing. The key is to understand its limitations and to use the technology effectively. This will help you understand what it can do for you today and how it can become an asset. You should look at Gen AI as an assistant or facilitator that augments a specific use case. Defining what you are trying to accomplish using AI will allow you to pinpoint the expected benefits and value. From there, you can work backward to identify the threats and risks associated with that specific use case and focus on how you will use the Gen AI results. In the webinar session, the speakers looked at a detailed response use case using this methodology to improve analysts’ ability to query their environment, suggest remediation actions, and speed up the writing of reports.

Navigating The Good and Bad of AI

Generative AI has the potential to aid security teams in identifying risk and creating a logical action plan to mitigate it. But it also has the potential to introduce new risks when users do not understand how it works or where the data they enter is going. Further, machine learning-based models can be manipulated to skew results in the wrong direction. The key to using Gen AI is clearly defining how it will be used, with guardrails to channel AI’s potential and ensure that AI will not leave you battling a tidal wave of threats. These guardrails are more than a protective measure; they enable AI to be used as a force multiplier to enhance your security posture. Implementing robust tools like machine learning-powered behavior models helps organizations extend incident identification beyond static models. This opens up a more comprehensive range of incident detection that is harder to deceive.

Please view the webinar here to learn more.

Upcoming in this Series

Look out for the other blogs in this series, which will discuss:

- Part II: New data risks brought on by adopting Gen AI

- Part III: Regulations and compliance associated with Gen AI

The post Navigating the Waters of Generative AI appeared first on Blog.

*** This is a Security Bloggers Network syndicated blog from Blog authored by brianrobertson. Read the original post at: https://www.imperva.com/blog/navigating-the-waters-of-generative-ai/
