02/28/2024

On February 6, 2024, we announced eight new models that we added to our catalog for text generation, classification, and code generation use cases. Today, we’re back with seventeen (17!) more models, focused on enabling new types of tasks and use cases with Workers AI. Our catalog is now approaching 40 models, so we also decided to revamp our developer documentation so that users can easily search and discover new models.

The new models are listed below, and the full Workers AI catalog can be found in our new developer documentation.

Text generation

- @cf/deepseek-ai/deepseek-math-7b-instruct

- @cf/openchat/openchat-3.5-0106

- @cf/microsoft/phi-2

- @cf/tinyllama/tinyllama-1.1b-chat-v1.0

- @cf/thebloke/discolm-german-7b-v1-awq

- @cf/qwen/qwen1.5-0.5b-chat

- @cf/qwen/qwen1.5-1.8b-chat

- @cf/qwen/qwen1.5-7b-chat-awq

- @cf/qwen/qwen1.5-14b-chat-awq

- @cf/tiiuae/falcon-7b-instruct

- @cf/defog/sqlcoder-7b-2

Summarization

- @cf/facebook/bart-large-cnn

Text-to-image

- @cf/lykon/dreamshaper-8-lcm

- @cf/runwayml/stable-diffusion-v1-5-inpainting

- @cf/runwayml/stable-diffusion-v1-5-img2img

- @cf/bytedance/stable-diffusion-xl-lightning

Image-to-text

- @cf/unum/uform-gen2-qwen-500m

New language models, fine-tunes, and quantizations

Today’s catalog update includes a number of new language models so that developers can pick the best model for their use case. Although most large language models (LLMs) are general enough to handle a wide range of tasks, there are many benefits to choosing models that are tailored for a specific use case. We are excited to bring you some new LLMs, small language models (SLMs), and multi-language support, as well as some fine-tuned and quantized models.

Our latest LLM additions include falcon-7b-instruct, which is particularly exciting because of its innovative use of multi-query attention to generate high-precision responses. There’s also better language support with discolm_german_7b and the qwen1.5 models, which are trained on multilingual data and boast impressive LLM outputs not only in English, but also in German (discolm) and Chinese (qwen1.5). The Qwen models range from 0.5B to 14B parameters and have shown particularly impressive accuracy in our testing. We’re also releasing a few new SLMs, which are growing in popularity because of their ability to do inference faster and cheaper without sacrificing accuracy. For SLMs, we’re introducing small but performant models like a 1.1B parameter version of Llama (tinyllama-1.1b-chat-v1.0) and a 2.7B parameter model from Microsoft (phi-2).

As the AI industry continues to accelerate, talented people have found ways to improve and optimize the performance and accuracy of models. We’ve added a fine-tuned model (openchat-3.5) that implements Conditioned Reinforcement Learning Fine-Tuning (C-RLFT), a technique that enables open-source language model development using easily collectable, mixed-quality data.

We’re really excited to be bringing all these new text generation models onto our platform today. The open-source community has been incredible at developing new AI breakthroughs, and we’re grateful for everyone’s contributions to training, fine-tuning, and quantizing these models. We’re thrilled to be able to host these models and make them accessible to all so that developers can quickly and easily build new applications with AI. You can check out the new models and their API schemas on our developer docs.
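
As a rough sketch of how one of these text generation models might be called, the helper below wraps `ai.run` with a chat-style input. The `askModel` name, the system prompt, and the `{ messages }` input and `{ response }` output shapes are assumptions for illustration, not a confirmed schema; check the model's page in the developer docs before relying on them. The `ai` argument is expected to be a client with a `run(model, inputs)` method, such as the `Ai` instance created in the inpainting example later in this post.

```javascript
// Hypothetical helper: sends a chat-style request to a text generation model.
// Assumes `ai` exposes run(model, inputs) and that the model accepts
// { messages } and returns { response } (confirm against the API schema).
async function askModel(ai, model, question) {
  const inputs = {
    messages: [
      { role: "system", content: "You are a concise, helpful assistant." },
      { role: "user", content: question },
    ],
  };
  const result = await ai.run(model, inputs);
  return result.response;
}
```

Inside a Worker's `fetch` handler this could be called as `await askModel(new Ai(env.AI), "@cf/qwen/qwen1.5-7b-chat-awq", "Wie ist das Wetter?")`. Keeping the model name as a parameter makes it easy to compare several of the new models against the same prompt.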

New image generation models

We are adding new Stable Diffusion pipelines and optimizations to enable powerful new image editing and generation use cases. We’ve added support for Stable Diffusion XL Lightning, which generates high-quality images in just two inference steps. Text-to-image is a really popular task for folks who want to take a text prompt and have the model generate an image based on the input, but Stable Diffusion is actually capable of much more. With this new Workers AI release, we’ve unlocked new pipelines so that you can experiment with different modalities of input and tasks with Stable Diffusion.

You can now use Stable Diffusion on Workers AI for image-to-image and inpainting use cases. Image-to-image allows you to transform an input image into a different image – for example, you can ask Stable Diffusion to generate a cartoon version of a portrait. Inpainting allows users to upload an image and have the model repaint part of it – examples of inpainting include “expanding” the background of photos or colorizing black-and-white photos.
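
Before going into inpainting in detail, here is a minimal sketch of what an image-to-image request might look like. It mirrors the inpainting inputs shown later in this post, minus the mask. The function names are hypothetical, and treating `prompt`, `image`, and `strength` as valid image-to-image inputs is an assumption; the model's page in the developer docs has the actual schema.

```javascript
// Builds the input object for an image-to-image request.
// Field names are borrowed from the inpainting example in this post;
// that they also apply to the img2img model is an assumption.
function buildImg2ImgInputs(prompt, imageArrayBuffer, strength = 0.8) {
  return {
    prompt,
    // Pass the source image as a plain array of byte values.
    image: [...new Uint8Array(imageArrayBuffer)],
    strength, // assumed: how strongly the source image is transformed
  };
}

// Hypothetical wrapper: `ai` is any client exposing run(model, inputs).
async function cartoonize(ai, imageArrayBuffer) {
  const inputs = buildImg2ImgInputs(
    "a cartoon version of this portrait",
    imageArrayBuffer
  );
  return ai.run("@cf/runwayml/stable-diffusion-v1-5-img2img", inputs);
}
```

Separating the input builder from the `ai.run` call keeps the request shape easy to inspect and adjust while you experiment with different prompts and strengths.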

To use inpainting, you’ll need to input an image, a mask, and a prompt. The image is the original picture that you want modified, the mask is a monochrome image that highlights the area that you want to be painted over, and the prompt tells the model what to generate in that space. Below is an example of the inputs and the request template to perform inpainting.

    import { Ai } from '@cloudflare/ai';

    export default {
      async fetch(request, env) {
        // Read the prompt, source image, and mask from the multipart form body.
        const formData = await request.formData();
        const prompt = formData.get("prompt");
        const imageFile = formData.get("image");
        const maskFile = formData.get("mask");

        const imageArrayBuffer = await imageFile.arrayBuffer();
        const maskArrayBuffer = await maskFile.arrayBuffer();

        const ai = new Ai(env.AI);

        // Pass the image and mask as plain arrays of byte values.
        const inputs = {
          prompt,
          image: [...new Uint8Array(imageArrayBuffer)],
          mask: [...new Uint8Array(maskArrayBuffer)],
          strength: 0.8, // Adjust the strength of the transformation
          num_steps: 10, // Number of inference steps for the diffusion process
        };

        const response = await ai.run("@cf/runwayml/stable-diffusion-v1-5-inpainting", inputs);

        // Return the generated image as a PNG.
        return new Response(response, {
          headers: {
            "content-type": "image/png",
          },
        });
      },
    };
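
The Worker above reads `prompt`, `image`, and `mask` from a multipart form, so a client needs to send those three fields. The sketch below shows one way to build such a request. The function names, file names, and `workerUrl` are placeholders for illustration; `FormData`, `Blob`, and `fetch` are standard globals in browsers and in Node.js 18 or later.

```javascript
// Builds the multipart form that the Worker reads via request.formData().
// imageBytes and maskBytes are Uint8Arrays (or ArrayBuffers) of PNG data.
function buildInpaintingForm(prompt, imageBytes, maskBytes) {
  const form = new FormData();
  form.append("prompt", prompt);
  form.append("image", new Blob([imageBytes], { type: "image/png" }), "image.png");
  form.append("mask", new Blob([maskBytes], { type: "image/png" }), "mask.png");
  return form;
}

// Hypothetical client call; workerUrl stands in for your deployed Worker's URL.
async function requestInpainting(workerUrl, form) {
  const res = await fetch(workerUrl, { method: "POST", body: form });
  if (!res.ok) {
    throw new Error(`Inpainting request failed with status ${res.status}`);
  }
  // The Worker responds with the generated PNG bytes.
  return res.arrayBuffer();
}
```

The field names must match the keys the Worker passes to `formData.get`; if you rename one side, rename the other as well.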

New use cases

We’ve also added new models to Workers AI that allow for various specialized tasks and use cases, such as LLMs specialized in solving math problems (deepseek-math-7b-instruct), generating SQL code (sqlcoder-7b-2), summarizing text (bart-large-cnn), and image captioning (uform-gen2-qwen-500m).
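
To give a feel for how two of these task-specific models might be called, here is a short sketch for summarization and image captioning. The helper names are hypothetical, and the input and output field names (`input_text`, `max_length`, and `summary` for summarization; `image`, `prompt`, and `description` for captioning) are assumptions to verify against each model's API schema in the developer docs. As elsewhere, `ai` is any client exposing `run(model, inputs)`.

```javascript
// Hypothetical helper for the summarization model.
// Assumes inputs of { input_text, max_length } and an output of { summary }.
async function summarize(ai, text, maxLength = 256) {
  const result = await ai.run("@cf/facebook/bart-large-cnn", {
    input_text: text,
    max_length: maxLength,
  });
  return result.summary;
}

// Hypothetical helper for the image captioning model.
// Assumes inputs of { image, prompt } and an output of { description }.
async function captionImage(ai, imageArrayBuffer) {
  const result = await ai.run("@cf/unum/uform-gen2-qwen-500m", {
    // Pass the image as a plain array of byte values.
    image: [...new Uint8Array(imageArrayBuffer)],
    prompt: "Describe this image in one sentence.",
  });
  return result.description;
}
```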

We wanted to get these models into your hands right away so you can start building with them, and we’ll be releasing more demos and tutorial content over the next few weeks. Stay tuned to our X account and Developer Documentation for more information on how to use these new models.

Optimizing our model catalog

AI model innovation is advancing rapidly, and so are the tools and techniques for fast and efficient inference. We’re excited to be incorporating new tools that help us optimize our models so that we can offer the best inference platform for everyone. Typically, when optimizing AI inference, it is useful to serialize the model into a format such as ONNX, one of the most generally applicable options for this use case with broad hardware and model architecture support. An ONNX model can be further optimized by being converted to a TensorRT engine. This format, designed specifically for Nvidia GPUs, can result in lower inference latency and higher total throughput from LLMs. Choosing the right format usually comes down to what is best supported by specific model architectures and the hardware available for inference. We decided to leverage both TensorRT and ONNX formats for our new Stable Diffusion pipelines, each of which is a series of models applied to a specific task.

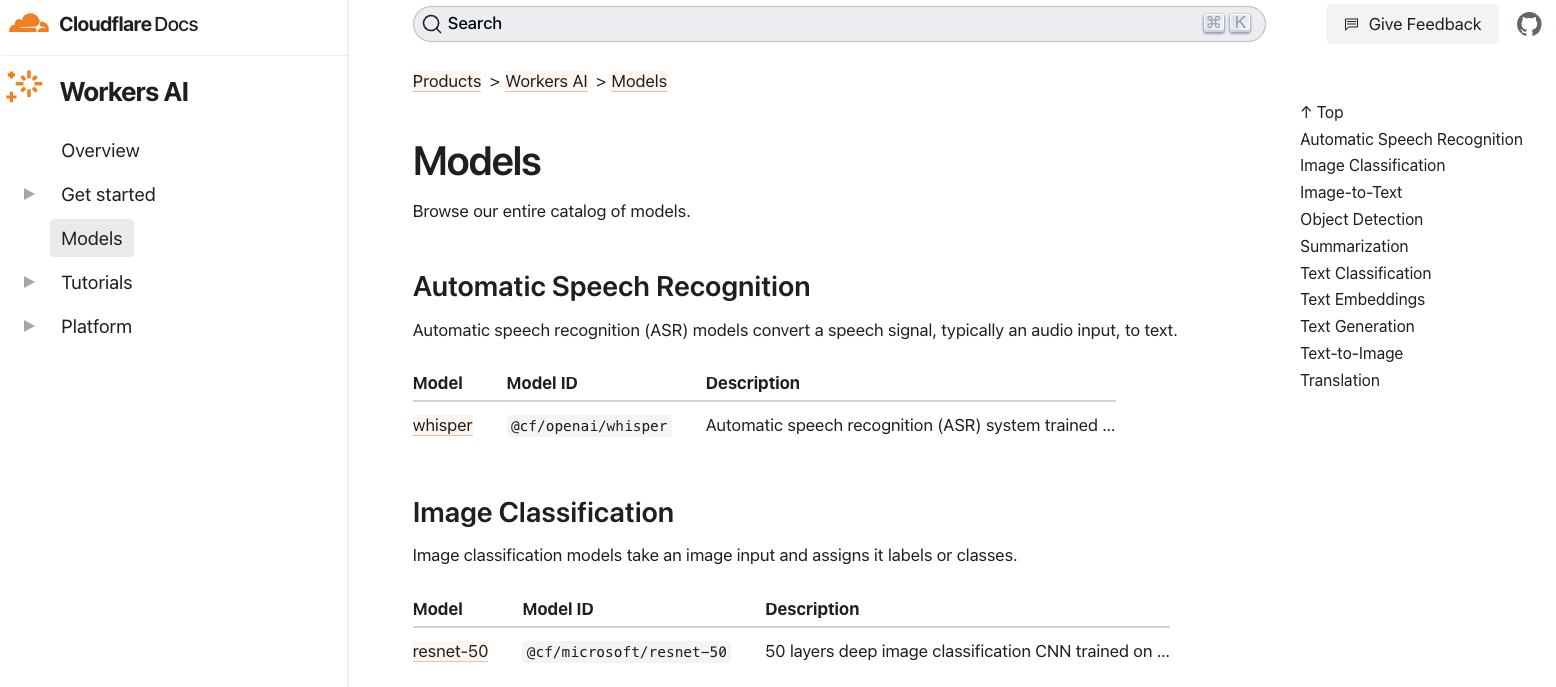

Explore more on our new developer docs

You can explore all these new models in our new developer docs, where you can learn more about individual models, their prompt templates, as well as properties like context token limits. We’ve redesigned the model page to be simpler for developers to explore new models and learn how to use them. You’ll now see all the models on one page for searchability, with the task type on the right-hand side. Then, you can click into individual model pages to see code examples on how to use those models.

We hope you try out these new models and build something new on Workers AI! We have more updates coming soon, including more demos, tutorials, and Workers AI pricing. Let us know what you’re working on and other models you’d like to see on our Discord.
