If ever there was a technology of the moment, it is generative AI. Thanks to the breakout success of OpenAI’s ChatGPT offering, the tech has achieved unprecedented global awareness—from the home user to the corporate boardroom. And although it is already being leveraged by threat actors to scale attacks and probe for vulnerabilities, it can also be a force multiplier for success in a cybersecurity context.

We are in a world where data is exploding, complexity is everywhere, and threat volumes are surging, so much so that human capabilities alone are simply not enough to push back the tide. Against this backdrop, generative AI and the large language models (LLMs) that power it offer the prospect of minimizing security risk, bridging the cyber-skills gap and empowering organizations to tackle cyber attacks head-on.

The Dark Side of Generative AI

Soon after ChatGPT gained prominence in boardrooms, security experts warned that threat actors could exploit it, and that concern has proven valid. Dark web actors are already actively involved: malicious chatbots such as WormGPT and FraudGPT show how the technology could democratize cybercrime, putting it within reach of anyone with nefarious intent.

Threat actors are harnessing the power of generative AI to:

- Create grammatically flawless phishing campaigns at scale and in multiple languages.

- Develop novel malware, lowering the barrier to entry for what was once a highly technical task. The caveat is that the threat actor still needs to know which prompts to use.

- Probe for vulnerabilities and craft highly targeted attacks designed to circumvent organizations’ defenses.

- Produce deepfake audio and video for fraud and to bypass biometric authentication.

- Gather publicly available intelligence on victims in order to target them more effectively with social engineering and other tactics.

But as with most innovations, there are potential applications for both offensive and defensive teams.

What Can Generative AI Do for Security Teams?

Generative AI holds immense potential for enhancing the capabilities of security teams, with positive use cases across many aspects of cybersecurity. Beyond threat intelligence, its applications extend to vulnerability and attack surface management, contributing to a safer digitally connected world. Let’s explore some of the ways generative AI can benefit security teams:

Data exploration and query building: Data is arguably the most important tool at a security analyst’s disposal, and the trick is to make the most of it. Yet it’s difficult to drive continuous improvements in security posture when the vendor solutions already deployed in an environment each describe the same security data differently.

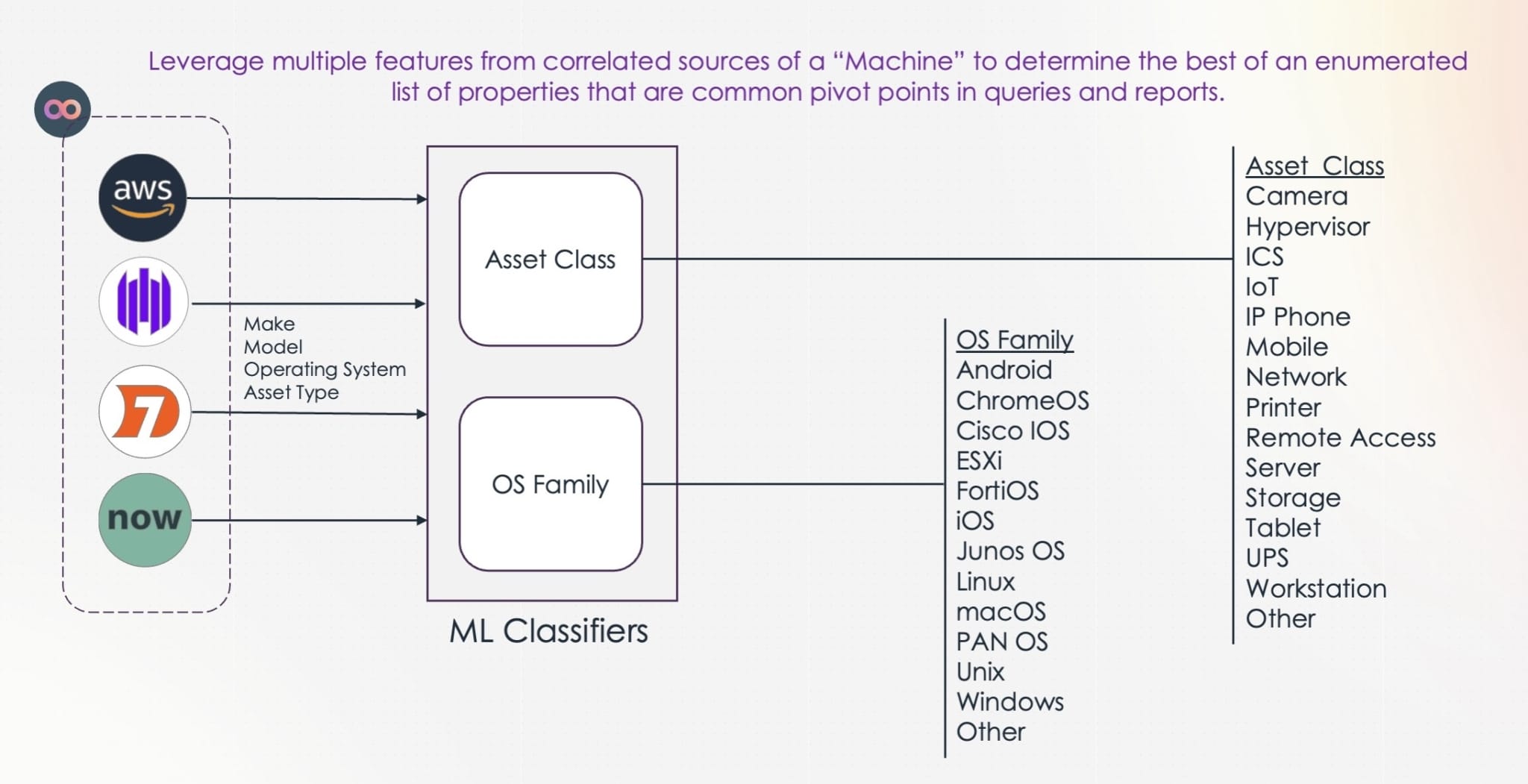

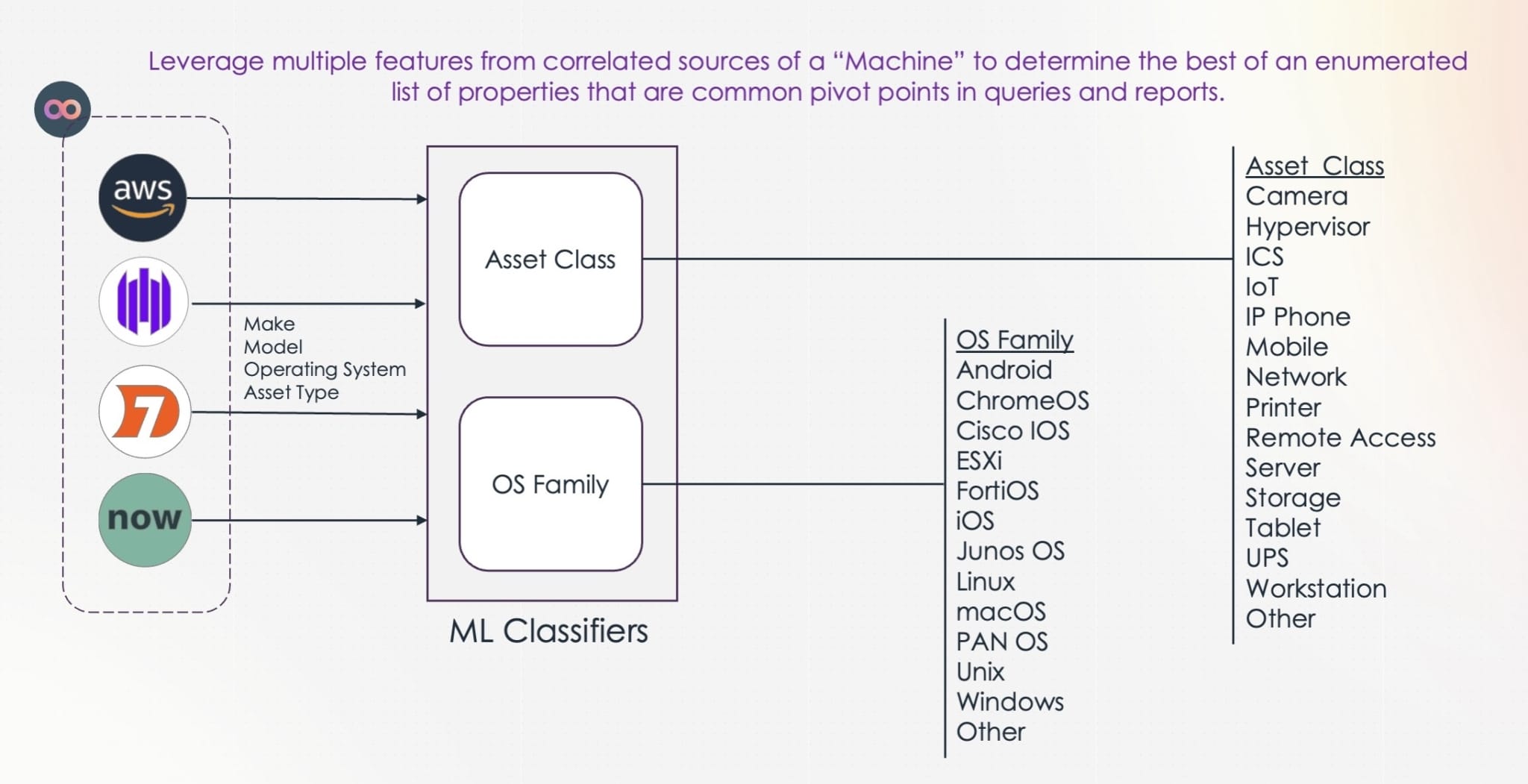

Example: When managing risk across the digital attack surface, analysts are increasingly turning to tools like the Noetic Cyber platform for visibility into their assets and the relationships between them. Noetic recently introduced an LLM that normalizes naming conventions using two ML classifiers, “Asset Class” and “OS Family”, regardless of which combination of asset data sources is in use. With this greater consistency, analysts can build queries across large datasets far more easily and find the information they need to make better security decisions.
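To make the normalization idea concrete, here is a minimal Python sketch. It is not Noetic’s implementation: a hand-written keyword table stands in for the LLM and ML classifier, and the source names, field names and sample records are hypothetical. It only illustrates why a single normalized “OS Family” value lets one query span data sources that each name the same operating system differently.

```python
from collections import Counter

# Hypothetical raw asset records as three different tools might report them.
# Source names, field names and values are illustrative assumptions.
RAW_ASSETS = [
    {"source": "cmdb", "hostname": "web-01", "os": "Microsoft Windows Server 2019 Datacenter"},
    {"source": "edr", "hostname": "web-01", "os": "win2019"},
    {"source": "cloud", "hostname": "db-02", "os": "Ubuntu 22.04 LTS"},
    {"source": "edr", "hostname": "db-02", "os": "linux-ubuntu"},
    {"source": "cmdb", "hostname": "mac-07", "os": "macOS Sonoma 14.2"},
]

# Keyword rules standing in for the ML classifier (an assumption for this sketch).
# macOS is checked first because "darwin" contains the substring "win".
OS_FAMILY_RULES = [
    ("macOS", ("macos", "os x", "darwin")),
    ("Windows", ("windows", "win")),
    ("Linux", ("linux", "ubuntu", "rhel", "debian", "centos")),
]


def os_family(raw_os: str) -> str:
    """Map a vendor-specific OS string to one normalized family name."""
    text = raw_os.lower()
    for family, keywords in OS_FAMILY_RULES:
        if any(keyword in text for keyword in keywords):
            return family
    return "Unknown"


def normalize(assets):
    """Return copies of the records with an added 'os_family' field."""
    return [{**asset, "os_family": os_family(asset["os"])} for asset in assets]


normalized = normalize(RAW_ASSETS)

# One filter now catches every Windows record, whatever each source called it.
windows_records = sorted(
    (a["source"], a["hostname"]) for a in normalized if a["os_family"] == "Windows"
)

# Distinct hosts per OS family, counted across all sources at once.
hosts_per_family = Counter(
    family for family, _host in {(a["os_family"], a["hostname"]) for a in normalized}
)

print(windows_records)
print(dict(hosts_per_family))
```

A plain substring search for “windows” over the raw records would find only the CMDB entry and miss the EDR’s “win2019”; after normalization, a single filter returns both. The need to order the rules carefully (because “darwin” contains “win”) is the kind of brittleness that makes a learned classifier attractive once the number of sources and naming variants grows.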

Vulnerability management: Over 26,000 new CVEs were recorded in the US National Vulnerability Database (NVD) last year, the seventh consecutive year of record figures. Organizations are understandably buckling under the combined weight of new CVEs, legacy bugs they have not been able to patch, and the sheer size and complexity of their asset environments. The prevalence of open-source code and dependencies makes the whole process even more complex.

Generative AI trained on the right datasets could help here by analyzing data on critical systems, in-use software libraries and other entities, and suggesting which product security updates to prioritize for deployment. Such tools could also be used to identify common cloud misconfigurations, another popular attack vector for threat actors. There is also a potential role for generative AI in creating synthetic datasets that could be used to test an organization’s own vulnerability assessment tools and processes.

Strengthening cyber defenses: Generative AI’s ability to produce synthetic data may also be useful for simulating novel attack patterns. These simulations could in turn help train AI- and machine learning-powered security tooling to spot sophisticated, previously unseen threats more accurately, without exposing sensitive corporate data.

Example: Nvidia says it used generative AI to create synthetic spear phishing emails to train its AI-powered security tool. According to the company, the resulting model identified 21% more malicious emails than existing tools.

A co-pilot for threat analysts: Research shows that nearly three-quarters of enterprise security operations (SecOps) teams find it challenging to cut through the noise when analyzing threat intelligence, and 63% say they don’t have enough skilled staff in these roles. Generative AI can help by explaining alerts, decoding complex scripts and recommending actions to less-skilled analysts, which helps bridge the skills gap and boost productivity. Such tools could even enable analysts to build more sophisticated threat-hunting queries.

Example: SentinelOne’s Purple AI delivers rapid, accurate and detailed answers to analysts’ questions posed in plain English, without requiring hours of research and querying for indicators of compromise first. It works as well for specific, known threats as for suspicious activity whose root cause the analyst is trying to find.

Training employees and security teams: Finally, generative AI can improve security posture by creating realistic attack simulations. These can be used in training programs to raise awareness among regular employees and to give cybersecurity staff a way to practice their skills.

A Cybersecurity Ally

We’re only at the start of a fascinating journey, which in time will surely uncover more use cases for generative AI, for both defense and offense. The key for security teams will be to ensure that their own use of such tools is in line with corporate policy. Specialized generative AI applications built on closed LLMs arguably offer stronger privacy and data security guardrails. The last thing a security analyst needs is to unwittingly share corporate secrets with the world through their queries.

*** This is a Security Bloggers Network syndicated blog from Noetic: Cyber Asset Attack Surface & Controls Management authored by Alexandra Aguiar. Read the original post at: https://noeticcyber.com/generative-ai-cybersecurity/