Custom Python operators in SAP Data Intelligence stand out as a versatile tool for developing 2024-1-16 04:21:30 Author: blogs.sap.com(查看原文) 阅读量:13 收藏

Custom Python operators in SAP Data Intelligence stand out as a versatile tool for developing bespoke integrations. The richness of Python’s library ecosystem opens up endless opportunities. This article will delve into how pyodbc, as a robust and versatile ODBC (Open Database Connectivity) technology, enables seamless connections to an extensive array of databases. ODBC technology stands as a testament to interoperability, allowing uniform access to database systems regardless of their type or vendor. By incorporating pyodbc into a custom operator, users can tap into this vast database support, ensuring efficient and flexible data handling within the SAP Data Intelligence environment.

Connect to MS SQL Server using pyodbc

Szenario Architecture

In this example, we illustrate how to connect Python Operators in SAP Data Intelligence to a Microsoft SQL Server database using the pyodbc library. This scenario showcases the practical application of Python’s versatility in the SAP environment, focusing on establishing a reliable and efficient connection to MS SQL Server for data manipulation and retrieval.

To test this szenario, I use a dockerized version of SQL Server from the Dockerhub.

Supported Databases

Numerous databases are compatible with this approach, including but not limited to: Microsoft SQL Server, Oracle Database, MySQL, PostgreSQL, IBM DB2, Teradata, Amazon Redshift, Microsoft Access, SQLite, Informix, MariaDB, SAP HANA, MongoDB, Snowflake, Google BigQuery, Azure SQL Database, FileMaker Pro, InterSystems Cache, and HP Vertica.

Connection Flow

The requirement for a database to be compatible is the availability of matching driver software to interact with it.

1. Create a Dockerfile with drivers

To configure pyodbc for a particular database, begin by installing the necessary drivers into a dockerfile. SAP Data Intelligence Python Operators typically operate on SLES (“SUSE Linux Enterprise Server”) base images. Here, we provide an example for MS SQL Server, though this setup can be adapted for any database driver available. We utilize an OpenSUSE base image equipped with the zypper package manager, noting that the base images provided with SAP Data Intelligence do not include zypper.

Note: Exercise caution with tagging when using non-sap-managed base images.

FROM opensuse/leap:15.3

RUN zypper --non-interactive update

RUN zypper --non-interactive install --no-recommends --force-resolution python39 python39-pip git # Install python3, pip3, git

# install sql server odbc driver

RUN zypper install -y curl

RUN curl -O https://packages.microsoft.com/keys/microsoft.asc

RUN rpm --import microsoft.asc

RUN zypper ar https://packages.microsoft.com/config/sles/15/prod.repo

RUN ACCEPT_EULA=Y zypper install -y msodbcsql17

# add vflow user

RUN groupadd -g 1972 vflow && useradd -g 1972 -u 1972 -m vflow

USER 1972:1972

WORKDIR /home/vflow

ENV HOME=/home/vflow

# install additional python packages as usual

RUN python3.9 -m pip --no-cache-dir install 'pyodbc' --userIn the demonstrated code, we utilize a widely available base image, enabling you to debug this image on your local machine as well. The process begins with installing Python and other essentials. Then, we proceed to install the actual ODBC driver using the zypper package manager. This includes adding the Microsoft repository and importing its key to ensure functionality. It’s important to be mindful that drivers are often proprietary software and are subject to specific licensing agreements.

Since we are using a custom image, we need to add the vflow user, set the workdir and specify a environment variable. Additionally we can install any python packages as we are used to in the Dockerfiles. In this case, we of course install pyodbc.

Regarding the base image, we need to tag is with ‘python36’ and ‘tornado’ as the minimum requirements for use in the subengine. See the content of the Tags.json file.

Note: For further background, you can view the setup for the $com.sap.sles.base image under dockerfiles/com/sap/sles/base in the system, to understand how it aligns with the SAP Data Intelligence environment.

{

"pythonsqlserverodbc": "",

"python36": "",

"tornado": "5.0.2"

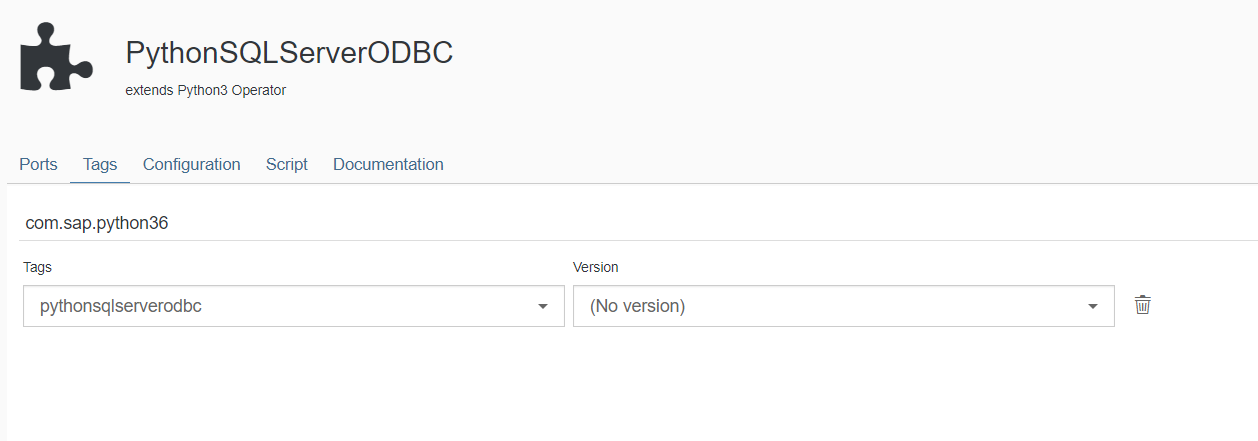

}2. Create a Custom Python Operator with the matching Tag

The next step is to create a new python custom operator.

Custom Operator on Python3 basis

A key aspect in this stage is to assign the tag we created in the Dockerfile to our new custom Python operator. After assigning the tag, we then proceed to populate the operator script with our Python implementation.

import pyodbc

sqlserver_connection = api.config.http_connection

api.logger.info(str(sqlserver_connection))

def connect_sqlserver():

# use pyodbc with the driver name of choice

driver_name = "ODBC Driver 17 for SQL Server"

host = sqlserver_connection["connectionProperties"]["host"]

port = sqlserver_connection["connectionProperties"]["port"]

server = f"{host},{port}"

user = sqlserver_connection["connectionProperties"]["user"]

password = sqlserver_connection["connectionProperties"]["password"]

conn = pyodbc.connect(f"DRIVER={driver_name};SERVER={server};UID={user};PWD={password}")

# sample sql query

cur = conn.cursor()

cur.execute("SELECT GETDATE() AS CurrentTimestamp;")

for row in cur:

api.logger.info(str(row))

cur.close()

conn.close()

api.add_generator(connect_sqlserver)This is a straightforward example demonstrating how to connect to the database and execute a sample SQL query. For specific examples of different databases, see the wiki of pyodbc.

Upon successful execution, a current timestamp will be visible in the logs. In this instance, I’ve adapted an HTTP connection type, assigned through the operator’s configuration, to convey certain configuration details. While not mandatory (as you could hardcode connection details), employing this method enhances clarity. For reference, here’s the JSON for the configSchema.json of the custom operator:

{

"$schema": "http://json-schema.org/draft-06/schema#",

"$id": "http://sap.com/vflow/PythonSQLServerODBC.configSchema.json",

"type": "object",

"properties": {

"codelanguage": {

"type": "string"

},

"scriptReference": {

"type": "string"

},

"script": {

"type": "string"

},

"http_connection": {

"title": "HTTP Connection",

"description": "HTTP Connection",

"type": "object",

"properties": {

"configurationType": {

"title": "Configuration Type",

"description": "Configuration Type",

"type": "string",

"enum": [

" ",

"Configuration Manager",

"Manual"

]

},

"connectionID": {

"title": "Connection ID",

"type": "string",

"format": "com.sap.dh.connection.id"

},

"connectionProperties": {

"title": "Connection Properties",

"description": "Connection Properties",

"$ref": "http://sap.com/vflow/com.sap.dh.connections.http.schema.json",

"sap_vflow_constraints": {

"ui_visibility": [

{

"name": "configurationType",

"value": "Manual"

}

]

}

}

}

}

},

"required": [

"http_connection"

]

}In summary, we enhance the configuration by introducing a new property called “http_connection,” which points to a connection of the same type within the SAP Data Intelligence connection management application. While other connection types may be suitable, I find the HTTP connection to be a convenient way to store various types of credentials. If needed, you can even store custom JSON data in the connection manager fields to achieve your desired outcome. The HTTP connection is particularly advantageous when connecting through the SAP Cloud Connector since it supports it as a gateway type.

3. Create Connection

Create HTTP Connection to store credentials

4. Test the new operator

Finally we can embed this operator into a graph, fill the http_connection property in the operator with our created “SQLSERVER_CONNECTION” and run it.

Custom ODBC Operator in graph

(datetime.datetime(2024, 1, 13, 14, 30, 15, 790000),)Examining the log output, it’s evident that we’ve effectively gained access to the database within an SAP Data Intelligence custom operator using ODBC.

We hope you found this blog enjoyable! Please don’t hesitate to reach out if you have any questions.

如有侵权请联系:admin#unsafe.sh