2024-1-2 00:42:56 | Author: blogs.sap.com

OpenAI’s Dev Day on November 6th, 2023, marked a significant milestone in the field of artificial intelligence. The event introduced a suite of new AI services, including GPTs, the GPT Store, GPT-4 Turbo, and the Assistants API. In my opinion, the most exciting announcement at the developer conference was the Assistants API. If you’re interested, you can watch the Dev Day session that focuses on the Assistants API by following this link.

“We believe that if you give people better tools, they will do amazing things. We know that people want AI that is smarter, more personal, more customizable, can do more on your behalf. Eventually, you’ll just ask the computer for what you need, and it’ll do all of these tasks for you.” – Sam Altman – CEO, OpenAI

An Overview of the Assistants API

The Assistants API is a powerful tool that enables developers to build AI assistants within their apps. An Assistant has instructions and can leverage models, tools, and knowledge to respond to user queries. The API currently supports three types of tools: Code Interpreter, Retrieval, and Function calling. It is designed to help developers create their own assistive AI apps that have goals and can call models and tools. Explore further in the OpenAI documentation.

In this blog post, I will show you how to build an AI agent with OpenAI’s new Assistants API using the Retrieval tool. This tool simplifies setting up a Q&A system based on a custom knowledge base. With it, an assistant can retrieve information, answer questions, and provide recommendations drawn from your own documents.

To quote the OpenAI documentation:

Retrieval augments the Assistant with knowledge from outside its model, such as proprietary product information or documents provided by your users. Once a file is uploaded and passed to the Assistant, OpenAI will automatically chunk your documents, index and store the embeddings, and implement vector search to retrieve relevant content to answer user queries.

The beauty of the Retrieval tool lies in its simplicity: you do not handle chunking, embeddings, or a vector database yourself. You don’t need to write any code for these processes; everything is abstracted behind the Assistants API.
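To make concrete what the Retrieval tool takes off your hands, here is a toy sketch of the chunk, index, and search pipeline. It is not how OpenAI implements retrieval: plain word counts stand in for real embeddings, and the function names (`chunk_text`, `embed`, `search`) and the sample movie text are invented for illustration.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 8) -> list[str]:
    """Split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system uses a model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, chunks: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vector, embed(c)), reverse=True)
    return ranked[:top_k]


# Hypothetical knowledge base content, invented for this sketch.
document = (
    "Movie Alpha is a space adventure about a lost crew. "
    "Movie Beta is a courtroom drama set in Paris."
)
chunks = chunk_text(document)
best = search("Which movie is a courtroom drama?", chunks)
print(best[0])
```

With the Assistants API, all of this happens on OpenAI’s side after the file is uploaded and attached to the Assistant.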

It’s a great way to integrate AI into business processes, helping organizations improve efficiency, automate tasks, and respond to users’ queries faster and more accurately.

Lastly, a word on data and privacy concerns related to the Assistants API.

As Sam Altman said at DevDay…

We don’t train on your data from the API, or ChatGPT Enterprise, ever

How does the Assistants API work?

Before we dive into the code, let’s first take a high-level overview of building on the Assistants API. There are several new components to understand:

Assistants:

An Assistant is a purpose-built AI that leverages OpenAI’s models and invokes tools. It operates on a customized set of instructions that define the range of actions it can perform to respond to user queries.

Tools:

Tools are either OpenAI-hosted, such as Code Interpreter and Knowledge Retrieval, or custom tools that you construct yourself through Function calling.

Threads:

A Thread is a conversation session between an Assistant and a user, to which messages can be added, creating an interactive session. Threads store Messages and automatically handle truncation to ensure content fits within a model’s context.

Messages:

A Message is a single entry in a Thread, created by either the user or the Assistant. Each message has a role (user/assistant) and content, which can include text, files, and images.

Running the Assistant:

Finally, we run the Assistant to process the Thread, call certain tools if necessary, and generate the appropriate response.

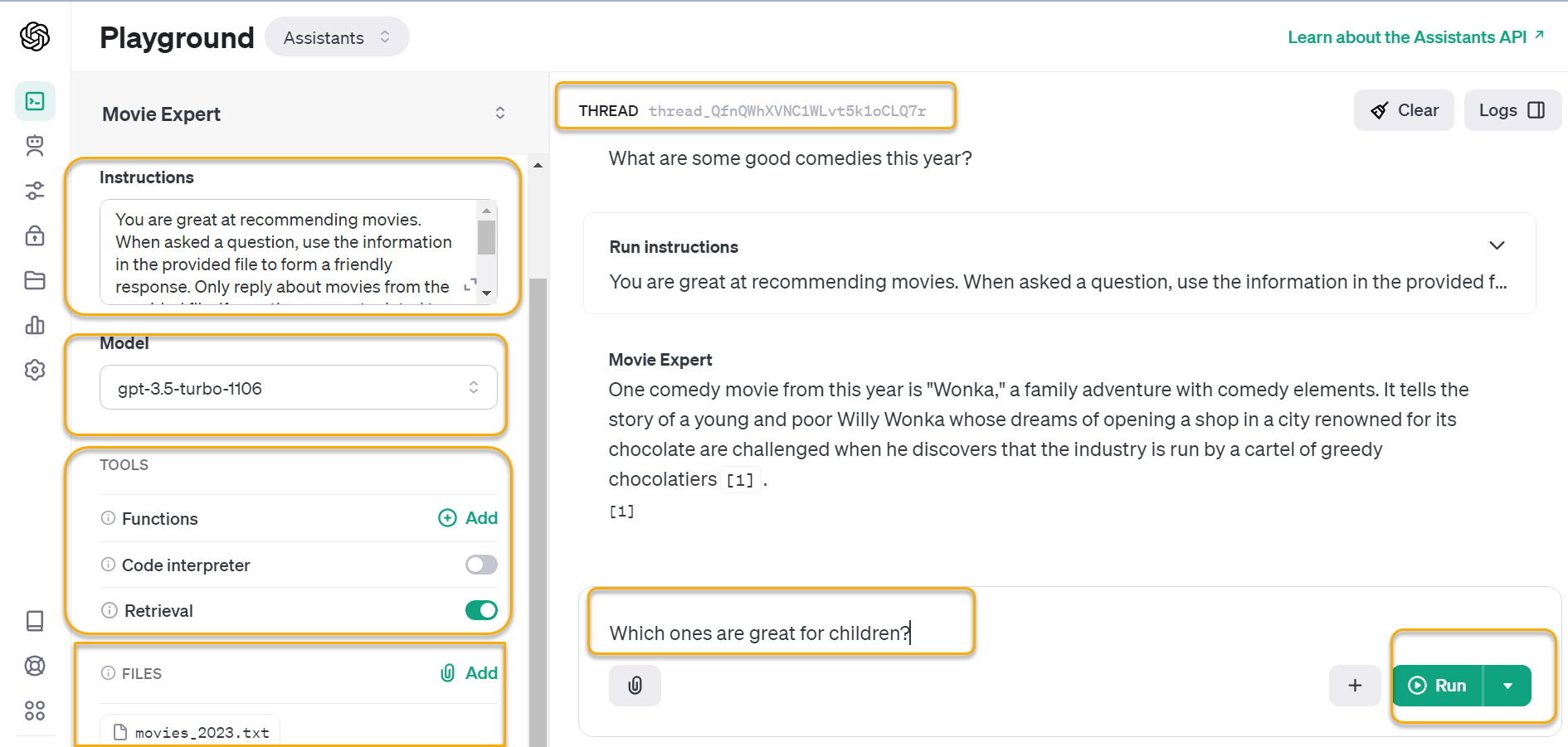

The Assistants API is in beta and the OpenAI team is actively working on adding more functionality. You can explore the capabilities of the Assistants API using the Assistants playground or by building a step-by-step integration outlined in this blog.
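As a rough orientation, the components above map onto REST resources. The sketch below lists the endpoints and the `OpenAI-Beta: assistants=v1` header as documented for the beta at the time of writing; they may change as the API evolves. The names `ENDPOINTS`, `beta_headers`, and `resolve` are illustrative helpers, not part of any library.

```python
from __future__ import annotations

BASE_URL = "https://api.openai.com/v1"

# (HTTP method, path template) for each building block described above.
ENDPOINTS = {
    "create_assistant": ("POST", "/assistants"),
    "create_thread": ("POST", "/threads"),
    "create_message": ("POST", "/threads/{thread_id}/messages"),
    "create_run": ("POST", "/threads/{thread_id}/runs"),
    "retrieve_run": ("GET", "/threads/{thread_id}/runs/{run_id}"),
    "list_messages": ("GET", "/threads/{thread_id}/messages"),
}


def beta_headers(api_key: str) -> dict[str, str]:
    """Headers sent with every Assistants API call in the beta."""
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
        "OpenAI-Beta": "assistants=v1",
    }


def resolve(name: str, **ids: str) -> tuple[str, str]:
    """Return (method, full URL) for a named operation."""
    method, template = ENDPOINTS[name]
    return method, BASE_URL + template.format(**ids)


# Placeholder IDs for illustration only.
print(resolve("retrieve_run", thread_id="thread_abc", run_id="run_123"))
```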

Motivation:

This blog post shows how to integrate OpenAI’s Assistants API into SAP Cloud Integration. Most code samples and blog posts on this subject use Python or Node.js, so I aim to offer an alternative approach. As in my previous blogs, I want to explore unconventional ways to use the built-in capabilities of SAP Cloud Integration and push the boundaries of what can be achieved within this framework.

Hands-On: Getting Started with OpenAI’s Assistants API and SAP Cloud Integration

Now that you have a basic understanding of the OpenAI Assistants API, let’s dive into a practical use case. This section will guide you through the process of creating a Movie Expert assistant that can answer questions and provide recommendations based on a custom knowledge base from a movies_2023.txt file.

This demo or prototype serves as an example of how OpenAI’s Assistants API can be leveraged within a web application to create interactive and engaging user experiences.

Please note that I have built only the backend API, which any frontend framework (Vanilla JS, React, SAP UI5, SAP Build Apps, etc.) can consume to create the chat interface. In place of a frontend, I will use Postman to show all the APIs that a frontend would call.

You can download the complete integration package from GitHub at this link: Download. The full source code and all other necessary files are included in the download. Everything you need is also available in the GitHub repository openai-assistant-sap-integration.

Knowledge Retrieval Tool Flow Diagram

When implementing a technical solution, it helps to have a visual flow that illustrates the interaction between the main components of the backend being developed. The flow diagram below outlines the five key steps in implementing the assistant, from the initial file upload to the generation of the final response.

Knowledge Bot Backend APIs

The steps are as follows:

- Create your own assistant with instructions.

- Upload your files to OpenAI.

- Assign the file to an assistant.

- Create a new thread.

- Create a new message in the message thread with the user’s query.

Let’s take a closer look at each of these steps.

Prerequisites

1. Create an OpenAI account or sign in. Then navigate to the API key page and select “Create new secret key”. Copy the API key to your clipboard for use in steps 4 and 6.

2. Download the integration package ZIP file from the GitHub repo and import the package into your tenant.

3. Create an OAuth client (Client Credentials grant type) on the tenant with service plan ‘integration-flow’.

   - Navigate to the SAP BTP cockpit of your Cloud Foundry subaccount, go to ‘Services’, then ‘Service Marketplace’, and select ‘Process Integration’.

   - Create a new instance with service plan ‘integration-flow’ and the following configuration:

     - Grant-types: ‘client_credentials’

     - Roles: ESBMessaging.send

   - Create a Service Key and copy the entire JSON text to your clipboard for use in step 7, ‘Configure Environments’.

   - For the Neo environment, refer to steps 1 and 2 in the Neo documentation. Assign the roles mentioned above to the user ‘oauth_client_’. Copy the Token Endpoint (found in the Branding tab), Client ID, and Client Secret to your clipboard for use in step 7, ‘Configure Environments’. [Subscription: select the iflmap node]

4. Create the User Credentials.

   - In Manage Security Material, create a Secure Parameter for the OpenAI API key.

5. Download the Postman collection ZIP file from the GitHub repo. Unzip it and import the collections and environment into Postman.

6. Configure Collections Variables. ⚠️ Put in current value.

   - The Retrieval tool supports either the gpt-3.5-turbo-1106 or the gpt-4-1106-preview model. For free trial or testing purposes, use the gpt-3.5-turbo-1106 model. For users on a paid plan, OpenAI recommends the latest model for best results and maximum compatibility with tools.

7. Configure Environments: update the environment variables with the credentials generated in step 3 to connect to the HTTP endpoint of your SAP Cloud Integration tenant. ⚠️ Put in current value.

Note: We will be referring to the starter guide on OpenAI documentation at https://platform.openai.com/docs/assistants/overview/assistants-playground for guidance on each step involved in constructing the Knowledge Bot backend API.

Step 1: Create your own assistant with instructions

Use the Postman request Create assistant to create the assistant with a name and the necessary instructions. This corresponds to https://platform.openai.com/docs/assistants/overview/step-1-create-an-assistant in the OpenAI documentation.

Copy the assistant_id; it is required in later steps.

You will see the assistant being created on https://platform.openai.com/assistants
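For readers who want to see what such a request looks like outside Postman, here is a minimal sketch that builds (but does not send) the call with Python’s standard library. The instructions text and the API key placeholder are assumptions for illustration; the actual values used in this blog live in the Postman collection.

```python
from __future__ import annotations

import json
import urllib.request


def build_create_assistant_request(api_key: str, model: str) -> urllib.request.Request:
    """Build (but do not send) the POST /v1/assistants request."""
    payload = {
        "name": "Movie Expert",
        # Hypothetical instructions, written for this sketch only.
        "instructions": "You are a movie expert. Answer questions using the uploaded file.",
        "model": model,
        # Enables the Retrieval tool for this assistant (beta v1 tool name).
        "tools": [{"type": "retrieval"}],
    }
    return urllib.request.Request(
        "https://api.openai.com/v1/assistants",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "OpenAI-Beta": "assistants=v1",
        },
        method="POST",
    )


request = build_create_assistant_request("sk-PLACEHOLDER", "gpt-3.5-turbo-1106")
# To send it: urllib.request.urlopen(request). The JSON response carries the
# assistant's "id", which is the assistant_id needed in later steps.
```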

Step 2: Upload your files to OpenAI

Use the Postman request Upload file to upload your file.

Copy the file_id; it is required in later steps.

Assistants can access Files in various formats, either during their creation or as part of threads between Assistants and users. Each Assistant can accommodate a maximum of 20 files, with each file size capped at 512 MB.

You will see the file on https://platform.openai.com/files
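The upload is a multipart/form-data POST to /v1/files with the form field `purpose` set to `assistants`. The sketch below assembles such a body by hand with the standard library and checks the 512 MB limit mentioned above before doing so. The helper name `build_upload_body` and the sample file content are made up for illustration.

```python
from __future__ import annotations

import uuid

MAX_FILE_BYTES = 512 * 1024 * 1024  # 512 MB per file, as stated above


def build_upload_body(filename: str, content: bytes,
                      purpose: str = "assistants") -> tuple[bytes, str]:
    """Return (body, content_type) for a multipart POST to /v1/files."""
    if len(content) > MAX_FILE_BYTES:
        raise ValueError("file exceeds the 512 MB limit")
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="purpose"\r\n\r\n'
        f"{purpose}\r\n"
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        f"Content-Type: application/octet-stream\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return head + content + tail, f"multipart/form-data; boundary={boundary}"


# Invented sample content standing in for movies_2023.txt.
body, content_type = build_upload_body("movies_2023.txt", b"Movie Alpha\n")
# POST `body` to https://api.openai.com/v1/files with the Content-Type header
# set to `content_type` and the usual Authorization: Bearer header.
```

The `id` field of the JSON response is the file_id used in Step 3.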

Step 3: Assign the file to an assistant

Use the Postman request Create assistant file to assign the file to your assistant. Pass assistant_id as the path parameter and file_id in the body.
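In the beta (v1) API this is a POST to /v1/assistants/{assistant_id}/files. The following sketch builds the request without sending it; the IDs and the API key are placeholders, not real values.

```python
from __future__ import annotations

import json
import urllib.request


def build_attach_file_request(api_key: str, assistant_id: str,
                              file_id: str) -> urllib.request.Request:
    """Build (but do not send) the request that attaches a file to an assistant."""
    return urllib.request.Request(
        # assistant_id goes into the path ...
        f"https://api.openai.com/v1/assistants/{assistant_id}/files",
        # ... and file_id into the JSON body.
        data=json.dumps({"file_id": file_id}).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "OpenAI-Beta": "assistants=v1",
        },
        method="POST",
    )


# Placeholder IDs for illustration only.
request = build_attach_file_request("sk-PLACEHOLDER", "asst_abc123", "file-abc123")
```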

Step 4: Create a new thread

Use the Postman request Create thread to create a new thread. This corresponds to https://platform.openai.com/docs/assistants/overview/step-2-create-a-thread in the OpenAI documentation.

Copy the thread_id; it is required in later steps.

Step 5: Create a new message in the message thread with the user’s query

Now go to the integration flow Knowledge Bot and configure the external parameters. Set the name of the credential created in Security Material that stores the OpenAI API key. Deploy the iflow.

Use the Postman request Create message to add the user’s message to the thread. Pass assistant_id and thread_id as path parameters and the user’s message in the body (text only). ⚠️ Get a new access token from the Authorization tab before creating a message.
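Behind Postman’s “Get New Access Token” button is a standard OAuth 2.0 client credentials request. The sketch below builds that request from a service key. It assumes the service key JSON has an `oauth` object with `clientid`, `clientsecret`, and `tokenurl` fields; check your own service key, since the layout is an assumption here and the sample values are invented.

```python
from __future__ import annotations

import base64
import json
import urllib.parse
import urllib.request


def build_token_request(service_key_json: str) -> urllib.request.Request:
    """Build (but do not send) an OAuth 2.0 client credentials token request."""
    oauth = json.loads(service_key_json)["oauth"]  # assumed service key layout
    credentials = f"{oauth['clientid']}:{oauth['clientsecret']}".encode("utf-8")
    return urllib.request.Request(
        oauth["tokenurl"],
        data=urllib.parse.urlencode({"grant_type": "client_credentials"}).encode("utf-8"),
        headers={
            "Authorization": "Basic " + base64.b64encode(credentials).decode("ascii"),
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


# Invented service key values for illustration only.
sample_service_key = json.dumps({
    "oauth": {
        "clientid": "my-client",
        "clientsecret": "my-secret",
        "tokenurl": "https://example.invalid/oauth/token",
    }
})
request = build_token_request(sample_service_key)
# The JSON response contains "access_token"; send it to the iflow endpoint as
# "Authorization: Bearer <access_token>".
```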

The Integration iflow under the hood will perform the following steps as per the OpenAI documentation at https://platform.openai.com/docs/assistants/overview/step-3-add-a-message-to-a-thread.

- Step 3: Add a Message to a Thread

- Step 4: Run the Assistant

- Step 5: Check the Run status

- Step 6: Display the Assistant’s Response

The corresponding Process Call in the integration flow that handles the above steps is marked below.

Step 5 is where it gets tricky. You can read about the run lifecycle in the OpenAI docs: https://platform.openai.com/docs/assistants/how-it-works/runs-and-run-steps.
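The tricky part is that a run is asynchronous: after creating it, you have to poll its status until it reaches a terminal state. The integration flow does this with its own steps; the Python sketch below only illustrates the idea. The status fetcher is injected as a callable (in practice a GET on /v1/threads/{thread_id}/runs/{run_id} that returns the `status` field), and the function name `wait_for_run` and its defaults are assumptions made for this example.

```python
from __future__ import annotations

import time
from typing import Callable

# Run statuses after which polling can stop.
TERMINAL_STATUSES = {"completed", "failed", "cancelled", "expired"}


def wait_for_run(get_status: Callable[[], str],
                 interval_s: float = 1.0,
                 timeout_s: float = 60.0,
                 sleep: Callable[[float], None] = time.sleep,
                 clock: Callable[[], float] = time.monotonic) -> str:
    """Poll get_status() until the run finishes or the timeout elapses."""
    deadline = clock() + timeout_s
    while True:
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        if status == "requires_action":
            # Only relevant with Function calling; hand control back to the caller.
            return status
        if clock() >= deadline:
            raise TimeoutError(f"run still {status!r} after {timeout_s} s")
        sleep(interval_s)


# Simulated status sequence instead of real HTTP calls.
simulated = iter(["queued", "in_progress", "in_progress", "completed"])
final_status = wait_for_run(lambda: next(simulated), sleep=lambda _seconds: None)
print(final_status)
```

Only when the status is `completed` does it make sense to read the thread’s messages for the Assistant’s answer.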

If the parameter Enable Logging is set to true, you will be able to see all the API responses for Step 3 to Step 6.
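The Step 6 response is a list of message objects, newest first by default, where each message’s `content` is a list of parts. As a hedged illustration of how a consumer could pull out the answer, the sketch below extracts the text of the most recent assistant message. The sample response is trimmed to the fields used here and its text is invented.

```python
from __future__ import annotations


def latest_assistant_text(messages_response: dict) -> str | None:
    """Return the text of the newest assistant message, or None if there is none."""
    for message in messages_response.get("data", []):  # newest first by default
        if message.get("role") != "assistant":
            continue
        texts = [
            part["text"]["value"]
            for part in message.get("content", [])
            if part.get("type") == "text"
        ]
        if texts:
            return "\n".join(texts)
    return None


# Trimmed, invented example of a "list messages" response.
sample_response = {
    "object": "list",
    "data": [
        {
            "role": "assistant",
            "content": [
                {"type": "text", "text": {"value": "Try Movie Beta.", "annotations": []}}
            ],
        },
        {
            "role": "user",
            "content": [
                {"type": "text", "text": {"value": "Suggest a drama.", "annotations": []}}
            ],
        },
    ],
}
answer = latest_assistant_text(sample_response)
print(answer)
```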

Refer to the Postman examples named “Movie Suggestions” to see how the Assistants API responded to the various recommendation queries I sent.

Some key points to consider

- If you’re on a free account, be careful about the number of times you hit Send since you might reach the OpenAI rate limits.

- I have included examples for each Postman request discussed above. Please refer to them in case of any doubts.

- While testing with a free account using the gpt-3.5-turbo-1106 model, there were instances of incorrect results or hallucinations. Testing with a paid account using gpt-4-1106-preview provided accurate results in most cases.

- When testing with a free account, avoid uploading large documents so that you do not quickly exceed the $5 credit limit. During my testing, I was able to send a substantial number of user messages within the free credit limit.

- To ensure the Assistant meets its intended purpose and responds effectively to user messages, provide clear instructions. You can define instructions in two places: during the creation of the Assistant and during the creation of a run (refer to Local Integration Process -> Run the Assistant -> Set Run Instructions in the integration flow).

- The accuracy and efficiency of the Assistant’s responses depend on the quality and relevance of the data, i.e., the file you upload. Optimize your data to achieve the best results.

- Test and iterate over instructions to improve the performance and response of the Assistant.

- Before using the Assistants API on a paid plan, make sure to familiarize yourself with the pricing details outlined by OpenAI.
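On the point above about defining instructions in two places: in the beta (v1) API, an `instructions` value passed when creating a run overrides the Assistant’s own instructions for that run. The sketch below shows the two payloads side by side and a small helper that mirrors this precedence. The instruction texts, the placeholder ID, and the helper name `effective_instructions` are invented for illustration.

```python
from __future__ import annotations

# Body for creating the assistant (instructions defined once, up front).
assistant_payload = {
    "name": "Movie Expert",
    "instructions": "You are a movie expert. Answer from the uploaded file.",
    "model": "gpt-3.5-turbo-1106",
    "tools": [{"type": "retrieval"}],
}

# Body for creating a run (instructions here apply to this run only).
run_payload = {
    "assistant_id": "asst_abc123",  # placeholder ID
    "instructions": "Answer in at most three sentences.",
}


def effective_instructions(assistant: dict, run: dict) -> str:
    """Run-level instructions, if present, replace the assistant's for that run."""
    return run.get("instructions") or assistant["instructions"]


print(effective_instructions(assistant_payload, run_payload))
```

Because the run-level value replaces rather than extends the Assistant’s instructions, repeat anything from the original instructions that should still apply.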

Limitations

- Refer to the OpenAI documentation to understand the limitations. Please note that these limitations are subject to change as the API is still in beta and OpenAI is actively working on adding more functionality.

- Even though you no longer send the entire history each time, you are still charged for the tokens of the entire conversation history with each Run. Refer to the community post. I have implemented a workaround that automatically deletes threads that are 7 days old, preventing them from accumulating extensive history.
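The cleanup idea can be sketched as follows. This is not the implementation from the integration package; it assumes you keep your own record of each thread’s `id` and `created_at` (a Unix timestamp, as returned when the thread is created), and the helper names are hypothetical. Each selected thread would then be removed with a DELETE on /v1/threads/{thread_id}.

```python
from __future__ import annotations

SEVEN_DAYS_S = 7 * 24 * 60 * 60


def threads_to_delete(threads: list[dict], now: float,
                      max_age_s: int = SEVEN_DAYS_S) -> list[str]:
    """Return the IDs of threads created at least max_age_s seconds ago."""
    return [t["id"] for t in threads if now - t["created_at"] >= max_age_s]


def delete_thread_url(thread_id: str) -> str:
    """URL to call with HTTP DELETE to remove one thread."""
    return f"https://api.openai.com/v1/threads/{thread_id}"


# Invented bookkeeping records for illustration only.
now = 1_700_000_000
known_threads = [
    {"id": "thread_old", "created_at": now - 8 * 24 * 60 * 60},
    {"id": "thread_new", "created_at": now - 1 * 24 * 60 * 60},
]
for thread_id in threads_to_delete(known_threads, now):
    print("DELETE", delete_thread_url(thread_id))
```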

Conclusion

OpenAI’s Assistants API is a significant step forward in making complex AI functionalities more accessible and practical for developers. By integrating a knowledge base directly into an AI assistant, we can create a dynamic, responsive, and highly intelligent system that can provide specific, sourced information on demand. This approach is much simpler and more straightforward than the previous method of manually chunking text and creating embeddings, storing them in a vector database, and implementing vector search to return relevant sections according to a user query’s embeddings.

I hope you enjoyed this sneak peek into the Assistants API and that it has sparked your curiosity and excitement about the possibilities ahead. Please note that the API is still in beta, so be sure to keep up with the latest developments by checking the Assistants API docs frequently. Thank you and happy building!

If you have any questions, feel free to ask in the comment section. If you find the blog helpful, please like and share it with your SAP colleagues.
