The EU GDPR has been enforceable since May 2018. So many may think: “Not again. This topic has already been discussed so many times. Why again and again?”

The answer is more than obvious: GDPR is a kind of “never ending story”. Any change to existing business processes, a transition to the cloud, archiving, or a new system or service will require a review of GDPR conformity if personal data of any data subject is used.

This blog post mainly concerns:

- transition to S/4HANA (both on premise and PCE)

- large installations with multiple SAP systems, non-SAP systems, BTP services, multi-cloud and multi-provider setups, and Master Data Governance

- move to one SAP S/4HANA

What topics need to be considered:

- Controlling instance for blocking and destruction

- Notification communication between systems

- Archiving solutions for GDPR

- Migration topics

- Shutdown / decommissioning of legacy systems after migration

Disclaimer

I need to repeat: this blog post is not legal advice. It considers the technical aspects of GDPR architectures only. In any case, the data protection officer, the LoB staff working with personal data, and a law firm or consultancy need to be involved in any process that concerns GDPR topics. The information below is based on experience and on information collected in discussions with customers from different industries. By no means is this intended as “the” ultimate solution for GDPR or any archiving topic. It is a collection of solutions that are currently in use and of the methods used to achieve a compliant archiving and reporting solution. Whether a solution fits a specific use case needs to be analysed and verified individually by a specialised architect.

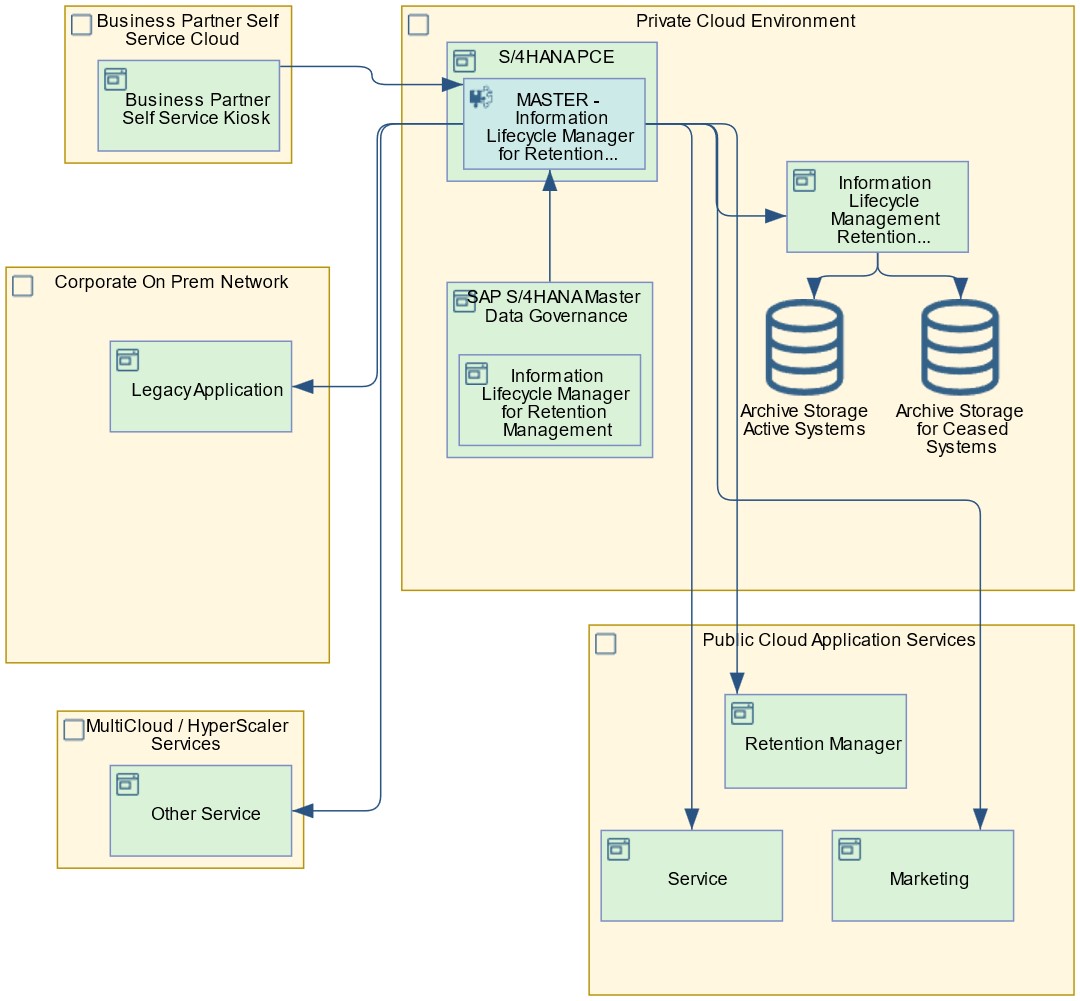

Example of a typical application architecture

Example Architecture

The example application architecture illustrated above is a typical reference, as shown by many customers.

A common scenario

Let’s assume we are in a transformation to the cloud:

We are already using some cloud services such as SAP Ariba, SAP SuccessFactors, and SAP Sales and Service Cloud.

We are getting additional services from two different hyperscalers.

The intention is now to move our existing on-premise SAP Business Suite step by step into a PCE system, add more services on BTP, and, after a cool-down period, shut down the on-premise systems.

Later we will analyze where to look out for GDPR and which information lifecycle tasks apply to personal data and to any data in systems that are to be ceased but must be kept for legal reasons.

The challenge:

- A kiosk system hosted in the cloud by hyperscaler A. Purpose: a self-service master data entry system to create, maintain and change master data, to give insight into any contract or agreement, and to control the purpose of use of master data, such as marketing or the increase / decline of further business.

- On premise, the legacy ERP is still in use, along with a CRM and a custom non-SAP application that is scheduled to be migrated or adopted in a later phase.

- A second hyperscaler, which we have chosen to hold legacy data in a BLOB store used as archive and document store. (Just to increase complexity and to highlight some risk.)

- A subscription to a couple of BTP services such as SAP Marketing Cloud, SAP Datasphere as our DWH, and SAP Analytics Cloud for presentation. Data for these applications comes from on premise and elsewhere: from both the legacy systems and the new S/4HANA on PCE, with master data from SAP Master Data Governance. An SAP ILM PCE shall take over storage control for all active systems, and rules and deletion for all ceased systems in the near future.

- Old and new systems will run in parallel for a time. We take a greenfield approach, and the project is run agile in sprints. We do not want any business interruption and move processes to S/4HANA stepwise. The master data is cleansed, a golden record is created, and it is moved to SAP MDG.

So that is our vision. Now this is what needs to be considered in the sense of GDPR.

Analyzing the architecture from GDPR perspective

We won’t look at all topics; the important questions in this blog post are the following:

- Where will personal data be created / altered / added, and where are requests set?

- Where will personal data be used and consumed?

- Where will personal data be stored, blocked and destroyed?

We are not looking at how data will be used, electronic transfer, etc.

Recap

Data of a data subject must be destroyed immediately after end of purpose unless:

- There is a legal reason to keep the data for a given period (e.g. tax law, insurance, healthcare topics)

- There is a valid and binding contract / warranty between the data subject and the company storing the data even if there is no current active business (e.g. warranty purpose)

- Consent by data subject

This is valid for both active and decommissioned systems. It also applies when a legacy system is kept only to fulfill compliance regulations, a case where I have heard from customers: “We set it to ‘read only’; that is likely the cheapest solution.”

However, the regulation requires destruction immediately if there is no legal reason to keep the data.

We shall conclude:

- “Read only” is usually not compliant with destruction rules: some records in a ceased system may be eligible for destruction far earlier than others, and there may be data that already falls under the destruction policy.

- This means a “read only” system is non-compliant if its content is subject to GDPR or similar laws. The same holds for any archiving system that has no capability for selective destruction of data.
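
The recap above can be sketched as a per-record check. This is a minimal illustration in plain Python, not SAP ILM functionality: the `Record` fields and function names are assumptions made for this example, and a contract or warranty that is still running is modelled simply as an end of purpose that lies in the future.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Record:
    record_id: str
    end_of_purpose: date              # assumed: date the business purpose ended
    retention_until: Optional[date]   # assumed: end of legal retention, None if none applies
    consent: bool = False             # assumed: data subject agreed to further storage


def must_destroy(record: Record, today: date) -> bool:
    """Return True when no reason to keep the record remains."""
    if today < record.end_of_purpose:
        return False  # purpose (e.g. contract, warranty) still exists
    if record.consent:
        return False  # kept on the basis of consent
    if record.retention_until is not None and today <= record.retention_until:
        return False  # legal retention period still running: block, do not destroy
    return True


def overdue_records(records: List[Record], today: date) -> List[str]:
    """List the records a read-only system would keep although they must be destroyed."""
    return [r.record_id for r in records if must_destroy(r, today)]
```

Running `overdue_records` against the content of a system that was merely set to “read only” shows the problem: every record it returns should already be gone, and the list grows as retention periods expire one by one.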

Move along the Information Life Cycle

Creation and Modification of Personal Data

Kiosk System

Both the kiosk system in the DMZ and SAP S/4HANA Master Data Governance are likely to create, alter and complement information on data subjects.

SAP S/4HANA Master Data Governance

The kiosk system allows business partners to maintain their data as a self-service, decoupled from the internal processes in a safe and secure process. It might be used just to maintain B2B or B2C personal information including bank data, but there could be many applications, perhaps including web information on outstanding orders, warranty claims or marketing communication. A business partner may configure the communication options, including marketing channels.

However, this is a subset of the information maintained in SAP Master Data Governance, and the kiosk may receive some information that is NOT maintained in SAP Master Data Governance but elsewhere. An indicator “I wish to be deleted” may not be sent to SAP S/4HANA Master Data Governance (herein SAP MDG), but SAP ILM may be notified.

SAP MDG maintains the entire BP master data process in a user-friendly, workflow-oriented system, including data invisible to the business partner such as scoring information, geospatial data, records on supply chain due diligence, export restrictions, etc.

From a GDPR perspective, it is important what data is stored here and where data is sent to.

Case 1: A business partner has requested to be deleted.

Facts:

- A kiosk system has little to no knowledge of archived data and transactional data.

- SAP MDG has no information on active business processes.

- Both the kiosk and SAP MDG can set marketing rules and set blocks for future business.

- Necessary work: block users / remove role access to the business partner data.

- Stop providing data to systems that are not eligible to know this business partner anymore.

- As neither the kiosk nor SAP MDG is aware of the transactional data status, these systems make no sense as an ILM GDPR controlling instance.

- Obviously, other applications that do not handle transactional data may also be eligible to decide on an end-of-purpose status (e.g. those handling contracts).

Case 2: The business partner went out of business / ceased / contracts were invalidated, with no business for more than a reporting period.

Same as case 1, as long as lawful storage according to GDPR is applicable:

- Data simply has to be blocked in the database and write access denied.

- Depending on the business case, redirect any request from the business partner to the data protection officer.

If no legitimate reason exists, initiate a destruction process to destroy the data and fulfill the right to be forgotten.

However, neither system knows the transactional status of records, so both depend on being notified by the transactional systems.
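
The two cases can be condensed into a small decision function. This is only a sketch under assumptions: the names `Action` and `decide` and the four boolean inputs are invented for this illustration. The point it makes is that `open_transactions` and `legal_retention_active` are facts only a transactional system can supply, which is why a kiosk or SAP MDG cannot take the decision alone.

```python
from enum import Enum


class Action(Enum):
    KEEP = "keep"        # purpose still exists, nothing to do yet
    BLOCK = "block"      # deny access, keep data for the legal retention period
    DESTROY = "destroy"  # no reason left to keep the data


def decide(deletion_requested: bool,
           business_ended: bool,
           open_transactions: bool,
           legal_retention_active: bool) -> Action:
    """Decide what to do with a business partner's data (illustrative only)."""
    if open_transactions:
        # Known only to the transactional system: the purpose has not ended.
        return Action.KEEP
    if not (deletion_requested or business_ended):
        return Action.KEEP
    if legal_retention_active:
        # Case 1 and case 2 while lawful storage applies.
        return Action.BLOCK
    return Action.DESTROY
```

In practice each input would be the result of an end-of-purpose check or a retention rule rather than a plain flag, and a deletion request that meets open transactions would typically still trigger marketing blocks, which this sketch does not model.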

The very same applies to any CRM, warehouse or B2B platform. With very few exceptions, none of these “satellite” systems is aware of, or needed for, any legally required record keeping.

The exceptions: controlled substances and materials that need to be traced from production to the final consumer. Even in this case, a controlled material will always be linked to a business transaction.

Also, if there is more than one SAP S/4HANA system or application processing transactional data of business partners, the transactional data must be synchronized between them. One transactional system must be selected as the master instance of ILM, and rules development needs to be aligned. This is one of the complex cases in GDPR alignment.

So the master system controlling ILM rules, whether they serve a GDPR purpose or ILM is used for space management, is best located in the main operative SAP S/4HANA system.

For decommissioned systems, all rules are maintained centrally in the ILM Retention Warehouse, and data is destroyed according to the retention time defined for each object and system.

So usually the S/4HANA production system is defined as the “controlling” instance for all active systems. How will other systems be notified and learn what to do when ILM rules are applied?

The notification service of the ILM business function is used to communicate a set of messages between systems.

ILM notifications concern:

- Archiving Delete

- Data Destruction Run Using Archiving Write Report

- Data Destruction of Archive Files

- Destruction Run Using Data Destruction Object

- All

- Blocked

- Unblocked

When master data changes (MDG), a typical process looks like this: a business partner (customer) sets a flag “please delete me” in a kiosk system. The message is passed to, e.g., Master Data Governance, and from there the information needs to be passed to S/4HANA ILM for the end-of-purpose check. ILM then decides to notify SAP MDG / ILM and Marketing Cloud, or to send the notification via an external call (SOAP, REST, OData) to systems not using ILM, such as BTP applications or third-party applications that have a similar technology implemented. Custom applications may need to be extended to receive ILM notifications via SOAP / REST / OData.

The notification must then be interpreted and executed according to the list above.
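
How a receiving system might interpret such a notification can be sketched as follows. This is a hypothetical receiver written in plain Python, not the SAP ILM notification API: the class names, the in-memory store and the returned status strings are assumptions. Two interpretations are also assumptions of this sketch: “All” is treated as “subscribe to every notification type”, and “Archiving Delete” is treated as a marker that the source data now lives in the archive only.

```python
from enum import Enum


class IlmEvent(Enum):
    ARCHIVING_DELETE = "Archiving Delete"
    DESTRUCTION_VIA_WRITE_REPORT = "Data Destruction Run Using Archiving Write Report"
    DESTRUCTION_OF_ARCHIVE_FILES = "Data Destruction of Archive Files"
    DESTRUCTION_VIA_DESTRUCTION_OBJECT = "Destruction Run Using Data Destruction Object"
    BLOCKED = "Blocked"
    UNBLOCKED = "Unblocked"


DESTRUCTION_EVENTS = {
    IlmEvent.DESTRUCTION_VIA_WRITE_REPORT,
    IlmEvent.DESTRUCTION_OF_ARCHIVE_FILES,
    IlmEvent.DESTRUCTION_VIA_DESTRUCTION_OBJECT,
}


class SatelliteSystem:
    """Hypothetical receiver holding local copies of business partner data."""

    def __init__(self, subscribed=None):
        # None models the "All" entry: react to every notification type.
        self.subscribed = subscribed
        self.partners = {}  # partner_id -> {"blocked": bool, "archived": bool}

    def add_partner(self, partner_id):
        self.partners[partner_id] = {"blocked": False, "archived": False}

    def handle(self, event, partner_id):
        """Apply one notification locally and return a short status string."""
        if self.subscribed is not None and event not in self.subscribed:
            return "ignored"
        if partner_id not in self.partners:
            return "unknown partner"
        if event in DESTRUCTION_EVENTS:
            del self.partners[partner_id]  # remove the local copy as well
            return "destroyed"
        if event is IlmEvent.BLOCKED:
            self.partners[partner_id]["blocked"] = True
            return "blocked"
        if event is IlmEvent.UNBLOCKED:
            self.partners[partner_id]["blocked"] = False
            return "unblocked"
        # Remaining case: ARCHIVING_DELETE.
        self.partners[partner_id]["archived"] = True
        return "archived"
```

The returned status string stands in for the optional confirmation: a sender that needs to know whether the action succeeded can evaluate it, while a one-way flow can simply ignore it.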

This illustration gives an idea of the challenges that lie ahead when notifying systems:

ILM Notification Flows

The notification of changes and requests depends on the purpose of the data and in many cases does not need to be bidirectional. A confirmation of the action may not be required. If, however, something goes wrong or data is still in use, this needs to be detected and handled properly in rules or even in an application extension.

Determining and estimating the notifications may take some time, and integrating custom legacy systems into a GDPR-compliant lifecycle network may generate some effort for implementing such interfaces. So a cost / risk / benefit estimation should be carried out.

As SAP Information Lifecycle Management (abbreviated as SAP ILM) is a business function in SAP NetWeaver and not an archive solution, it must be complemented by a storage or archive solution providing sufficient space to store all archived data.

SAP ILM Private Cloud Edition provides two major capabilities:

- For active systems, e.g. SAP S/4HANA PCE or legacy SAP ECC on premise, it is a space manager controlling connected storage such as SAP IQ, Azure Blob, etc.

- For ceased systems, it performs access control, reporting on the archived data of all systems, and execution of destruction rules.

The active systems use their own ILM business functions to determine when data needs to be blocked or destroyed.

Parameters required to right size a SAP ILM PCE solution

On premise, the licensing metric is simple: just the number of managed systems (active or to be decommissioned) counts towards the license. The effort for use, operations, hardware, storage, test and QA is not counted as a metric, and the SAP customer must deal with all risks and efforts.

SAP ILM PCE is a service, so the minimum count here is three connections. The connections may be used as the customer requires, e.g. active system, test, development; or active system, archived legacy system, test & development system.

The storage service is not part of the SAP ILM PCE license but needs to be estimated separately. A typical packaged solution recommends SAP IQ as storage, directly managed by the SAP ILM Store business function.

Alternatives could be storage by OpenText (via WebDAV), Azure Blob or other supported solutions on demand. However, in this case it needs to be considered that such a store is not controlled or managed by SAP.

So estimating the cost of SAP ILM PCE requires:

- The number of active systems and systems to be ceased that are connected to SAP ILM PCE, plus at least one connection for test / development.

- The cumulative size of archived data, in TB, of all managed systems.
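
The two sizing parameters can be derived with a few lines of arithmetic. The sketch below is an assumption-laden helper, not an SAP sizing tool: the function name and its parameters are invented here, the minimum of three connections is taken from the text above, and no prices are modelled because they depend on the individual contract.

```python
from typing import Dict


def ilm_pce_sizing(active_systems: int,
                   ceased_systems: int,
                   archive_tb_by_system: Dict[str, float],
                   test_dev_connections: int = 1,
                   minimum_connections: int = 3) -> Dict[str, float]:
    """Return the two inputs needed for an SAP ILM PCE cost estimate."""
    requested = active_systems + ceased_systems + test_dev_connections
    return {
        # The service is counted with at least `minimum_connections` connections.
        "connections": max(minimum_connections, requested),
        # Cumulative archive size across all managed systems, in TB.
        "archive_tb": sum(archive_tb_by_system.values()),
    }
```

For example, `ilm_pce_sizing(1, 2, {"S4": 1.5, "ECC": 4.0, "CRM": 0.5})` describes one active system, two legacy systems and one test / development connection, with the archive volume summed over all three systems.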

It is usually not necessary to continuously maintain an additional T&D system for each system to be ceased, as frequent modifications to ILM rules on a decommissioned system are rather unlikely. It might be necessary to connect more than one archive storage to SAP ILM PCE to fulfill compliance: some countries may require personal data of citizens or fiscal data to be stored in the respective country. This means that in some cases data archives may need to be duplicated.

A small benefit that is often not considered: when operating the archives in a landscape consolidation process, e.g. to “one S/4HANA”, data that is no longer needed is destroyed automatically, and once a system is past its legacy period the connection cost disappears. So the amount of archived data and the number of connections should shrink over time to only the consolidated data of the active environment.

Connecting SAP ILM PCE

ILM PCE Packaged

ILM packaged architecture

The packaged solution is the simplest architecture in an SAP ILM PCE setup and move-to-cloud process. Both the ILM service and the storage are managed by SAP ECS. Any active SAP S/4HANA system in PCE, any legacy system still active on premise and any ceased system are managed in one place. The connection is established using an IPsec tunnel or equivalent, depending on the hyperscaler hosting the PCE environment. So there is one single partner, SAP ECS, maintaining all operations. The last remaining system in customer responsibility is the legacy ECC until it is decommissioned. This is the simplest setup, with just one connection.

ILM PCE with archive storage in customer responsibility

The same configuration as above, but the ILM storage is hosted elsewhere. There are plenty of reasons to choose this configuration: some customers already use a standard WebDAV-compliant archive such as OpenText for GDPR compliance, or have other reasons to choose another solution. In this case, the connection between the SAP ILM PCE server and the storage is tunneled back to on premise and routed to a supported storage solution. Obviously there is some added complexity in the setup of tunnels, routing and responsibility for actions. Bandwidth and latency also need to be considered in such a setup.

ILM PCE with VPN Peering

ILM PCE and VPN Peering

This setup can be chosen if a customer already uses some services at a hyperscaler (e.g. Microsoft Azure services) and all SAP BTP services and SAP ECS-provided services are based on the very same hyperscaler and location. In this case, peering can be used to connect to storage provided by the hyperscaler. This has lower complexity but requires a single-hyperscaler strategy.

In this “brain dump”, some remarks on migration:

Archiving is not migration. Both topics are often aligned in a “move to cloud” scenario but should not be mixed up. The following rules make the move simpler and easier:

- Archive early to minimize the amount of data to be migrated.

- Clean up records left in an incomplete status for whatever reason (“dead bodies”).

- Decide early what can be archived and what needs to be migrated.

- Destroy / delete what is not required.

- Ensure data quality: archived data cannot be modified.

- Existing ADK (Archive Development Kit) files need to be converted into ILM-aware archive files.

- Greenfield needs master and reference data too (some migration cannot be avoided).

- Plan for a consistent information lifecycle: what to keep resident for how long, when to archive and when to destroy. Implementing archiving objects for an active system always takes more time than archiving and decommissioning a legacy system.

- A quality analysis of the systems to be migrated helps estimate the effort needed and identifies possible issues.

Now, having successfully migrated to the cloud, what to do with the legacy systems?

One question that always arises in any discussion: when is it time to shut down legacy systems?

In a greenfield approach:

- Not as long as there are still open books, financial accounts and balance statements need to be created, or transactions opened before the transition started still need to be closed.

- Dead business needs to be resolved before archiving.

In a brownfield approach:

- If all data was migrated (archived data, resident data, active transactions and all master data), decommissioning can start quite early, as there should be no need to maintain a legacy system once the migration is completed and the data verified.

Rule of thumb: once the number of access requests has dropped significantly, it’s time to decommission the system.

In the majority of cases, customers take a greenfield approach. Only the required master data is migrated from legacy systems, and all operational / transactional data starts as new.

The process of decommissioning a legacy system requires a well-predictable amount of time, usually less than three months.

Using SAP Information Lifecycle Management Retention Warehouse:

- All legacy systems are moved into one single Retention Warehouse.

- All data is replicated from the source to the Retention Warehouse.

- Attachment documents (PDF, Scan, Images, ..) that are directly stored with data objects will also be replicated as archive objects.

- If attachments are stored in a document management system, it needs to be decided whether the attachments shall be moved into the Retention Warehouse or stay where they are, with links only. If the document management system needs to be replaced, it must be resolved how the links to documents remain valid and read only. If documents need to be destroyed, notifications must be sent to and executed by the document management system. If the same document is used elsewhere too, or earlier destruction requests occur, this must be resolved and may add complexity.

- A data quality check should be performed before decommissioning.

- Archiving means data will be accessed (occasionally). Once a system is decommissioned, access to the original transactions and navigation along the chain of records become impossible. Instead, reports provide access to the data, and reports must be created on demand. The SAP Data Management and Landscape Transformation team offers services and a reporting framework that provides content similar to the transactions but, of course, read only.

- GDPR-relevant data in an ILM store is destroyed according to rules based on the applicable legal restrictions. The control of decommissioned systems is independent of active systems. A business partner’s request for destruction is not applicable while legal requirements demand keeping the data. However, a business partner’s request for information, “What data have you stored about me, and for what reason?”, must be answered, and such a report must also be created on an archive system.
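
The information request in the last point can be pictured as a simple query over archived records. This is a sketch in plain Python with an invented `ArchivedRecord` structure; it does not reflect the reporting framework mentioned above, and the field names are assumptions chosen to answer the two questions “what is stored” and “for what reason”.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass(frozen=True)
class ArchivedRecord:
    system: str            # assumed: decommissioned source system
    object_type: str       # assumed: e.g. invoice, contract
    partner_id: str        # assumed: business partner the record refers to
    retention_reason: str  # assumed: legal basis for keeping the record
    retained_until: date   # assumed: end of the retention period


def access_report(records: List[ArchivedRecord], partner_id: str) -> List[Dict[str, str]]:
    """List what is stored about one business partner, and why."""
    hits = [r for r in records if r.partner_id == partner_id]
    hits.sort(key=lambda r: (r.system, r.object_type))
    return [
        {
            "system": r.system,
            "object": r.object_type,
            "reason": r.retention_reason,
            "retained_until": r.retained_until.isoformat(),
        }
        for r in hits
    ]
```

An empty result is a valid answer as well: it states that nothing about this business partner is held in the archive anymore.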

As we have learned, there is always some GDPR review, no matter whether on premise or in the cloud.

- The best place to control GDPR data in an operative system is ILM in the active ERP, because it knows most about the status of active transactions and serves transactional information to satellites such as analytical consumers. A notification only needs to be exchanged if external data processors or further SAP or non-SAP transactional processing are involved.

- The Data Controller Rule Framework (DCRF) can be adjusted to align and reuse rules.

- Personal data in analytical databases should be avoided or anonymized whenever possible.

- SAP MDG and kiosk systems are usually the last systems to be notified of blocking and destruction.

- The SAP ILM PCE service controls the data lifecycle only for decommissioned systems; for active systems it acts as a storage management service.

- Keeping legacy systems alive in a read-only mode may not be compliant, as this violates destruction rules once any legal reason to keep the data has ended.

- Using an archive system that does not permit selective destruction of records on expiry may not be compliant with the regulation.

At last, even if GDPR is a never-ending story, this blog post is now finished. I hope I covered most topics related to GDPR, archiving and system decommissioning that are usually discussed in customer meetings. Still, each business is different and there is no “one method fits all”, but all topics in this blog post will be subject of a transformation process.

Thank you for reading. Comments, criticism, suggestions and questions are welcome.
