2023-12-14 05:52:22 Author: blogs.sap.com

Why is it that people don’t trust Artificial Intelligence (AI)? The results of a recent survey put the situation in a nutshell: (US-American) people trust AI to pick their films and music, but not much else. In contrast, they have no problems with old-fashioned automated systems because their understanding is that these simply do what they’re told. The question therefore is: how can we make AI systems more understandable and trustworthy?

Recently, I’ve had the privilege to be a temporary member of SAP’s AI Ethics team, led by SAP’s head of AI ethics, Sebastian Wieczorek. Being a psychologist and human-centricity practitioner, one topic specifically caught my attention: the aspect of AI transparency towards the individuals working with or affected by AI systems. This led me to the following questions:

- How does the user know where a decision based on / made by AI comes from?

- How can it be ensured that they understand how this output can be interpreted?

- And, first of all: Why would they actually care?

Summarizing my learnings on the topic, I’m going to outline five steps that will help you create appropriate and meaningful AI understandability.

1. Distinguish between explainability and interpretability

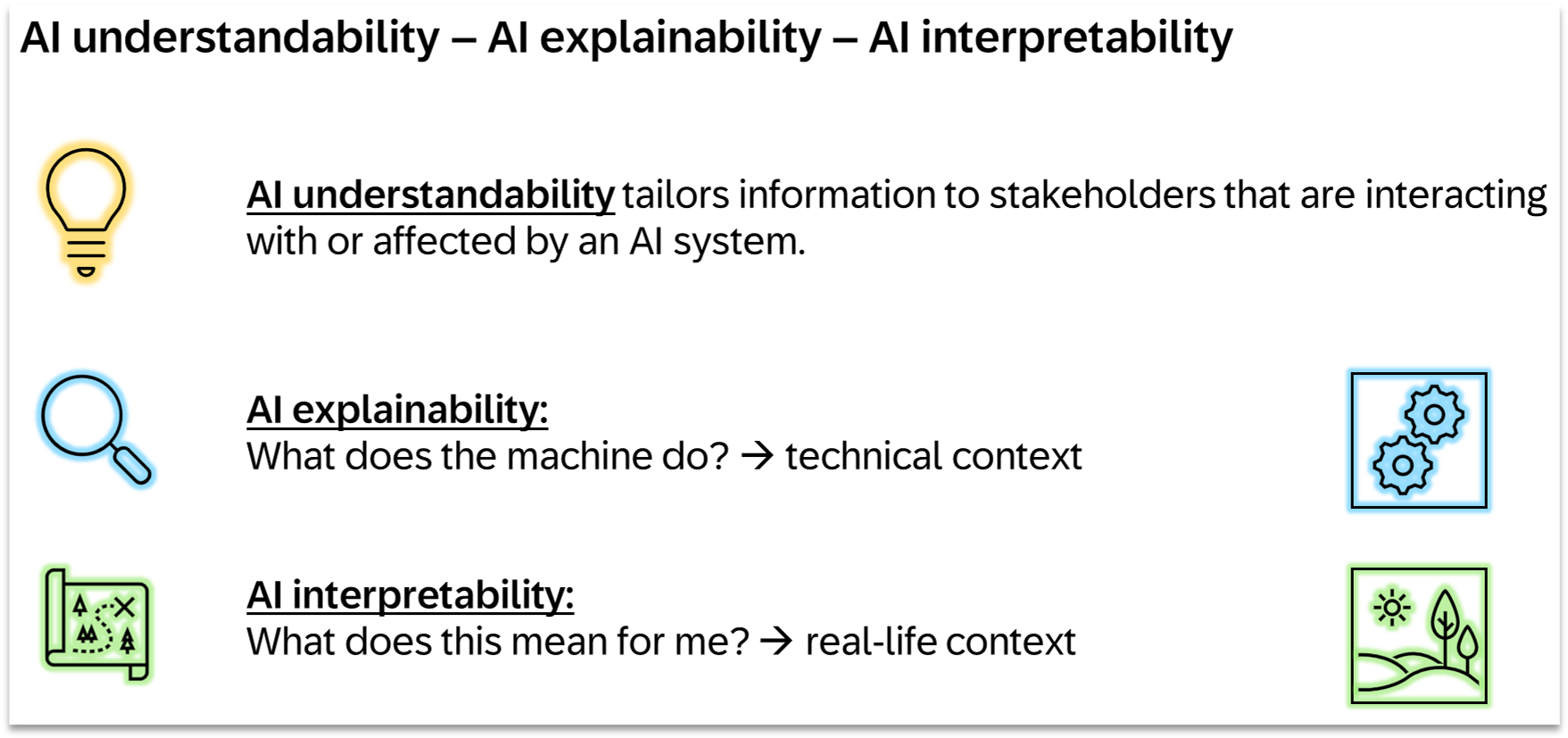

Explainability and interpretability are important principles for building trustworthy AI (see https://oecd.ai/en/ai-principles for example). The differentiation between these two concepts is not consistent across publications. The most useful definitions I have found so far (Broniatowski, 2021) provide a helpful perspective for human-centered product definition and design of AI systems:

Explainability

AI explainability provides an answer to the question “What did the machine do to reach the output?”

It refers to the technological context of the AI, its mechanics, rules and algorithms, to the training data etc.

It is of interest to users who need to understand whether an AI is working properly, for example with regard to non-discrimination.

Interpretability

AI interpretability addresses the question: “What does the output mean for me and the problem I need a solution for?”

It refers to the respective domain / ‘real-life’ context of the AI application, for example, in case of a decision about a loan application.

Understandability

I will use “understandable / understandability” as an umbrella term whenever both explainability and interpretability, as defined above, are meant.

Infographic 1: Definitions of AI understandability, AI explainability, and AI interpretability.

2. Clarify potential audiences and their cognitive model and context

Let me now introduce four personas to illustrate how needs regarding explainability / interpretability differ depending on task and role: the AI developer, the AI ethicist, the AI end-user, and the affected user. Expectations towards understandability are typically different for these roles. (For other taxonomies, see for example Zytek et al., 2022.) The level of professional expertise (technology and / or domain) of the understandability audience is an additional aspect worth looking into.

Infographic 2: Different personas and their needs towards explainability and interpretability.

3. Determine regulatory and ethical requirements for AI understandability

In addition to the personas and their specifics, we also have the respective use cases with their characteristics and risks. After all, it makes a difference whether we are talking about music suggestions or hiring processes.

High-stakes / high-risk use case

Use cases from areas such as human resources, law enforcement, or the operation of critical infrastructure are strong candidates for a high-risk rating. Usually, you will find the respective definition in the ethical, regulatory and / or organizational frameworks that are relevant for you.

Implementing understandability features can address related requirements towards transparency, for example in the draft EU AI Act:

- Provide explainability to AI technology experts and AI ethicists for “human oversight” (draft EU AI Act Article 14, §4 (a)):

“The measures […] shall enable the individuals to whom human oversight is assigned to do the following […]: fully understand the capacities and limitations of the high-risk AI system and be able to duly monitor its operation, so that signs of anomalies, dysfunctions and unexpected performance can be detected and addressed as soon as possible.”

- Provide interpretability for AI end-users and affected users for “Transparency and provision of information to users” (draft EU AI Act Article 13, §1):

“High-risk AI systems shall be designed and developed in such a way to ensure that their operation is sufficiently transparent to enable users to interpret the system’s output and use it appropriately. […]”

Infographic 3: Personas and their needs as regards high-risk use cases.

Low risk / impact use case

However, not every AI system needs to provide transparency in the sense of explainability or interpretability, for example, if the impact on financial, social, legal or other critical aspects is low or if the specific technology is very mature and regarded as safe and secure.

4. Combine the technical capabilities with design methodology

For implementing understandability that is suitable, appropriate, and usable for the respective roles and use cases, sometimes the output of technical tools can already be sufficient. For other contexts, however, that may not be the case.

Combining technical tools and a human-centered practice is a good approach to ensure relevance and usability of implemented understandability.

Leveraging (XAI) technology

Depending on the respective use case, explainable AI (XAI) algorithms like SHAP or LIME can contribute significantly to the creation of AI understandability, for example, by providing:

- information about data limitations and generalizability

- multi-level global explanations

- interactive counterfactual explanations

- personalized and adaptive understandability

- post-hoc explanations.
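To make the idea of a post-hoc explanation more concrete, here is a minimal sketch of the Shapley-value attribution that SHAP builds on. It does not use the SHAP library's API; it computes exact Shapley values by enumerating all feature coalitions, which is only feasible for a handful of features. The scoring model `loan_score`, the feature names, and the baseline values are hypothetical and chosen purely for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values by enumerating every coalition of features.

    Features inside a coalition take the instance's value; all others
    take the baseline's value. The cost grows exponentially with the
    number of features, so this is a teaching sketch only.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        x = {f: (instance[f] if f in coalition else baseline[f]) for f in names}
        return predict(x)

    phi = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for k in range(n):
            for subset in combinations(others, k):
                # Shapley weight for a coalition of size k.
                weight = factorial(k) * factorial(n - k - 1) / factorial(n)
                members = set(subset)
                total += weight * (value(members | {f}) - value(members))
        phi[f] = total
    return phi


def loan_score(x):
    """Hypothetical scoring model with one interaction term (assumption)."""
    score = 0.4 * x["income"] + 0.3 * x["credit_history"] - 0.5 * x["debt"]
    if x["income"] > 0.5 and x["debt"] < 0.5:
        score += 0.1
    return score


# Hypothetical applicant and reference point, both on a 0..1 scale.
applicant = {"income": 0.8, "credit_history": 0.6, "debt": 0.2}
baseline = {"income": 0.5, "credit_history": 0.5, "debt": 0.5}

phi = shapley_values(loan_score, applicant, baseline)
for feature, contribution in phi.items():
    print(f"{feature}: {contribution:+.3f}")
```

The per-feature contributions add up to the difference between the applicant's score and the baseline score. That property is what makes this kind of output a reasonable raw material for explainability features, although on its own it still says little about what the result means for the person affected.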

Applying design practices

Design practices can enhance AI understandability implementation in a variety of ways, for example by:

- Contextualizing the system’s output for the task and problem to be solved.

- Adapting the XAI output to the users’ information needs, for example, the level of detail.

- Ensuring usability of interactive explanation and interpretation features.
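As a small illustration of adapting XAI output to different information needs, the following sketch turns a set of feature attributions into a sentence at two levels of detail. The function name `explain`, the attribution values, and the wording templates are all hypothetical; real wording would come out of design and user research rather than a string template.

```python
def explain(attributions, detail="brief", top_k=2):
    """Render feature attributions as plain language.

    detail="brief": only the top_k strongest factors, without numbers.
    detail="full":  every non-zero factor, with its magnitude.
    """
    # Strongest influence first; factors with no influence are dropped.
    ranked = sorted(
        ((name, value) for name, value in attributions.items() if value != 0),
        key=lambda item: abs(item[1]),
        reverse=True,
    )
    if detail == "brief":
        ranked = ranked[:top_k]

    parts = []
    for name, value in ranked:
        label = name.replace("_", " ")
        direction = "raised" if value > 0 else "lowered"
        if detail == "full":
            parts.append(f"{label} {direction} the score by {abs(value):.2f}")
        else:
            parts.append(f"{label} {direction} the score")
    return "; ".join(parts) + "."


# Hypothetical attributions for a single loan decision.
attributions = {"income": 0.17, "credit_history": 0.03, "existing_debt": -0.20}

print(explain(attributions))                 # short version, e.g. for an affected user
print(explain(attributions, detail="full"))  # detailed version, e.g. for an AI ethicist
```

The same underlying data serves both audiences; only the selection and presentation change. Which level of detail suits which persona is a design decision that should be validated with the people concerned.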

There are several resources available on how to utilize (and if necessary: adapt) design methodology to AI and specifically to the creation of explainable AI (see for example Liao et al., 2021).

5. Evaluate AI understandability

Whichever combination of methods you choose for creating AI understandability features, the result needs to be as efficient, correct and useful as possible. To ensure the highest quality and relevance, consider technical criteria as applicable, including:

- Consistency

- Stability

- Compacity
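The article does not define these criteria, so the following sketch rests on one common reading of them, which is an assumption: compacity as the number of features needed to cover most of an explanation, and stability (or consistency) as a distance between two explanations, either for neighbouring inputs or from two different explainers. The function names, the 90% coverage threshold, and the attribution values are hypothetical.

```python
def compacity(attribution, coverage=0.9):
    """Smallest number of features whose absolute attributions
    cover at least `coverage` of the total absolute attribution."""
    magnitudes = sorted((abs(v) for v in attribution.values()), reverse=True)
    total = sum(magnitudes)
    if total == 0:
        return 0
    running = 0.0
    for count, magnitude in enumerate(magnitudes, start=1):
        running += magnitude
        if running >= coverage * total:
            return count
    return len(magnitudes)


def mean_abs_difference(a, b):
    """Average absolute gap between two attributions over the same features.

    Applied to two similar inputs it serves as a stability measure;
    applied to two explainers on the same input, as a consistency measure.
    Lower values mean the explanations agree more closely.
    """
    return sum(abs(a[name] - b[name]) for name in a) / len(a)


# Hypothetical attributions for an applicant and a near-identical neighbour.
applicant = {"income": 0.17, "credit_history": 0.03, "debt": 0.20}
neighbour = {"income": 0.15, "credit_history": 0.03, "debt": 0.22}

print(compacity(applicant))
print(mean_abs_difference(applicant, neighbour))
```

A low compacity value suggests that a short explanation is enough, which matters for end-users with limited time. A small difference between neighbouring explanations suggests that users will not see explanations flip for near-identical cases.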

And of course human-centered criteria, for example:

- Subjective assessment of pragmatic or hedonic qualities (e.g. using the User Experience Questionnaire, UEQ)

- Task performance / effectiveness / efficiency

Infographic 4: Human-centered and technical criteria for AI understandability.

Summary

Looking at understandability of AI systems from different angles – the technical perspective and a human-centered point of view – holds great potential for meaningful transparency to individuals working with or affected by AI systems. This approach helps to create the appropriate trust and best performance in human–AI collaboration.

References / further reading:

- EU Artificial Intelligence Act (Proposal).

- Broniatowski, D. A. (2021): Psychology of Explainability and Interpretability.

- Laugwitz, Schrepp, Held (2008): User Experience Questionnaire.

- Liao, Q. V. et al. (2021): Questioning the AI: Informing Design Practices for Explainable AI User Experiences.

- Molnar, C. (2023): Interpretable Machine Learning: A Guide for Making Black Box Models Explainable.

- Rosenfeld, A. (2021): Better Metrics for Evaluating Explainable Artificial Intelligence.

- Zytek, A., et al. (2022): The Need for Interpretable Features: Motivation and Taxonomy.
