Published: 2023-12-04 20:46:26 | Source: blogs.sap.com

Today I would like to introduce a highly anticipated feature that we have developed specifically for customers who have high data volumes and prefer to work in the familiar ABAP / BW world.

Cold Storage enablement for SAP BW bridge in SAP Datasphere is available now.

Our cold store solution uses the embedded SAP HANA Cloud, Data Lake relational and helps SAP BW bridge customers classify the data stored in a DataStore object (advanced) as warm or cold, depending on the cost and performance requirements for that data.

Depending on this classification and how the data is used, the data is stored in different storage areas.

The following options are available:

- Standard Tier – Extension Tier (WARM): The data is stored in an SAP HANA extension node in SAP BW bridge.

- External Tier (COLD): The data is stored externally in a separate database (the embedded SAP HANA Cloud, Data Lake relational).

- Reporting Tier (HOT): SAP HANA Cloud in SAP Datasphere

How does it work?

In SAP BW bridge, all data is stored in the SAP HANA native storage extension (NSE), which is our warm storage area. SAP BW bridge is not enabled for reporting; it acts as a cheaper staging option for legacy-related sources such as SAP ECC or SAP S/4HANA. If you also license the embedded SAP HANA Cloud, Data Lake relational under SAP Datasphere as the cold store for SAP BW bridge, you can move your data out into the cold store in the same way as with Data Tiering Optimization (DTO) in SAP BW/4HANA, where SAP IQ serves as the cold store. SAP Datasphere acts as your hot storage area and serves as the reporting layer for SAP Analytics Cloud.

Procedure to move data into the embedded SAP HANA Cloud, Data Lake relational: there are two options available, manual and automated.

The cold store option must be selected in the data tiering properties of the respective DataStore object (advanced) (aDSO).

Create partitions based on a field and define the partition granularity of the aDSO. This can be done in the settings of the object.

Go to the Data Tiering Management Setting in the SAP BW bridge Cockpit and select your aDSO.

Select the partition or year that you want to move to SAP HANA Cloud, Data Lake relational.

Change the temperature to cold, then execute the temperature change in the previous screen.

How to move data into the cold store automatically:

Create a rule for the selected aDSO.

For example, move data that is older than 5 years to the cold store, and set the “active” flag.

Execute the temperature change for the schemas in the previous screen.

With the job scheduler, you can decide whether to start the job immediately or schedule it for later.

Once the job has finished successfully, the status changes to OK.

Afterwards, you can check on the overview page whether all partitions are fine.

Your data has now been moved from SAP BW bridge to SAP HANA Cloud, Data Lake relational.
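To make the automated rule more tangible, here is a minimal Python sketch of the decision it describes: yearly partitions older than a threshold are marked cold, everything else stays warm. This is not SAP code and not an SAP API. The function name, the yearly granularity, and the exact cutoff semantics are assumptions for illustration only; the real rule is maintained in the SAP BW bridge Cockpit.

```python
from datetime import date

def classify_partitions(partition_years, today, max_age_years=5):
    """Map each yearly partition to 'COLD' or 'WARM' (illustrative only).

    Assumption: a partition counts as "older than max_age_years" when its
    year lies before (current year - max_age_years).
    """
    cutoff_year = today.year - max_age_years
    return {
        year: "COLD" if year < cutoff_year else "WARM"
        for year in partition_years
    }

# Hypothetical aDSO partitioned by calendar year 2015..2023
plan = classify_partitions(range(2015, 2024), today=date(2023, 12, 4))
for year, temperature in plan.items():
    print(year, temperature)
```

With the reference date above, the partitions 2015 to 2017 are marked cold and 2018 to 2023 stay warm.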

How to handle temperatures in SAP Datasphere:

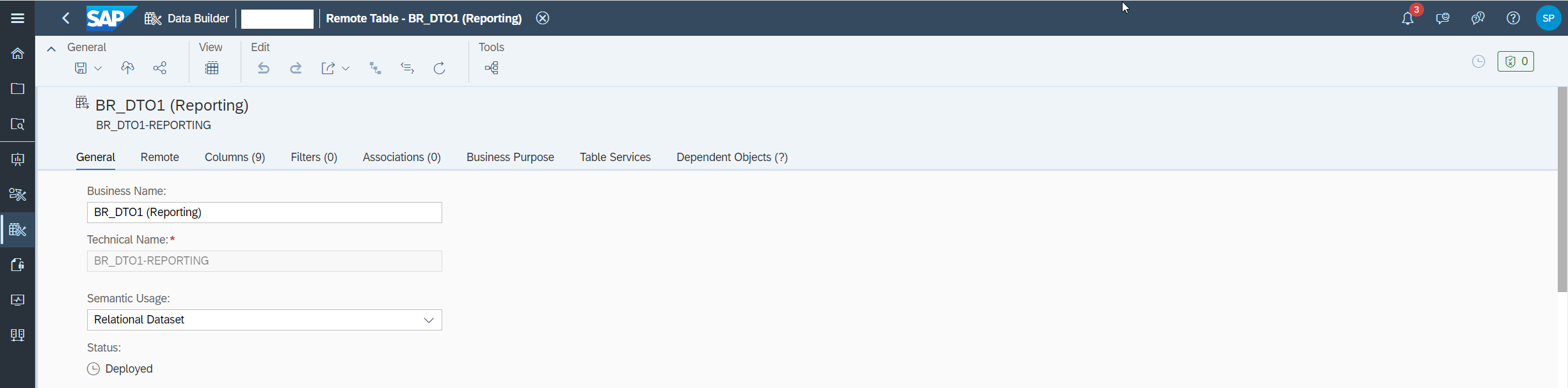

To use the cold store for reporting purposes, you have to import the respective remote tables of the aDSO from both SAP HANA Cloud, Data Lake relational and SAP BW bridge.

Remote Table: SAP BW bridge

Remote Table: SAP HANA Cloud, Data Lake relational

In the last step, you have to create a union between your regular SAP BW bridge remote table and the SAP HANA Cloud, Data Lake relational remote table.
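The idea behind the union can be sketched outside of SAP Datasphere with Python's built-in `sqlite3` module. The two tables stand in for the two remote tables, and a view combines them. All table, view, and column names are hypothetical, and the added `temperature` column is only there to show where each row comes from. In SAP Datasphere you would model the union in a view on top of the imported remote tables instead.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Stand-in for the SAP BW bridge remote table (warm partitions)
    CREATE TABLE rt_bw_bridge_sales (calyear INTEGER, customer TEXT, amount REAL);
    -- Stand-in for the data lake remote table (cold partitions)
    CREATE TABLE rt_data_lake_sales (calyear INTEGER, customer TEXT, amount REAL);

    INSERT INTO rt_bw_bridge_sales VALUES (2022, 'C1', 100.0), (2023, 'C1', 150.0);
    INSERT INTO rt_data_lake_sales VALUES (2016, 'C1', 80.0), (2017, 'C2', 60.0);

    -- Assumption: each partition lives in exactly one store, so UNION ALL
    -- does not produce duplicates.
    CREATE VIEW v_sales_all AS
        SELECT calyear, customer, amount, 'WARM' AS temperature
        FROM rt_bw_bridge_sales
        UNION ALL
        SELECT calyear, customer, amount, 'COLD' AS temperature
        FROM rt_data_lake_sales;
""")

rows = conn.execute(
    "SELECT calyear, customer, amount, temperature "
    "FROM v_sales_all ORDER BY calyear"
).fetchall()
for row in rows:
    print(row)
```

A query on the view returns warm and cold rows together, so a reporting tool sees one data set regardless of where a partition is stored.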

As you know, our recommendation is clearly to replicate data from SAP BW bridge to SAP Datasphere. More information about the Layers shift approach can be found under the following link.

For data in the cold store, you have to decide case by case which data is really relevant for reporting and whether replicating some partitions makes sense.

In practice, it often turns out that data sets older than 5 years are reported on only very rarely, so you can access this data on request.

How to get the cold store enabled for SAP BW bridge?

Currently, enablement is requested via a ticket. Please refer to SAP Note 3401908 – Cold Store enablement for SAP BW bridge for further information on the procedure.

In the future, we of course plan to automate this process.

Prerequisites

The customer must provision the SAP HANA Cloud data lake in advance. This can be done in the flexible tenant configuration.

What about sizing?

The size of your cold store heavily depends on the usage pattern of the data. There are, however, certain guidelines and restrictions that need to be considered. All data that is written to or read from the cold store goes through the SAP HANA Cloud instance of the SAP BW bridge tenant. This means the SAP HANA Cloud instance must be sufficiently sized to handle all parts of the cold data that are processed in parallel. Please keep in mind that not all query operations can be pushed down to the cold store; in certain cases SAP HANA Cloud has to read more data (or data at a finer granularity) in order to perform the requested computation. It is also important to consider that changes to the data in the cold store (e.g. updates) are not performed in the cold store itself: the data has to be reloaded into the warm partition in SAP HANA Cloud, updated there, and then moved back to the cold store.

With this in mind, a rough guideline is a warm:cold ratio of at most 1:3 if the data in the cold store is frequently accessed (with the performance penalty) and may be updated during its lifetime. Data that is neither changed nor accessed for reporting, such as historic data stored for legal reasons only, can have a warm:cold ratio of up to 1:10. This is, however, the maximum that is supported, and any such sizing should be done with great care and only after thorough consideration of the usage.
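As a quick worked example of these ratios, the following Python sketch derives the maximum cold store size from a given warm storage size. The function names and the 512 GB figure are made up for illustration; only the factors 3 and 10 come from the guideline above, and a real sizing still needs the careful usage analysis described there.

```python
# Guideline ratios from the text (warm:cold = 1:N); the names are illustrative.
MAX_COLD_FACTOR_ACTIVE = 3    # cold data is frequently accessed or may be updated
MAX_COLD_FACTOR_ARCHIVE = 10  # cold data is neither changed nor reported on

def max_cold_size(warm_size, frequently_accessed):
    """Upper bound for the cold store size, in the same unit as warm_size."""
    factor = MAX_COLD_FACTOR_ACTIVE if frequently_accessed else MAX_COLD_FACTOR_ARCHIVE
    return warm_size * factor

def within_guideline(warm_size, cold_size, frequently_accessed):
    """True if the planned cold store size stays within the rough guideline."""
    return cold_size <= max_cold_size(warm_size, frequently_accessed)

warm_gb = 512  # hypothetical warm storage size in GB
print(max_cold_size(warm_gb, frequently_accessed=True))           # 1536
print(max_cold_size(warm_gb, frequently_accessed=False))          # 5120
print(within_guideline(warm_gb, 2000, frequently_accessed=True))  # False
```

In this example, 2000 GB of frequently accessed cold data would exceed the 1:3 guideline for 512 GB of warm storage, while the same volume of pure archive data would stay well within 1:10.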

Conclusion:

As described above, data can easily be moved out to SAP HANA Cloud, Data Lake relational as a cheaper storage medium, especially with SAP BW bridge. We have deliberately based this functionality very closely on SAP BW/4HANA in order to keep the user experience of both worlds in sync.

Please let me know if you have any detailed questions!

Regards,

Dominik
