2023-12-1 13:54:49 Author: blogs.sap.com

Unveiling a New Era in AI with RAG

Retrieval-Augmented Generation (RAG) changes how AI systems use data, much as GPS changed navigation. It pairs a pre-trained language model with a data retrieval mechanism, so the model can draw on precise, context-relevant information from extensive external sources when it answers. This is particularly important in enterprise environments, where accuracy and detailed context are key.

The Impact of RAG, or In-Context Learning, in Business AI

RAG significantly expands what Large Language Models (LLMs) can do in enterprise settings. LLMs excel at generating text, but on their own they cannot pull in specific, detailed data from company databases. RAG addresses this by retrieving the necessary information and supplying it to the model, so that AI-generated responses are grounded in relevant company data.
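To make this concrete, here is a minimal sketch of how retrieved company data might be placed into a prompt before it reaches the model. The `POLICY_RECORDS` data, the `retrieve` keyword matcher, and the `build_prompt` helper are all hypothetical names chosen for illustration; a real system would more likely use semantic search than simple word matching.

```python
import re

# Hypothetical company records; in practice these would come from a database.
POLICY_RECORDS = {
    "vacation": "Employees accrue 2 vacation days per month.",
    "expenses": "Expense reports are due within 30 days of purchase.",
    "remote": "Remote work requires manager approval.",
}


def retrieve(question: str, records: dict[str, str]) -> list[str]:
    """Return records whose topic key appears as a word in the question."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    return [text for topic, text in records.items() if topic in words]


def build_prompt(question: str, records: dict[str, str]) -> str:
    """Prepend retrieved records to the question so the model can ground its answer."""
    context = retrieve(question, records)
    context_block = "\n".join(f"- {c}" for c in context) or "- (no matching records)"
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}"
    )


prompt = build_prompt("How many vacation days do I get?", POLICY_RECORDS)
print(prompt)
```

The model never queries the database itself in this sketch. The application looks up the relevant record and hands it over as part of the prompt, which is the basic division of labor in RAG.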

Real-World Applications of RAG

Consider the possibilities: a chatbot that consistently provides correct answers about your company’s policies, a document summarization tool that captures essential information accurately, or a dependable question-answering system. RAG not only makes AI systems more reliable but also enhances their intelligence by ensuring accurate, context-specific responses.

RAG Workflow: Comprehensive Data Handling

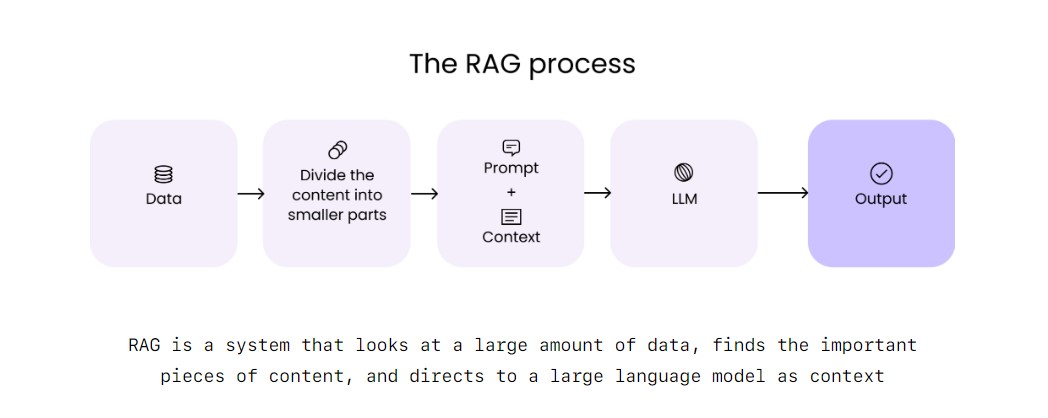

The RAG process encompasses several critical steps to produce relevant and high-quality AI responses:

- Data Preparation (Information Preprocessing): Similar to library cataloging, this step involves collecting and organizing data for easy accessibility. https://writer.com/blog/retrieval-augmented-generation-rag/

- Data Integration (Context Integration): Organized data is stored in a vector database, akin to arranging books in a library, setting the foundation for efficient AI text generation.

- AI-Driven Text Creation (Text Generation): Utilizing the contextual data, language models generate accurate and relevant textual responses.

- Refining Outputs (Postprocessing and Output): The AI-generated text is further refined to align with specific standards and requirements.

- Interface and Application Integration: The final step involves delivering RAG outputs through user interfaces or integrating them into larger applications like chatbots, providing users with precise and useful information.
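The five steps above can be sketched end to end in a few dozen lines of Python. This is a toy illustration under stated assumptions, not a production design: `embed` uses word counts in place of a real embedding model, `InMemoryVectorStore` stands in for a vector database, and `generate` is a placeholder for a call to a language model. All function and class names here are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database."""

    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def add(self, chunk: str) -> None:
        self.items.append((embed(chunk), chunk))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [chunk for _, chunk in ranked[:k]]


def prepare(documents: list[str]) -> list[str]:
    """Step 1 (data preparation): split documents into sentence-sized chunks."""
    chunks = []
    for doc in documents:
        chunks.extend(s.strip() for s in re.split(r"(?<=[.!?])\s+", doc) if s.strip())
    return chunks


def generate(prompt: str) -> str:
    """Step 3 placeholder: a real system would call a language model here."""
    context_line = prompt.split("Context: ", 1)[1].split("\n", 1)[0]
    return f"  based on our records: {context_line}  "


def postprocess(text: str) -> str:
    """Step 4 (refining outputs): trim whitespace and capitalize the first letter."""
    text = text.strip()
    return text[:1].upper() + text[1:]


def answer(question: str, store: InMemoryVectorStore) -> str:
    """Step 5 (application integration): the entry point a chatbot would call."""
    context = " ".join(store.search(question, k=1))
    prompt = f"Context: {context}\nQuestion: {question}"
    return postprocess(generate(prompt))


docs = ["Refunds are issued within 14 days. Shipping is free for orders over 50 euros."]
store = InMemoryVectorStore()
for chunk in prepare(docs):  # Step 2 (data integration): index the prepared chunks
    store.add(chunk)

print(answer("How long do refunds take?", store))
```

Swapping `embed` for a real embedding model and `InMemoryVectorStore` for an actual vector database would leave the overall shape of `answer` unchanged, which is why the workflow is usually described as a sequence of separable steps.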

Concluding Thoughts: RAG’s Transformative Role in Business AI

RAG is transforming business AI, providing solutions that are not only more precise and smart but also tailored to specific needs. This advancement broadens the scope of conventional AI, significantly enhancing its utility for various business applications, including improving customer interactions and optimizing internal data processing. In our next blog, we’ll delve into how tools like LangChain and VectorDB facilitate context integration in these advanced AI systems.
