2023-11-15 14:52:06 Source: blogs.sap.com

Hey folks! 🙋♂️👋

Another AI blog post, right? Nope, not this time – let’s finally stop talking and start walking! 🎬💥 We’ve got some real goodies to share with you, whether you’re an SAP customer, SAP partner, or just one of those strange but adorable enthusiasts like me, who can’t get enough of SAP. 🤓💼

At TechEd 2023, SAP dropped some major news on the Artificial Intelligence front, including our new friend Joule, the SAP HANA Cloud Vector Engine, and the star of today’s show, the generative AI hub in SAP AI Core, wrapped up in a real-world use-case. 🚀💡 As we get ready for the service to roll out in Q4/2023 (planned), we just wanted to fill you in on what’s coming and how you can make the most of it in your SAP BTP solutions.

Too busy to read but still want the essential bits? 🏃♀️💨

Take a quick peek at our SAP-samples repository and Generative AI reference architecture🕵️♂️

Make sure you catch the brand-new openSAP course, “Generative AI at SAP” ✏️

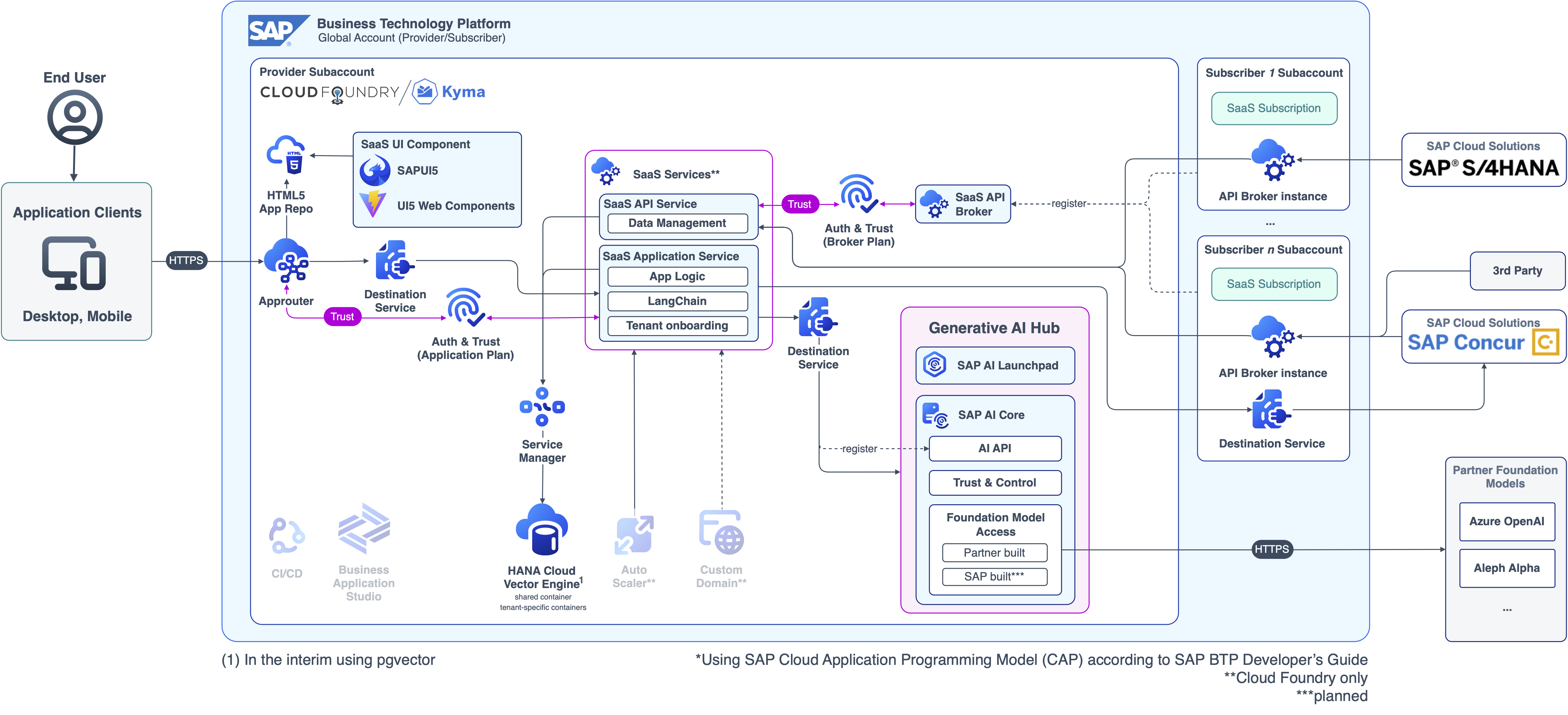

With our fresh-off-the-press reference architecture guidance for Generative AI scenarios, you’ll be more than ready to kickstart your own AI journey on SAP BTP. 🚀📚 Whether you’re building extensions, standalone solutions, or selling your own SaaS apps on SAP BTP, you can count on the generative AI hub for infusing your architecture with AI superpower. 🏗️💼👍

And it doesn’t stop there! You’ll also get to leverage best practices like a great SAPUI5 app, a robust SAP HANA Cloud back-end, a single and multitenant CAP application, and an API for your SaaS customers using the SAP Service Broker Framework. This sample scenario has got it all, folks! 🌟💪🎉 Great news – our sample is available for both the SAP BTP, Kyma Runtime and the SAP BTP, Cloud Foundry Runtime!

Generative AI reference architecture for multitenant SAP BTP applications

This blog post is gonna shine a spotlight 🕵️♂️ on how customer and partner apps on SAP BTP can take advantage of SAP’s freshest service offering – the new generative AI hub as part of SAP AI Core. 🎉 This is all about helping you to add some serious AI capabilities to your single- and multitenant solutions.

To make things a bit easier and to lay down some solid groundwork for all you developers 🧑💻 diving headfirst into the SAP AI space, we’re gonna show off a few neat AI features. These are part of a new SAP-samples GitHub repository all built around a make-believe travel agency 🔮 we’re calling ThorTours. 🌍✈️

And here’s the fun part: we’re gonna make ThorTours’ customer support processes even better using Generative AI. That means less effort per customer response, so incoming requests get handled faster, and at the same time more consistent support quality through reproducible responses. 🤖🚀 So hold on to your hats and let’s dive in! 🏊♀️👩💻

Customer Service app before the introduction of GenAI Mail Insights

Our GenAI Mail Insights sample solution is the new best friend of all ThorTours 🏝️⛱️ customer support employees 🙌. It’s all about giving you top-notch mail insights and automation in a comprehensive (multitenant) SAP BTP solution enhanced with a bunch of cool AI features 🤖. How does it do it? It uses Large Language Models (LLMs) via the generative AI hub in SAP AI Core to analyze incoming mails, offering categorization, sentiment analysis 📊, urgency assessment, and even key-fact extraction to add a personalized touch 🎁.

What’s the cherry on top? 🍒 It’s the super innovative feature of Retrieval Augmented Generation (RAG) using mail embeddings. This helps in spotting similar requests that have already been answered and infuses those confirmed responses as additional context to reduce hallucinations 👻, ensuring you always get consistent service quality 💯. It doesn’t stop there: it also summarizes and translates simple mail enquiries. But wait, there’s more!

Our sample setup boosts automation by generating potential answers for customer inquiries, enhancing response accuracy and speed like a boss 💪. And the best part? It’s not just for the travel industry – it’s adaptable for various scenarios using custom schemas. So, whatever your need, we’ve got you covered! 😎

Customer Service app after the introduction of GenAI Mail Insights

Why does this matter, you ask? 🤔 Well, companies often struggle with customer support headaches, everything from reading through long mails manually 📧 to language hurdles 🌍 and a lack of automation 🤖. What our demo solution brings to the table is quicker, more personalized, and consistent customer service 👥, savings on cost 💸, flexibility, and an edge over the competition 💼. And we’re not stopping there! In upcoming releases, we’re even thinking about an integration with SAP Cloud Solutions such as SAP Concur or a Microsoft Exchange Online Inbox. This could supercharge operations and give a boost to data-driven decision-making 📈.

Alright, enough talking for now – let’s roll up our sleeves and dive into some of the techie highlights you can uncover in our SAP-samples repository 🗂️. Ready to kick off with the first steps of processing new mail enquiries? This will lead us straight into our first challenge and the GenAI spotlight of our use-case 🎯. First things first, we’re going to see how our solution, built on SAP BTP, actually links up to the Large Language Models using the generative AI hub capabilities – the latest and greatest service offering as part of SAP AI Core announced at TechEd 2023 🔥.

If you’ve had a go at existing LLM services like Azure OpenAI, you’ll find the setup pretty familiar. 🧐 When an app needs to hook up with a Large Language Model (we’re talking Azure OpenAI in our scenario), it links up with the generative AI hub and calls the URL, or what we often call the inference endpoint, of a tenant-specific deployment. 🖥️🔗 Each tenant in a multitenant setup gets its own dedicated SAP AI Core resource group during onboarding, which is awesome because it offers the flexibility for individual model deployments and upcoming metering features. 📊🚀

Tenant specific SAP AI Core Resource Groups and LLM Deployments

(Preview Version – subject to change – check latest openSAP course)

When it comes to our sample scenario, we’re sending payloads to the Inference URL for Chat Completion and creating Embeddings. This process is a piece of cake 🍰 because it’s just like the Azure OpenAI API specification, which means requests and custom modifications are a breeze 🌬️. Just a heads up though, this is still an early-bird 🐦 release of the generative AI hub, and we might simplify the integration even more in the future. Why, you ask? Well, it’s all thanks to the rising popularity of contributions to Open-Source resources like LangChain. 🚀🔥
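To make the payload part a bit more tangible, here is a minimal sketch of what such request bodies could look like. It only assumes the publicly documented Azure OpenAI request shapes (`messages`, `max_tokens`, `temperature` for Chat Completion and `input` for Embeddings). The function names, the prompt texts and the parameter values are made up for illustration and are not taken from the sample repository.

```typescript
// Shapes modelled on the public Azure OpenAI REST API (assumption: the
// generative AI hub accepts the same bodies, as described above).
type ChatRole = "system" | "user" | "assistant";

interface ChatMessage {
  role: ChatRole;
  content: string;
}

interface ChatCompletionPayload {
  messages: ChatMessage[];
  max_tokens: number;
  temperature: number;
}

interface EmbeddingPayload {
  input: string;
}

// Hypothetical helper: body for a Chat Completion call that summarizes a mail.
function buildSummaryPayload(mailBody: string): ChatCompletionPayload {
  return {
    messages: [
      { role: "system", content: "You summarize customer mails for a travel agency." },
      { role: "user", content: `Summarize this mail in two sentences:\n${mailBody}` },
    ],
    max_tokens: 200, // illustrative limit
    temperature: 0, // low temperature to keep answers more reproducible
  };
}

// Hypothetical helper: body for an Embeddings call for the same mail.
function buildEmbeddingPayload(mailBody: string): EmbeddingPayload {
  return { input: mailBody };
}
```

Serialized with `JSON.stringify`, either object can be posted to the matching inference URL of a deployment.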

To learn more about the generative AI hub (incl. the latest preview) and how SAP is leveraging Generative AI, please check out the latest openSAP course Generative AI at SAP.

For the time being, we’ve got some handy sample wrappers 🎁 that make connecting to the generative AI hub deployments super easy. They take care of handling multitenancy and can be swiftly adapted for other Large Language Models available. Plus, these wrappers ensure compatibility with available LangChain features once the LLM object is instantiated. This allows you as a developer 🎩💡 to keep your eyes on the prize – the actual business process!

Calling the Chat Completion inference URL of a tenant-specific Deployment

(Implementation subject to change)
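Since the original screenshot is subject to change anyway, here is a rough, dependency-free sketch of the idea behind a tenant-aware call. It is not the wrapper from the repository. The registry, the type names, the function name and the placeholder URL are all hypothetical. The `AI-Resource-Group` header is assumed to be the way SAP AI Core scopes a request to a resource group, so please double-check it against the current API documentation.

```typescript
// Hypothetical per-tenant settings, e.g. stored during tenant onboarding.
interface TenantDeployment {
  resourceGroup: string; // SAP AI Core resource group of the tenant
  deploymentUrl: string; // inference URL of the tenant's LLM deployment
}

interface PreparedRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Looks up the tenant's deployment and prepares (but does not send) the request.
function buildTenantChatRequest(
  deployments: Map<string, TenantDeployment>,
  tenantId: string,
  accessToken: string,
  prompt: string,
): PreparedRequest {
  const deployment = deployments.get(tenantId);
  if (!deployment) {
    throw new Error(`No deployment onboarded for tenant ${tenantId}`);
  }
  return {
    url: deployment.deploymentUrl,
    method: "POST",
    headers: {
      Authorization: `Bearer ${accessToken}`,
      "Content-Type": "application/json",
      // Assumption: this header selects the tenant's resource group.
      "AI-Resource-Group": deployment.resourceGroup,
    },
    body: JSON.stringify({ messages: [{ role: "user", content: prompt }] }),
  };
}

// Usage with a placeholder URL (not a real endpoint).
const exampleDeployments = new Map<string, TenantDeployment>([
  ["tenant-a", { resourceGroup: "rg-tenant-a", deploymentUrl: "https://example.invalid/deployments/a" }],
]);
const exampleRequest = buildTenantChatRequest(exampleDeployments, "tenant-a", "<token>", "Hello!");
```

Keeping the lookup in one place means the business logic only ever passes a tenant ID and never has to know which resource group or deployment sits behind it.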

So, we’ve got a customer mail that’s just a big block of text, right? 😕 Our goal is to wrangle that into some useful info we can store away in our database. 📚 We’re using the brainpower of a Large Language Model, LangChain and custom schemas (courtesy of the npm zod package) to make this happen. It’s all about having a flexible and hands-on setup with the Large Language Model. 🧠💬

We’re using custom schemas and auto-generated format instructions (that’s some prompt engineering magic right there! 🔮) to get a nice and structured JSON result. 📄 We can process this result further and easily store it in our SAP HANA Cloud database using our beloved CAP framework, which makes that task child’s play. ☁️💾

The parsing features of LangChain and the custom schema package features are like two peas in a pod. 🌱 They make sure that the LLM response fits into a properly typed object without any further type conversion hassle. 🛡️ Pretty cool!? Sounds almost too good to be true! 😲🎉 Using these kinds of custom schema definitions will allow you to adapt the solution to any other kind of support scenario, by simply updating the respective schema details!

Using a custom schema (zod + LangChain) for a programmatic LLM interaction
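If you want to see the principle without installing anything, here is a small dependency-free sketch. To be clear: it does not use zod or LangChain. It is a hand-rolled stand-in that mimics the two steps described above, generating format instructions from a schema and validating the JSON answer against it. The schema fields and all function names are hypothetical, and unlike zod this toy version does not give you statically inferred types.

```typescript
type FieldType = "string" | "number" | "boolean";

interface FieldSpec {
  type: FieldType;
  description: string; // doubles as guidance for the LLM
}

type Schema = Record<string, FieldSpec>;
type ParsedFields = Record<string, string | number | boolean>;

// Hypothetical schema for a ThorTours mail; adapt the fields to your scenario.
const mailInsightsSchema: Schema = {
  category: { type: "string", description: "Category of the mail, e.g. complaint or booking" },
  sentiment: { type: "number", description: "Sentiment from -1 (negative) to 1 (positive)" },
  urgent: { type: "boolean", description: "Whether the mail needs an immediate response" },
};

// Turns the schema into format instructions that get appended to the prompt.
function formatInstructions(schema: Schema): string {
  const lines = Object.entries(schema).map(
    ([name, spec]) => `- "${name}" (${spec.type}): ${spec.description}`,
  );
  return `Answer with a single JSON object containing exactly these keys:\n${lines.join("\n")}`;
}

// Parses the LLM answer and checks every schema field for the expected type.
// Keys that are not part of the schema are dropped.
function parseResponse(schema: Schema, llmText: string): ParsedFields {
  const parsed: unknown = JSON.parse(llmText);
  if (typeof parsed !== "object" || parsed === null || Array.isArray(parsed)) {
    throw new Error("LLM response is not a JSON object");
  }
  const source = parsed as Record<string, unknown>;
  const result: ParsedFields = {};
  for (const [name, spec] of Object.entries(schema)) {
    const value = source[name];
    if (typeof value !== spec.type) {
      throw new Error(`Field "${name}" should be a ${spec.type}`);
    }
    result[name] = value as string | number | boolean;
  }
  return result;
}
```

Switching to another support scenario then boils down to swapping `mailInsightsSchema` for a different set of fields, which is the same adaptability argument made above for the zod-based setup.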

Apart from extracting a summary, insights and relevant key-facts from a new customer enquiry, we’ve also made life easier by automating the drafting of reliable response proposals for new mails. 📩 This brings us to our second reveal, a cool mix of Large Language Model (LLM) magic and the capabilities of what’s known as Retrieval Augmented Generation. 🎩✨ Also check the following blog post to learn more.

The concept is as straightforward as it is genius – we use an LLM to create a new response for a customer enquiry, but here’s the twist: we add in some extra context from previously answered similar mails. 🔄

While this might sound like a lot of effort, a very simple implementation is as easy as pie. 🥧 All you need is access to the generative AI hub to generate so-called embeddings for incoming mails and a suitable vector store to hold these embeddings. 🗄️ Embeddings are vector representations of various input formats (e.g., text, images, videos) in a high-dimensional space, capturing semantic relationships for natural language processing tasks 🤖. The SAP HANA Cloud Vector Engine will also support this requirement soon!

Once you’ve got these two features ready to go, you only need a few lines of custom code to spot similar mails in real time and inject the previous answer as additional context. All part of the regular prompt engineering process. 🚀

Leveraging the power of Retrieval Augmented Generation
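Those "few lines of custom code" can be sketched roughly like this. It is a toy in-memory version under a few assumptions: the embeddings have already been created, cosine similarity is used as the similarity measure (a common choice, not necessarily what the sample uses), and the store is a plain array instead of a real vector store. All names and the similarity threshold are hypothetical.

```typescript
// A previously answered mail together with its stored embedding.
interface AnsweredMail {
  subject: string;
  confirmedAnswer: string;
  embedding: number[];
}

// Cosine similarity of two equally long vectors (1 = same direction).
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length === 0 || a.length !== b.length) {
    throw new Error("Embeddings must be non-empty and of equal length");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    const x = a[i] ?? 0;
    const y = b[i] ?? 0;
    dot += x * y;
    normA += x * x;
    normB += y * y;
  }
  const denominator = Math.sqrt(normA) * Math.sqrt(normB);
  return denominator === 0 ? 0 : dot / denominator;
}

// Returns the best match at or above minScore, or undefined if there is none.
function findMostSimilar(
  query: number[],
  store: AnsweredMail[],
  minScore: number,
): AnsweredMail | undefined {
  let best: AnsweredMail | undefined;
  let bestScore = minScore;
  for (const mail of store) {
    const score = cosineSimilarity(query, mail.embedding);
    if (score >= bestScore) {
      best = mail;
      bestScore = score;
    }
  }
  return best;
}

// Injects the confirmed answer of a similar mail as additional context.
function buildResponsePrompt(newMail: string, similar: AnsweredMail | undefined): string {
  const context = similar
    ? `A similar request was answered before with this confirmed response:\n${similar.confirmedAnswer}\n\n`
    : "";
  return `${context}Draft a reply to the following customer mail:\n${newMail}`;
}
```

The threshold matters here: without a minimum score, even an unrelated old answer would be injected as context, which works against the goal of reducing hallucinations.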

Last but not least, let us try to handle the language barrier! As you all know, many of our fabulous SAP customers and partners are global rockstars 🌍🤘. This means dealing with international customers and team members from various countries. So, getting reliable translations is super crucial, right? 👏🔍

Our advice for top-notch translations in the SAP universe hasn’t shifted a bit. The SAP Translation Hub is our go-to recommendation for translating texts and documents in productive SAP scenarios. (Need a refresher? Click here!) 📚💡

Only for our simple and non-productive demo scenario, we’re mixing things up a bit and using Large Language Model capabilities – just for the sake of demonstration, mind you 😉. The LLM translates incoming customer requests into the user’s preferred language. And it doesn’t stop there! When you reply to those queries in your comfy working language, guess what? The recipient gets your response plus an automated translation whipped up by the Large Language Model. Another interesting use-case for Generative AI 🖥️🔗, showing you the endless possibilities this new technology has to offer.

Explore further exciting use-cases and scenarios for Generative AI

Hungry for more knowledge? 🧐 Get the ball rolling by diving into our Generative AI Reference Architecture 🏗️ and exploring our SAP-samples GitHub repository or related Discovery Center mission. 🚀 Once the generative AI hub in SAP AI Core goes public, you’ll be able to navigate our step-by-step guide 🗺️ to deploy our sample solution to your own SAP BTP account. 🤓

Please be aware that the generative AI hub as part of SAP AI Core is planned to be generally available in Q4/2023 (see Road Map Explorer) and the availability of the SAP HANA Cloud Vector Engine is planned for the Q1/2024 release (see Road Map Explorer). Please check the available SAP-samples GitHub repository to learn more!

Big virtual high-five 🙌 to all the team members who played a part in putting together this sample use-case for TechEd 2023, including Kay Schmitteckert, Iyad Al Hafez, Adi Pleyer, Julian Schambeck, Anirban Majumdar, and the entire SAP AI Core team around Hadi Hares and Andreas Roth. 🎉👏

Further links

- openSAP Course Generative AI at SAP

- Artificial Intelligence / Machine Learning

- SAP AI Core

- SAP AI Launchpad

- SAP AI Business Services

- SAP AI Ethics

- Document Information Extraction (SAP AI Business Services)

- Data Attribute Recommendation (SAP AI Business Services)

- Personalized Recommendation (SAP AI Business Services)
