SAP Datasphere allows organisations to create and manage data models, views, and integrations 2023-11-8 03:36:31 Author: blogs.sap.com(查看原文) 阅读量:6 收藏

SAP Datasphere allows organisations to create and manage data models, views, and integrations.

It provides native connections to SAP’s business suite. Snowflake is the ‘Data Cloud’ : providing organisations a way to store, process and share their data.

In this blog post, we will explore how to bring SAP Datasphere tables and views to Snowflake through a few simple steps :

- Prerequisites

- Importing data to SAP Datasphere

- Modeling In SAP Datasphere

- Create a database user for your SAP Datasphere space

- Establishing a connection from a replication tool to SAP Datasphere

- Run & monitor the pipeline

- Merge data from SAP Datasphere with data from Snowflake

1. Prerequisites

Before we begin, make sure you have the following prerequisites in place:

- Set up an SAP Datasphere (trial) account if you don’t already have one here.

- Create a space in SAP Datasphere and establish a connection to your source systems.

- Create a database user in your SAP Datasphere space.

- Set up a Snowflake (trial) account if you don’t already have one.

2. Import data to SAP Datasphere

First you need to set up your SAP Datasphere account, space, connection and database user. Then you’ll need some test data in tables. Follow Getting started with SAP Datasphere if it is your first time using SAP Datasphere.

You can access data by Import remote tables into SAP Datasphere, or by uploading a file to create a new local table :

Upload a file to create a table

3. Modeling In SAP Datasphere

In order to enable seamless data consumption by business users, a developper on SAP Datasphere can create data models that define the structure data, including tables, columns, and relationships.

SAP Datasphere allows you to integrate data from various sources, both on-premises and in the cloud. You can use pre-built connectors or custom connections to bring in data from SAP and non-SAP systems. Then, transformations are applied to raw data to prepare it for analysis. SAP

SAP Datasphere provides tools for cleansing, enriching, and transforming data :

Calculation Views and SQL Views used to define complex calculations and aggregations on your data. Calculation views enable you to create meaningful business metrics and key performance indicators (KPIs).

Security and Access Control: You can define security policies and access controls to ensure that users have the appropriate level of access to the data based on their roles and responsibilities. Collaboration: SAP DWC emphasises collaboration, allowing multiple users to work on the same data model simultaneously.

Graphical View

SQL View

Once your data model is created, expose the analytical dataset for consumption:

4. Create a database user for your SAP Datasphere space

Within Space management, create a new database user which will be used to exposed data to external tools. Ensure that the database user has the necessary read/write privileges.

You will need the host name, database user name, password.

Creation of a database user

5. Establishing a connection from a replication tool to SAP Datasphere

In this example, I use Microsoft Azure Data Factory as a replication tool from SAP Datasphere to Snowflake.

Within the Microsoft Azure console, open Azure Data Factory and start by creating Linked services for SAP HANA (the underlying database of SAP Datasphere) and Snowflake.

Create linked services for SAP Datasphere and for Snowflake

Add the host, user name and password of SAP Datasphere

Then, within the Author section, create a new Pipeline.

Create a new pipeline

Add a “copy data” activity to the pipeline.

Add a Copy data activity to the pipeline

Switch to the Source tab of your Copy data activity.

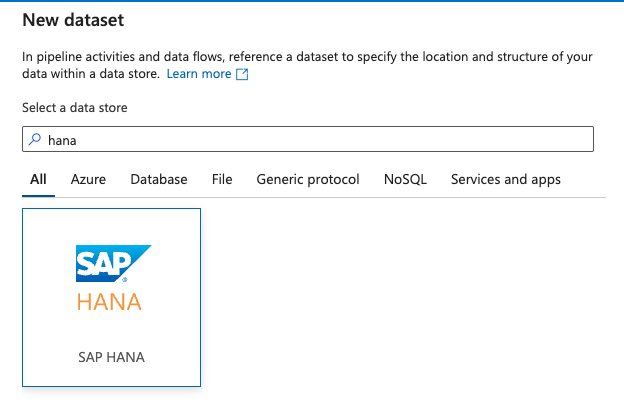

You will need to create a new source dataset for the Copy activity based on the view you created in SAP Datasphere.

Choose “New” for the source.

Set up the data source

Then choose SAP HANA Linked service and the corresponding data source.

Set the data source

Once your Source is set up, switch to the Sink tab of your Copy data activity. Then, create a new Sink dataset.

Set the data sink

Choose your Snowflake Linked service, then insert the target table name.

Snowflake Data sink

Finally, you can map your source columns to target columns.

Mapping

6. Run & monitor the pipeline

Monitor pipeline runs

7. Merge data from SAP Datasphere with data from Snowflake

Once you bring data from SAP Datasphere to Snowflake, you can merge it with other datasets residing in Snowflake. Snowflake supports advanced analytics and machine learning capabilities. By merging SAP data with other data sources, you can leverage these features to gain deeper insights and make more informed business decisions.

Here is the result of our data replication pipeline :

Select data from SAP Datasphere in Snowflake

Snowflake publishes tutorials for further data analysis, including SAP Accounts Receivable to Snowflake using Azure Data Factory. The result of this tutorial is a Tableau dashboard where you visualize Days Sales Outstanding coming from SAP FI-AR.

Track your Days Sales Outstanding in Tableau

Conclusion

You can seamlessly integrate SAP Datasphere views in Snowflake using any Replication tool.

This integration empowers you to leverage the combined capabilities of both platforms and unlock advanced analytics and machine learning opportunities.

Bringing SAP Datasphere data to Snowflake enables you to perform complex data analyses, build machine learning models, and gain valuable insights from your SAP data. Embrace the synergy of these robust tools to deliver successful data analytics.

Share any feedback or questions in the comments !

Maxime SIMON

如有侵权请联系:admin#unsafe.sh