Published: 2023-10-19 13:49:27. Source: blogs.sap.com

Hello. In today’s blog, I will discuss why and how we can use an external Generative AI service from SAP BTP using AI Core.

🤔 What is AI Core, and how does it differ from Data Intelligence Cloud?

🎯 SAP AI Core handles the execution and operations of your AI assets in BTP.

🌐 SAP Data Intelligence Cloud is for data scientists and IT teams to collaboratively design, deploy, and manage machine-learning models with SAP built-in tools for data governance, management, and transparency.

In a nutshell, in BTP you use Data Intelligence Cloud to build your own models, and AI Core to run a model you push from your GitHub repo or to consume an external model through an API.

There is a third way to use Python in the SAP ecosystem: SAP HANA or HANA Cloud with the PAL and APL libraries. If you want to use the power of HANA instead of the power of Docker/Kubernetes, this is not the blog for you; check out this repository.

AI Core has been around for some months, and running a Language Model on BTP has been described in previous blog posts here and here and in this tutorial.

Now, I will describe how to consume an external LLM service from BTP using AI Core.

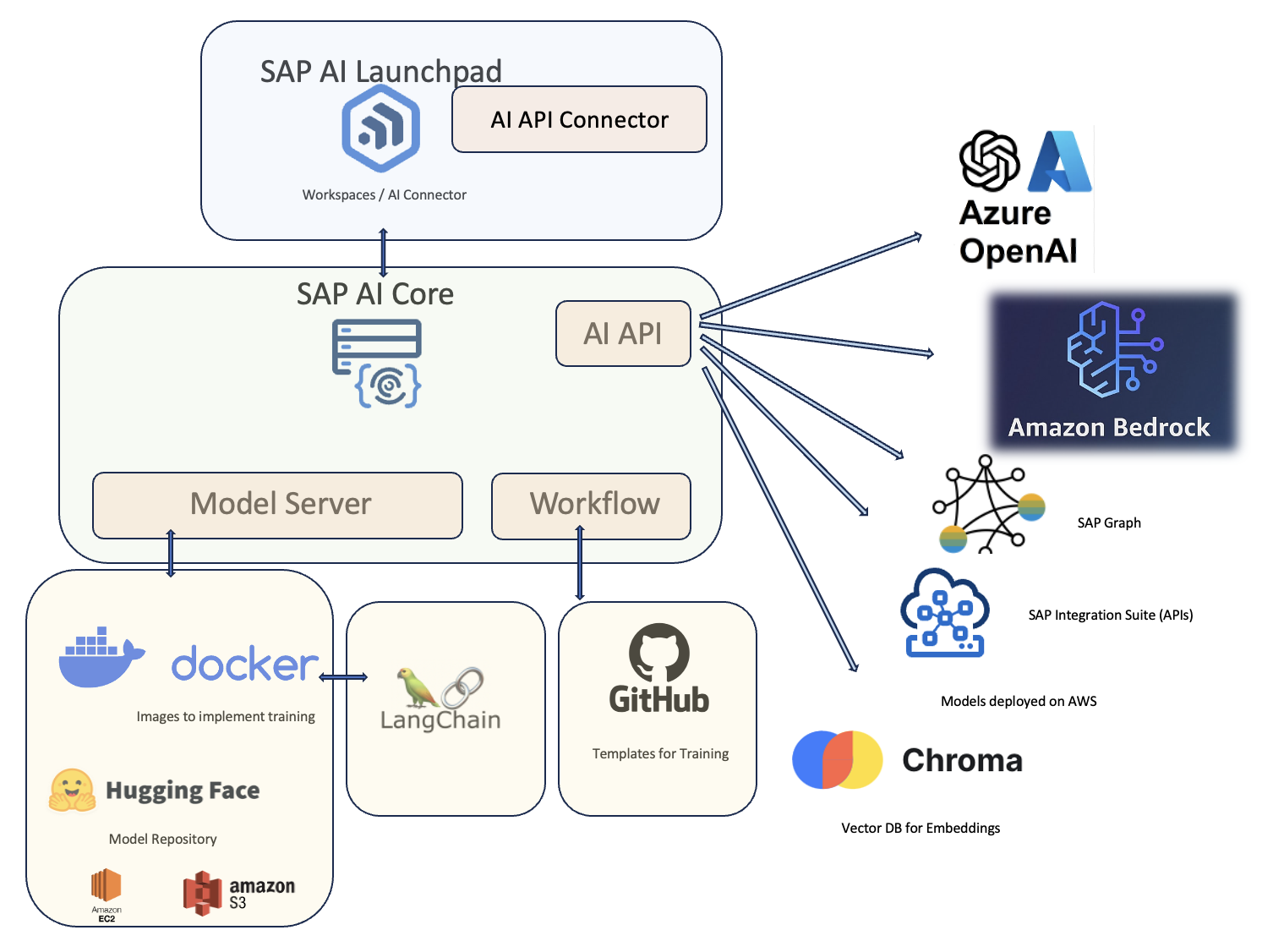

Key Elements of BTP AI Core and Launchpad from the image

Summary of Elements and Usage

| What | Why |
| --- | --- |
| Git repository | For storing training and serving workflows and templates |
| Amazon S3 | For storage of input and output artifacts, such as training data and models |
| Docker Repository | For custom Docker images referenced in the templates |
| Docker / Kubernetes | The cluster for AI pipelines |
| Amazon S3 / EC2 | As of today, AI Core runs on AWS infrastructure only, with Docker images tied to EC2 instances from the P and G families |
| AI API | For managing your artifacts and workflows (such as training scripts, data, models, and model servers) across multiple runtimes. The AI API can also integrate other machine learning platforms, engines, or runtimes into the AI ecosystem |
| LangChain | Language model application development and agents |
| SAP AI Launchpad | For managing AI use cases (scenarios) across multiple instances of AI runtimes (such as SAP AI Core) |
| Amazon Bedrock / Azure OpenAI | Models hosted on hyperscalers, accessed through an API |
| SAP Graph | Access to data from multiple line-of-business (LOB) systems, such as SAP S/4HANA Cloud, SAP SuccessFactors, and SAP S/4HANA |
| SAP Integration Suite | Access APIs from SAP or third-party systems |
| ChromaDB | Vector DB for embeddings (RAG) |

For other descriptions of AI Core objects, check out this blog.

- SAP BTP Subaccount

- SAP AI Core instance

- SAP AI Launchpad subscription (recommended)

- SAP BTP, Cloud Foundry Runtime

- SAP Authorization and Trust Management Service

- Docker for LangChain

- Complete AI Core Onboarding

- Access to the Git repository, Docker repository, and S3 bucket onboarded to AI Core.

- An Amazon Bedrock or Azure OpenAI account, with an API key created to access it and a model deployed on the service.

- Install the SAP AI Core SDK, a Python-based SDK that lets you access SAP AI Core using Python methods and data structures, using this link.

- Install the Content Package for Large Language Models for SAP AI Core, a content package to deploy LLM workflows in AI Core, following this link.

Docker is used to integrate your cloud infrastructure and push it to BTP.

- Register your Docker registry,

- Synchronize your AI content from your git repository,

- Productize your AI content

- Expose it as a service to consumers in the SAP BTP marketplace (for LangChain).

- Generate a Docker image with `aicore-content create-image`.

- Generate a serving template with `aicore-content create-template`.

Note that using LangChain, LlamaIndex, or other open-source frameworks on BTP is straightforward and possible on any of the resources available in BTP. You can find more information about the power of these instances in the AWS documentation for the P3 and G4 families.

LangChain is a recommended framework to use in combination with BTP. It lets developers use and build tools, prompts, vector stores, agents, text splitters, and output parsers, which are fundamental building blocks for high-quality LLM scenarios.
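To make two of those building blocks concrete, here is a minimal pure-Python sketch of what a text splitter and a prompt template do. It is an illustration only and does not use LangChain's own classes; the function names, the chunk sizes, and the template wording are all assumptions made for this example.

```python
from __future__ import annotations

# Hypothetical prompt wording, chosen only for this illustration.
PROMPT_TEMPLATE = (
    "Answer the question using only the context below.\n"
    "Context:\n{context}\n"
    "Question: {question}\n"
)


def split_text(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into character chunks of at most chunk_size, overlapping by overlap."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks


def build_prompt(context_chunks: list[str], question: str) -> str:
    """Fill the template with the selected chunks and the user question."""
    return PROMPT_TEMPLATE.format(context="\n".join(context_chunks), question=question)


if __name__ == "__main__":
    parts = split_text("abcdefghij", chunk_size=4, overlap=1)
    print(parts)
    print(build_prompt(parts[:2], "What comes after c?"))
```

In a real scenario the framework's splitters also respect sentence or token boundaries; the overlap is what keeps a fact that straddles two chunks retrievable from at least one of them.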

These are the instructions for registering essential artifacts and setting up a connection to SAP AI Core for deployment. Here is a summarized overview of the key steps:

- Register Artifacts: Create and configure JSON files for the following artifacts:

  - aic_service_key.json: Service key for your AI Core instance.

  - git_setup.json: Details of the GitHub repository that will contain workflow files.

  - docker_secret.json: Optional Docker secret for private images (use Docker Hub PAT).

  - env.json: Environment variables for Docker, Amazon Bedrock, or Azure OpenAI services.

- Connect to AI Core Instance: Use the AI API Python SDK to connect to your AI Core instance using the credentials from the service key file.

- Onboard the Git Repository: Register a GitHub repository with workflow files for training and serving. Provide repository details, including username and password.

- Register an Application: Create an application registration for the onboarded repository, specifying the repository URL, path, and revision.

- Create a Resource Group: Establish a resource group as a scope for registered artifacts. Modify the resource group ID based on your account type (free or paid).
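As a rough sketch of the "Connect to AI Core Instance" step, the snippet below reads the service key file and derives the values a client would need. The field names (`serviceurls`, `AI_API_URL`, `url`, `clientid`, `clientsecret`) and the `/v2` and `/oauth/token` suffixes are assumptions about how an AI Core service key is usually laid out, so check them against your own key. The helper names are hypothetical, and no SDK call is made here.

```python
import json


def load_json(path: str) -> dict:
    """Read one of the artifact files, e.g. aic_service_key.json."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def connection_params(service_key: dict) -> dict:
    """Derive connection values from a service key.

    Assumed key layout (verify against your own service key):
    serviceurls.AI_API_URL, url, clientid, clientsecret.
    """
    return {
        "base_url": service_key["serviceurls"]["AI_API_URL"].rstrip("/") + "/v2",
        "auth_url": service_key["url"].rstrip("/") + "/oauth/token",
        "client_id": service_key["clientid"],
        "client_secret": service_key["clientsecret"],
    }


if __name__ == "__main__":
    # Placeholder values only; a real key comes from your AI Core instance.
    sample_key = {
        "serviceurls": {"AI_API_URL": "https://api.example.invalid"},
        "url": "https://auth.example.invalid",
        "clientid": "my-client-id",
        "clientsecret": "my-client-secret",
    }
    print(connection_params(sample_key))
```

With the real file in place, `connection_params(load_json("aic_service_key.json"))` would produce the values to hand to the SDK client; the exact constructor arguments should be taken from the SDK documentation.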

Set up and deploy an inference service in AI Core:

- Serving Configuration: Create a serving configuration that includes metadata about the AI scenario, the workflow for serving, and details about which artifacts and parameters to use. This configuration helps define how the inference service will work.

- Execute Code: Use the provided code to create the serving configuration. This code involves loading environment variables and specifying parameters for the Docker repository and Azure OpenAI service. The resulting configuration will be associated with a resource group.

- Verify Configuration: Once the serving configuration is successfully created, it should appear in AI Launchpad under the ML Operations > Configurations tab.

- Serve the Proxy: Use AI Core to deploy the inference service based on the serving configuration. The code provided will initiate the deployment process.

- Check Deployment Status: Poll the deployment status until it reaches “RUNNING.” The code will continuously check the status and provide updates.

- Deployment Complete: Once the deployment is marked as “RUNNING,” the inference service is ready for use. The Deployment ID and other details appear in AI Launchpad under the Deployments tab.
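The "Check Deployment Status" step boils down to a polling loop. The sketch below shows one way to write it, with the status lookup injected as a callable so the loop does not depend on any particular SDK call. The function name, the defaults, and the failure states `DEAD` and `STOPPED` are assumptions for this example; only `RUNNING` is taken from the steps above.

```python
import time
from typing import Callable


def wait_until_running(
    get_status: Callable[[], str],
    interval_s: float = 5.0,
    max_polls: int = 60,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_status() until it returns RUNNING, fails, or max_polls is reached."""
    failure_states = {"DEAD", "STOPPED"}  # assumed terminal states
    for attempt in range(1, max_polls + 1):
        status = get_status()
        print(f"poll {attempt}: {status}")
        if status == "RUNNING":
            return status
        if status in failure_states:
            raise RuntimeError(f"deployment ended in state {status}")
        sleep(interval_s)
    raise TimeoutError(f"deployment not RUNNING after {max_polls} polls")


if __name__ == "__main__":
    # Simulated status sequence standing in for real status lookups.
    simulated = iter(["UNKNOWN", "PENDING", "RUNNING"])
    wait_until_running(lambda: next(simulated), interval_s=0.0)
```

In practice `get_status` would wrap whatever call returns the deployment status for your Deployment ID and resource group. Capping the number of polls avoids waiting forever on a deployment that never starts.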

Here are the key actions and steps for using RAG question answering with embeddings, or in-context learning, from SAP Graph:

Pre-requisites:

- Create an object store secret in AI Core

- A Chroma vector database running in a Docker container on BTP.

- Get familiar with the SAP Graph APIs or SAP Integration Suite APIs

The following describes how to set up and deploy Chroma DB, a vector database, as a Docker container. Here are the key steps and points covered:

- Chroma DB in Production: When using Chroma DB in production, running it in client-server mode is preferable. This involves having a remote server running Chroma DB and connecting to it using HTTP requests.

- Setting Up a Chroma Client: The text provides Python code for setting up a Chroma client that connects to the Chroma DB server using HTTP. It configures the client to interact with the server.

- Creating a Dockerfile for the Client: A Dockerfile is designed for the Chroma client to run it as a container service. This file specifies the base image, working directory, package installations, and default command.

- Docker Compose: A Docker Compose file defines two services: the Chroma client and the Chroma DB server. It also creates a network bridge to allow communication between these services.

- Running Chroma DB and the Client: The `docker-compose up --build` command creates containers and runs Chroma DB and the Chroma client. This command should be executed with Docker Desktop running.

- Consume SAP Graph from SAP Integration Suite following this video.

- Repository Link: A link to the complete repository for the Docker approach is provided.

- Use your deployment for question-answer queries, as demonstrated in the example at https://pypi.org/project/sap-ai-core-llm/
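To show what the retrieval part of RAG question answering does conceptually, here is a toy, standard-library-only sketch. It is not how Chroma or a real embedding model works internally: word counts stand in for embeddings, a Python list stands in for the vector database, and the sample documents and function names are invented for this example.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real setup uses an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the question."""
    query = embed(question)
    ranked = sorted(documents, key=lambda doc: cosine(query, embed(doc)), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Put the retrieved documents into the prompt as context for the LLM."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    # Invented sample records standing in for data fetched through SAP Graph.
    docs = [
        "Sales order 1001 was delivered to Berlin.",
        "Employee Anna works in the finance department.",
        "Purchase order 2002 is awaiting approval.",
    ]
    print(build_rag_prompt("Which department does Anna work in?", docs, k=1))
```

The resulting prompt is what would be sent to the deployed LLM proxy. In the setup described above, the vector store would be the Chroma DB server and the documents would come from SAP Graph or SAP Integration Suite APIs.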

More Materials 📚

Beginner Materials for AI Core and AI Launchpad

- SAP AI Core Toolkit

- SAP AI Core Architecture and objects for beginners

- Quick Start for Your First AI Project Using SAP AI Core

- Create Your First Machine Learning Project using SAP AI Core

- Develop an LLM-Based Application with Graph in SAP Integration Suite

Blog post series of Building AI and Sustainability Solutions on SAP BTP

- Meet SAP’s AI Portfolio and What It Can Do For You

- An overview of sustainability on top of SAP BTP

- Introduction of end-to-end ML ops with SAP AI Core

- BYOM with TensorFlow in SAP AI Core for Defect Detection

- BYOM with TensorFlow in SAP AI Core for Sound-based Predictive Maintenance

- Embedding Intelligence and Sustainability into Custom Applications on SAP BTP

- Maintenance Cost & Sustainability Planning with SAP Analytics Cloud Planning

Demo videos recorded by SAP HANA Academy

- SAP Artificial Intelligence Onboarding / Pre-reqs Playlist

- SAP Artificial Intelligence Defect Detection Playlist

- SAP Artificial Intelligence Predictive Maintenance Playlist

- SAP Artificial Intelligence; Application Playlist

- Maintenance Cost & Sustainability Planning with SAP Analytics Cloud Playlist

Useful links for SAP AI Core and SAP AI Launchpad

- SAP AI Core Help Center

- SAP AI Launchpad Help Center

- Learning Journey of SAP AI

- SAP Computer Vision Package

- Metaflow library for SAP AI Core

Amazon Bedrock, Azure OpenAI, and ChromaDB GitHub repositories
