2023-10-12 04:2:6 Author: blogs.sap.com(查看原文) 阅读量:8 收藏

Hi, SAP community!

I’d like to present a proof of concept I undertook, focusing on a particular scenario in Datasphere.

Problem Statement:

Consider this scenario where we need to store the data from 1000+ Excel files in SAP Datasphere. With the current features available in SAP Datasphere as of version 2023.21. Data from CSV files can be uploaded into a local table by going to Databuilder>Import CSV File.

However, the drawback of this method is that it has a size limit of around 200 MB and the files can not be queued and have to be uploaded one at a time. Considering the number of files, and depending upon the type of transformations & cleansing this can quickly become a daunting task.

Solution:

I embarked on a proof of concept (POC) to automate this mammoth manual task. Leveraging AI solutions like ChatGPT & BingAI, I built a pipeline that encompasses the following steps:

Steps:

- Initial Upload: Gather the required CSV files and upload them to Google Drive.

- Mounting the Drive to Collab: Use Python to mount your Google Drive in Google Colab.

- Data Transformation with Python: Run a Python script in Google Colab to process and cleanse the data. Save the results as new Excel files in a designated Google Drive folder.

- Upload into Google Cloud Storage: Set up an account on the Google Cloud platform and move the transformed files into a bucket in Google Cloud Storage (GCS)

- Integration with Google BigQuery (BQ): Transfer data from GCS to a structured table in BQ.

- SAP Datasphere & BQ Connection: Set up a BigQuery connection in Datasphere.

- Dataflow: Push data from BQ to a local table in Datasphere using the BQ source connection in Dataflow.

- Dashboard: Craft a story in SAC to visualize the processed data.

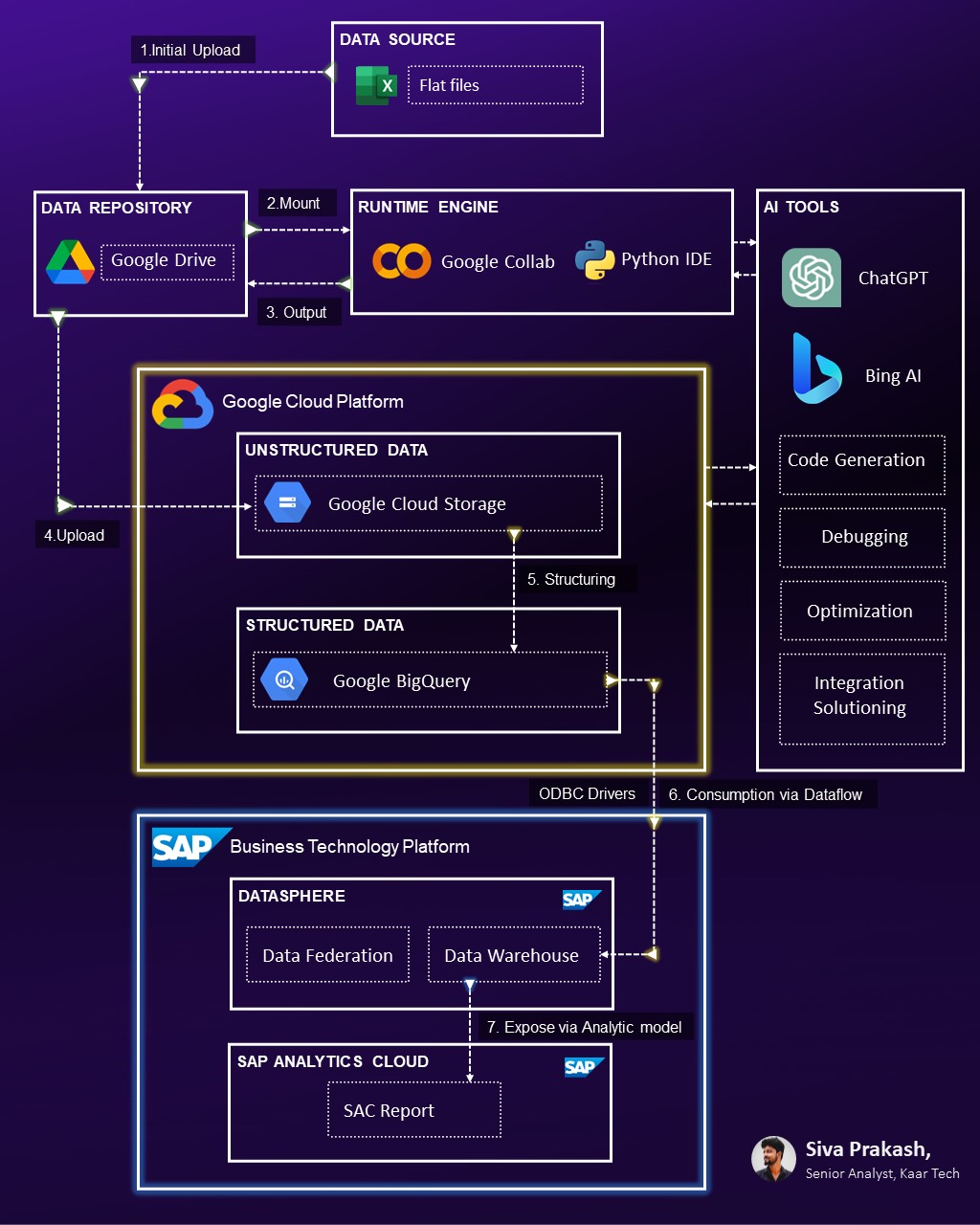

Architecture Diagram:

High-level architecture of automating flat file upload into Datasphere using AI, Google GCS, and BQ

Deep Dive into the Process:

- Python coding in Collab with AI Tools Support: I automated data transformations for a multitude of Excel files by leveraging Google Colab and the powerful Python IDE and its libraries. With the assistance of AI tools like ChatGPT and Bing AI, the Python script was developed, tested, and refined incrementally. I validated the code initially with a smaller dataset and eventually backtested with larger datasets and did a few error handling.

- Google Storage Solutions & BigQuery Integration: I used the advanced capabilities of GCS to house the transformed files. Initially, I tried fetching data directly from Google Cloud Storage to SAP Datasphere. However, this method required creating a projection for every file in our bucket. To circumvent this, I decided to structure the data in Google BigQuery using the data from the GCS bucket.

- Connecting BQ to Datasphere: With the data aptly structured in BQ, I then integrated it with SAP Datasphere, ensuring a smooth and accurate data transition.

Conclusion:

With strategic AI collaboration, we transitioned from a labor-intensive process to an automated, efficient solution. Embracing AI tools, rather than viewing them as competition, can amplify human capabilities, leading to groundbreaking solutions.

I hope this blog offers a clear perspective on integrating SAP with non-SAP solutions. By adopting such methodologies, you might find ways to enhance your development process and potentially save time.

Hope this article was helpful.

For further insights, please refer to these articles:

Create Google Cloud Storage Bucket

Prepare Connectivity to Google BigQuery

Creating a Google Big Query connection in SAP Datasphere (DWC)

如有侵权请联系:admin#unsafe.sh