2023-03-21 00:06:37 Author: blog.includesecurity.com

Server-Side Request Forgery (SSRF) is a vulnerability that allows an attacker to trick a server-side application into making a request to an unintended location. SSRF, unlike most other specific vulnerabilities, has gained its own spot on the OWASP Top 10 2021. This reflects both how common and how impactful this type of vulnerability has become. It is often the means by which attackers pivot a level deeper into network infrastructure, and eventually gain remote code execution.

In this article we review the different ways of triggering SSRF and the main mitigation techniques for it, and discuss which of those techniques we believe are most effective based on our experience of application security pentests.

SSRF Refresher

Like most vulnerabilities, SSRF is caused by a system naively trusting external input. With SSRF, that external input makes its way into a request, usually an HTTP request.

These two tiny Python Flask applications give a simplified example of the mechanism of SSRF:

admin.py

    from flask import Flask

    app = Flask(__name__)

    @app.route('/admin')
    def admin():
        return "Super secret admin panel"

    if __name__ == "__main__":
        app.run(host="127.0.0.1", port=8888)

app.py

    from flask import Flask, request
    import requests

    app = Flask(__name__)

    @app.route('/get_image')
    def get_image():
        image_url = request.args.get('image_url', '')
        if image_url:
            return requests.get(image_url).text
        else:
            return "Please provide an image URL"

    if __name__ == "__main__":
        app.run(host="0.0.0.0", port=5000)

The admin app is running on 127.0.0.1:8888, the localhost network, as it is intended to only be used by local application administrators. The main app, which is running on the same machine at port 5000 but (let’s imagine) is exposed to the Internet, has the vulnerable get_image() function. This can be used by an external attacker to forge a server-side request to the internal admin app:

    $ curl http://0.0.0.0:5000/get_image?image_url=http://127.0.0.1:8888/admin
    Super secret admin panel

This simple example shows the two key elements of an SSRF attack:

- The application allows external input to flow into a server-side request.

- A resource which should not be available to the attacker can be accessed or manipulated.

An SSRF can either be used to access internal resources that should not be available to an attacker, or used to access external resources in an unintended way. Examples of external resource access would include importing malicious data into an application, or spoofing the source of an attack against a third-party. SSRF against internal resources is more common and usually more impactful, so it’s what we’ll be focusing on in this post.

Failed Attempts at Mitigating SSRF

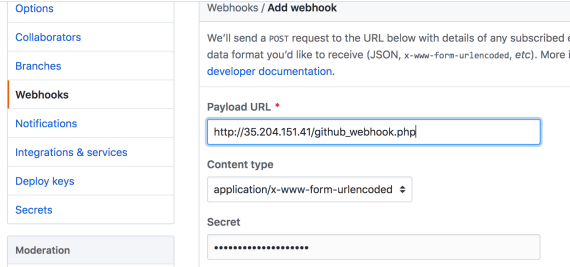

The most obvious way to mitigate SSRF would be to completely prevent external input from influencing server-side requests. Unfortunately, there are legitimate reasons why an application may need to allow external input. For instance, webhooks are user-defined HTTP callbacks that execute in order to build workflows with third-party infrastructure, and can usually be arbitrary URLs.

But before investigating the case where we need to allow arbitrary user-controlled requests, let’s just focus on the get_image() functionality for now which is trying to fetch an image from an external service.

Incomplete Allowlisting

The get_image() function of app.py is fetching a certain type of image URL so should be much more specific in what user input it accepts. Someone who is unaware of the history of SSRF attacks might think the below is enough to stop any shenanigans:

    BASE_URL = "http://0.0.0.0:5000"

    @app.route('/get_image')
    def get_image():
        image_path = request.args.get('image_path', '')
        if image_path:
            return requests.get(f"{BASE_URL}{image_path}.png").text
        else:
            return "Please provide an image URL"

get_image() now appears to be anchoring the input to the application hostname at the beginning, and the PNG extension at the end. However both can be cut out of the request by taking advantage of features of the URL standard with the payload @127.0.0.1:8888/admin#:

    $ curl 'http://0.0.0.0:5000/get_image?image_path=@127.0.0.1:8888/admin%23'
    Super secret admin panel

It’s clearer to show the full request that gets sent by the backend:

    >>> requests.get("http://0.0.0.0:5000@127.0.0.1:8888/admin#.png").text
    'Super secret admin panel'

The BASE_URL becomes HTTP basic authentication credentials due to the use of “@”, and “.png” becomes a URL fragment due to the use of “#”. Both of these get ignored by the admin server and the SSRF succeeds.
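To see how a standards-following parser splits that string, here is a small check using Python’s standard urllib.parse. The article’s app uses requests; the stdlib parser is used here only to illustrate the URL components, and other parsers may differ in edge cases.

```python
from urllib.parse import urlsplit

BASE_URL = "http://0.0.0.0:5000"
payload = "@127.0.0.1:8888/admin#"   # attacker-supplied image_path

# Rebuild the URL exactly as the f-string in get_image() does.
url = f"{BASE_URL}{payload}.png"
parts = urlsplit(url)

print(url)             # http://0.0.0.0:5000@127.0.0.1:8888/admin#.png
print(parts.username)  # 0.0.0.0    (the intended host, demoted to userinfo)
print(parts.password)  # 5000
print(parts.hostname)  # 127.0.0.1  (where the request actually goes)
print(parts.port)      # 8888
print(parts.path)      # /admin
print(parts.fragment)  # .png       (a fragment is never sent to the server)
```

The intended host and port end up in the userinfo component, and the host that is actually contacted is entirely attacker-controlled.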

We said that this takes advantage of features of the URL standard, but things get wild when you consider that there are multiple URL specifications and that the different HTTP request libraries out there tend to implement them slightly differently. This opens the door to bizarre methods for fooling URL parsing, as demonstrated by researchers like Orange Tsai.

Overall, limiting the influence of external input is always a good idea, but it’s not a failsafe technique.

Incomplete Blocklisting

The other commonly seen, but even more inadequate approach to SSRF prevention is an incomplete attempt at blocking scary hostnames.

Worst of all is a check that uses a function like urlparse() that just grabs the hostname out of a URL:

    from flask import Flask, request
    from urllib.parse import urlparse
    import requests

    app = Flask(__name__)

    BLOCKED = ["127.0.0.1", "localhost", "169.254.169.254", "0.0.0.0"]

    @app.route('/get_image')
    def get_image():
        image_url = request.args.get('image_url', '')
        if image_url:
            if any(b in urlparse(image_url).hostname for b in BLOCKED):
                return "Hack attempt blocked"
            else:
                return requests.get(image_url).text

    if __name__ == "__main__":
        app.run(host="0.0.0.0", port=5000)

There are a large number of payloads which bypass the snippet above: 127.0.0.1 in different numeric formats (decimal, octal, hex etc.), equivalent IPv6 localhost addresses, parsing peculiarities like 127.1, and alternative representations of 0.0.0.0. There are generally enough variants to make you realize that hand-rolling a blocklist is a cursed idea.
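As a rough illustration, the sketch below runs a few alternative spellings through the same substring check and through the C library’s inet_aton() parser, as exposed by Python’s socket module. The list of spellings is only a sample. Which forms are accepted depends on the platform’s C library, and whether a given HTTP client accepts them depends on its own parser and resolver.

```python
import socket

BLOCKED = ["127.0.0.1", "localhost", "169.254.169.254", "0.0.0.0"]

def naive_blocklist_hit(hostname):
    """The substring check used by the snippet above."""
    return any(b in hostname for b in BLOCKED)

def normalize_ipv4(text):
    """Parse text the way the platform's inet_aton() does; None if rejected."""
    try:
        return socket.inet_ntoa(socket.inet_aton(text))
    except OSError:
        return None

# Decimal, hex, octal, and short-form spellings of the loopback address.
SPELLINGS = ["2130706433", "0x7f000001", "017700000001", "127.1", "0x7f.1"]

for spelling in SPELLINGS:
    print(f"{spelling:>14}  blocked={naive_blocklist_hit(spelling)}  "
          f"parses_as={normalize_ipv4(spelling)}")
```

None of these strings contains a blocklisted substring, yet on common platforms each is parsed as the loopback address.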

But, without even considering whether the BLOCKED list is any good, there’s a straightforward bypass here. An attacker can just input a domain like localtest.me whose DNS lookup resolves to 127.0.0.1:

    $ curl http://0.0.0.0:5000/get_image?image_url=http://127.0.0.1:8888/admin
    Hack attempt blocked
    $ curl http://0.0.0.0:5000/get_image?image_url=http://localtest.me:8888/admin
    Super secret admin panel

This demonstrates that the provided hostname needs to be resolved, so the next stage of evolution of bad mitigations would be the following:

    import socket  # needed for gethostbyname(); other imports as in the previous snippet

    BLOCKED = ["127.0.0.1", "localhost", "169.254.169.254", "0.0.0.0"]

    @app.route('/get_image')
    def get_image():
        image_url = request.args.get('image_url', '')
        if image_url:
            # Resolve the hostname first, then apply the blocklist to the resulting IP
            if any(b in socket.gethostbyname(urlparse(image_url).hostname) for b in BLOCKED):
                return "Hack attempt blocked"
            else:
                return requests.get(image_url).text

Even assuming the blocklist was perfect (this one is far from it), this code is still vulnerable to three different types of time-of-check to time-of-use (TOCTTOU) vulnerability:

- HTTP Redirects

- DNS Rebinding

- Parser differential attacks

HTTP Redirects

A redirect is the most straightforward way to demonstrate the problem of TOCTTOU in the context of HTTP. The attacker hosts the following server:

attackers_redirect.py

    from flask import Flask, redirect

    app = Flask(__name__)

    @app.route('/')
    def main():
        return redirect("http://127.0.0.1:8888/admin", code=302)

    if __name__ == '__main__':
        app.run(host='0.0.0.0', port=1337)

By causing the vulnerable application to make an SSRF to the attacker server, the attacker server’s hostname bypasses the localhost blocklist, but a redirect is triggered back to localhost, which is not checked:

    $ curl http://127.0.0.1:5000/get_image?image_url=http://0.0.0.0:1337
    Super secret admin panel

DNS Rebinding

DNS rebinding is an even more devastating technique. It exploits the TOCTTOU that exists between the DNS resolution of a domain when it’s validated, and when the request is actually made.

Services like rbndr.us make this easy; we can use it to generate a URL such as http://7f000001.0a00020f.rbndr.us, which alternates between resolving to 127.0.0.1 and 10.0.2.15, and request that in a loop:

    $ while sleep 0.1; do curl http://0.0.0.0:5000/get_image?image_url=http://7f000001.0a00020f.rbndr.us:8888/admin; done
    <!doctype html>
    <html lang=en>
    <title>404 Not Found</title>
    <h1>Not Found</h1>
    <p>The requested URL was not found on the server. If you entered the URL manually please check your spelling and try again.</p>
    Super secret admin panel
    Hack attempt blocked
    Hack attempt blocked

It took a while, but eventually the timings lined up: the rbndr.us DNS server gave one answer to the gethostbyname() check and a different one to the requests library, allowing the SSRF to succeed. DNS rebinding is fun as it can subvert the logic of applications which assume that DNS records are fixed, rather than malleable values under attacker control.
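The race can be reproduced offline with a toy model. The sketch below is a simplification under stated assumptions: AlternatingResolver is a hypothetical stand-in for a rebinding DNS server, and it alternates deterministically, whereas against a real server the attacker has to retry until the answers line up.

```python
import itertools

BLOCKED = {"127.0.0.1"}

class AlternatingResolver:
    """Hypothetical stand-in for a rebinding DNS server.

    Every lookup returns the next address in the cycle, regardless of
    the hostname asked for.
    """
    def __init__(self, addresses):
        self._cycle = itertools.cycle(addresses)

    def resolve(self, hostname):
        return next(self._cycle)

def check_then_use(hostname, resolver):
    """Mimic the vulnerable flow: one lookup to validate, a second to connect."""
    checked_ip = resolver.resolve(hostname)    # time of check
    if checked_ip in BLOCKED:
        return ("blocked", checked_ip)
    connected_ip = resolver.resolve(hostname)  # time of use: an independent lookup
    return ("connected", connected_ip)

resolver = AlternatingResolver(["10.0.2.15", "127.0.0.1"])
print(check_then_use("7f000001.0a00020f.rbndr.us", resolver))
# ('connected', '127.0.0.1')
```

The validated address and the connected address come from two separate lookups, which is the gap any real fix has to close.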

Parser Differential Attacks

Finally, parser differential attacks are the end-game of the multiple URL standards and implementations mentioned a few sections above, where you’ll sometimes find applications that use one library to check a URL and another to make the request. Their different interpretations of the same string can enable an SSRF exploit to slip through.
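A minimal sketch of such a disagreement, assuming a hand-rolled regular expression as the validator and Python’s urllib.parse standing in for the HTTP client’s parser. The allowlisted hostname images.example.com and both helper functions are made up for illustration.

```python
import re
from urllib.parse import urlsplit

ALLOWED_HOSTS = {"images.example.com"}  # hypothetical allowlist

def validator_host(url):
    """Hand-rolled check: take the run of hostname characters after the scheme."""
    match = re.match(r"https?://([A-Za-z0-9.-]+)", url)
    return match.group(1) if match else None

def client_host(url):
    """What a standards-following parser treats as the host."""
    return urlsplit(url).hostname

url = "http://images.example.com@127.0.0.1:8888/admin"

print(validator_host(url))                   # images.example.com
print(validator_host(url) in ALLOWED_HOSTS)  # True, so the check passes
print(client_host(url))                      # 127.0.0.1, where the request goes
```

Using the same parser for both validation and the request removes this particular class of mismatch, although it does nothing about redirects or DNS rebinding.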

Not Returning a Response

Sometimes you’ll find an SSRF that doesn’t return a response to the attacker, perhaps under the assumption that this is unexploitable. This is termed a “Blind SSRF”, and there are some great resources out there that show how these can often be converted to full SSRF vulnerabilities.

Application Layer Mitigation

So, having been through that journey of failed approaches, let’s talk about the decent techniques for SSRF mitigation. We’ve accepted that sometimes we need to allow users to supply arbitrary URLs for requests made from our infrastructure. How can we stop those requests from doing anything malicious?

As we’ve seen in the previous sections, an effective SSRF mitigation approach needs at least the following capabilities:

- Blocklist private IP addresses

- Allowlist only permitted domains (configurable)

- Check an arbitrary number of redirected domains

- DNS rebinding protection

All these can be incorporated into an application library. This was the approach Include Security took back in 2016 with the release of our SafeURL libraries. They are designed to be drop-in replacements for HTTP libraries such as Python’s requests. Doyensec recently released a similar library for Golang.
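As an illustration of one capability from the list above, checking every redirected destination, here is a minimal sketch. It is not taken from SafeURL or any other library: fetch_checking_redirects, BlockedDestination, and the is_allowed and send callables are all hypothetical names, and the policy and the HTTP transport are injected so the hop-by-hop logic stands on its own.

```python
from urllib.parse import urljoin

REDIRECT_CODES = {301, 302, 303, 307, 308}

class BlockedDestination(Exception):
    """Raised when a hop fails the policy check or the chain is too long."""

def fetch_checking_redirects(url, is_allowed, send, max_hops=5):
    """Follow redirects by hand, validating every hop before requesting it.

    is_allowed(url) -> bool and send(url) -> (status, headers, body) are
    supplied by the caller; send must NOT follow redirects itself.
    """
    for _ in range(max_hops + 1):
        if not is_allowed(url):
            raise BlockedDestination(url)
        status, headers, body = send(url)
        location = headers.get("Location")
        if status not in REDIRECT_CODES or not location:
            return body
        url = urljoin(url, location)  # Location may be a relative reference
    raise BlockedDestination(f"more than {max_hops} redirects")
```

With the requests library, send could wrap `requests.get(url, allow_redirects=False)`. On its own this only closes the redirect gap: if is_allowed resolves hostnames separately from the request, the DNS rebinding race remains.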

The way these libraries work is by hooking into the lower-level HTTP client libraries of the respective languages (e.g. urllib3 or pycurl). TOCTTOU is prevented by validating the IP we are about to connect to just before opening a socket to the requested website with that IP. An optional configuration can be provided that enables a developer to decide which types of requests to allow, but by default it just blocks any requests to internal IP addresses. Then, a user-friendly interface can be presented similar to the higher-level HTTP libraries that are normally used.
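The core idea of validating the exact IP that is about to be connected to can be sketched with the standard library alone. This is an illustration of the principle, not the SafeURL implementation; is_public_ip and connect_validated are hypothetical helper names, and "globally routable" is used as the default policy.

```python
import ipaddress
import socket

def is_public_ip(text):
    """True only for globally routable addresses."""
    ip = ipaddress.ip_address(text)
    # Judge IPv4-mapped IPv6 (::ffff:a.b.c.d) as the IPv4 address it reaches.
    if ip.version == 6 and ip.ipv4_mapped is not None:
        ip = ip.ipv4_mapped
    return ip.is_global

def connect_validated(host, port, timeout=5.0):
    """Resolve once, validate every candidate, connect to a validated IP."""
    infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    addresses = [info[4][0] for info in infos]
    if not addresses or not all(is_public_ip(a) for a in addresses):
        raise PermissionError(f"{host} resolves to a non-public address: {addresses}")
    # Connecting by IP rather than hostname means no second DNS lookup
    # can change the target after validation.
    return socket.create_connection((addresses[0], port), timeout=timeout)
```

A real library still has to send the original hostname in the Host header and in TLS SNI over this socket, which is why these libraries hook into the lower-level HTTP client rather than replacing it.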

Pros

The main benefit of this approach is that it’s easy to integrate into an application. An application developer can import the library and use it to make requests and the details are taken care of. One could argue that these sorts of libraries should become the default higher-level HTTP requests libraries just as modern XML libraries no longer load external entities without explicit configuration.

Cons

However, there are downsides to this approach. Developers have to remember to use the SSRF-safe library instead of the normal requests library. This can be enforced by static code analyzers on code check-in, but there are a large number of HTTP client libraries in most languages (e.g. Ruby has faraday, multipart-post, excon, rest-client, httparty, and more), making it easy for the rules to miss some. Further, there are several places where SSRF vulnerabilities can exist outside of the application. They could occur, for instance, in HTML to PDF generation services using PhantomJS or Headless Chrome, or in other types of media conversion where an external process is spawned that circumvents the mitigation. Third-party package dependencies that incidentally make HTTP requests would also not use the SSRF-safe library.

There’s also the burden of maintaining SSRF-safe libraries. While more annoying in some languages than others, the low-level functions that the libraries hook into change over time and there’s always the chance of bugs appearing due to this. As we’ve noted, it’s hard to design perfect blocklists and possible for libraries to miss some detail of address validation – this is particularly evident with IPv6 support which is complex with compatibility protocols such as DNS64, Teredo, and 6to4. Finally, internal hosts may be given globally routable addresses but be firewalled off from external hosts. Ultimately, an application layer library isn’t the best place to defend subtleties in network policy like this.

SSRF Jail

An approach we explored internally but never published is to go one level lower and mitigate SSRF at a level somewhere between the operating system and application layer. Named SSRF Jail, it’s a dynamic library that is installed into a target process and hooks into DNS and networking subsystem calls. For instance, on Linux the getaddrinfo() and connect() functions are hooked in the C standard library such that an opened socket can only connect to configured IP addresses.

The advantage of this approach is that it doesn’t require application library changes, and is more exhaustive so long as the target process doesn’t bypass the C standard library and use syscalls directly (e.g. Golang). The main disadvantage, which led us to abandon this approach, is that it is not granular enough: an application may need to permit requests to private IPs in specific circumstances but not in most requests, and the lower-level hooks are indiscriminate.

Network Controls

We haven’t really addressed the elephant in the room for some readers here, which is “why not just firewall the app?” With all the talk of the tricky problems of application-layer blocklisting, surely the network is the right place to mitigate SSRF. Firewall rules would prevent any traffic between services and ports unless they needed it for normal functioning.

However, this is overall a blunt approach that doesn’t work with many real-world systems. We often see applications where some privileged part needs to make HTTP requests to another internal service, but the rest of the application has no need to. Further, firewalls struggle to stop SSRFs from hitting services running on the same localhost as the application (e.g. Redis). An ideal network architecture wouldn’t be set up this way in the first place, but for organizations that haven’t fully bought into microservices (usually for valid reasons) it’s common to see.

Therefore, requiring authentication to internal services and endpoints is important, especially (but not only) if an IP or port can’t be filtered outright. In the cloud world, things are moving in this direction. A well-known target for SSRF has been the AWS EC2 instance metadata endpoint 169.254.169.254. Enabled by default, the endpoint is a rich source of internal data and credentials that can be accessed via SSRF from an EC2 instance. Blocking it outright could be difficult if the application relies on metadata. Version 2 of the instance metadata endpoint was released in 2019 and adds token authentication, but at the time of writing it still has to be explicitly configured. GCP and Azure, being second movers, managed to better restrict their metadata endpoints from the get-go.
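To make the token flow concrete, here is a sketch that only builds the two requests that version 2 of the metadata service expects, without sending them. The helper names are hypothetical, and the 21600-second TTL is just an example value. An SSRF like the get_image() example can only choose the URL of a GET request, so it can neither issue the PUT nor attach the token header.

```python
import urllib.request

IMDS = "http://169.254.169.254"

def build_token_request(ttl_seconds=21600):
    """Step 1: a PUT carrying a TTL header asks for a session token."""
    return urllib.request.Request(
        f"{IMDS}/latest/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )

def build_metadata_request(path, token):
    """Step 2: every metadata read must present the token in a header."""
    return urllib.request.Request(
        f"{IMDS}/latest/meta-data/{path}",
        headers={"X-aws-ec2-metadata-token": token},
    )

token_request = build_token_request()
print(token_request.get_method(), token_request.full_url)
```

On an instance, the token returned by the first request would be passed to build_metadata_request(); off an instance the endpoint is not reachable, so the sketch stops at constructing the requests.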

Request Proxy

The approach to SSRF mitigation that we most like is something of a hybrid between an application- and a network-layer control. It is to proxy egress traffic in a system through a single point which applies security controls.

This concept is implemented by Stripe’s Smokescreen, which is an open source CONNECT proxy. It is deployed on your network, where it proxies HTTP traffic between the application and the destination URL and has rules about which hosts it is allowed to talk to on behalf of the app server. By default Smokescreen validates that traffic isn’t bound for an internal IP, but developers can define allowed or blocked domains in Smokescreen on a per-application basis. After Smokescreen has been fully configured, any other direct HTTP requests made from the application can be blocked in the firewall to force use of the proxy.
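On the application side, using such a proxy usually comes down to client configuration. The sketch below uses Python’s standard urllib. The proxy hostname smokescreen.internal and port 4750 are assumptions for illustration, so substitute whatever address your deployment listens on. For https:// URLs urllib tunnels through the proxy with CONNECT; whether plain http:// forwarding is accepted depends on how the proxy is configured.

```python
import urllib.request

# Hypothetical address of the egress proxy; the port is an assumption.
EGRESS_PROXY = "http://smokescreen.internal:4750"

def build_egress_opener(proxy_url=EGRESS_PROXY):
    """Return an opener that routes http and https requests via the proxy."""
    proxy = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(proxy)

def fetch_via_proxy(url, timeout=10):
    """Fetch a user-supplied URL; the proxy decides whether it is allowed."""
    with build_egress_opener().open(url, timeout=timeout) as response:
        return response.read()
```

The application no longer makes the allow or deny decision itself, and a firewall rule that permits egress only from the proxy catches any code path that forgets to use the opener.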

The real advantage of this approach is that it deals with SSRF at a level that makes sense, since as we’ve explored, SSRF is both an application- and a network-layer concern. We gain the configurability of the application-layer mitigation, with the exhaustiveness of the network-layer mitigation. We no longer have to rely on every potentially SSRF-prone HTTP request being made in an application to use the right library, and no longer have to count on every internal service having the right firewall and authentication controls applied to it.

Further, there are other advantages to centralizing the location where outbound requests are made. It enables better logging and monitoring, and means that egress comes from a small list of IPs which third parties can allowlist, which simplifies other infrastructure concerns.

Downsides are that this approach can only work if the application supports HTTP CONNECT (although this is usually the case). Smokescreen and its policies must also be built and maintained, so it needs an amount of organizational buy-in to be deployed.

Conclusion

Overall, we looked at a number of SSRF mitigations that don’t work and a number that do. Of those, for more mature organizations we most like the request proxying approach, and zero-trust security architectures that require authentication for all internal services. Failing that, e.g. for companies that don’t yet have the resources to set up detailed network controls or maintain their own proxy infrastructure, an anti-SSRF application library applied on any endpoints that accept attacker-controlled input is a good initial mitigation. For defense-in-depth, multiple techniques could be combined; however, this would mean that you end up with multiple identical allowlists/blocklists that have to be kept in sync, so it is not necessarily recommended.
