Loading...

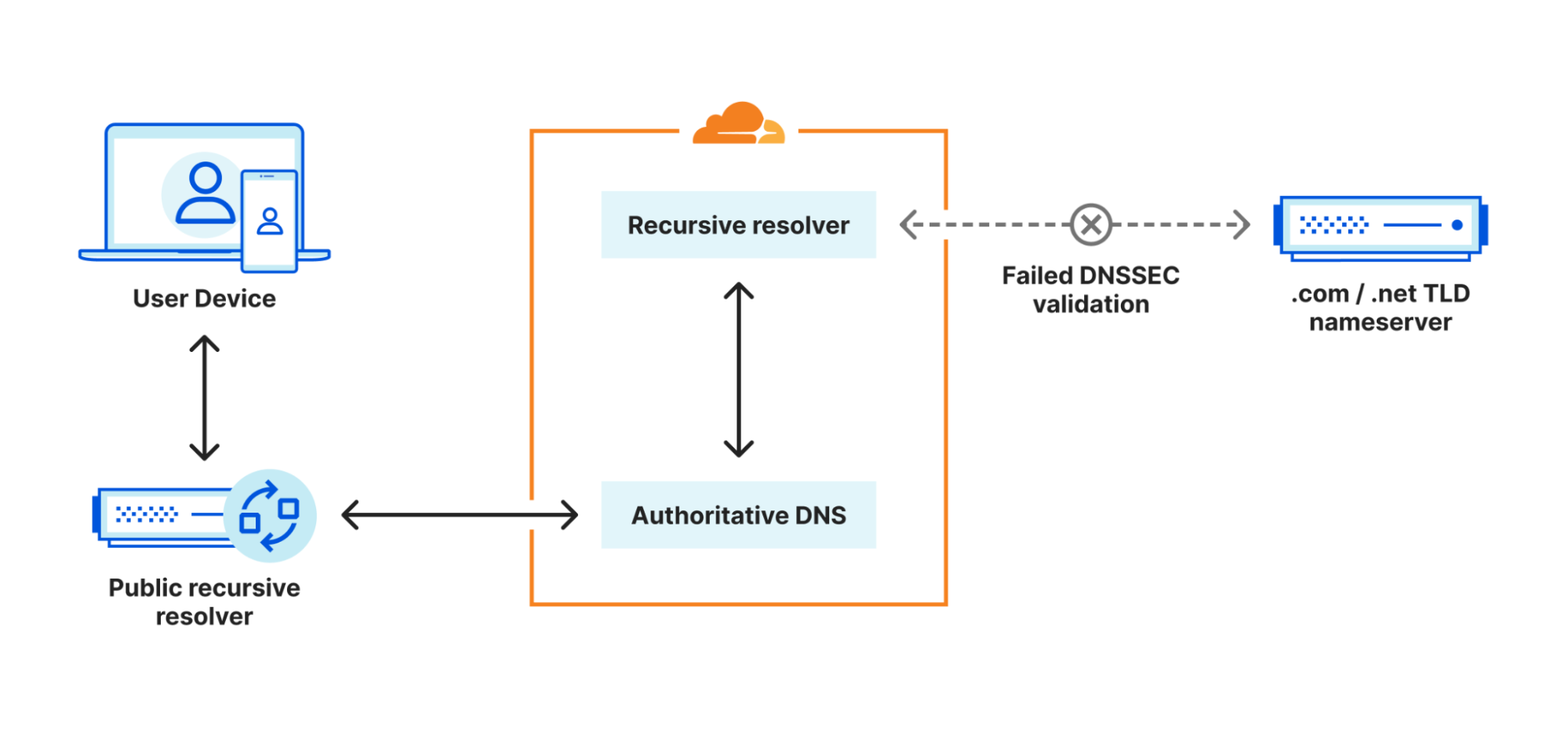

On Sunday, July 9, 2023, early morning UTC time, we observed a high number of DNS resolution failures — up to 7% of all DNS queries across the Asia Pacific region — caused by invalid DNSSEC signatures from Verisign .com and .net Top Level Domain (TLD) nameservers. This resulted in connection errors for visitors of Internet properties on Cloudflare in the region.

The local instances of Verisign’s nameservers started to respond with expired DNSSEC signatures in the Asia Pacific region. In order to remediate the impact, we have rerouted upstream DNS queries towards Verisign to locations on the US west coast which are returning valid signatures.

We have already reached out to Verisign to get more information on the root cause. Until their issues have been resolved, we will keep our DNS traffic to .com and .net TLD nameservers rerouted, which might cause slightly increased latency for the first visitor to domains under .com and .net in the region.

Background

In order to proxy a domain’s traffic through Cloudflare’s network, there are two components involved with respect to the Domain Name System (DNS) from the perspective of a Cloudflare data center: external DNS resolution, and upstream or origin DNS resolution.

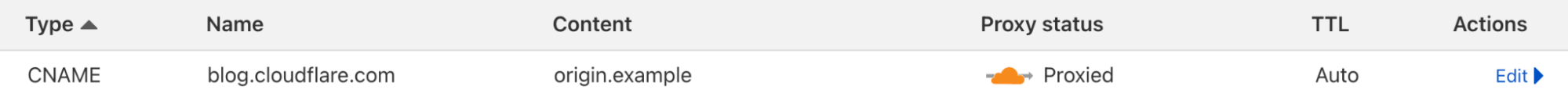

To understand this, let’s look at the domain name blog.cloudflare.com — which is proxied through Cloudflare’s network — and let’s assume it is configured to use origin.example as the origin server:

Here, the external DNS resolution is the part where DNS queries to blog.cloudflare.com sent by public resolvers like 1.1.1.1 or 8.8.8.8 on behalf of a visitor return a set of Cloudflare Anycast IP addresses. This ensures that the visitor’s browser knows where to send an HTTPS request to load the website. Under the hood your browser performs a DNS query that looks something like this (the trailing dot indicates the DNS root zone):

$ dig blog.cloudflare.com. +short

104.18.28.7

104.18.29.7

(Your computer doesn’t actually use the dig command internally; we’ve used it to illustrate the process) Then when the next closest Cloudflare data center receives the HTTPS request for blog.cloudflare.com, it needs to fetch the content from the origin server (assuming it is not cached).

There are two ways Cloudflare can reach the origin server. If the DNS settings in Cloudflare contain IP addresses then we can connect directly to the origin. In some cases, our customers use a CNAME which means Cloudflare has to perform another DNS query to get the IP addresses associated with the CNAME. In the example above, blog.cloudflare.com has a CNAME record instructing us to look at origin.example for IP addresses. During the incident, only customers with CNAME records like this going to .com and .net domain names may have been affected.

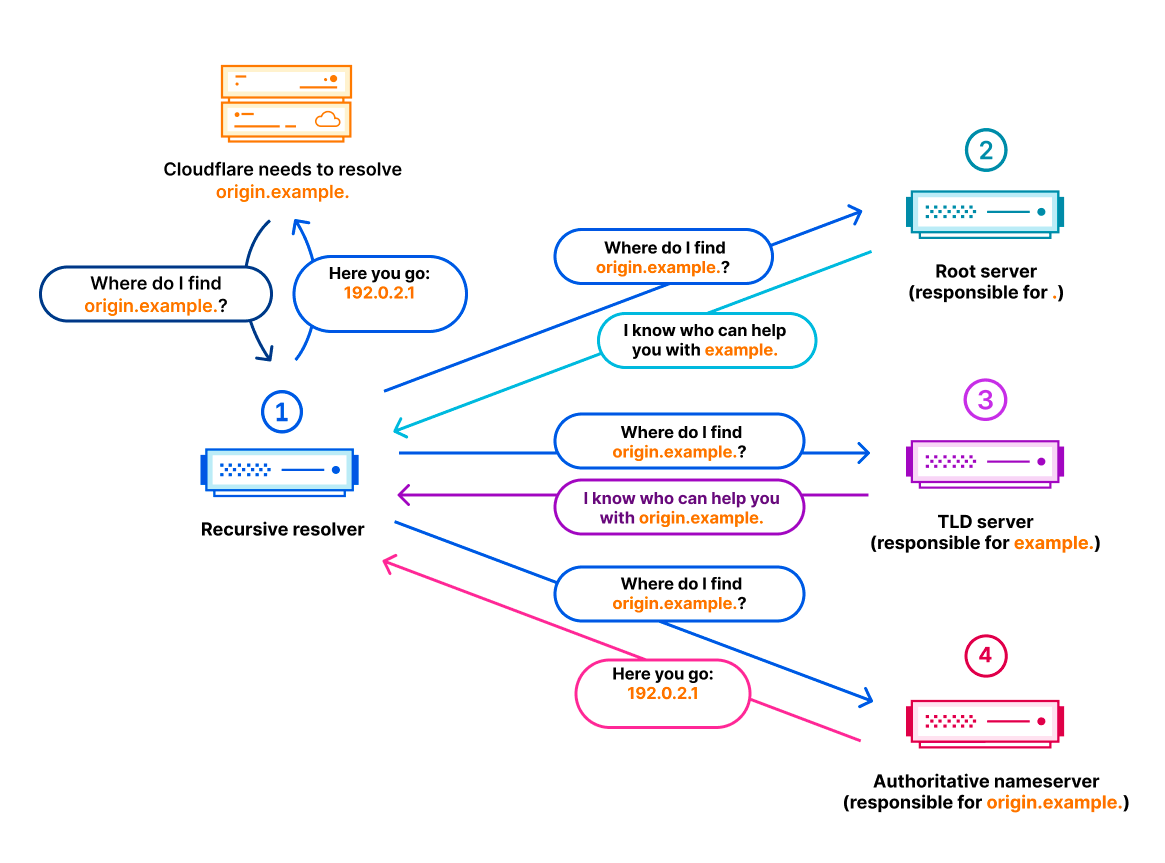

The domain origin.example needs to be resolved by Cloudflare as part of the upstream or origin DNS resolution. This time, the Cloudflare data center needs to perform an outbound DNS query that looks like this:

$ dig origin.example. +short

192.0.2.1

DNS is a hierarchical protocol, so the recursive DNS resolver, which usually handles DNS resolution for whoever wants to resolve a domain name, needs to talk to several involved nameservers until it finally gets an answer from the authoritative nameservers of the domain (assuming no DNS responses are cached). This is the same process during the external DNS resolution and the origin DNS resolution. Here is an example for the origin DNS resolution:

Inherently, DNS is a public system that was initially published without any means to validate the integrity of the DNS traffic. So in order to prevent someone from spoofing DNS responses, DNS Security Extensions (DNSSEC) were introduced as a means to authenticate that DNS responses really come from who they claim to come from. This is achieved by generating cryptographic signatures alongside existing DNS records like A, AAAA, MX, CNAME, etc. By validating a DNS record’s associated signature, it is possible to verify that a requested DNS record really comes from its authoritative nameserver and wasn’t altered en-route. If a signature cannot be validated successfully, recursive resolvers usually return an error indicating the invalid signature. This is exactly what happened on Sunday.

Incident timeline and impact

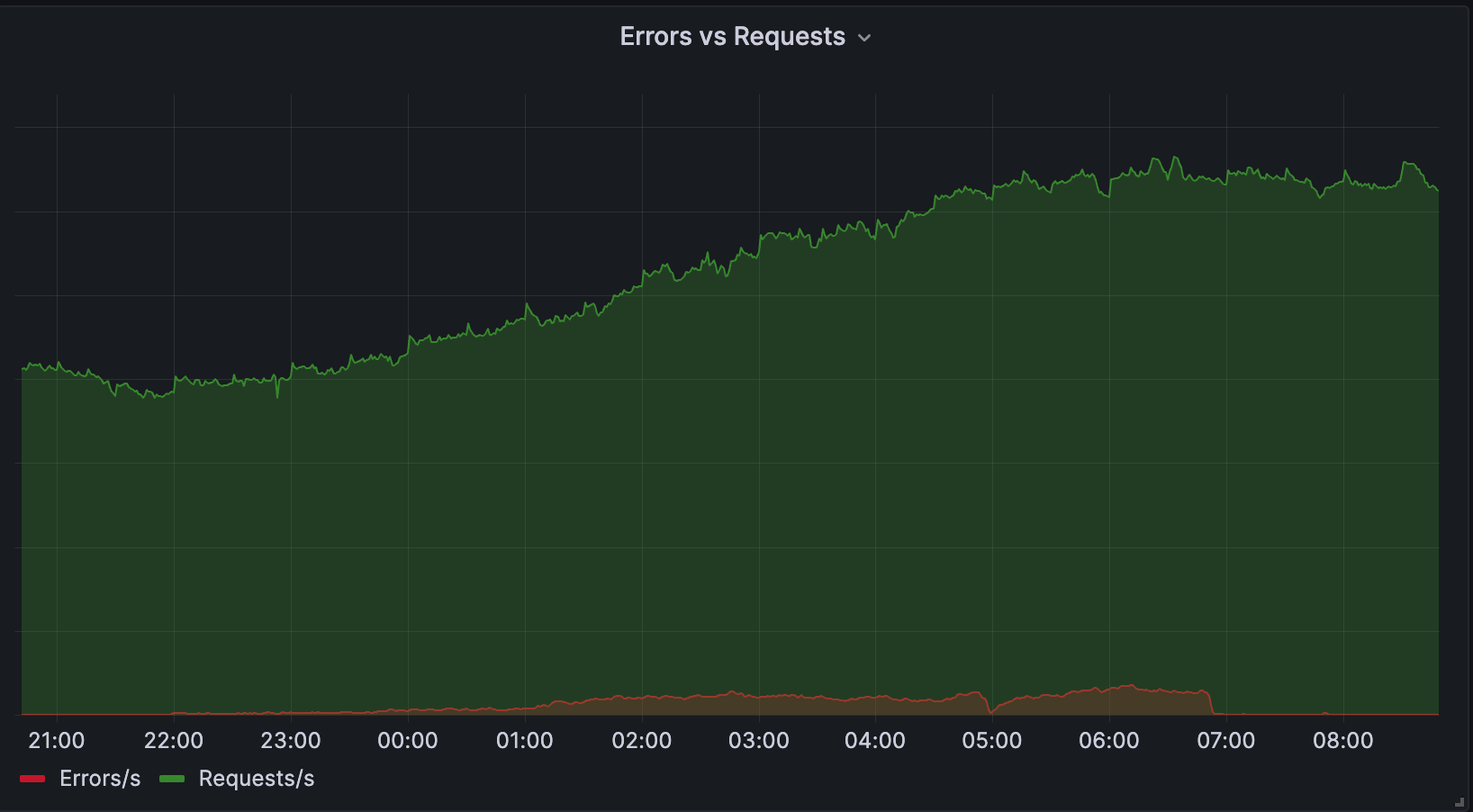

On Saturday, July 8, 2023, at 21:10 UTC our logs show the first instances of DNSSEC validation errors that happened during upstream DNS resolution from multiple Cloudflare data centers in the Asia Pacific region. It appeared DNS responses from the TLD nameservers of .com and .net of the type NSEC3 (a DNSSEC mechanism to prove non-existing DNS records) did not include signatures. About an hour later at 22:16 UTC, the first internal alerts went off (since it required issues to be consistent over a certain period of time), but error rates were still at a level at around 0.5% of all upstream DNS queries.

After several hours, the error rate had increased to a point in which ~13% of our upstream DNS queries in affected locations were failing. This percentage continued to fluctuate over the duration of the incident between the ranges of 10-15% of upstream DNS queries, and roughly 5-7% of all DNS queries (external & upstream resolution) to affected Cloudflare data centers in the Asia Pacific region.

Initially it appeared as though only a single upstream nameserver was having issues with DNS resolution, however upon further investigation it was discovered that the issue was more widespread. Ultimately, we were able to verify that the Verisign nameservers for .com and .net were returning expired DNSSEC signatures on a portion of DNS queries in the Asia Pacific region. Based on our tests, other nameserver locations were correctly returning valid DNSSEC signatures.

In response, we rerouted our DNS traffic to the .com and .net TLD nameserver IP addresses to Verisign’s US west locations. After this change was propagated, the issue very quickly subsided and origin resolution error rates returned to normal levels.

All times are in UTC:

2023-07-08 21:10 First instances of DNSSEC validation errors appear in our logs for origin DNS resolution.

2023-07-08 22:16 First internal alerts for Asia Pacific data centers go off indicating origin DNS resolution error on our test domain. Very few errors for other domains at this point.

2023-07-09 02:58 Error rates have increased substantially since the first instance. An incident is declared.

2023-07-09 03:28 DNSSEC validation issues seem to be isolated to a single upstream provider. It is not abnormal that a single upstream has issues that propagate back to us, and in this case our logs were predominantly showing errors from domains that resolve to this specific upstream.

2023-07-09 04:52 We realize that DNSSEC validation issues are more widespread and affect multiple .com and .net domains. Validation issues continue to be isolated to the Asia Pacific region only, and appear to be intermittent.

2023-07-09 05:15 DNS queries via popular recursive resolvers like 8.8.8.8 and 1.1.1.1 do not return invalid DNSSEC signatures at this point. DNS queries using the local stub resolver continue to return DNSSEC errors.

2023-07-09 06:24 Responses from .com and .net Verisign nameservers in Singapore contain expired DNSSEC signatures, but responses from Verisign TLD nameservers in other locations are fine.

2023-07-09 06:41 We contact Verisign and notify them about expired DNSSEC signatures.

2023-07-09 06:50 In order to remediate the impact, we reroute DNS traffic via IPv4 for the .com and .net Verisign nameserver IPs to US west IPs instead. This immediately leads to a substantial drop in the error rate.

2023-07-09 07:06 We also reroute DNS traffic via IPv6 for the .com and .net Verisign nameserver IPs to US west IPs. This leads to the error rate going down to zero.

2023-07-10 09:23 The rerouting is still in place, but the underlying issue with expired signatures in the Asia Pacific region has still not been resolved.

2023-07-10 18:23 Versign gets back to us confirming that they “were serving stale data” at their local site and have resolved the issues.

Technical description of the error and how it happened

As mentioned in the introduction, the underlying cause for this incident was expired DNSSEC signatures for .net and .com zones. Expired DNSSEC signatures will cause a DNS response to be interpreted as invalid. There are two main scenarios in which this error was observed by a user:

- CNAME flattening for external DNS resolution. This is when our authoritative nameservers attempt to return the IP address(es) that a CNAME record target resolves to rather than the CNAME record itself.

- CNAME target lookup for origin DNS resolution. This is most commonly used when an HTTPS request is sent to a Cloudflare anycast IP address, and we need to determine what IP address to forward the request to. See How Cloudflare works for more details.

In both cases, behind the scenes the DNS query goes through an in-house recursive DNS resolver in order to lookup what a hostname resolves to. The purpose of this resolver is to cache queries, optimize future queries and provide DNSSEC validation. If the query from this resolver fails for whatever reason, our authoritative DNS system will not be able to perform the two scenarios outlined above.

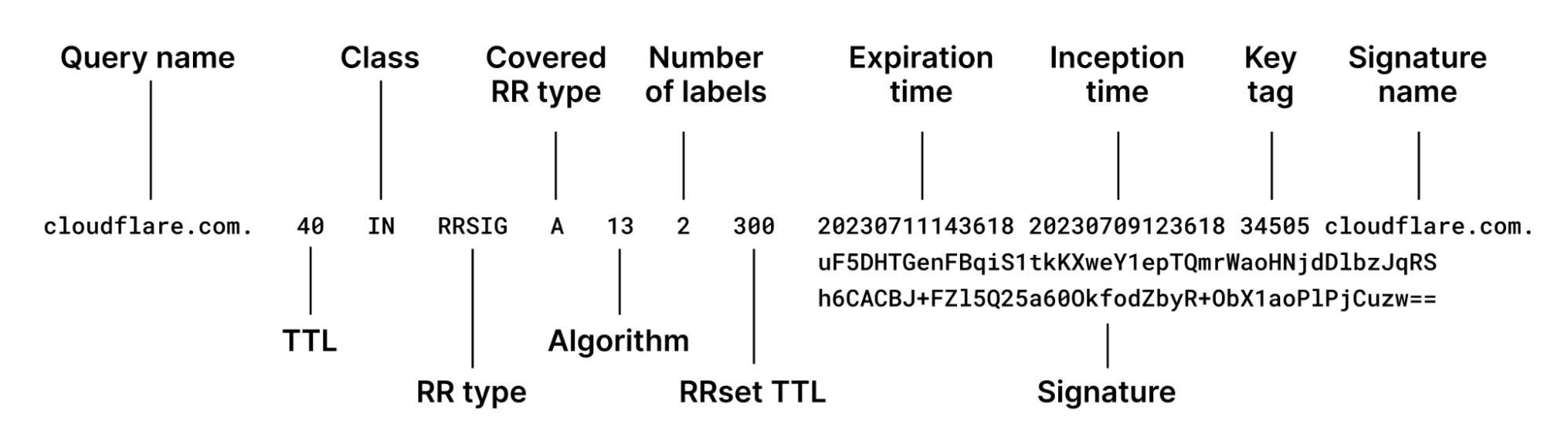

During the incident, the recursive resolver was failing to validate the DNSSEC signatures in DNS responses for domains ending in .com and .net. These signatures are sent in the form of an RRSIG record alongside the other DNS records they cover. Together they form a Resource Record set (RRset). Each RRSIG has the corresponding fields:

As you can see, the main part of the payload is associated with the signature and its corresponding metadata. Each recursive resolver is responsible for not only checking the signature but also the expiration time of the signature. It is important to obey the expiration time in order to avoid returning responses for RRsets that have been signed by old keys, which could have potentially been compromised by that time.

During this incident, Verisign, the authoritative operator for the .com and .net TLD zones, was occasionally returning expired signatures in its DNS responses in the Asia Pacific region. As a result our recursive resolver was not able to validate the corresponding RRset. Ultimately this meant that a percentage of DNS queries would return SERVFAIL as response code to our authoritative nameserver. This in turn caused connection issues for users trying to connect to a domain on Cloudflare, because we weren't able to resolve the upstream target of affected domain names and thus didn’t know where to send proxied HTTPS requests to upstream servers.

Remediation and follow-up steps

Once we had identified the root cause we started to look at different ways to remedy the issue. We came to the conclusion that the fastest way to work around this very regionalized issue was to stop using the local route to Verisign's nameservers. This means that, at the time of writing this, our outgoing DNS traffic towards Verisign's nameservers in the Asia Pacific region now traverses the Pacific and ends up at the US west coast, rather than being served by closer nameservers. Due to the nature of DNS and the important role of DNS caching, this has less impact than you might initially expect. Frequently looked up names will be cached after the first request, and this only needs to happen once per data center, as we share and pool the local DNS recursor caches to maximize their efficiency.

Ideally, we would have been able to fix the issue right away as it potentially affected others in the region too, not just our customers. We will therefore work diligently to improve our incident communications channels with other providers in order to ensure that the DNS ecosystem remains robust against issues such as this. Being able to quickly get hold of other providers that can take action is vital when urgent issues like these arise.

Conclusion

This incident once again shows how impactful DNS failures are and how crucial this technology is for the Internet. We will investigate how we can improve our systems to detect and resolve issues like this more efficiently and quickly if they occur again in the future.

While Cloudflare was not the cause of this issue, and we are certain that we were not the only ones affected by this, we are still sorry for the disruption to our customers and to all the users who were unable to access Internet properties during this incident.

We protect entire corporate networks, help customers build Internet-scale applications efficiently, accelerate any website or Internet application, ward off DDoS attacks, keep hackers at bay, and can help you on your journey to Zero Trust.

Visit 1.1.1.1 from any device to get started with our free app that makes your Internet faster and safer.

To learn more about our mission to help build a better Internet, start here. If you're looking for a new career direction, check out our open positions.